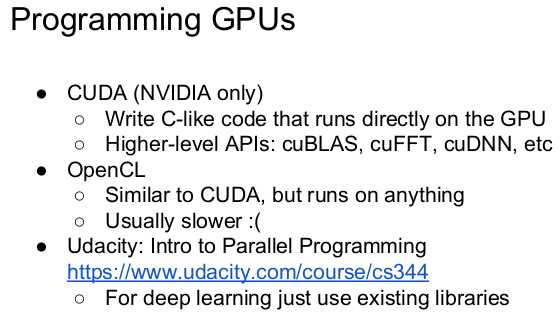

CPU and GPU

If you aren’t careful, training can bottleneck on reading data and transferring it to the GPU! Solutions:

- Read all data into RAM

- Use an SSD instead of an HDD

- Use multiple CPU threads to prefetch data
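The first and third tips can be sketched in PyTorch as follows. This assumes a small synthetic dataset; the random `images`/`labels` tensors and the `make_loader`/`run_epoch` helpers are hypothetical. The whole dataset lives in RAM, and `torch.utils.data.DataLoader` prefetches batches in background workers. Note that PyTorch uses worker processes rather than threads here. When CUDA is available, `pin_memory` combined with `non_blocking=True` lets host-to-GPU copies overlap with computation.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical synthetic dataset held entirely in RAM: 1000 RGB 32x32 "images".
images = torch.randn(1000, 3, 32, 32)
labels = torch.randint(0, 10, (1000,))
dataset = TensorDataset(images, labels)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def make_loader(num_workers=2, batch_size=64):
    """Hypothetical helper: a DataLoader whose workers prefetch batches."""
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,               # background processes load upcoming batches
        pin_memory=torch.cuda.is_available(),  # page-locked buffers speed up host-to-GPU copies
    )


def run_epoch(loader):
    """Iterate once over the data, moving each batch to the device."""
    seen = 0
    for x, y in loader:
        # The copy overlaps with compute only when the source tensor is pinned.
        x = x.to(device, non_blocking=True)
        y = y.to(device, non_blocking=True)
        seen += x.shape[0]
    return seen
```

Calling `run_epoch(make_loader())` iterates with two background workers. On platforms that start workers with "spawn" (Windows, macOS), put that call under an `if __name__ == "__main__":` guard.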

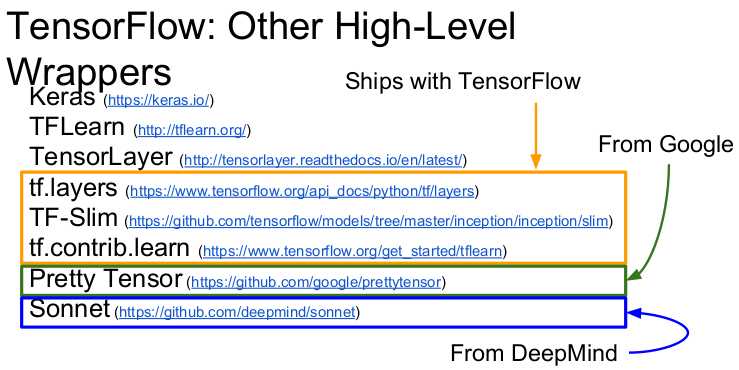

The point of deep learning frameworks

- Easily build big computational graphs

- Easily compute gradients in computational graphs

- Run it all efficiently on the GPU (wrapping cuDNN, cuBLAS, etc.)
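Here is a small PyTorch sketch that touches all three points. The tensors and the expression `c = sum(x * y + z)` are made up for the example. The forward pass builds the graph, `backward()` computes the gradients, and the same code runs on the GPU whenever one is available.

```python
import torch

# Use the GPU if there is one; the rest of the code is identical either way.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Leaf tensors of a tiny graph; requires_grad=True asks autograd to track them.
x = torch.tensor([1.0, 2.0, 3.0], device=device, requires_grad=True)
y = torch.tensor([4.0, 5.0, 6.0], device=device, requires_grad=True)
z = torch.tensor([7.0, 8.0, 9.0], device=device, requires_grad=True)

# Forward pass: each operation adds a node to the computational graph.
a = x * y      # elementwise product
b = a + z      # elementwise sum
c = b.sum()    # scalar output

# Backward pass: autograd fills in dc/dx, dc/dy and dc/dz.
c.backward()

print(c.item())  # 56.0
print(x.grad)    # dc/dx = y
print(y.grad)    # dc/dy = x
print(z.grad)    # dc/dz = 1 for every element
```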

DL frameworks

PyTorch rocks!

TensorFlow

First define the graph, and then run it many times.

TOO UGLY!!! It introduces a lot of concepts that wouldn't be necessary if it were designed well, and the API is not Pythonic at all!

Use TensorBoard to make life easier!

PyTorch

PyTorch rocks!

Tensor: an ndarray that can run computations on the GPU
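Here is a minimal sketch of the Tensor-as-GPU-ndarray idea, assuming NumPy is installed. `torch.from_numpy` wraps an existing array, `.to(device)` moves it to the GPU when one exists, and `.cpu().numpy()` converts the result back.

```python
import numpy as np
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = np.arange(6, dtype=np.float32).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]
t = torch.from_numpy(a)             # CPU tensor that shares memory with the NumPy array
t_dev = t.to(device)                # copy to the GPU (returns t itself on CPU)
row_sums = (t_dev * 2).sum(dim=1)   # NumPy-like ops, executed on `device`
back = row_sums.cpu().numpy()       # bring the result back as an ndarray: 6 and 24
```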

Variable: a node in a computational graph that supports autograd.

- x.data: the underlying Tensor

- x.grad: a Variable holding the gradients, with the same shape as x.data

- x.grad.data: the Tensor of gradients

Nice and clean!
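This describes the older API. Since PyTorch 0.4, `Variable` has been merged into `Tensor`:

- Any tensor created with `requires_grad=True` is a graph node.
- `x.grad` is itself a Tensor.
- `torch.autograd.Variable(...)` survives only as a deprecated wrapper that returns a plain Tensor.

The sketch below shows both spellings and checks the attributes listed above. The shapes and the loss are arbitrary.

```python
import torch
from torch.autograd import Variable  # deprecated shim; it now returns a plain Tensor

# Old style: wrap a Tensor in a Variable to make it a graph node.
x = Variable(torch.randn(4, 3), requires_grad=True)
# Modern style (PyTorch >= 0.4): the Tensor itself tracks gradients.
w = torch.randn(3, 2, requires_grad=True)

loss = (x @ w).pow(2).sum()  # arbitrary scalar loss
loss.backward()

print(type(x))            # a torch.Tensor, not a separate Variable type
print(x.data.shape)       # torch.Size([4, 3]): raw values, detached from the graph
print(x.grad.shape)       # torch.Size([4, 3]): same shape as x.data
print(type(x.grad.data))  # also a torch.Tensor
```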

torch.nn package

- predefined layers

- build models by composing layers
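Here is a minimal sketch of building a model from predefined `torch.nn` layers. The layer sizes and the random data are placeholders, not anything the library prescribes. `nn.Sequential` chains the layers, and each layer registers its weights so that they appear in `model.parameters()`.

```python
import torch
import torch.nn as nn

# Placeholder sizes: batch size, input dim, hidden dim, output dim.
N, D_in, H, D_out = 64, 1000, 100, 10

# A two-layer network assembled from predefined layers.
model = nn.Sequential(
    nn.Linear(D_in, H),
    nn.ReLU(),
    nn.Linear(H, D_out),
)
loss_fn = nn.MSELoss()  # predefined loss function

x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

y_pred = model(x)          # forward pass through every layer
loss = loss_fn(y_pred, y)
loss.backward()            # gradients land in each parameter's .grad

for name, p in model.named_parameters():
    print(name, tuple(p.shape))
# 0.weight (100, 1000), 0.bias (100,), 2.weight (10, 100), 2.bias (10,)
```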

torch.optim

Updates parameters automatically using a variety of optimization algorithms.
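Here is a minimal training-loop sketch with `torch.optim`, using a hypothetical noiseless linear-regression problem. The target weights, learning rate, and step count are illustrative choices. The loop only computes gradients, and `optimizer.step()` applies whatever update rule the optimizer implements.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy problem: recover y = 2*x0 - x1 + 0.5*x2 from noiseless samples.
x = torch.randn(256, 3)
true_w = torch.tensor([[2.0], [-1.0], [0.5]])
y = x @ true_w

model = nn.Linear(3, 1)
loss_fn = nn.MSELoss()
# The optimizer owns the update rule; switching to torch.optim.Adam is a one-line change.
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(200):
    optimizer.zero_grad()        # clear gradients left over from the previous step
    loss = loss_fn(model(x), y)
    loss.backward()              # compute fresh gradients
    optimizer.step()             # update every parameter the optimizer was given
    losses.append(loss.item())

print(losses[0], losses[-1])
print(model.weight.data)  # should approach [[2.0, -1.0, 0.5]]
```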

torchvision

Pretrained models.

Visdom

Visualization.