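Author: 一只虫

Please credit the source when reposting.

Tmall's mobile (m.) endpoint sidesteps the PC site's mechanism of invalidating the shared cookies after 3 pages. The only drawback is that the mobile endpoint returns less data: about 19 items per page.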
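At roughly 19 items per page (the figure quoted above, even though the script's request URL asks for `page_size=20`), the number of pages to request for a target item count is a ceiling division. A minimal sketch; `pages_needed` is a hypothetical helper, and the per-page figure is an observation that may change:

```python
def pages_needed(target_items: int, per_page: int = 19) -> int:
    """Pages to request to collect at least target_items (hypothetical helper).

    Assumes every page returns per_page items, which the endpoint does not guarantee.
    """
    if target_items <= 0:
        return 0
    # Ceiling division without floats: -(-a // b) == ceil(a / b) for positive b
    return -(-target_items // per_page)


print(pages_needed(100))  # 100 / 19 is about 5.26, so 6 pages
```

In the GUI that would mean a start page of 1 and an end page of 6.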

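```python
import requests
from pymysql import Connect                              # MySQL driver: pip install pymysql
from tkinter import Tk, Label, Entry, Button, StringVar  # GUI widgets used below
from urllib import parse                                 # percent-encoding for the search keyword
```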

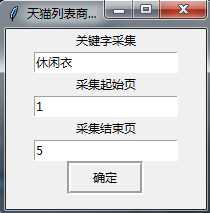

```python
# Build the window: a keyword field plus the start and end pages to scrape
window = Tk()
window.title("Tmall listing scraper")
window.geometry('200x180')

Label(window, text='Keyword').pack()
name = StringVar()
Entry(window, textvariable=name).pack()

Label(window, text='Start page').pack()
to_page = StringVar()
Entry(window, textvariable=to_page).pack()

Label(window, text='End page').pack()
w_page = StringVar()
Entry(window, textvariable=w_page).pack()
```
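`main()` converts the two page fields with `int()` directly, so a blank field raises `ValueError` inside the button callback, and a start page larger than the end page silently produces no URLs. A minimal validation sketch; `read_page_range` is a hypothetical helper, not part of the original script:

```python
def read_page_range(start_text: str, end_text: str) -> range:
    """Turn the two Entry strings into an inclusive page range (hypothetical helper)."""
    try:
        start, end = int(start_text.strip()), int(end_text.strip())
    except ValueError:
        raise ValueError("start and end pages must be whole numbers") from None
    if start < 1 or end < start:
        raise ValueError(f"invalid page range: {start}..{end}")
    # Inclusive of the end page, like range(to_page, w_page + 1) in the spider
    return range(start, end + 1)


for start, end in [("1", "3"), ("", "3"), ("5", "2")]:
    try:
        print(start, end, "->", list(read_page_range(start, end)))
    except ValueError as err:
        print(start, end, "->", err)
```

Inside `main()`, a `ValueError` from this helper could be reported with `tkinter.messagebox.showerror` instead of escaping from the callback.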

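```python
class Tmall(object):

    def __init__(self, name, to_page, w_page):
        # Mobile search endpoint; page_no is filled in per page, and the keyword is
        # percent-encoded so Chinese characters, spaces or '&' can't break the query string
        self.url = 'https://list.tmall.com/m/search_items.htm?page_size=20&page_no={}&q=' + parse.quote(name)

        self.headers = {
            'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            # 'br' left out: requests only decodes Brotli when a Brotli package is installed
            'accept-encoding': 'gzip, deflate',
            'accept-language': 'zh-CN,zh;q=0.9',
            'cache-control': 'max-age=0',
            'upgrade-insecure-requests': '1',
            'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                          'Chrome/64.0.3282.119 Safari/537.36',
        }

        # The author's proxy; replace it with your own or remove it. requests picks a proxy
        # by URL scheme, so an "http" entry alone is not used for the https:// search URL.
        self.proxies = {
            "http": "http://113.207.44.70:3128",
        }

        # Build the list of page URLs, start page to end page inclusive
        self.url_list = [self.url.format(i) for i in range(to_page, w_page + 1)]
```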
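The search keyword ends up in the query string, so it has to be percent-encoded. `urllib.parse.urlencode` builds the whole query at once and encodes every parameter. A sketch assuming the same endpoint and parameters as the script; `build_page_urls` is a hypothetical helper:

```python
from urllib.parse import urlencode, urlsplit, parse_qs

BASE = "https://list.tmall.com/m/search_items.htm"  # endpoint taken from the script


def build_page_urls(keyword: str, start: int, end: int, page_size: int = 20) -> list:
    """Return one search URL per page, start..end inclusive (hypothetical helper)."""
    return [
        BASE + "?" + urlencode({"page_size": page_size, "page_no": page, "q": keyword})
        for page in range(start, end + 1)
    ]


urls = build_page_urls("手机 壳", 1, 2)
for u in urls:
    print(u)
# Decoding the query string gives the original keyword back
print(parse_qs(urlsplit(urls[0]).query)["q"])
```

`urlencode` uses `quote_plus` by default, so the space becomes `+`, the same way HTML forms encode query strings.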

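```python
    # Request one page and return the decoded JSON (None on failure)
    def get_data(self, url):
        try:
            response = requests.get(url, headers=self.headers, proxies=self.proxies, timeout=10)
            response.raise_for_status()  # treat HTTP 4xx/5xx responses as errors too
            return response.json()
        except Exception as e:
            # Write the error to the log file
            with open('log.txt', 'a', encoding='utf-8') as f:
                f.write(repr(e) + '\n')
            return None
```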
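Each method repeats the same `open('log.txt', 'a', ...)` block and records only `repr(e)`, which loses the traceback and the URL that failed. The standard `logging` module can write the same file with timestamps and full tracebacks. A sketch; `make_logger` and the logger name `tmall_spider` are hypothetical:

```python
import logging
import os
import tempfile


def make_logger(path: str = "log.txt") -> logging.Logger:
    """Return a logger that appends errors to `path` in UTF-8 (hypothetical helper)."""
    logger = logging.getLogger("tmall_spider")  # hypothetical logger name
    logger.setLevel(logging.ERROR)
    if not logger.handlers:  # configure once, even if called repeatedly
        handler = logging.FileHandler(path, mode="a", encoding="utf-8")
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger


# Demo: log a failure to a throwaway file and read it back
demo_path = os.path.join(tempfile.mkdtemp(), "log.txt")
log = make_logger(demo_path)
try:
    1 / 0
except ZeroDivisionError:
    # exception() logs at ERROR level and appends the current traceback
    log.exception("request failed: %s", "https://example.invalid/page")

with open(demo_path, encoding="utf-8") as f:
    logged = f.read()
print(logged)
```

In `get_data`, the `except` branch would then shrink to `logger.exception("request failed: %s", url)`.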

```python
    # Parse the response into a list of row dicts
    def parse_data(self, data):
        data_list = []
        if not data:  # the request failed or returned nothing
            return data_list
        try:
            for i in data["item"]:
                item = {
                    'name': 'Tmall',
                    'img_link': i.get('img') or "N/A",               # image link
                    'goods_link': i.get('url') or "N/A",             # product link
                    'price': i.get('price') or "N/A",                # price
                    'title': i.get('title') or "N/A",                # product title
                    'company': i.get('shop_name') or "N/A",          # shop
                    'deal_count': i.get('sold') or "N/A",            # monthly sales
                    'comment_count': i.get('comment_num') or "N/A",  # number of reviews
                }
                data_list.append(item)
        except Exception as e:
            # Write the error to the log file
            with open('log.txt', 'a', encoding='utf-8') as f:
                f.write(repr(e) + '\n')
        return data_list
```
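A missing or empty JSON field should not abort the whole page. `dict.get` combined with `or` handles both cases in one expression. A sketch; the JSON keys are the ones the script reads (assumptions about the endpoint's response), and `normalize_item` plus the `"N/A"` placeholder are hypothetical:

```python
# Output column -> key in one search result, as read by the script (assumed JSON layout)
FIELDS = {
    "img_link": "img",
    "goods_link": "url",
    "price": "price",
    "title": "title",
    "company": "shop_name",
    "deal_count": "sold",
    "comment_count": "comment_num",
}


def normalize_item(raw: dict, placeholder: str = "N/A") -> dict:
    """Map one raw search result to the row layout saved to MySQL (hypothetical helper)."""
    row = {"name": "Tmall"}
    for out_key, src_key in FIELDS.items():
        # .get() avoids KeyError; `or` also replaces "", None and 0 with the placeholder
        row[out_key] = raw.get(src_key) or placeholder
    return row


print(normalize_item({"img": "", "url": "https://example.com/item", "price": "9.90"}))
```

Note that `or` also turns a legitimate `0` (say, zero monthly sales) into the placeholder; compare with `is None` if that distinction matters.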

```python
    # Save the rows to MySQL
    def save_data(self, data_list):
        if not data_list:
            return
        conn = None
        try:
            conn = Connect(host="127.0.0.1", user="root", password="root", database="data_list",
                           port=3306, charset="utf8")
            cs1 = conn.cursor()
            # Parameterized INSERT: pymysql escapes each value, so quotes in a title can't break the SQL
            sql = ('insert into data(name,goods_link,img_link,title,price,company,deal_count,comment_count) '
                   'values(%s,%s,%s,%s,%s,%s,%s,%s)')
            rows = [(d['name'], d['goods_link'], d['img_link'], d['title'], d['price'],
                     d['company'], d['deal_count'], d['comment_count']) for d in data_list]
            # executemany returns the number of affected rows
            count = cs1.executemany(sql, rows)
            print(count)
            # Close the Cursor object
            cs1.close()
            # Commit the INSERTs
            conn.commit()
        except Exception as e:
            # Write the error to the log file
            with open('log.txt', 'a', encoding='utf-8') as f:
                f.write(repr(e) + '\n')
        finally:
            # Close the Connection object, if it was opened
            if conn is not None:
                conn.close()
```
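Formatting values straight into the SQL text breaks as soon as a value contains a quote, and it is open to SQL injection; passing them as parameters lets the driver escape them. To stay runnable without a MySQL server, this sketch swaps in the standard-library `sqlite3` module: sqlite3 uses `?` placeholders where pymysql uses `%s`, but the `executemany` pattern is the same. The all-`TEXT` schema for the `data` table is an assumption, since the post never shows it, and `save_rows` is hypothetical:

```python
import sqlite3

COLUMNS = ("name", "goods_link", "img_link", "title", "price",
           "company", "deal_count", "comment_count")


def save_rows(conn: sqlite3.Connection, rows: list) -> int:
    """Insert row dicts with one parameterized statement; returns rows inserted (hypothetical helper)."""
    sql = "INSERT INTO data ({}) VALUES ({})".format(
        ", ".join(COLUMNS), ", ".join("?" for _ in COLUMNS))  # sqlite3 placeholder is "?"
    params = [tuple(row[c] for c in COLUMNS) for row in rows]
    with conn:  # commits on success, rolls back on an exception
        cur = conn.executemany(sql, params)
    return cur.rowcount


# Demo with an in-memory database and an assumed schema (every column TEXT)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE data ({})".format(", ".join(c + " TEXT" for c in COLUMNS)))
rows = [{c: "N/A" for c in COLUMNS}]
rows[0]["title"] = 'Phone case "2-pack"'  # a double quote would break "%s"-formatted SQL
inserted = save_rows(conn, rows)
stored_title = conn.execute("SELECT title FROM data").fetchone()[0]
print(inserted, stored_title)
```

With pymysql the same shape is `cursor.executemany(sql, params)` with `%s` placeholders.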

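```python
    def run(self):
        # URLs and headers were built in __init__; request each page in turn
        for url in self.url_list:
            data = self.get_data(url)
            # Parse the response and extract the fields
            data_list = self.parse_data(data)
            # Save the data
            self.save_data(data_list)
```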
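The loop fires requests back to back and hands whatever `get_data` returned (possibly `None`) straight on. A variant that skips empty pages and pauses between requests is gentler on the site. In this sketch `crawl` is hypothetical, `fetch`, `parse` and `save` are injected callables standing in for the three methods, and the 1-second default delay is an arbitrary assumption, not a known rate limit:

```python
import time
from typing import Callable, Iterable, Optional


def crawl(urls: Iterable[str],
          fetch: Callable[[str], Optional[dict]],
          parse: Callable[[dict], list],
          save: Callable[[list], None],
          delay: float = 1.0) -> list:
    """Fetch, parse and save each page; return the URLs that yielded no rows (hypothetical)."""
    failed = []
    for n, url in enumerate(urls):
        if n:  # pause before every request except the first
            time.sleep(delay)
        data = fetch(url)
        rows = parse(data) if data else []
        if not rows:
            failed.append(url)  # nothing usable on this page; move on
            continue
        save(rows)
    return failed


# Demo with fakes: "p2" simulates a failed request
saved = []
pages = {"p1": {"item": [{"title": "a"}]}, "p2": None}
failed = crawl(["p1", "p2"], fetch=pages.get, parse=lambda d: d["item"],
               save=saved.extend, delay=0)
print(failed, saved)
```

Inside the class this would be called as `crawl(self.url_list, self.get_data, self.parse_data, self.save_data)`.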

```python
def main():
    n = str(name.get())
    t = int(to_page.get())
    w = int(w_page.get())
    spider = Tmall(n, t, w)
    # Runs inside the Tk event loop, so the window stays frozen until scraping finishes
    spider.run()


if __name__ == '__main__':
    Button(window, text="OK", relief='groove', width=9, height=1, bd=4, command=main).pack()
    window.mainloop()
```