Tags: iframe nal unsigned waitkey efficient display lock SM max

-----------------------------------------------------------------------

A progress update:

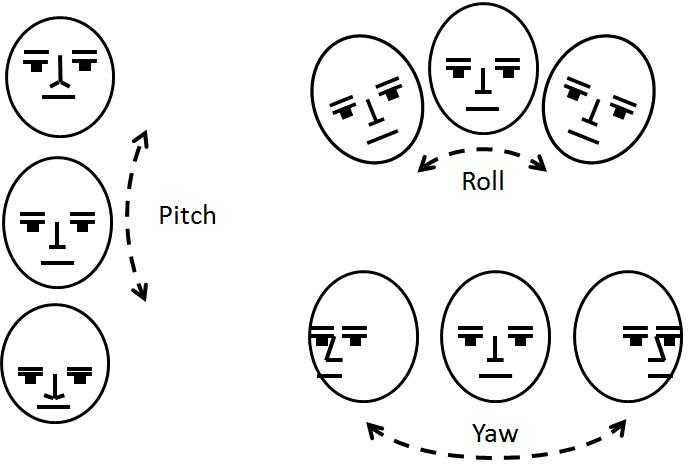

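Since finding the pitch/roll/yaw correspondence below, I now have a rough idea of how the whole thing works.

Then I came across the following post:

Original post: https://www.amobbs.com/thread-5504669-1-1.html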

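Converting a quaternion to Euler angles: a problem with the pitch range?

I use the statements below to convert a quaternion [w x y z] into the Euler angles roll, pitch and yaw: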

```cpp
Roll  = atan2(2 * (w * z + x * y), 1 - 2 * (z * z + x * x));
Pitch = asin(2 * (w * x - y * z));
Yaw   = atan2(2 * (w * y + z * x), 1 - 2 * (x * x + y * y));
```

Roll and Yaw, computed with atan2, range from -180 to 180 degrees. Pitch is computed with asin, so it only ranges from -90 to 90 degrees.

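The biggest problem appears when Pitch goes past 90 degrees. The reported value climbs through 88, 89, 90 and then falls back through 89, 88, 87. At that 90-degree boundary, roll and yaw both jump by 180 degrees. For example, if roll and yaw were both 0, they suddenly become 180 at the pitch = 90 boundary.

How can this be solved?

Thanks!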
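The poster's formulas are not wrong. What they describe is a property of Euler angles. In this convention, (roll + 180°, 180° - pitch, yaw + 180°) describes the same rotation as (roll, pitch, yaw). Because asin only returns values in [-90°, 90°], the conversion always picks the triple whose pitch lies in that range. Once the head pitches past 90°, that triple has roll and yaw flipped by 180°. Exactly at ±90°, roll and yaw cannot be separated at all (gimbal lock).

One common workaround is to compute both equivalent triples and keep the one closest to the previous frame. The sketch below does this and assumes the angles change only a little between frames. The helper names (`Wrap180`, `Alternate`, `ContinuousEuler`) are illustrative and not from the original post. Alternatively, you can keep working with the quaternion itself and convert it only for display.

```cpp
#include <cmath>

struct Euler { double roll, pitch, yaw; };  // all angles in degrees

const double kPi = 3.14159265358979323846;
const double kRad2Deg = 180.0 / kPi;

// Wrap an angle in degrees into the range (-180, 180].
double Wrap180(double a) {
    a = std::fmod(a, 360.0);
    if (a <= -180.0) a += 360.0;
    if (a > 180.0) a -= 360.0;
    return a;
}

// The forum post's formulas for a unit quaternion [w x y z]. The asin argument
// is clamped to [-1, 1] so rounding noise near +/-90 degrees cannot yield NaN.
Euler QuatToEuler(double w, double x, double y, double z) {
    double s = 2 * (w * x - y * z);
    if (s > 1.0) s = 1.0;
    if (s < -1.0) s = -1.0;
    Euler e;
    e.roll  = std::atan2(2 * (w * z + x * y), 1 - 2 * (z * z + x * x)) * kRad2Deg;
    e.pitch = std::asin(s) * kRad2Deg;
    e.yaw   = std::atan2(2 * (w * y + z * x), 1 - 2 * (x * x + y * y)) * kRad2Deg;
    return e;
}

// The other Euler triple that describes the same rotation.
Euler Alternate(const Euler& e) {
    Euler a = { Wrap180(e.roll + 180.0), Wrap180(180.0 - e.pitch), Wrap180(e.yaw + 180.0) };
    return a;
}

// Sum of per-axis angular differences, each wrapped into (-180, 180].
double Distance(const Euler& a, const Euler& b) {
    return std::fabs(Wrap180(a.roll - b.roll)) +
           std::fabs(Wrap180(a.pitch - b.pitch)) +
           std::fabs(Wrap180(a.yaw - b.yaw));
}

// Of the two equivalent triples, return the one closest to the previous frame,
// so pitch can continue past 90 degrees instead of roll and yaw jumping by 180.
Euler ContinuousEuler(const Euler& raw, const Euler& previous) {
    Euler alt = Alternate(raw);
    return Distance(alt, previous) < Distance(raw, previous) ? alt : raw;
}
```

Here is an example for a rotation of 100° about the x axis. `QuatToEuler` returns roll ≈ 180°, pitch ≈ 80°, yaw ≈ 180°. If the previous frame was (0°, 80°, 0°), `ContinuousEuler` returns roll ≈ 0°, pitch ≈ 100°, yaw ≈ 0° instead.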

----------------------------------------------------------------------

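The post above roughly shows how the angles are computed. Once you have the angles, you essentially know which way the face is pointing. That was a big step forward!

After getting the face tracking result, we get the facial key vertices and can also read the face orientation directly (the Get3DPose function). The face orientation is defined as follows: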

| Angle | Value |
|---|---|
| Pitch angle | 0 = neutral; -90 = looking down towards the floor; +90 = looking up towards the ceiling. Face Tracking tracks when the user's head pitch is less than 20 degrees, but works best when less than 10 degrees. |
| Roll angle | 0 = neutral; -90 = horizontal parallel with the right shoulder of the subject; +90 = horizontal parallel with the left shoulder of the subject. Face Tracking tracks when the user's head roll is less than 90 degrees, but works best when less than 45 degrees. |
| Yaw angle | 0 = neutral; -90 = turned towards the right shoulder of the subject; +90 = turned towards the left shoulder of the subject. Face Tracking tracks when the user's head yaw is less than 45 degrees, but works best when less than 30 degrees. |
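You can turn the table into a quick check on each tracked frame, for example to ignore poses the tracker handles poorly. This is a minimal sketch. It assumes that `Get3DPose` fills `rotationXYZ` as {pitch (about X), yaw (about Y), roll (about Z)} in degrees; verify this against the Face Tracking SDK documentation. It also reads the table's limits as absolute values. `HeadPose`, `FromRotationXYZ`, `ClassifyPose` and the enum are hypothetical names.

```cpp
#include <cmath>

// Head pose in degrees. ASSUMPTION: IFTResult::Get3DPose fills rotationXYZ as
// {rotation about X (pitch), about Y (yaw), about Z (roll)}; check the SDK docs.
struct HeadPose { float pitch, yaw, roll; };

HeadPose FromRotationXYZ(const float rotationXYZ[3]) {
    HeadPose p = { rotationXYZ[0], rotationXYZ[1], rotationXYZ[2] };
    return p;
}

enum TrackingQuality { kOutOfRange, kTrackable, kBest };

// Limits copied from the table: pitch < 20 (best < 10), roll < 90 (best < 45),
// yaw < 45 (best < 30), all compared as absolute values in degrees.
TrackingQuality ClassifyPose(const HeadPose& p) {
    const float ap = std::fabs(p.pitch), ar = std::fabs(p.roll), ay = std::fabs(p.yaw);
    if (ap < 10.f && ar < 45.f && ay < 30.f) return kBest;
    if (ap < 20.f && ar < 90.f && ay < 45.f) return kTrackable;
    return kOutOfRange;
}
```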

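If you are doing simple head-rotation gesture recognition, you will need these angles. If you work on facial expressions, facial motion or 3D modeling, you will also need them to apply affine transformations to the face. Rotation in the Roll direction can clearly be removed (I do this in my own face recognition). If you use OpenCV for the affine rotation, you need to know the rotation-matrix formula. Once the face image is rotated, every vertex coordinate must be rotated with it. If you crop out the face region, you must also subtract the crop's x and y offsets from the corresponding face vertex coordinates. You also need to handle edge cases. What happens when the face leaves the frame on the left, or out of the top or bottom? Will the program crash? In those situations the face data must not be used.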
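The rotate-then-shift bookkeeping described above can be sketched without OpenCV types. The code rotates each point about a chosen center with the textbook 2D rotation (see the formula in the comment), then subtracts the crop origin. It also provides a bounds check for discarding frames whose vertices leave the image.

Some caveats apply:

- `Pt2`, `RotateAndCrop` and `AllInside` are hypothetical names.
- Which sign of the roll angle undoes the head tilt depends on your coordinate conventions, so test with a tilted head.
- `cv::getRotationMatrix2D` treats positive angles as counter-clockwise on screen (origin at the top-left corner). That is the opposite sign to this formula in y-down image coordinates.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt2 { double x, y; };

// Rotate each point about `center` by angleDeg using
//   x' = cx + cos(t)*dx - sin(t)*dy,  y' = cy + sin(t)*dx + cos(t)*dy
// (dx, dy = offset from center), then subtract the crop origin so the result is
// relative to the cropped face image. In y-down image coordinates a positive
// angle turns points clockwise on screen.
std::vector<Pt2> RotateAndCrop(const std::vector<Pt2>& pts, Pt2 center,
                               double angleDeg, Pt2 cropOrigin) {
    const double t = angleDeg * 3.14159265358979323846 / 180.0;
    const double c = std::cos(t), s = std::sin(t);
    std::vector<Pt2> out;
    out.reserve(pts.size());
    for (std::size_t i = 0; i < pts.size(); ++i) {
        const double dx = pts[i].x - center.x, dy = pts[i].y - center.y;
        Pt2 q = { center.x + c * dx - s * dy - cropOrigin.x,
                  center.y + s * dx + c * dy - cropOrigin.y };
        out.push_back(q);
    }
    return out;
}

// False if any point falls outside a width x height image: treat that frame's
// face data as unusable (e.g. the face is leaving the picture).
bool AllInside(const std::vector<Pt2>& pts, double width, double height) {
    for (std::size_t i = 0; i < pts.size(); ++i) {
        if (pts[i].x < 0 || pts[i].y < 0 || pts[i].x >= width || pts[i].y >= height)
            return false;
    }
    return true;
}
```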

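Beyond that there are concepts such as Animation Units, whose results can also be obtained directly through function calls. I have not studied this part. If you work on facial expressions or face animation, you will need to look into it in depth.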

==================================================================================================

Original post: http://brightguo.com/kinect-face-tracking/

=================================================================================================

```cpp
// win32_KinectFaceTracking.cpp : Defines the entry point for the console application.
/****************************************************
Purpose:      a simple Kinect Face Tracking example
Environment:  Visual Studio 2010, Win32 console program
              OpenCV 2.4.4 (display)
              Kinect SDK v1.6 (driver)
              Windows 7
Author:       箫鸣
Date:         2013-3-11 ~ 2013-3-12
Contact:      weibo.com/guoming0000  guoming0000@sina.com  www.ilovecode.cn
Note:         companion blog post: Kinect Face Tracking SDK [Kinect face tracking]
******************************************************/
#include "stdafx.h"
#include <windows.h>
#include <opencv2/opencv.hpp>
#include <mmsystem.h>
#include <assert.h>
#include <malloc.h>      // _malloca / _freea
//#include <strsafe.h>
#include "NuiApi.h"
using namespace cv;
using namespace std;
//----------------------------------------------------
#define _WINDOWS
#include <FaceTrackLib.h>

// Draw the face model as a wireframe mesh plus the face rectangle.
// pColorImg is the image buffer to draw into, pModel the 3D face model.
HRESULT VisualizeFaceModel(IFTImage* pColorImg, IFTModel* pModel, FT_CAMERA_CONFIG const* pCameraConfig,
                           FLOAT const* pSUCoef, FLOAT zoomFactor, POINT viewOffset,
                           IFTResult* pAAMRlt, UINT32 color);

//--- Image sizes and related parameters ----------------------
#define COLOR_WIDTH     640
#define COLOR_HIGHT     480
#define DEPTH_WIDTH     320
#define DEPTH_HIGHT     240
#define SKELETON_WIDTH  640
#define SKELETON_HIGHT  480
#define CHANNEL         3
BYTE DepthBuf[DEPTH_WIDTH*DEPTH_HIGHT*CHANNEL];

//--- Variables used for face tracking -------------------------
IFTImage* pColorFrame, *pColorDisplay; // color image; pColorDisplay is a copy of it used for drawing
IFTImage* pDepthFrame;                 // depth image
FT_VECTOR3D m_hint3D[2];               // [0] = shoulder center, [1] = head

//--- Kernel events and handles --------------------------------
HANDLE m_hNextColorFrameEvent;
HANDLE m_hNextDepthFrameEvent;
HANDLE m_hNextSkeletonEvent;
HANDLE m_pColorStreamHandle; // color stream handle, used to read frames
HANDLE m_pDepthStreamHandle;
HANDLE m_hEvNuiProcessStop;  // event signaled to stop processing

// Get a color frame and display it
int DrawColor(HANDLE h)
{
    const NUI_IMAGE_FRAME * pImageFrame = NULL;
    HRESULT hr = NuiImageStreamGetNextFrame( h, 0, &pImageFrame );
    if( FAILED( hr ) )
    {
        cout<<"Get Color Image Frame Failed"<<endl;
        return -1;
    }
    INuiFrameTexture * pTexture = pImageFrame->pFrameTexture;
    NUI_LOCKED_RECT LockedRect;
    // Lock the frame into LockedRect: Pitch is the number of bytes per row,
    // pBits is the address of the first byte
    pTexture->LockRect( 0, &LockedRect, NULL, 0 );
    if( LockedRect.Pitch != 0 ) // the frame contains data
    {
        BYTE * pBuffer = (BYTE*) LockedRect.pBits;
        // Copy min(GetBufferSize(), BufferLen()) bytes into the writable buffer returned by
        // pColorFrame->GetBuffer() (PBYTE is a pointer to unsigned bytes)
        memcpy(pColorFrame->GetBuffer(), PBYTE(LockedRect.pBits),
               min(pColorFrame->GetBufferSize(), UINT(pTexture->BufferLen())));
        // Show the color video with OpenCV
        Mat temp(COLOR_HIGHT,COLOR_WIDTH,CV_8UC4,pBuffer);
        imshow("ColorVideo",temp);
        int c = waitKey(1);
        // ESC, q or Q in any video window exits the whole program
        if( c == 27 || c == 'q' || c == 'Q' )
        {
            SetEvent(m_hEvNuiProcessStop);
        }
    }
    pTexture->UnlockRect(0);
    NuiImageStreamReleaseFrame( h, pImageFrame );
    return 0;
}

// Get a depth frame and display it
int DrawDepth(HANDLE h)
{
    const NUI_IMAGE_FRAME * pImageFrame = NULL;
    HRESULT hr = NuiImageStreamGetNextFrame( h, 0, &pImageFrame );
    if( FAILED( hr ) )
    {
        cout<<"Get Depth Image Frame Failed"<<endl;
        return -1;
    }
    INuiFrameTexture * pTexture = pImageFrame->pFrameTexture;
    NUI_LOCKED_RECT LockedRect;
    pTexture->LockRect( 0, &LockedRect, NULL, 0 );
    if( LockedRect.Pitch != 0 )
    {
        // Each depth value is 2 bytes, unlike the color data, so read it as USHORT, not BYTE
        USHORT * pBuff = (USHORT*) LockedRect.pBits;
        memcpy(pDepthFrame->GetBuffer(), PBYTE(LockedRect.pBits),
               min(pDepthFrame->GetBufferSize(), UINT(pTexture->BufferLen())));
        for(int i=0;i<DEPTH_WIDTH*DEPTH_HIGHT;i++)
        {
            BYTE index = pBuff[i]&0x07;               // player index (low 3 bits)
            USHORT realDepth = (pBuff[i]&0xFFF8)>>3;  // depth value (high 13 bits)
            // Map depth to brightness (near = bright); the BYTE cast wraps for depths >= 0x0fff
            BYTE scale = 255 - (BYTE)(256*realDepth/0x0fff);
            DepthBuf[CHANNEL*i] = DepthBuf[CHANNEL*i+1] = DepthBuf[CHANNEL*i+2] = 0;
            switch( index )
            {
            case 0: // no player: gray
                DepthBuf[CHANNEL*i]=scale/2;
                DepthBuf[CHANNEL*i+1]=scale/2;
                DepthBuf[CHANNEL*i+2]=scale/2;
                break;
            case 1: DepthBuf[CHANNEL*i]=scale; break;
            case 2: DepthBuf[CHANNEL*i+1]=scale; break;
            case 3: DepthBuf[CHANNEL*i+2]=scale; break;
            case 4: DepthBuf[CHANNEL*i]=scale; DepthBuf[CHANNEL*i+1]=scale; break;
            case 5: DepthBuf[CHANNEL*i]=scale; DepthBuf[CHANNEL*i+2]=scale; break;
            case 6: DepthBuf[CHANNEL*i+1]=scale; DepthBuf[CHANNEL*i+2]=scale; break;
            case 7:
                DepthBuf[CHANNEL*i]=255-scale/2;
                DepthBuf[CHANNEL*i+1]=255-scale/2;
                DepthBuf[CHANNEL*i+2]=255-scale/2;
                break;
            }
        }
        Mat temp(DEPTH_HIGHT,DEPTH_WIDTH,CV_8UC3,DepthBuf);
        imshow("DepthVideo",temp);
        int c = waitKey(1); // ESC, q or Q exits
        if( c == 27 || c == 'q' || c == 'Q' )
        {
            SetEvent(m_hEvNuiProcessStop);
        }
    }
    pTexture->UnlockRect(0);
    NuiImageStreamReleaseFrame( h, pImageFrame );
    return 0;
}

// Get skeleton data and display it
int DrawSkeleton()
{
    NUI_SKELETON_FRAME SkeletonFrame; // skeleton frame
    cv::Point pt[20];
    Mat skeletonMat=Mat(SKELETON_HIGHT,SKELETON_WIDTH,CV_8UC3,Scalar(0,0,0));
    // Get the skeleton frame directly from the Kinect
    HRESULT hr = NuiSkeletonGetNextFrame( 0, &SkeletonFrame );
    if( FAILED( hr ) )
    {
        cout<<"Get Skeleton Image Frame Failed"<<endl;
        return -1;
    }
    bool bFoundSkeleton = false;
    for( int i = 0 ; i < NUI_SKELETON_COUNT ; i++ )
    {
        if( SkeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED )
        {
            bFoundSkeleton = true;
        }
    }
    // A skeleton is being tracked
    if( bFoundSkeleton )
    {
        NuiTransformSmooth(&SkeletonFrame,NULL);
        for( int i = 0 ; i < NUI_SKELETON_COUNT ; i++ )
        {
            if( SkeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED )
            {
                for (int j = 0; j < NUI_SKELETON_POSITION_COUNT; j++)
                {
                    float fx,fy;
                    // Project the joint into 320x240 depth-image coordinates, then scale to the window
                    NuiTransformSkeletonToDepthImage( SkeletonFrame.SkeletonData[i].SkeletonPositions[j], &fx, &fy );
                    pt[j].x = (int) ( fx * SKELETON_WIDTH )/320;
                    pt[j].y = (int) ( fy * SKELETON_HIGHT )/240;
                    circle(skeletonMat,pt[j],5,CV_RGB(255,0,0));
                }
                // cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_SHOULDER_CENTER],pt[NUI_SKELETON_POSITION_SPINE],CV_RGB(0,255,0));
                // cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_SPINE],pt[NUI_SKELETON_POSITION_HIP_CENTER],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_HEAD],pt[NUI_SKELETON_POSITION_SHOULDER_CENTER],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_HAND_RIGHT],pt[NUI_SKELETON_POSITION_WRIST_RIGHT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_WRIST_RIGHT],pt[NUI_SKELETON_POSITION_ELBOW_RIGHT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_ELBOW_RIGHT],pt[NUI_SKELETON_POSITION_SHOULDER_RIGHT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_SHOULDER_RIGHT],pt[NUI_SKELETON_POSITION_SHOULDER_CENTER],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_SHOULDER_CENTER],pt[NUI_SKELETON_POSITION_SHOULDER_LEFT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_SHOULDER_LEFT],pt[NUI_SKELETON_POSITION_ELBOW_LEFT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_ELBOW_LEFT],pt[NUI_SKELETON_POSITION_WRIST_LEFT],CV_RGB(0,255,0));
                cv::line(skeletonMat,pt[NUI_SKELETON_POSITION_WRIST_LEFT],pt[NUI_SKELETON_POSITION_HAND_LEFT],CV_RGB(0,255,0));
                // Hints for the face tracker: [0] = shoulder center, [1] = head
                m_hint3D[0].x=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_SHOULDER_CENTER].x;
                m_hint3D[0].y=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_SHOULDER_CENTER].y;
                m_hint3D[0].z=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_SHOULDER_CENTER].z;
                m_hint3D[1].x=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_HEAD].x;
                m_hint3D[1].y=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_HEAD].y;
                m_hint3D[1].z=SkeletonFrame.SkeletonData[i].SkeletonPositions[NUI_SKELETON_POSITION_HEAD].z;
            }
        }
    }
    imshow("SkeletonVideo",skeletonMat);
    int c = waitKey(1); // ESC, q or Q exits
    if( c == 27 || c == 'q' || c == 'Q' )
    {
        SetEvent(m_hEvNuiProcessStop);
    }
    return 0;
}

DWORD WINAPI KinectDataThread(LPVOID pParam) // thread function
{
    HANDLE hEvents[4] = {m_hEvNuiProcessStop,m_hNextColorFrameEvent,
                         m_hNextDepthFrameEvent,m_hNextSkeletonEvent}; // kernel events
    while(1)
    {
        int nEventIdx;
        nEventIdx=WaitForMultipleObjects(sizeof(hEvents)/sizeof(hEvents[0]),
                                         hEvents,FALSE,100);
        if (WAIT_OBJECT_0 == WaitForSingleObject(m_hEvNuiProcessStop, 0))
        {
            break;
        }
        // Process signaled events
        if (WAIT_OBJECT_0 == WaitForSingleObject(m_hNextColorFrameEvent, 0))
        {
            DrawColor(m_pColorStreamHandle); // get the color image and display it
        }
        if (WAIT_OBJECT_0 == WaitForSingleObject(m_hNextDepthFrameEvent, 0))
        {
            DrawDepth(m_pDepthStreamHandle);
        }
        if (WAIT_OBJECT_0 == WaitForSingleObject(m_hNextSkeletonEvent, 0))
        {
            DrawSkeleton();
        }
        // Dispatching with switch(nEventIdx) instead of polling each event
        // (case 1: DrawColor, case 2: DrawDepth, case 3: DrawSkeleton)
        // may cause problems when the program is closed.
    }
    CloseHandle(m_hEvNuiProcessStop);
    m_hEvNuiProcessStop = NULL;
    CloseHandle( m_hNextSkeletonEvent );
    CloseHandle( m_hNextDepthFrameEvent );
    CloseHandle( m_hNextColorFrameEvent );
    return 0;
}

int main()
{
    m_hint3D[0].x=0; // shoulder center (3D vector)
    m_hint3D[0].y=0;
    m_hint3D[0].z=0;
    m_hint3D[1].x=0; // head center (3D vector)
    m_hint3D[1].y=0;
    m_hint3D[1].z=0;

    // NUI must be initialized before Microsoft's API can be used to drive the Kinect
    HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX
                               |NUI_INITIALIZE_FLAG_USES_COLOR
                               |NUI_INITIALIZE_FLAG_USES_SKELETON);
    if( hr != S_OK ) // check whether the call above succeeded
    {
        cout<<"NuiInitialize failed"<<endl;
        return hr;
    }

    // 1. Color: create a Windows event (manual reset, initially non-signaled) and open the color
    //    stream, keeping its handle in m_pColorStreamHandle for later reads
    m_hNextColorFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );
    m_pColorStreamHandle = NULL;
    hr = NuiImageStreamOpen(NUI_IMAGE_TYPE_COLOR,NUI_IMAGE_RESOLUTION_640x480, 0, 2,
                            m_hNextColorFrameEvent, &m_pColorStreamHandle);
    if( FAILED( hr ) )
    {
        cout<<"Could not open image stream video"<<endl;
        return hr;
    }

    // 2. Depth: open the depth stream, keeping its handle in m_pDepthStreamHandle
    m_hNextDepthFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );
    m_pDepthStreamHandle = NULL;
    hr = NuiImageStreamOpen( NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX, NUI_IMAGE_RESOLUTION_320x240, 0, 2,
                             m_hNextDepthFrameEvent, &m_pDepthStreamHandle);
    if( FAILED( hr ) )
    {
        cout<<"Could not open depth stream video"<<endl;
        return hr;
    }

    // 3. Skeleton: create the skeleton event and enable skeleton tracking
    m_hNextSkeletonEvent = CreateEvent( NULL, TRUE, FALSE, NULL );
    hr = NuiSkeletonTrackingEnable( m_hNextSkeletonEvent,
                                    NUI_SKELETON_TRACKING_FLAG_ENABLE_IN_NEAR_RANGE
                                    |NUI_SKELETON_TRACKING_FLAG_ENABLE_SEATED_SUPPORT);
    if( FAILED( hr ) )
    {
        cout<<"Could not open skeleton stream video"<<endl;
        return hr;
    }

    // 4. Event used to stop processing
    m_hEvNuiProcessStop = CreateEvent(NULL,TRUE,FALSE,NULL);

    // Create and allocate the face tracking images BEFORE starting the worker thread,
    // because DrawColor/DrawDepth copy every new frame into them
    pColorFrame = FTCreateImage();   // color image (IFTImage*)
    pDepthFrame = FTCreateImage();   // depth image
    pColorDisplay = FTCreateImage(); // copy of the color image used for drawing
    if(!pColorFrame || !pDepthFrame || !pColorDisplay)
    {
        return -12; // Handle errors
    }
    hr = pColorDisplay->Allocate(COLOR_WIDTH, COLOR_HIGHT, FTIMAGEFORMAT_UINT8_B8G8R8X8);
    if (FAILED(hr)) return hr;
    hr = pColorFrame->Allocate(COLOR_WIDTH, COLOR_HIGHT, FTIMAGEFORMAT_UINT8_B8G8R8X8);
    if (FAILED(hr)) return hr;
    hr = pDepthFrame->Allocate(DEPTH_WIDTH, DEPTH_HIGHT, FTIMAGEFORMAT_UINT16_D13P3);
    if (FAILED(hr)) return hr;

    // 5. Start a thread running KinectDataThread, which reads the color and depth images,
    //    tracks the skeleton and displays everything
    HANDLE m_hProcesss = CreateThread(NULL, 0, KinectDataThread, 0, 0, 0);

    //---------- 1. Create a face tracker instance ----------
    IFTFaceTracker* pFT = FTCreateFaceTracker();
    if(!pFT)
    {
        return -1; // Handle errors
    }
    // FT_CAMERA_CONFIG describes the color or depth sensor (width, height, focal length).
    // These values must be filled in, and filled in correctly!
    FT_CAMERA_CONFIG myCameraConfig = {COLOR_WIDTH, COLOR_HIGHT, NUI_CAMERA_COLOR_NOMINAL_FOCAL_LENGTH_IN_PIXELS};
    FT_CAMERA_CONFIG depthConfig = {DEPTH_WIDTH, DEPTH_HIGHT, NUI_CAMERA_DEPTH_NOMINAL_FOCAL_LENGTH_IN_PIXELS};
    // Initialize IFTFaceTracker, the main face tracking interface
    hr = pFT->Initialize(&myCameraConfig, &depthConfig, NULL, NULL);
    if( FAILED(hr) )
    {
        return -2; // Handle errors
    }
    //---------- 2. Create an instance that receives the 3D tracking result ----------
    IFTResult* pFTResult = NULL;
    hr = pFT->CreateFTResult(&pFTResult);
    if(FAILED(hr))
    {
        return -11;
    }

    // Fill in FT_SENSOR_DATA, which holds all input data needed for face tracking
    FT_SENSOR_DATA sensorData;
    POINT point;
    sensorData.ZoomFactor = 1.0f;
    point.x = 0;
    point.y = 0;
    sensorData.ViewOffset = point; // POINT(0,0)
    bool isTracked = false;        // whether a face is currently tracked
    // Track the face
    while ( 1 )
    {
        sensorData.pVideoFrame = pColorFrame; // color image
        sensorData.pDepthFrame = pDepthFrame; // depth image
        if(!isTracked)
        {
            // Start tracking: slow and CPU-heavy. Inputs are the color image, the depth image
            // and the 3D head and shoulder coordinates
            hr = pFT->StartTracking(&sensorData, NULL, m_hint3D, pFTResult);
            isTracked = SUCCEEDED(hr) && SUCCEEDED(pFTResult->GetStatus());
        }
        else
        {
            // Continue tracking: fast, because it starts from an already roughly known
            // face model. pFTResult receives the tracking result
            hr = pFT->ContinueTracking(&sensorData, m_hint3D, pFTResult);
            if(FAILED(hr) || FAILED (pFTResult->GetStatus()))
            {
                isTracked = false; // lost the face
            }
        }
        int bStop;
        if(isTracked)
        {
            IFTModel* ftModel; // 3D face model
            HRESULT hr = pFT->GetFaceModel(&ftModel);
            FLOAT* pSU = NULL;
            UINT numSU;
            BOOL suConverged;
            pFT->GetShapeUnits(NULL, &pSU, &numSU, &suConverged);
            POINT viewOffset = {0, 0};
            // Copy pColorFrame into pColorDisplay and draw on the copy
            pColorFrame->CopyTo(pColorDisplay,NULL,0,0);
            if(SUCCEEDED(hr))
            {
                // Draw the mesh
                hr = VisualizeFaceModel(pColorDisplay, ftModel, &myCameraConfig, pSU, 1.0, viewOffset, pFTResult, 0x00FFFF00);
                ftModel->Release();
            }
            if(FAILED(hr))
                printf("Display failed!\n");
            Mat tempMat(COLOR_HIGHT,COLOR_WIDTH,CV_8UC4,pColorDisplay->GetBuffer());
            imshow("faceTracking",tempMat);
            bStop = waitKey(1); // ESC exits
        }
        else // no face tracked: only show the color image
        {
            pColorFrame->CopyTo(pColorDisplay,NULL,0,0);
            Mat tempMat(COLOR_HIGHT,COLOR_WIDTH,CV_8UC4,pColorDisplay->GetBuffer());
            imshow("faceTracking",tempMat);
            bStop = waitKey(1);
        }
        if(m_hEvNuiProcessStop!=NULL)
        {
            if( bStop == 27 || bStop == 'q' || bStop == 'Q' )
            {
                SetEvent(m_hEvNuiProcessStop);
                if(m_hProcesss!=NULL)
                {
                    WaitForSingleObject(m_hProcesss,INFINITE);
                    CloseHandle(m_hProcesss);
                    m_hProcesss = NULL;
                }
                break;
            }
        }
        else
        {
            break;
        }
        // TODO: also check here whether m_hEvNuiProcessStop has already been signaled!
        Sleep(16);
    }
    if(m_hProcesss!=NULL)
    {
        WaitForSingleObject(m_hProcesss,INFINITE);
        CloseHandle(m_hProcesss);
        m_hProcesss = NULL;
    }
    // Clean up.
    pFTResult->Release();
    pColorDisplay->Release();
    pDepthFrame->Release();
    pColorFrame->Release();
    pFT->Release();
    NuiShutdown();
    return 0;
}

// Draw the face model as a wireframe mesh plus the face rectangle.
// zoomFactor = 1.0f, viewOffset = POINT(0,0), pAAMRlt is the tracking result
HRESULT VisualizeFaceModel(IFTImage* pColorImg, IFTModel* pModel, FT_CAMERA_CONFIG const* pCameraConfig,
                           FLOAT const* pSUCoef, FLOAT zoomFactor, POINT viewOffset,
                           IFTResult* pAAMRlt, UINT32 color)
{
    if (!pColorImg || !pModel || !pCameraConfig || !pSUCoef || !pAAMRlt)
    {
        return E_POINTER;
    }
    HRESULT hr = S_OK;
    UINT vertexCount = pModel->GetVertexCount(); // number of face model vertices
    // pPts2D receives the projected 2D position of every vertex. _malloca allocates on the
    // stack (or on the heap for large sizes) and must be paired with _freea
    FT_VECTOR2D* pPts2D = reinterpret_cast<FT_VECTOR2D*>(_malloca(sizeof(FT_VECTOR2D) * vertexCount));
    if (pPts2D)
    {
        FLOAT *pAUs;
        UINT auCount; // UINT is the Windows API 32-bit unsigned integer
        hr = pAAMRlt->GetAUCoefficients(&pAUs, &auCount);
        if (SUCCEEDED(hr))
        {
            // rotationXYZ holds the head rotation angles!
            FLOAT scale, rotationXYZ[3], translationXYZ[3];
            hr = pAAMRlt->Get3DPose(&scale, rotationXYZ, translationXYZ);
            if (SUCCEEDED(hr))
            {
                // Project the vertexCount model vertices into pPts2D
                hr = pModel->GetProjectedShape(pCameraConfig, zoomFactor, viewOffset, pSUCoef, pModel->GetSUCount(),
                                               pAUs, auCount, scale, rotationXYZ, translationXYZ,
                                               pPts2D, vertexCount);
                if (SUCCEEDED(hr))
                {
                    POINT* p3DMdl = reinterpret_cast<POINT*>(_malloca(sizeof(POINT) * vertexCount));
                    if (p3DMdl)
                    {
                        for (UINT i = 0; i < vertexCount; ++i)
                        {
                            p3DMdl[i].x = LONG(pPts2D[i].x + 0.5f);
                            p3DMdl[i].y = LONG(pPts2D[i].y + 0.5f);
                        }
                        FT_TRIANGLE* pTriangles;
                        UINT triangleCount;
                        hr = pModel->GetTriangles(&pTriangles, &triangleCount);
                        if (SUCCEEDED(hr))
                        {
                            // Small open-addressing hash set so each triangle edge is drawn only once
                            struct EdgeHashTable
                            {
                                UINT32* pEdges;
                                UINT edgesAlloc;
                                void Insert(int a, int b)
                                {
                                    UINT32 v = (min(a, b) << 16) | max(a, b);
                                    UINT32 index = (v + (v << 8)) * 49157, i;
                                    for (i = 0; i < edgesAlloc - 1 && pEdges[(index + i) & (edgesAlloc - 1)]
                                                && v != pEdges[(index + i) & (edgesAlloc - 1)]; ++i)
                                    {
                                    }
                                    pEdges[(index + i) & (edgesAlloc - 1)] = v;
                                }
                            } eht;
                            eht.edgesAlloc = 1 << UINT(log(2.f * (1 + vertexCount + triangleCount)) / log(2.f));
                            eht.pEdges = reinterpret_cast<UINT32*>(_malloca(sizeof(UINT32) * eht.edgesAlloc));
                            if (eht.pEdges)
                            {
                                ZeroMemory(eht.pEdges, sizeof(UINT32) * eht.edgesAlloc);
                                for (UINT i = 0; i < triangleCount; ++i)
                                {
                                    eht.Insert(pTriangles[i].i, pTriangles[i].j);
                                    eht.Insert(pTriangles[i].j, pTriangles[i].k);
                                    eht.Insert(pTriangles[i].k, pTriangles[i].i);
                                }
                                for (UINT i = 0; i < eht.edgesAlloc; ++i)
                                {
                                    if(eht.pEdges[i] != 0)
                                    {
                                        pColorImg->DrawLine(p3DMdl[eht.pEdges[i] >> 16], p3DMdl[eht.pEdges[i] & 0xFFFF], color, 1);
                                    }
                                }
                                _freea(eht.pEdges);
                            }
                            // Draw the face rectangle
                            RECT rectFace;
                            hr = pAAMRlt->GetFaceRect(&rectFace);
                            if (SUCCEEDED(hr))
                            {
                                POINT leftTop = {rectFace.left, rectFace.top};
                                POINT rightTop = {rectFace.right - 1, rectFace.top};
                                POINT leftBottom = {rectFace.left, rectFace.bottom - 1};
                                POINT rightBottom = {rectFace.right - 1, rectFace.bottom - 1};
                                UINT32 nColor = 0xff00ff;
                                SUCCEEDED(hr = pColorImg->DrawLine(leftTop, rightTop, nColor, 1)) &&
                                SUCCEEDED(hr = pColorImg->DrawLine(rightTop, rightBottom, nColor, 1)) &&
                                SUCCEEDED(hr = pColorImg->DrawLine(rightBottom, leftBottom, nColor, 1)) &&
                                SUCCEEDED(hr = pColorImg->DrawLine(leftBottom, leftTop, nColor, 1));
                            }
                        }
                        _freea(p3DMdl);
                    }
                    else
                    {
                        hr = E_OUTOFMEMORY;
                    }
                }
            }
        }
        _freea(pPts2D);
    }
    else
    {
        hr = E_OUTOFMEMORY;
    }
    return hr;
}
```
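The bit layout that `DrawDepth` relies on can be isolated into two small helpers. This sketch assumes the Kinect SDK v1 `NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX` packing used above: the low 3 bits hold the player index and the high 13 bits hold the depth, taken here to be millimetres.

The listing computes brightness with `(BYTE)(256*realDepth/0x0fff)`, which wraps around for depths of 0x0fff (4095) or more. `DepthToGray` instead clamps at a caller-chosen maximum. `DepthPixel`, `UnpackDepth` and `DepthToGray` are hypothetical names.

```cpp
#include <cstdint>

// One pixel of a DEPTH_AND_PLAYER_INDEX frame, unpacked the way DrawDepth() does it.
struct DepthPixel {
    std::uint8_t player;    // low 3 bits: 0 = no player, 1..7 = player slot
    std::uint16_t depthMm;  // high 13 bits: depth (assumed millimetres)
};

DepthPixel UnpackDepth(std::uint16_t packed) {
    DepthPixel p;
    p.player = static_cast<std::uint8_t>(packed & 0x07);
    p.depthMm = static_cast<std::uint16_t>(packed >> 3);  // same as (packed & 0xFFF8) >> 3
    return p;
}

// Map a depth to 8-bit brightness (near = bright). Depths at or beyond maxMm
// become 0 instead of wrapping around as an unchecked BYTE cast would.
std::uint8_t DepthToGray(std::uint16_t depthMm, std::uint16_t maxMm) {
    if (depthMm >= maxMm) return 0;
    return static_cast<std::uint8_t>(255u - (255u * depthMm) / maxMm);
}
```

For example, `DepthToGray(2000, 4000)` yields 128, and any depth at or beyond `maxMm` maps to black.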

The fragment below comes from another version of the code, where the tracker lives in a `context` object. It also retrieves the 3D vertex coordinates with `Get3DShape`:

```cpp
IFTModel* ftModel;
HRESULT hr = context->m_pFaceTracker->GetFaceModel(&ftModel), hR;
if (FAILED(hr))
    return false;
FLOAT* pSU = NULL;
UINT numSU;
BOOL suConverged;
FLOAT headScale, tempDistance = 1;
context->m_pFaceTracker->GetShapeUnits(&headScale, &pSU, &numSU, &suConverged);
POINT viewOffset = {0, 0};
IFTResult* pAAMRlt = context->m_pFTResult;
UINT vertexCount = ftModel->GetVertexCount(); // number of vertices
FT_VECTOR2D* pPts2D = reinterpret_cast<FT_VECTOR2D*>(_malloca(sizeof(FT_VECTOR2D) * vertexCount));
if (pPts2D)
{
    FLOAT* pAUs;
    UINT auCount;
    hr = pAAMRlt->GetAUCoefficients(&pAUs, &auCount);
    if (SUCCEEDED(hr))
    {
        FLOAT scale, rotationXYZ[3], translationXYZ[3];
        hr = pAAMRlt->Get3DPose(&scale, rotationXYZ, translationXYZ); // rotationXYZ is the most important data!
        if (SUCCEEDED(hr))
        {
            hr = ftModel->GetProjectedShape(&m_videoConfig, 1.0, viewOffset, pSU, ftModel->GetSUCount(),
                                            pAUs, auCount, scale, rotationXYZ, translationXYZ,
                                            pPts2D, vertexCount);
            FT_VECTOR3D* pPts3D = reinterpret_cast<FT_VECTOR3D*>(_malloca(sizeof(FT_VECTOR3D) * vertexCount));
            if (pPts3D)
            {
                hR = ftModel->Get3DShape(pSU, ftModel->GetSUCount(), pAUs, ftModel->GetAUCount(),
                                         scale, rotationXYZ, translationXYZ, pPts3D, vertexCount);
                if (SUCCEEDED(hr) && SUCCEEDED(hR))
                {
                    // pPts3D now holds the 3D coordinates; from here on, do whatever you like with them~~
                }
                _freea(pPts3D);
            }
        }
    }
    _freea(pPts2D);
}
ftModel->Release();
```


Original article: https://www.cnblogs.com/wainiwann/p/8868448.html