
1. Topic

Scrape the first chapter of the novel 《全职高手》 from a novel website.

2. Code

Import packages

import random
import re

import jieba
import matplotlib.pyplot as plt
import numpy as np
import requests
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator, STOPWORDS
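The scraping code in this post indexes a list called `header` with `random.randint(0, 4)`, but the post never shows that list. The block below is only a guess at its shape: a hypothetical pool of five request-header dicts, with illustrative User-Agent strings and a hypothetical helper `pick_header`.

```python
import random

# Hypothetical pool of request headers. The original post does not show
# `header`; the five User-Agent strings below are illustrative only.
header = [
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:59.0) Gecko/20100101 Firefox/59.0"},
    {"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/11.1 Safari/605.1.15"},
    {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko"},
]


def pick_header():
    """Return one header dict at random (same effect as header[random.randint(0, 4)])."""
    return random.choice(header)
```

Using `random.choice` avoids hard-coding the upper index, so the pool can grow or shrink without touching the call sites.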

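Extract the title and body text

# NOTE: `header` is not defined anywhere in the original post; it is assumed
# to be a list of at least five request-header dicts.
req1 = requests.get('http://www.biqukan.cc/book/11/10358.html', headers=header[random.randint(0, 4)])  # send a GET request to the target site
req2 = requests.get('http://www.biqukan.cc/book/11/10358_2.html', headers=header[random.randint(0, 4)])
result1 = req1.content
result1 = result1.decode('gbk')  # the site serves GBK-encoded pages
result2 = req2.content
result2 = result2.decode('gbk')
title_re = re.compile(r' <li class="active">(.*?)</li>')  # pattern for the chapter title
text_re = re.compile(r'<br><br>([\s\S]*?)</div>')  # pattern for the body text
title = re.findall(title_re, result1)  # find the title
text1 = re.findall(text_re, result1)  # first part of the body text
text2 = re.findall(text_re, result2)  # second part of the body text
title = title[0]
print(title)
text1.append(text2[0])
text1 = '\r\n'.join(text1)
text1 = text1.split('\r\n')
text_1 = []  # will hold the cleaned sentences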
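To see what the two regular expressions capture without hitting the network, they can be run against a small HTML string. The fragment below is made up to imitate the structure the patterns expect; it is not the site's real markup.

```python
import re

# Made-up HTML fragment shaped like what the patterns expect (an assumption,
# not the real page source).
sample_html = (
    '<ol> <li class="active">Chapter One</li></ol>'
    '<div class="content"><br><br>first paragraph<br />\r\nsecond paragraph</div>'
)

title_re = re.compile(r' <li class="active">(.*?)</li>')
text_re = re.compile(r'<br><br>([\s\S]*?)</div>')

titles = title_re.findall(sample_html)
bodies = text_re.findall(sample_html)

# Guard against an empty match list instead of indexing [0] blindly.
title = titles[0] if titles else None
paragraphs = bodies[0].split('\r\n') if bodies else []

print(title)
print(paragraphs)
```

`[\s\S]*?` is used rather than `.*?` because `.` does not match newlines by default, and the body text spans several lines.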

Define a function that collects the URLs of all chapters

def get_url(url):
    req = requests.get(url, headers=header[random.randint(0, 4)])
    result = req.content
    result = result.decode('gbk')
    res = r'<dd class="col-md-3"><a href=(.*?) title='
    list_url = re.findall(res, result)
    list_url_ = []  # full chapter URLs
    for url_ in list_url:
        # The captured href is still wrapped in quotes. The original test for
        # two adjacent quote characters never matched, so nothing was appended;
        # remove either kind of quote and always append.
        url_ = url_.replace('"', '').replace("'", '')
        list_url_.append('http://www.biqukan.cc/book/11/' + url_)
    return list_url_
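The quote stripping and URL building in `get_url` can also be tried on its own. This is a minimal sketch, assuming the captured `href` values look like `"10358.html"`; the helper name `normalize_hrefs` is hypothetical, and `urljoin` is swapped in for the plain string concatenation used above.

```python
from urllib.parse import urljoin

BASE_URL = 'http://www.biqukan.cc/book/11/'


def normalize_hrefs(hrefs, base=BASE_URL):
    """Strip wrapping quotes from captured href values and make them absolute."""
    urls = []
    for href in hrefs:
        cleaned = href.strip().strip('"\'')
        if cleaned:  # skip empty captures such as '""'
            urls.append(urljoin(base, cleaned))
    return urls


print(normalize_hrefs(['"10358.html"', "'10359.html'", '""']))
```

Unlike concatenation, `urljoin` also gives a sensible result if the page ever uses root-relative links such as `/book/11/10358.html`.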

Remove unwanted fragments from each sentence

# Fragments to delete. The original tested for ' ', which was probably
# '&nbsp;' before the blog rendered the entity, so both forms are handled.
junk_fragments = (
    '&nbsp;',
    '\xa0',
    ' ',
    '<br />',
    '-->><p class="text-danger text-center mg0">本章未完,点击下一页继续阅读</p>',  # "chapter continues on the next page" notice
)
for sentence in text1:
    sentence = sentence.strip()
    # Apply every replacement to every sentence; the original if/elif chain
    # skipped the pagination notice whenever an earlier branch matched.
    for junk in junk_fragments:
        sentence = sentence.replace(junk, '')
    text_1.append(sentence)
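The cleanup step is easier to test when wrapped in a function. The sketch below is one possible arrangement, not the author's code: `clean_sentence` and `clean_all` are hypothetical names, and dropping empty lines is an added assumption.

```python
# Pagination notice the site appends when a chapter continues on the next page.
PAGINATION_NOTICE = '-->><p class="text-danger text-center mg0">本章未完,点击下一页继续阅读</p>'
JUNK = ('&nbsp;', '\xa0', '<br />', PAGINATION_NOTICE)


def clean_sentence(sentence):
    """Remove HTML leftovers from one line of chapter text."""
    for junk in JUNK:
        sentence = sentence.replace(junk, '')
    return sentence.strip()


def clean_all(sentences):
    """Clean every line and drop the ones that end up empty (an added assumption)."""
    cleaned = (clean_sentence(s) for s in sentences)
    return [s for s in cleaned if s]


print(clean_all(['  a<br />', '', '\xa0b' + PAGINATION_NOTICE]))
```

Keeping the fragments in one tuple means a newly discovered leftover only needs one extra entry rather than another branch.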

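Write the data to a text file

# NOTE: get_txt is not shown in the original post; it presumably fetches one
# chapter and writes it to fo.
fo = open("qzgs.txt", "wb")
for url_txt in get_url('http://www.biqukan.cc/book/11/'):
    get_txt(url_txt)
fo.close()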
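Since `get_txt` is not included in the post, its writing half can only be guessed at. The sketch below assumes it writes a title and body lines as GBK bytes to the open binary file; `save_chapter` is a hypothetical stand-in, and the demo writes to a temporary file rather than `qzgs.txt`.

```python
import os
import tempfile


def save_chapter(fo, title, lines, encoding='gbk'):
    """Write one chapter (title plus body lines) to a file opened in 'wb' mode."""
    # errors='ignore' drops characters GBK cannot represent instead of raising.
    fo.write((title + '\r\n').encode(encoding, errors='ignore'))
    for line in lines:
        fo.write((line + '\r\n').encode(encoding, errors='ignore'))


# The with-block closes the file even if a write raises.
path = os.path.join(tempfile.mkdtemp(), 'qzgs_demo.txt')
with open(path, 'wb') as fo:
    save_chapter(fo, 'Chapter One', ['first line', 'second line'])

# Read it back the same way the word-cloud step reads qzgs.txt.
with open(path, encoding='gbk') as f:
    saved = f.read()
print(saved)
```

Writing bytes in GBK matches the later `open('qzgs.txt', encoding='gbk')` call, so the file reads back without decoding errors.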

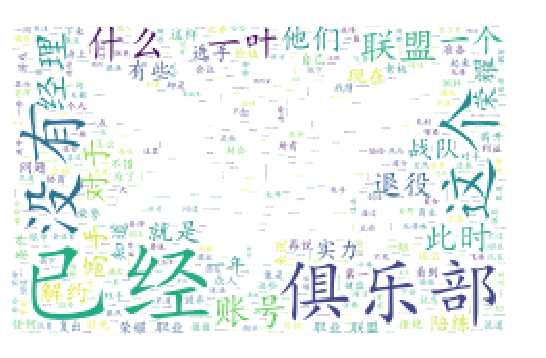

Read the text for the word cloud and the image that defines its shape

text_from_file_with_apath = open('qzgs.txt', encoding='gbk').read()
abel_mask = np.array(Image.open("qzgs.jpg"))

Segment the text into words

wordlist_after_jieba = jieba.cut(text_from_file_with_apath, cut_all=True)  # full mode: emit every word jieba can find
wl_space_split = " ".join(wordlist_after_jieba)

Configure the word cloud's appearance

my_wordcloud = WordCloud(
    background_color='white',
    mask=abel_mask,
    max_words=80,
    max_font_size=150,
    random_state=30,
    scale=0.5,
    stopwords={'nbsp', 'br'},  # leftover HTML fragments to ignore
    font_path='C:/Users/Windows/fonts/simkai.ttf',  # a font that contains Chinese glyphs
).generate(wl_space_split)
image_colors = ImageColorGenerator(abel_mask)  # colors sampled from the mask image

Display the generated word cloud

plt.imshow(my_wordcloud)
plt.axis("off")
plt.show()

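3. Data screenshots

4. Problems encountered and solutions

The wordcloud package kept failing to install.

Solution: I found a wordcloud package to download through Baidu and installed it from that file, which finally worked.

5. Summary

After using Python, I found that it has a very wide range of uses and is needed in many places. It is a language worth learning well.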


Original article: https://www.cnblogs.com/wlh0329/p/8973883.html