Tags: etl dal class utf8 block \n expression sts import

```python
import re
from datetime import datetime

import jieba
import requests
from bs4 import BeautifulSoup


# Fetch and print the details of a single news article
def getNewsDetail(newsUrl):
    resd = requests.get(newsUrl, timeout=10)
    resd.encoding = 'gbk'  # gbk is a superset of gb2312, so rarer characters also decode
    soupd = BeautifulSoup(resd.text, 'html.parser')
    content = soupd.select('#endText')[0].text
    info = soupd.select('.post_time_source')[0].text
    # Match the publish time (date and time fields separated by any single character)
    date = re.search(r'(\d{4}.\d{2}.\d{2}\s\d{2}.\d{2}.\d{2})', info).group(1)
    # Convert the time string into a datetime object
    dateTime = datetime.strptime(date, '%Y-%m-%d %H:%M:%S')
    # "来源" means "source"; accept either an ASCII or a full-width colon after it
    sources = re.search(r'来源[:：]\s*(.*)', info).group(1)
    keyWords = getKeyWords(content)
    print('Published: {0}\nSource: {1}'.format(dateTime, sources))
    print('Keywords: {}'.format(', '.join(keyWords)))
    print(content)
    # Save the article text so it can be pasted into the word-cloud generator
    with open(r'D:\python\test.txt', 'w', encoding='utf8') as fo:
        fo.write(content)


# Use jieba word segmentation to extract the article's top keywords
def getKeyWords(content):
    # Keep only Chinese characters, joined into text without punctuation
    content = ''.join(re.findall(r'[\u4e00-\u9fa5]', content))
    wordSet = set(jieba.lcut(content))
    # Count occurrences of each word in the text, dropping single-character words
    wordDict = {w: content.count(w) for w in wordSet if len(w) >= 2}
    # Sort by frequency, descending, and return the top three keywords
    dictList = sorted(wordDict.items(), key=lambda item: item[1], reverse=True)
    return [word for word, count in dictList[:3]]


# Fetch one page of the news list
def getListPage(listUrl):
    res = requests.get(listUrl, timeout=10)
    res.encoding = 'gbk'
    soup = BeautifulSoup(res.text, 'html.parser')
    for new in soup.select('#news-flow-content')[0].select('li'):
        url = new.select('a')[0]['href']
        title = new.select('a')[0].text
        print('Title: {0}\nLink: {1}'.format(title, url))
        getNewsDetail(url)  # returns nothing, so it is not wrapped in print()
        break  # only the first article in the list is processed


listUrl = 'http://tech.163.com/it/'
getListPage(listUrl)
```
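The time-and-source parsing inside `getNewsDetail` can be tried without any network access. The sketch below runs the same regular expressions on a hand-written line; the sample text in `SAMPLE_INFO` and the helper name `parse_time_and_source` are hypothetical, and the real `.post_time_source` markup on 163.com may differ.

```python
import re
from datetime import datetime

# Hypothetical sample of the text inside the `.post_time_source` element
SAMPLE_INFO = "2018-04-30 10:15:32  来源：网易科技报道"


def parse_time_and_source(info):
    """Extract the publish time and the source name from the info line."""
    # Same pattern as getNewsDetail: any single separator between the fields
    match = re.search(r"(\d{4}.\d{2}.\d{2}\s\d{2}.\d{2}.\d{2})", info)
    if match is None:
        return None, None
    # strptime only succeeds if the separators really are '-' and ':'
    published = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
    # Accept both an ASCII and a full-width colon after 来源 ("source")
    source_match = re.search(r"来源[:：]\s*(.*)", info)
    source = source_match.group(1).strip() if source_match else None
    return published, source


published, source = parse_time_and_source(SAMPLE_INFO)
print(published)  # 2018-04-30 10:15:32
print(source)     # 网易科技报道
```

Returning `(None, None)` when no time is found is a design choice for this sketch; the original code instead raises an `AttributeError` on `.group(1)` if the pattern does not match.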

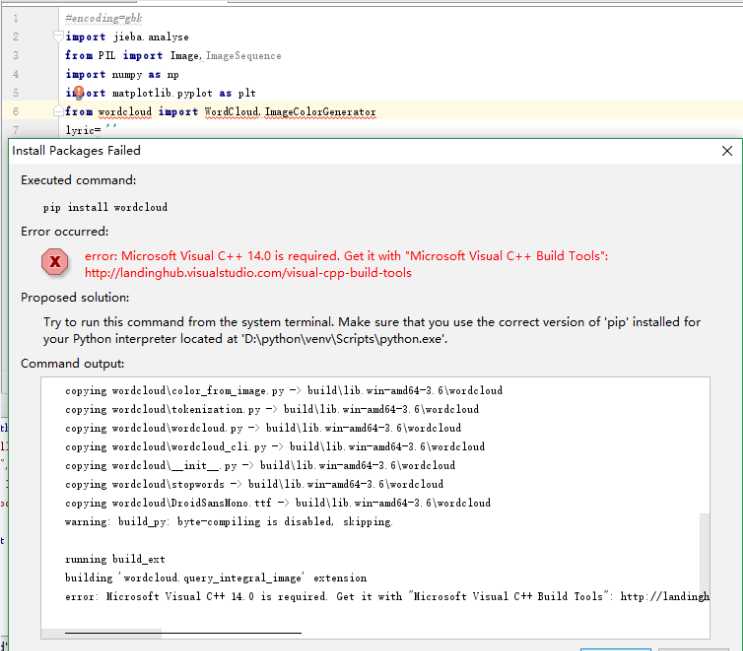

Because I could never get the files for Python's wordcloud package to download, I used an online word-cloud generator instead: https://timdream.org/wordcloud
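A word cloud sizes each word by its frequency, so the input it needs is essentially a ranked word count. The sketch below shows that ranking step with only the standard library. It assumes the text has already been segmented into a token list (for example by `jieba.lcut`, not shown here), and the helper `top_keywords` and the sample `tokens` are hypothetical. Note one difference from `getKeyWords` above: this counts whole tokens, whereas the original counts substring occurrences with `content.count`.

```python
from collections import Counter


def top_keywords(words, k=3, min_len=2):
    """Return the k most frequent words as (word, count) pairs.

    Tokens shorter than min_len (e.g. single-character function words)
    are skipped, mirroring the filtering in getKeyWords.
    """
    counts = Counter(w for w in words if len(w) >= min_len)
    # most_common sorts by count, descending; ties keep first-seen order
    return counts.most_common(k)


# Hypothetical token list standing in for a segmented article
tokens = ["区块链", "技术", "的", "区块链", "应用", "技术", "区块链", "是"]
print(top_keywords(tokens))
# [('区块链', 3), ('技术', 2), ('应用', 1)]
```

Passing a larger `k` yields the longer weighted list a word cloud would draw from; the exact input format the online generator accepts is not assumed here.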

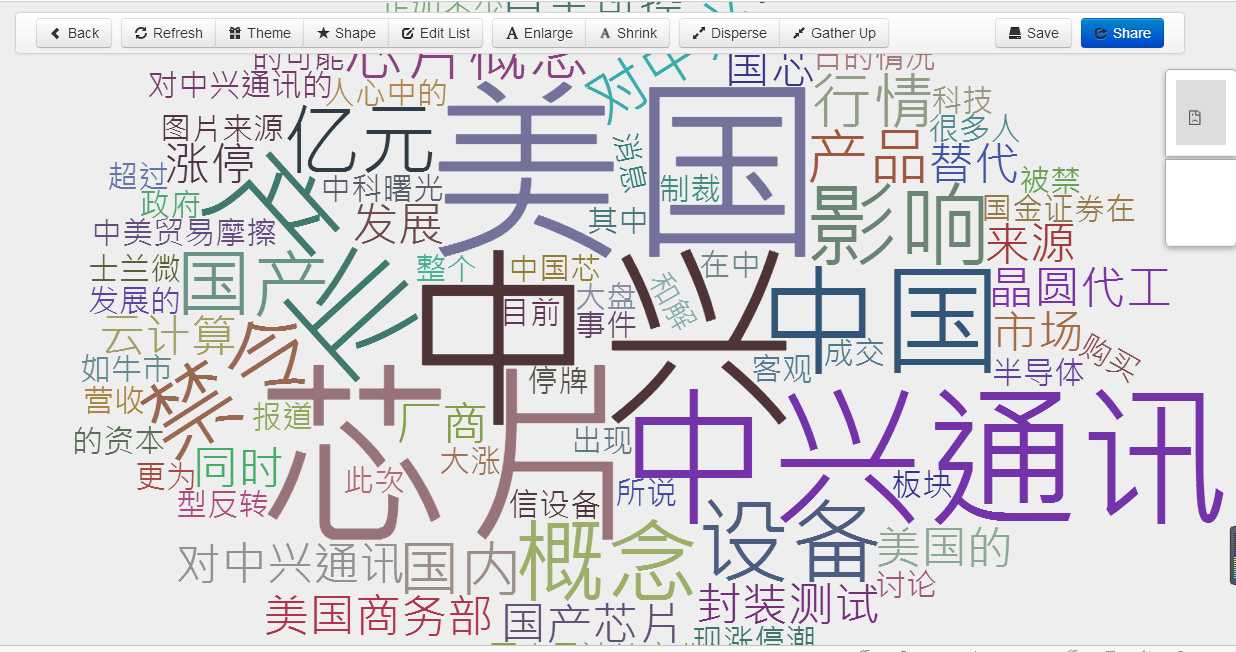

Screenshot of the result:

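This time the scraper fetched a news article about blockchain: Python scrapes the text of the news page, and the online word-cloud generator then turns that text into a word cloud.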


Original post: https://www.cnblogs.com/zxc109525/p/8972524.html