Tags: from img network .sh port image technology 1.5 end

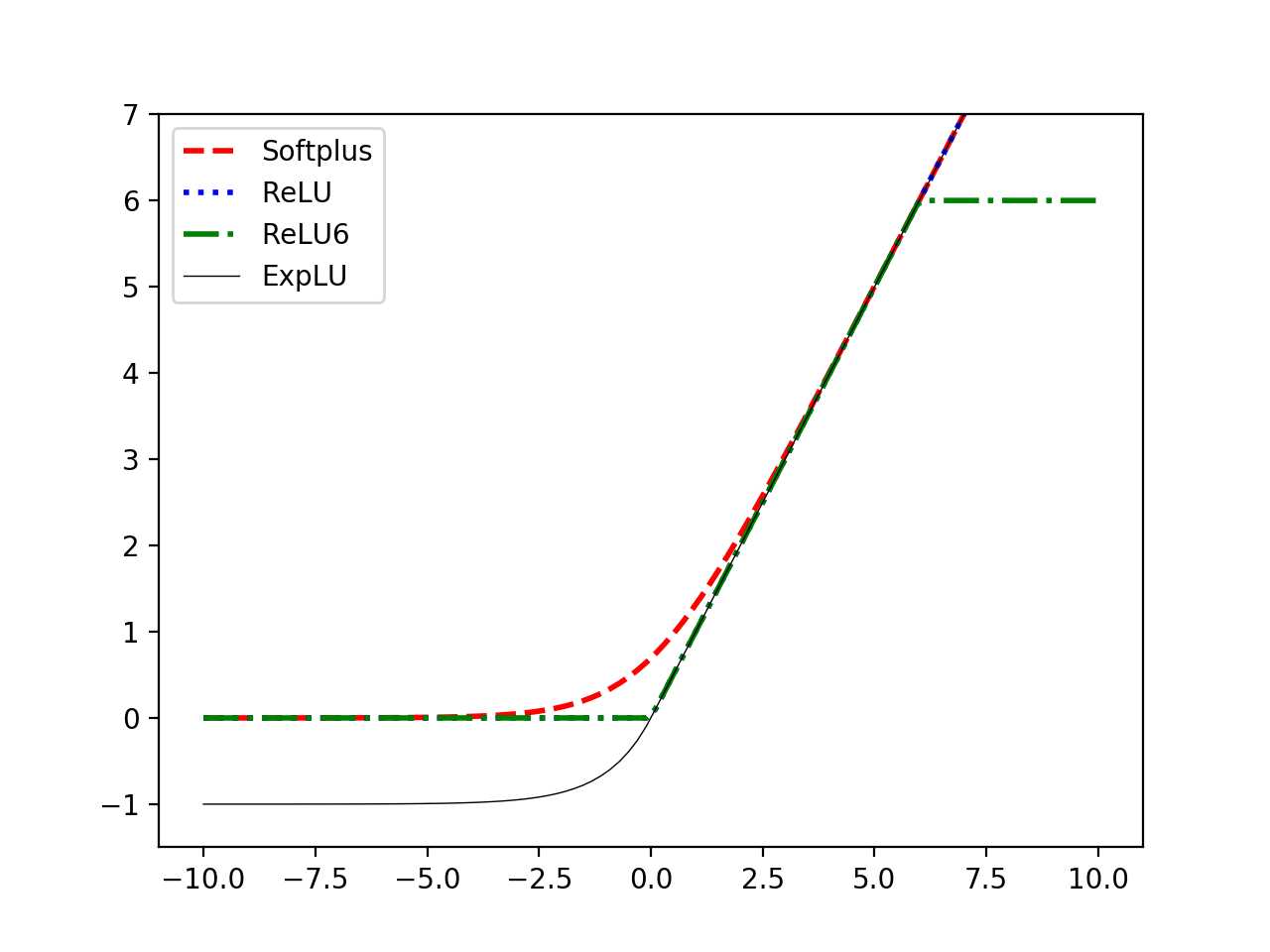

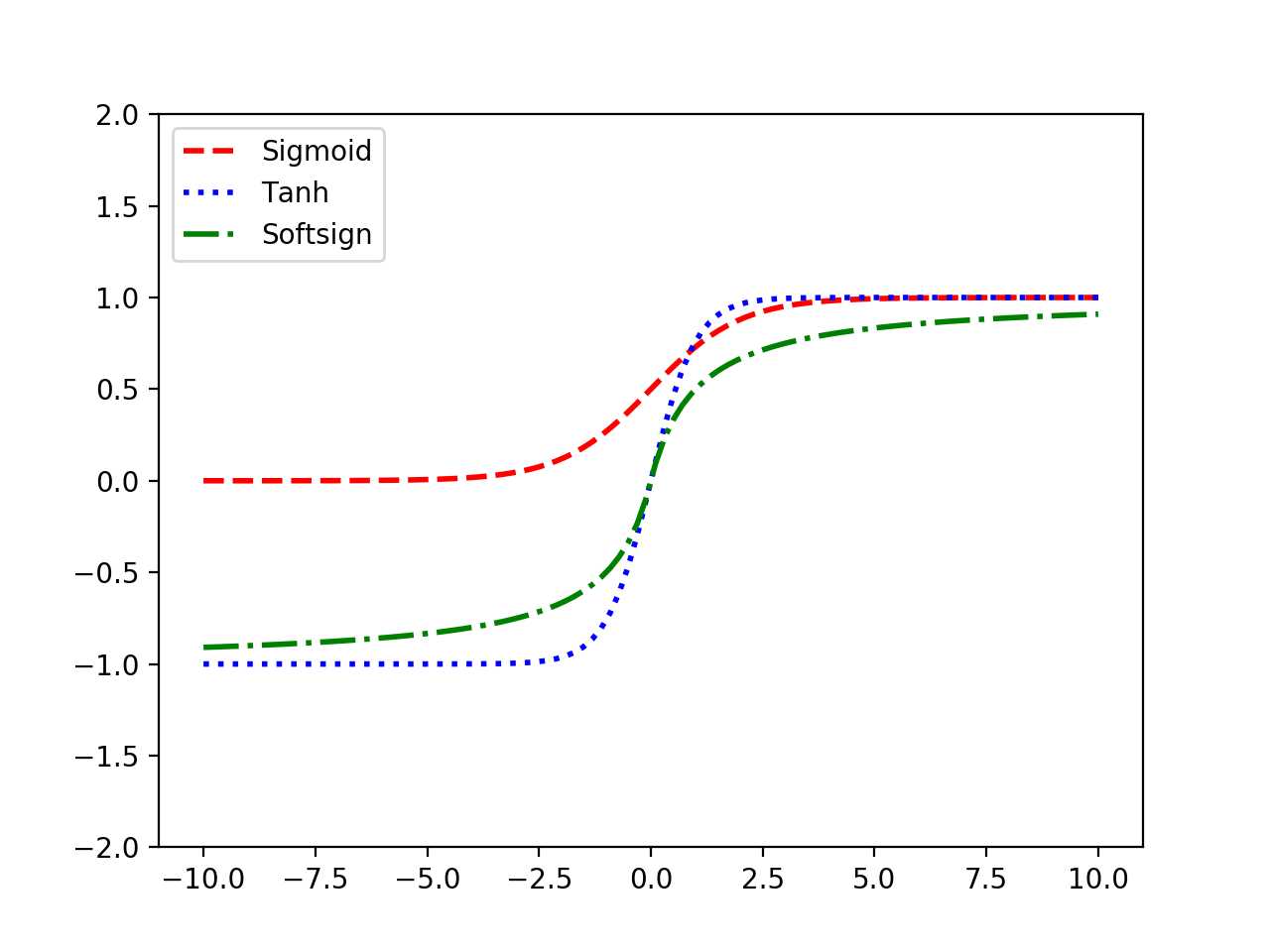

```python
# Activation Functions
#----------------------------------
#
# This script introduces activation
# functions in TensorFlow.
# Written for TensorFlow 1.x (graph mode with tf.Session).

# Implementing Activation Functions
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.python.framework import ops
ops.reset_default_graph()

# Open graph session
sess = tf.Session()

# X range
x_vals = np.linspace(start=-10., stop=10., num=100)

# ReLU activation
print(sess.run(tf.nn.relu([-3., 3., 10.])))
y_relu = sess.run(tf.nn.relu(x_vals))

# ReLU-6 activation
print(sess.run(tf.nn.relu6([-3., 3., 10.])))
y_relu6 = sess.run(tf.nn.relu6(x_vals))

# Sigmoid activation
print(sess.run(tf.nn.sigmoid([-1., 0., 1.])))
y_sigmoid = sess.run(tf.nn.sigmoid(x_vals))

# Hyperbolic tangent activation
print(sess.run(tf.nn.tanh([-1., 0., 1.])))
y_tanh = sess.run(tf.nn.tanh(x_vals))

# Softsign activation
print(sess.run(tf.nn.softsign([-1., 0., 1.])))
y_softsign = sess.run(tf.nn.softsign(x_vals))

# Softplus activation
print(sess.run(tf.nn.softplus([-1., 0., 1.])))
y_softplus = sess.run(tf.nn.softplus(x_vals))

# Exponential linear unit (ELU) activation
print(sess.run(tf.nn.elu([-1., 0., 1.])))
y_elu = sess.run(tf.nn.elu(x_vals))

sess.close()

# Plot the ReLU-like functions
plt.plot(x_vals, y_softplus, 'r--', label='Softplus', linewidth=2)
plt.plot(x_vals, y_relu, 'b:', label='ReLU', linewidth=2)
plt.plot(x_vals, y_relu6, 'g-.', label='ReLU6', linewidth=2)
plt.plot(x_vals, y_elu, 'k-', label='ExpLU', linewidth=0.5)
plt.ylim([-1.5, 7])
plt.legend(loc='upper left')
plt.show()

# Plot the S-shaped functions
plt.plot(x_vals, y_sigmoid, 'r--', label='Sigmoid', linewidth=2)
plt.plot(x_vals, y_tanh, 'b:', label='Tanh', linewidth=2)
plt.plot(x_vals, y_softsign, 'g-.', label='Softsign', linewidth=2)
plt.ylim([-2, 2])
plt.legend(loc='upper left')
plt.show()
```
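To see what each `tf.nn` op above actually computes, here is a minimal plain-Python sketch of the same seven functions, written from their standard textbook definitions rather than from TensorFlow's source. The helper names (`relu`, `relu6`, `sigmoid`, `tanh`, `softsign`, `softplus`, `elu`) are hypothetical, the functions take scalars only to keep the sketch short, and ELU is assumed to use `alpha = 1`, which matches `tf.nn.elu`.

```python
import math

# Scalar reference versions of the activations used in the TensorFlow script.
# Written from the standard definitions; illustrative only, not TensorFlow code.

def relu(x: float) -> float:
    # max(0, x)
    return max(0.0, x)

def relu6(x: float) -> float:
    # ReLU clipped from above at 6: min(max(0, x), 6)
    return min(max(0.0, x), 6.0)

def sigmoid(x: float) -> float:
    # 1 / (1 + e^(-x)), split by sign so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x: float) -> float:
    # (e^x - e^(-x)) / (e^x + e^(-x)), delegated to the math module
    return math.tanh(x)

def softsign(x: float) -> float:
    # x / (1 + |x|)
    return x / (1.0 + abs(x))

def softplus(x: float) -> float:
    # log(1 + e^x), rewritten as max(x, 0) + log(1 + e^(-|x|)) for stability
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def elu(x: float, alpha: float = 1.0) -> float:
    # x for x > 0, alpha * (e^x - 1) otherwise
    return x if x > 0 else alpha * math.expm1(x)

# Evaluate on the same small inputs the script prints.
for name, f in [("relu", relu), ("relu6", relu6)]:
    print(name, [round(f(v), 4) for v in (-3.0, 3.0, 10.0)])
for name, f in [("sigmoid", sigmoid), ("tanh", tanh), ("softsign", softsign),
                ("softplus", softplus), ("elu", elu)]:
    print(name, [round(f(v), 4) for v in (-1.0, 0.0, 1.0)])
```

The printed lists should agree with the `print(sess.run(...))` lines of the TensorFlow script up to float32 rounding. The definitions also make the two plots easier to read: ReLU, ReLU6, Softplus and ELU are unbounded or clipped ramps near the positive axis, while Sigmoid, Tanh and Softsign are S-shaped curves bounded by (0, 1) or (-1, 1).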


Original article: https://www.cnblogs.com/bonelee/p/8994319.html