
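All operations in this walkthrough are performed in temp/2018-05-09 under the current user's home directory (/home/hadoop/temp/2018-05-09 in the commands below).

Download the compressed files with wget, using the following command:

wget -drc --accept-regex=REGEX -P data ftp://ftp.ncdc.noaa.gov/pub/data/noaa/2015/6*

Before doing this, set up the environment by adding the following lines to .bashrc: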

export PATH=$PATH:/usr/local/hbase/bin:/usr/local/hadoop/sbin:/usr/local/hadoop/bin

export HADOOP_HOME=/usr/local/hadoop

export STREAM=$HADOOP_HOME/share/hadoop/tools/lib/hadoop-streaming-*.jar
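The STREAM variable above stores a wildcard pattern, which the shell only expands when $STREAM is later used unquoted. As a minimal sketch, the check below confirms that the pattern matches exactly one jar. The function name find_streaming_jar is hypothetical, and the fallback path /usr/local/hadoop is taken from the export above.

```python
import glob
import os


def find_streaming_jar(hadoop_home):
    """Return the single hadoop-streaming jar under hadoop_home, or raise."""
    pattern = os.path.join(
        hadoop_home, "share", "hadoop", "tools", "lib", "hadoop-streaming-*.jar"
    )
    matches = sorted(glob.glob(pattern))
    if len(matches) != 1:
        raise RuntimeError(
            "expected exactly one jar for %s, found %d" % (pattern, len(matches))
        )
    return matches[0]


if __name__ == "__main__":
    # Fall back to the install path used in the exports above.
    home = os.environ.get("HADOOP_HOME", "/usr/local/hadoop")
    try:
        print(find_streaming_jar(home))
    except RuntimeError as err:
        print(err)
```

If more than one jar matches, the hadoop jar command would receive extra arguments, so it is worth running a check like this once after editing .bashrc.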

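After the download finishes, decompress the files, then start HDFS and put the decompressed file into it with the following commands: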

start-dfs.sh

hdfs dfs -mkdir weather_data

hdfs dfs -put weather.txt weather_data/

With the file in HDFS, mapper.py can be written. Its main code is as follows:

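import sys

# Each input line is one fixed-width weather record.
for line in sys.stdin:
    line = line.strip()
    # Emit "date<TAB>temperature": the date is line[15:23], the signed temperature is line[87:92].
    print('%s\t%d' % (line[15:23], int(line[87:92])))

Next is reducer.py. Its main code is as follows: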

import sys

current_date = None
current_temperature = 0

# Input arrives sorted by date, so all lines for one date are adjacent.
for line in sys.stdin:
    line = line.strip()
    try:
        date, temperature = line.split('\t', 1)
        temperature = int(temperature)
    except ValueError:
        # Skip lines that are malformed or have a non-numeric temperature.
        continue
    if current_date == date:
        # Same date: keep the larger temperature.
        if current_temperature < temperature:
            current_temperature = temperature
    else:
        # New date: emit the finished date, then start tracking the new one.
        if current_date:
            print('%s\t%d' % (current_date, current_temperature))
        current_temperature = temperature
        current_date = date

# Emit the last date, if any input was seen.
if current_date:
    print('%s\t%d' % (current_date, current_temperature))

Now test the mapper and reducer locally with the following commands:

chmod a+x mapper.py

chmod a+x reducer.py

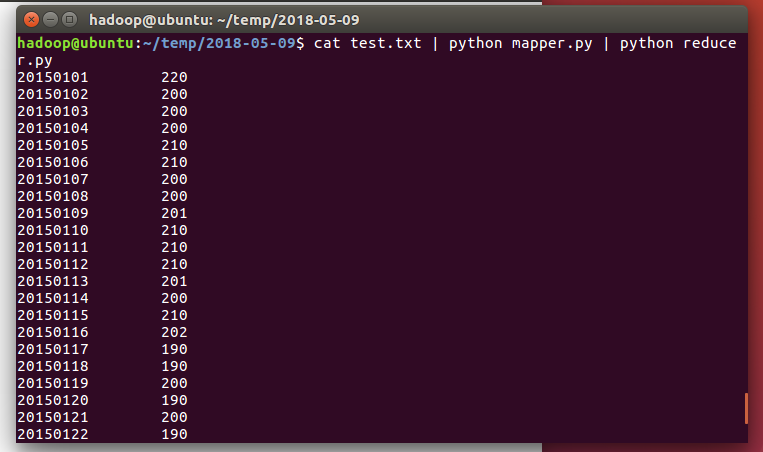

cat test.txt | python mapper.py | python reducer.py

test.txt contains a portion of the weather data.

The test run was captured in a screenshot.
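One point about the local test: the reducer only compares each line with the previous one, so it expects all lines for a date to be adjacent. Hadoop sorts the mapper output by key before the reduce step, but the plain pipe does not, so unless test.txt is already in date order a sort stage between the two scripts is needed to mimic the job. The sketch below imitates map, sort, and reduce in pure Python. The helper make_record and its filler characters are hypothetical and exist only to build sample lines with the date and temperature at the offsets mapper.py reads.

```python
from itertools import groupby


def map_line(line):
    """Mirror mapper.py: date from line[15:23], temperature from line[87:92]."""
    line = line.strip()
    return line[15:23], int(line[87:92])


def max_per_date(pairs):
    """Sort by date (standing in for Hadoop's shuffle), then take the max per date."""
    ordered = sorted(pairs, key=lambda pair: pair[0])
    for date, group in groupby(ordered, key=lambda pair: pair[0]):
        yield date, max(temp for _, temp in group)


def make_record(date, temp_field):
    """Build a synthetic fixed-width line (hypothetical helper, filler is '0')."""
    return "0" * 15 + date + "0" * 64 + temp_field + "0" * 10


if __name__ == "__main__":
    # The two readings for 20150101 are deliberately not adjacent.
    records = [
        make_record("20150101", "+0050"),
        make_record("20150102", "-0010"),
        make_record("20150101", "+0120"),
    ]
    for date, temp in max_per_date(map_line(r) for r in records):
        print("%s\t%d" % (date, temp))
```

Without the sorting step, reducer.py would print 20150101 twice for this input, once per run of adjacent lines.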

Once the test run succeeds, write run.sh:

hadoop jar $STREAM -D stream.non.zero.exit.is.failure=false -file /home/hadoop/temp/2018-05-09/mapper.py -mapper 'python /home/hadoop/temp/2018-05-09/mapper.py' -file /home/hadoop/temp/2018-05-09/reducer.py -reducer 'python /home/hadoop/temp/2018-05-09/reducer.py' -input /user/hadoop/weather_data/*.txt -output /user/hadoop/weather_output
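The run.sh command is long, and its quoting matters: each of -mapper and -reducer takes a single argument that itself contains a space. As an illustration only, the sketch below assembles the same arguments as a Python list and prints them shell-quoted. The function name build_streaming_command and the jar name hadoop-streaming.jar are hypothetical placeholders. Nothing is executed.

```python
import shlex

WORK_DIR = "/home/hadoop/temp/2018-05-09"


def build_streaming_command(stream_jar, work_dir, input_path, output_path):
    """Return the streaming job arguments from run.sh as a list of strings."""
    mapper = work_dir + "/mapper.py"
    reducer = work_dir + "/reducer.py"
    return [
        "hadoop", "jar", stream_jar,
        # Generic -D options have to come before the streaming options.
        "-D", "stream.non.zero.exit.is.failure=false",
        "-file", mapper, "-mapper", "python " + mapper,
        "-file", reducer, "-reducer", "python " + reducer,
        "-input", input_path,
        "-output", output_path,
    ]


if __name__ == "__main__":
    cmd = build_streaming_command(
        "hadoop-streaming.jar",  # placeholder for the jar that $STREAM expands to
        WORK_DIR,
        "/user/hadoop/weather_data/*.txt",
        "/user/hadoop/weather_output",
    )
    # Print a copy-pasteable form; arguments with spaces or wildcards get quoted.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

The printed form shows the mapper and reducer commands wrapped in single quotes, matching the quoting used in run.sh.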

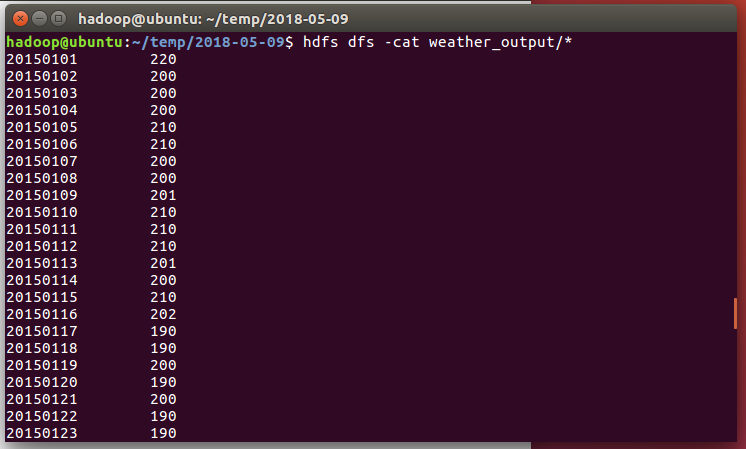

Run run.sh:

source run.sh

Finally, the results were printed with cat and captured in a screenshot.

The files under /temp can be downloaded from the link.
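One caveat about mapper.py: it converts every line's temperature field without checking it. The sketch below is a more defensive parser and rests on two assumptions that the walkthrough does not state, both taken from the NOAA ISD record layout: a missing air temperature is written as +9999, and temperatures are stored in tenths of a degree Celsius. The name parse_record is hypothetical.

```python
MISSING = "+9999"  # assumption: ISD sentinel for a missing air temperature


def parse_record(line):
    """Return (date, temperature in tenths of a degree C), or None to skip the line."""
    line = line.rstrip("\n")
    if len(line) < 92:
        # Too short to contain the temperature field at line[87:92].
        return None
    field = line[87:92]
    if field == MISSING:
        return None
    try:
        return line[15:23], int(field)
    except ValueError:
        return None


if __name__ == "__main__":
    # Synthetic lines: filler '0' characters around the date and temperature fields.
    good = "0" * 15 + "20150101" + "0" * 64 + "+0123"
    missing = "0" * 15 + "20150101" + "0" * 64 + "+9999"
    for sample in (good, missing, "short line"):
        parsed = parse_record(sample)
        if parsed is None:
            print("skipped")
        else:
            date, tenths = parsed
            # Assumption: divide by 10 to get degrees Celsius.
            print("%s\t%.1f" % (date, tenths / 10.0))
```

Skipping the sentinel matters for a maximum: under these assumptions, a single +9999 reading would otherwise win on its date.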


Original article: https://www.cnblogs.com/5277hnl/p/9016724.html