
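My local test machine is not on the same LAN as the servers, and the installed Hadoop configuration uses intranet IPs for communication between the cluster machines.

In this setup we can still reach the namenode machine.

The namenode gives us the IP address of the machine that holds the data, so that we can connect to its data transfer service.

However, the address it returns is the datanode's intranet IP, and we cannot reach the datanode server at that IP from outside the LAN.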
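The situation described above can be checked from the development machine with a small probe. This is only a sketch using the JDK: the class and method names are made up for illustration, and the address `192.168.1.219` and port `50010` are the ones that appear in the log in this post. It reports whether the address lies in a private range and whether a plain TCP connection to it succeeds within a timeout.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;

// Hypothetical helper class, not part of Hadoop.
class DatanodeReachability {

    // True when the address is in a private (site-local) range such as 192.168.x.x.
    // Returns false if the string cannot be resolved to an address.
    static boolean isPrivateAddress(String ip) {
        try {
            return InetAddress.getByName(ip).isSiteLocalAddress();
        } catch (UnknownHostException e) {
            return false;
        }
    }

    // Attempts a plain TCP connection and gives up after timeoutMillis.
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Address and port taken from the DFSClient warning in this post.
        String datanodeIp = "192.168.1.219";
        int dataTransferPort = 50010;

        System.out.println("private address: " + isPrivateAddress(datanodeIp));
        System.out.println("reachable: " + canConnect(datanodeIp, dataTransferPort, 3000));
    }
}
```

If the address is private and the connection fails while the namenode is still reachable, the client is most likely being handed an intranet address it cannot route to, which matches the timeout shown in the log.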

The error looks like this:

2018-06-06 17:01:44,555 [main] WARN [org.apache.hadoop.hdfs.BlockReaderFactory] - I/O error constructing remote block reader.

java.net.ConnectException: Connection timed out: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DFSClient.newConnectedPeer(DFSClient.java:3450)

at org.apache.hadoop.hdfs.BlockReaderFactory.nextTcpPeer(BlockReaderFactory.java:777)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:694)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:665)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:874)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:926)

at java.io.DataInputStream.read(DataInputStream.java:149)

at sun.nio.cs.StreamDecoder.readBytes(StreamDecoder.java:284)

at sun.nio.cs.StreamDecoder.implRead(StreamDecoder.java:326)

at sun.nio.cs.StreamDecoder.read(StreamDecoder.java:178)

at java.io.InputStreamReader.read(InputStreamReader.java:184)

at java.io.BufferedReader.fill(BufferedReader.java:161)

at java.io.BufferedReader.readLine(BufferedReader.java:324)

at java.io.BufferedReader.readLine(BufferedReader.java:389)

at com.feiyangshop.recommendation.HdfsHandler.main(HdfsHandler.java:36)

2018-06-06 17:01:44,560 [main] WARN [org.apache.hadoop.hdfs.DFSClient] - Failed to connect to /192.168.1.219:50010 for block, add to deadNodes and continue. java.net.ConnectException: Connection timed out: no further information

java.net.ConnectException: Connection timed out: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DFSClient.newConnectedPeer(DFSClient.java:3450)

at org.apache.hadoop.hdfs.BlockReaderFactory.nextTcpPeer(BlockReaderFactory.java:777)

To let the development machine access HDFS, we can reach the datanodes by hostname instead of by IP.

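Have the namenode return the datanode's hostname, map that hostname to the datanode's public IP in the development machine's hosts file, and add the following code to the program that talks to HDFS: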

import org.apache.hadoop.conf.Configuration;

Configuration conf = new Configuration();

// Make the client connect to datanodes by hostname

conf.set("dfs.client.use.datanode.hostname", "true");

Another fairly common error is the following:

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSums(IILjava/nio/ByteBuffer;ILjava/nio/ByteBuffer;IILjava/lang/String;JZ)V

at org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSums(Native Method)

at org.apache.hadoop.util.NativeCrc32.verifyChunkedSums(NativeCrc32.java:59)

at org.apache.hadoop.util.DataChecksum.verifyChunkedSums(DataChecksum.java:301)

at org.apache.hadoop.hdfs.RemoteBlockReader2.readNextPacket(RemoteBlockReader2.java:231)

at org.apache.hadoop.hdfs.RemoteBlockReader2.read(RemoteBlockReader2.java:152)

at org.apache.hadoop.hdfs.DFSInputStream$ByteArrayStrategy.doRead(DFSInputStream.java:767)

at org.apache.hadoop.hdfs.DFSInputStream.readBuffer(DFSInputStream.java:823)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:883)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:926)

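at java.io.DataInputStream.read(DataInputStream.java:149)

The native binaries in the bin directory of HADOOP_HOME on Windows (such as hadoop.dll and winutils.exe) are 32-bit builds and should be replaced with builds for 64-bit Windows. I have a Hadoop package compiled for 64-bit Windows; if you do not have one, you can download it from the link below.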

This package was apparently built for 64-bit Windows 7, but it also works on my 64-bit Windows 10 machine.

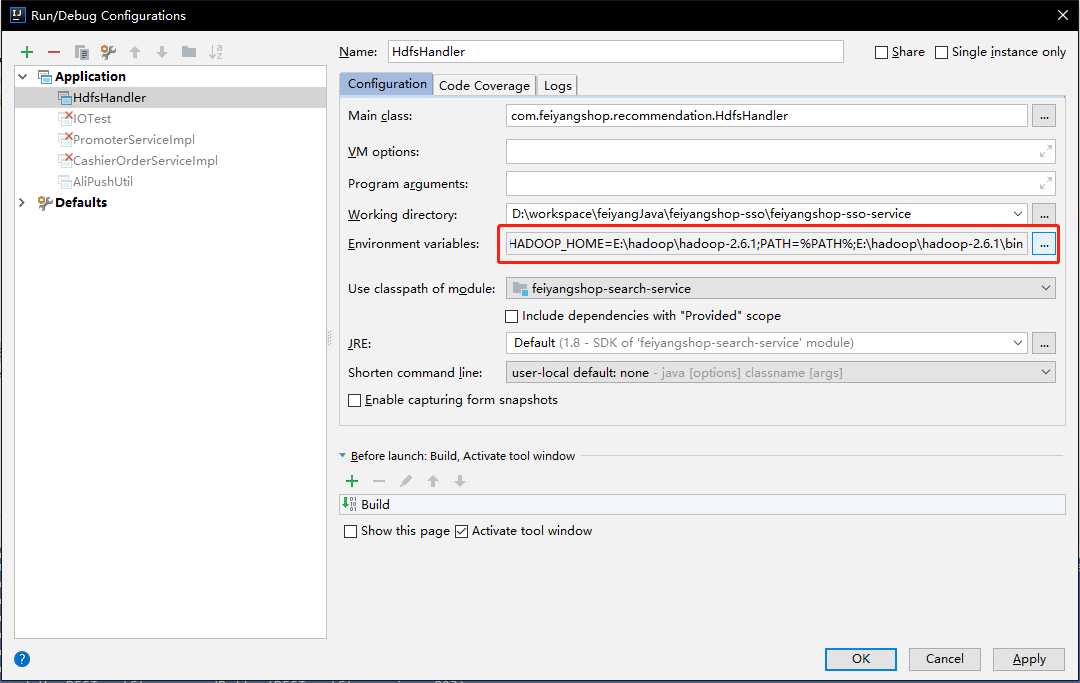

Link: https://pan.baidu.com/s/13Mf3m2fXt0TnXwgsiDejEg Password: pajo

You also need to configure the environment variables in IDEA:

HADOOP_HOME=E:\hadoop\hadoop-2.6.1

PATH=%PATH%;E:\hadoop\hadoop-2.6.1\bin
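To confirm that the run configuration actually sees these settings, a small check like the one below may help. It is a sketch under assumptions: the class and method names are hypothetical, and the file names `winutils.exe` and `hadoop.dll` are the native files that a Windows Hadoop client is assumed to need in `bin`. It prints the `HADOOP_HOME` value visible to the JVM, the JVM architecture, and which of those files are missing.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, not part of Hadoop.
class HadoopHomeCheck {

    // Native files assumed to be required in %HADOOP_HOME%\bin on Windows.
    static final String[] REQUIRED = {"winutils.exe", "hadoop.dll"};

    // Returns the names from REQUIRED that are not regular files under <hadoopHome>/bin.
    static List<String> missingNativeFiles(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        Path bin = hadoopHome.resolve("bin");
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(bin.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        if (home == null || home.isEmpty()) {
            System.out.println("HADOOP_HOME is not set for this run configuration");
            return;
        }
        System.out.println("HADOOP_HOME = " + home);
        // os.arch describes the running JVM, which is what has to match the native binaries.
        System.out.println("JVM architecture (os.arch) = " + System.getProperty("os.arch"));
        System.out.println("missing from bin: " + missingNativeFiles(Paths.get(home)));
    }
}
```

Running this from the same IDEA run configuration as the HDFS program shows whether the variables above were picked up. It only checks that the files exist, not that they are 64-bit builds.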

Original article: https://www.cnblogs.com/krcys/p/9146329.html