Tags: style, improvement, array, width, sub, try, "there is a", image, orm

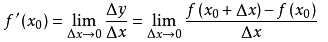

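    import numpy as np
    import matplotlib.pyplot as plt

    # Synthetic linear-regression data: 1000 samples, 10 features
    np.random.seed(666)
    X = np.random.random(size=(1000, 10))

    # True parameters: intercept 1, coefficients 2 through 11
    true_theta = np.arange(1, 12, dtype=float)

    # Prepend a column of ones so that theta[0] acts as the intercept
    X_b = np.hstack([np.ones((len(X), 1)), X])
    y = X_b.dot(true_theta) + np.random.normal(size=1000)

    def J(theta, X_b, y):
        """Loss (mean squared error) for the current theta."""
        try:
            return np.sum((y - X_b.dot(theta)) ** 2) / len(X_b)
        except Exception:
            # Fall back to infinity if the computation fails
            return float('inf')

    def dJ_math(theta, X_b, y):
        """Gradient at the current theta, from the formula derived for the model."""
        return X_b.T.dot(X_b.dot(theta) - y) * 2. / len(y)

    true_theta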

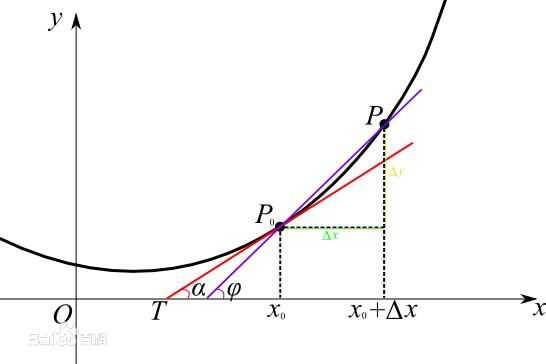

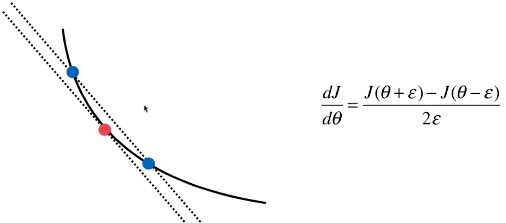

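    def dJ_debug(theta, X_b, y, epsilon=0.01):
        """Gradient at the current theta, computed numerically for debugging."""
        res = np.empty(len(theta))
        for i in range(len(theta)):
            # Shift only the i-th component, by +epsilon and by -epsilon
            theta_1 = theta.copy()
            theta_1[i] += epsilon
            theta_2 = theta.copy()
            theta_2[i] -= epsilon
            # Central difference: slope of the line through the two shifted points
            res[i] = (J(theta_1, X_b, y) - J(theta_2, X_b, y)) / (2 * epsilon)
        return res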
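For reference, the two gradient functions can be written out as formulas read directly off the code. The symbols $m$ (number of samples), $n$ (number of features) and $e_i$ (the $i$-th unit vector) are notation introduced here, not taken from the original post.

$$
J(\theta) = \frac{1}{m} \sum_{k=1}^{m} \left( y^{(k)} - X_b^{(k)} \theta \right)^2,
\qquad
\nabla J(\theta) = \frac{2}{m} X_b^{T} \left( X_b \theta - y \right)
$$

$$
\frac{\partial J}{\partial \theta_i} \approx \frac{J(\theta + \varepsilon e_i) - J(\theta - \varepsilon e_i)}{2 \varepsilon},
\qquad i = 0, 1, \dots, n
$$

The first line is what `dJ_math` evaluates. The second is what `dJ_debug` evaluates for each component. Because `dJ_debug` calls `J` twice for every one of the $n + 1$ components, it costs roughly $2(n + 1)$ full loss evaluations per gradient. It is therefore better suited to checking a derived gradient than to training.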

    def gradient_descent(dJ, X_b, y, initial_theta, eta, n_iters=10**4, epsilon=10**-8):
        # dJ is passed in, so either dJ_math or dJ_debug can be used
        theta = initial_theta
        cur_iter = 0

        while cur_iter < n_iters:
            gradient = dJ(theta, X_b, y)
            last_theta = theta
            theta = theta - eta * gradient
            # Stop once the loss changes by less than epsilon
            if abs(J(theta, X_b, y) - J(last_theta, X_b, y)) < epsilon:
                break
            cur_iter += 1

        return theta

    initial_theta = np.zeros(X_b.shape[1])
    eta = 0.01

    %time theta = gradient_descent(dJ_debug, X_b, y, initial_theta, eta)
    theta
    # Output: Wall time: 4.89 s
    # array([ 1.1251597 ,  2.05312521,  2.91522497,  4.11895968,  5.05002117,
    #         5.90494046,  6.97383745,  8.00088367,  8.86213468,  9.98608331,
    #        10.90529198])

    %time theta = gradient_descent(dJ_math, X_b, y, initial_theta, eta)
    theta
    # Output: Wall time: 652 ms
    # array([ 1.1251597 ,  2.05312521,  2.91522497,  4.11895968,  5.05002117,
    #         5.90494046,  6.97383745,  8.00088367,  8.86213468,  9.98608331,
    #        10.90529198])
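Both runs end at the same `theta`, which suggests the two gradient functions agree. A more direct check is to compare the gradients themselves at a single point, without running the full descent. The sketch below is one way to do that. The helper `relative_gradient_error`, the smaller data set and the `1e-8` tolerance are illustrative assumptions, not part of the original post.

```python
import numpy as np

def J(theta, X_b, y):
    """Mean squared error loss, as in the post."""
    return np.sum((y - X_b.dot(theta)) ** 2) / len(X_b)

def dJ_math(theta, X_b, y):
    """Analytic gradient of J."""
    return X_b.T.dot(X_b.dot(theta) - y) * 2.0 / len(y)

def dJ_debug(theta, X_b, y, epsilon=0.01):
    """Numerical gradient of J by central differences."""
    res = np.empty(len(theta))
    for i in range(len(theta)):
        theta_1 = theta.copy()
        theta_1[i] += epsilon
        theta_2 = theta.copy()
        theta_2[i] -= epsilon
        res[i] = (J(theta_1, X_b, y) - J(theta_2, X_b, y)) / (2 * epsilon)
    return res

def relative_gradient_error(theta, X_b, y):
    """Hypothetical helper: relative distance between the two gradients."""
    g_math = dJ_math(theta, X_b, y)
    g_debug = dJ_debug(theta, X_b, y)
    return np.linalg.norm(g_math - g_debug) / (
        np.linalg.norm(g_math) + np.linalg.norm(g_debug)
    )

# Small synthetic problem (sizes chosen only for illustration)
rng = np.random.default_rng(0)
X = rng.random((200, 3))
X_b = np.hstack([np.ones((len(X), 1)), X])
y = X_b.dot(np.array([1.0, 2.0, 3.0, 4.0])) + rng.normal(size=200)

theta = rng.normal(size=X_b.shape[1])
rel_err = relative_gradient_error(theta, X_b, y)
print("relative error:", rel_err)
print("gradients agree:", rel_err < 1e-8)
```

Since `J` is quadratic in `theta`, the central difference is exact up to floating-point rounding, so the error here should be tiny. For a non-quadratic loss the acceptable tolerance would depend on `epsilon`.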


Original article: https://www.cnblogs.com/volcao/p/9154872.html