
Step 1: Link the HBase JARs

1. Add the HBase JARs to hadoop-env.sh

In /opt/modules/hadoop-2.5.0-cdh5.3.6/etc/hadoop, edit hadoop-env.sh and add the following variable:

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/*
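The `lib/*` entry depends on the JVM's classpath wildcard. A trailing `*` stands for every file in that directory whose name ends in `.jar` or `.JAR`. Subdirectories are not searched, and the order of the expanded JARs is not specified. The sketch below mimics that rule using only the standard library, so you can list which files a given `lib/*` entry would pick up. `ClasspathWildcard` is a hypothetical helper written for illustration and is not part of Hadoop or HBase.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: approximates how the JVM expands a "dir/*" classpath entry.
class ClasspathWildcard {

    /** True if a directory entry with this name would be picked up by a "dir/*" wildcard. */
    static boolean isPickedUp(String name, boolean isRegularFile) {
        // Only files ending in ".jar" or ".JAR" count; directories are never searched.
        return isRegularFile && (name.endsWith(".jar") || name.endsWith(".JAR"));
    }

    /** Lists the JAR file names a "dir/*" entry would add, sorted only to make output stable. */
    static List<String> expand(File dir) {
        List<String> jars = new ArrayList<>();
        File[] entries = dir.listFiles();
        if (entries == null) {
            return jars; // missing or unreadable directory: the wildcard contributes nothing
        }
        for (File f : entries) {
            if (isPickedUp(f.getName(), f.isFile())) {
                jars.add(f.getName());
            }
        }
        Collections.sort(jars); // the JVM itself does not guarantee any particular order
        return jars;
    }

    public static void main(String[] args) {
        File lib = new File(args.length > 0 ? args[0] : "/opt/modules/hbase-0.98.6-cdh5.3.6/lib");
        List<String> jars = expand(lib);
        for (String jar : jars) {
            System.out.println(jar);
        }
        System.out.println(jars.size() + " JAR(s) would be added by " + lib + "/*");
    }
}
```

Pass the HBase `lib` directory as the argument to see roughly what the wildcard contributes to `HADOOP_CLASSPATH`.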

2. Configure temporary or permanent environment variables

To link the JARs permanently, append the following variables to /opt/modules/hbase-0.98.6-cdh5.3.6/conf/hbase-env.sh. For temporary use, run the same exports in the current terminal session instead.

export HBASE_HOME=/opt/modules/hbase-0.98.6-cdh5.3.6

export HADOOP_CLASSPATH=$HBASE_HOME/lib/*:$HADOOP_CLASSPATH

export HBASE_CLASSPATH=$HBASE_CLASSPATH:`$HBASE_HOME/bin/hbase classpath`
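Before you submit a job, you can confirm from Java that the HBase MapReduce classes resolve on the classpath you just configured. The sketch below does this under one assumption: it must run with the same classpath as the job, for example launched through `yarn jar` or `hadoop jar`. `ClasspathProbe` is a hypothetical name. The classes it checks are the ones this tutorial's code imports.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reports which classes cannot be loaded and which
// classpath entries live under a given directory.
class ClasspathProbe {

    /** Returns the class names from the input that the current class loader cannot find. */
    static List<String> missingClasses(String... classNames) {
        List<String> missing = new ArrayList<>();
        for (String name : classNames) {
            try {
                // initialize=false: only check that the class resolves; do not run static initializers
                Class.forName(name, false, ClasspathProbe.class.getClassLoader());
            } catch (ClassNotFoundException | LinkageError e) {
                missing.add(name);
            }
        }
        return missing;
    }

    /** Returns the entries of a classpath string that start with the given directory prefix. */
    static List<String> entriesUnder(String classpath, String prefix) {
        List<String> hits = new ArrayList<>();
        for (String entry : classpath.split(File.pathSeparator)) {
            if (entry.startsWith(prefix)) {
                hits.add(entry);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        System.out.println("Missing: " + missingClasses(
                "org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil",
                "org.apache.hadoop.hbase.mapreduce.TableMapper",
                "org.apache.hadoop.hbase.client.Put"));
        String cp = System.getProperty("java.class.path");
        System.out.println("HBase lib entries: "
                + entriesUnder(cp, "/opt/modules/hbase-0.98.6-cdh5.3.6/lib").size());
    }
}
```

An empty "Missing" list together with a non-zero count of HBase entries suggests the environment variables took effect.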

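Next, run the following command from the HBase root directory. If the environment variables are configured correctly, the command ends by printing the list of example programs bundled in hbase-server:

/opt/modules/hadoop-2.5.0-cdh5.3.6/bin/yarn jar lib/hbase-server-0.98.6-cdh5.3.6.jar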

    [liupeng@www hbase-0.98.6-cdh5.3.6]$ /opt/modules/hadoop-2.5.0-cdh5.3.6/bin/yarn jar lib/hbase-server-0.98.6-cdh5.3.6.jar
    An example program must be given as the first argument.
    Valid program names are:
      CellCounter: Count cells in HBase table
      completebulkload: Complete a bulk data load.
      copytable: Export a table from local cluster to peer cluster
      export: Write table data to HDFS.
      import: Import data written by Export.
      importtsv: Import data in TSV format.
      rowcounter: Count rows in HBase table
      verifyrep: Compare the data from tables in two different clusters. WARNING: It doesn't work for incrementColumnValues'd cells since the timestamp is changed after being appended to the log.
    [liupeng@www hbase-0.98.6-cdh5.3.6]$
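The usage message above comes from a name-to-program dispatcher inside hbase-server. The first argument selects a built-in tool, and the remaining arguments are passed on to that tool. For example, `yarn jar lib/hbase-server-0.98.6-cdh5.3.6.jar rowcounter liupeng:Student` would count the rows of the input table used later in this tutorial. The sketch below is a simplified, hypothetical stand-in that illustrates the dispatch pattern with the standard library only. It is not HBase's actual driver code.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Hypothetical, simplified stand-in for a "program driver": maps a program name
// to an action and forwards the remaining arguments to it.
class MiniProgramDriver {
    private final Map<String, Function<String[], Integer>> programs = new TreeMap<>();
    private final Map<String, String> descriptions = new TreeMap<>();

    void add(String name, String description, Function<String[], Integer> program) {
        programs.put(name, program);
        descriptions.put(name, description);
    }

    /** Runs the program named by args[0]; prints usage and returns -1 if it is missing or unknown. */
    int run(String[] args) {
        if (args.length == 0 || !programs.containsKey(args[0])) {
            System.out.println("An example program must be given as the first argument.");
            System.out.println("Valid program names are:");
            descriptions.forEach((n, d) -> System.out.println("  " + n + ": " + d));
            return -1;
        }
        // Everything after the program name is handed to the selected program.
        String[] rest = Arrays.copyOfRange(args, 1, args.length);
        return programs.get(args[0]).apply(rest);
    }

    public static void main(String[] args) {
        MiniProgramDriver driver = new MiniProgramDriver();
        driver.add("rowcounter", "Count rows in HBase table", a -> {
            System.out.println("would count rows of " + String.join(",", a));
            return 0;
        });
        System.exit(driver.run(args));
    }
}
```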

Step 2: Prepare the code in MyEclipse

(1) Create a Maven project (not covered in detail here).

(2) Add the dependencies to pom.xml (put the following entries alongside the Hadoop-related dependencies):

    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>0.98.24-hadoop2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>0.98.24-hadoop2</version>
    </dependency>

(3) Prepare the Java code

Package name: com.HBaseMapperReduce.Import

The HBaseDriver class:

    package com.HBaseMapperReduce.Import;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    // Extends Configured and implements Tool: provides run() plus a main() entry point
    public class HBaseDriver extends Configured implements Tool {

        @Override
        public int run(String[] args) throws Exception {
            Configuration conf = this.getConf();
            // "mr-test" is the job name; any value works
            Job job = Job.getInstance(conf, "mr-test");
            // job.setJarByClass(entry class)
            job.setJarByClass(HBaseDriver.class);

            Scan scan = new Scan();

            TableMapReduceUtil.initTableMapperJob(
                    "liupeng:Student",              // input table
                    scan,                           // Scan instance to control CF and attribute selection
                    HBaseMapperReduceGetInfo.class, // mapper class
                    ImmutableBytesWritable.class,   // mapper output key (type declared in TableMapper)
                    Put.class,                      // mapper output value (type declared in TableMapper)
                    job);
            TableMapReduceUtil.initTableReducerJob(
                    "liupeng:DemoTest", // output table; must be created in HBase beforehand
                    null,               // reducer class; pass null when there is no custom reducer
                    job);
            job.setNumReduceTasks(1); // at least one, adjust as required

            // Ternary operator: return 0 if the job succeeds, otherwise 1
            return job.waitForCompletion(true) ? 0 : 1;
        }

        public static void main(String[] args) {
            Configuration conf = HBaseConfiguration.create();
            try {
                int status = ToolRunner.run(conf, new HBaseDriver(), args);
                System.exit(status);
            } catch (Exception e) {
                e.printStackTrace();
                System.exit(1); // report the failure to the caller
            }
        }
    }
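Because `initTableReducerJob` receives `null` as the reducer class, no custom reducer is set and Hadoop's default identity reduce runs. Every `(rowkey, Put)` pair from the mapper therefore reaches `liupeng:DemoTest` unchanged. With one reduce task, the pairs also arrive sorted by row key, compared byte by byte as unsigned values. The stdlib-only sketch below simulates that pass-through and ordering. `IdentityReduceSim` and its in-memory list of pairs are hypothetical stand-ins for the MapReduce shuffle, not Hadoop API.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, in-memory simulation of "map output -> sort by key -> identity reduce".
// It does not use Hadoop; it only illustrates what a pass-through reduce phase does.
class IdentityReduceSim {

    /** Compares two byte arrays lexicographically, treating each byte as unsigned (0..255). */
    static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int diff = (a[i] & 0xff) - (b[i] & 0xff);
            if (diff != 0) {
                return diff;
            }
        }
        return a.length - b.length; // when one key is a prefix of the other, the shorter sorts first
    }

    /** Sorts (rowkey, value) pairs by row key and emits every value unchanged. */
    static <V> List<Map.Entry<byte[], V>> sortAndPassThrough(List<Map.Entry<byte[], V>> mapOutput) {
        List<Map.Entry<byte[], V>> out = new ArrayList<>(mapOutput);
        out.sort((x, y) -> compareUnsigned(x.getKey(), y.getKey()));
        return out; // nothing is merged, modified or dropped
    }

    static byte[] bytes(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        List<Map.Entry<byte[], String>> mapOutput = new ArrayList<>();
        mapOutput.add(new SimpleEntry<>(bytes("row2"), "put for row2"));
        mapOutput.add(new SimpleEntry<>(bytes("row10"), "put for row10"));
        mapOutput.add(new SimpleEntry<>(bytes("row1"), "put for row1"));
        for (Map.Entry<byte[], String> e : sortAndPassThrough(mapOutput)) {
            System.out.println(new String(e.getKey(), StandardCharsets.UTF_8) + " -> " + e.getValue());
        }
    }
}
```

Running `main` prints `row1`, `row10` and `row2` in that order. Because keys compare byte by byte, `row10` sorts before `row2`, which matters when row keys contain unpadded numbers.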

The HBaseMapperReduceGetInfo class:

    package com.HBaseMapperReduce.Import;

    import java.io.IOException;

    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HBaseMapperReduceGetInfo extends TableMapper<ImmutableBytesWritable, Put> {

        @Override
        protected void map(ImmutableBytesWritable key, Result value, Context context)
                throws IOException, InterruptedException {
            // key is the row key: key.get() returns its bytes; value (Result) holds the row's cells
            Put put = new Put(key.get());

            /*
             * Requirement: copy only the cells of the input table that match the conditions below.
             * The table has two column families, "info" and "contect":
             * keep info:name and info:age, plus contect:mail.
             */
            for (Cell cell : value.rawCells()) {
                String family = Bytes.toString(CellUtil.cloneFamily(cell));
                String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                if ("info".equals(family)) {
                    if ("name".equals(qualifier) || "age".equals(qualifier)) {
                        put.add(cell);
                    }
                } else if ("contect".equals(family)) {
                    if ("mail".equals(qualifier)) {
                        put.add(cell);
                    }
                }
            }
            // An empty Put is rejected when written to the table, so skip rows with no matching cells
            if (!put.isEmpty()) {
                context.write(key, put);
            }
        }
    }
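The if/else chain in `map()` keeps a cell only when its (family, qualifier) pair is `info:name`, `info:age` or `contect:mail`. The same rule can be written as a set lookup, which is easier to extend. The sketch below replaces HBase's `Cell` with a hypothetical `SimpleCell` class so it runs with the standard library alone. Inside the real mapper, you would build the lookup key from `Bytes.toString(CellUtil.cloneFamily(cell))` and `Bytes.toString(CellUtil.cloneQualifier(cell))` instead.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical stand-in for an HBase Cell: only the parts the filter needs.
final class SimpleCell {
    final String family;
    final String qualifier;
    final String value;

    SimpleCell(String family, String qualifier, String value) {
        this.family = family;
        this.qualifier = qualifier;
        this.value = value;
    }
}

class ColumnWhitelist {
    // The columns the tutorial's mapper copies to the output table.
    static final Set<String> WANTED =
            new HashSet<>(Arrays.asList("info:name", "info:age", "contect:mail"));

    /** Keeps only the cells whose "family:qualifier" is whitelisted, preserving input order. */
    static List<SimpleCell> select(List<SimpleCell> row) {
        List<SimpleCell> kept = new ArrayList<>();
        for (SimpleCell c : row) {
            if (WANTED.contains(c.family + ":" + c.qualifier)) {
                kept.add(c);
            }
        }
        return kept;
    }
}
```

If `select` returns an empty list for a row, the mapper should not write that row. A `Put` with no cells is rejected when it reaches the output table.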

Step 3: Build the JAR, run the job, and check the data in the target HBase table

1. Create the output table in HBase as follows:

    hbase(main):250:0> create "liupeng:DemoTest",'info','contect'
    0 row(s) in 0.8020 seconds

    => Hbase::Table - liupeng:DemoTest
    hbase(main):251:0> desc "liupeng:DemoTest"
    DESCRIPTION                                                              ENABLED
     'liupeng:DemoTest',                                                     true
       {NAME => 'contect', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false',
        KEEP_DELETED_CELLS => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER',
        COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536',
        REPLICATION_SCOPE => '0'},
       {NAME => 'info', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false',
        KEEP_DELETED_CELLS => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER',
        COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536',
        REPLICATION_SCOPE => '0'}
    1 row(s) in 0.0970 seconds
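The output table must exist before the job starts. It must also contain every column family the mapper writes, here `info` and `contect`, or the writes are rejected. The sketch below is a small, hypothetical pre-flight check of that condition. It works on plain sets of family names so it runs without HBase. In a real job, you would fill the `existing` set from the table's descriptor, which the HBase admin API exposes, before you submit the job.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical pre-flight check: which column families does the job write that the table lacks?
class FamilyCheck {

    /** Returns the families in 'written' that are missing from 'existing' (sorted). */
    static Set<String> missingFamilies(Set<String> written, Set<String> existing) {
        Set<String> missing = new TreeSet<>(written);
        missing.removeAll(existing);
        return missing;
    }

    public static void main(String[] args) {
        Set<String> written = new TreeSet<>(Arrays.asList("info", "contect"));
        // Families created above with: create "liupeng:DemoTest",'info','contect'
        Set<String> existing = new TreeSet<>(Arrays.asList("info", "contect"));
        Set<String> missing = missingFamilies(written, existing);
        if (!missing.isEmpty()) {
            System.err.println("Create these column families first: " + missing);
            System.exit(1);
        }
        System.out.println("liupeng:DemoTest has all required column families");
    }
}
```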

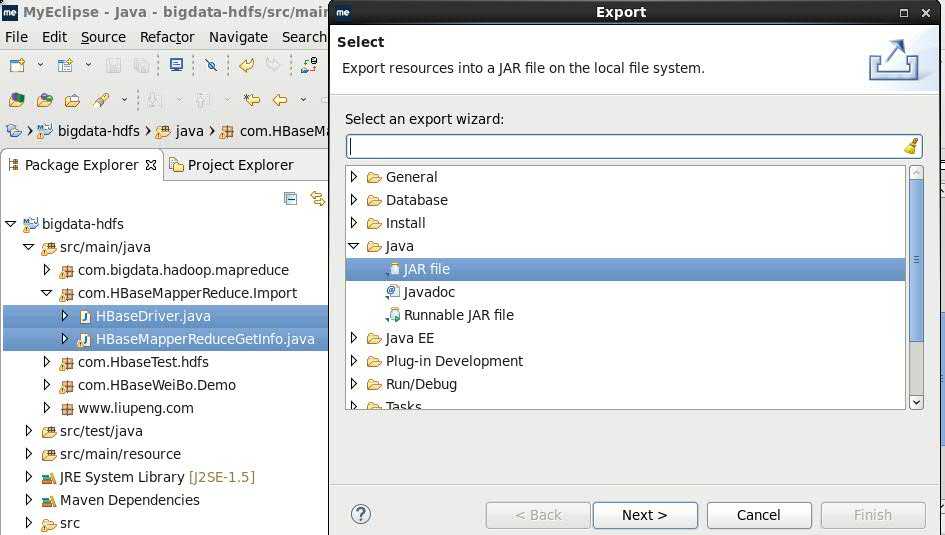

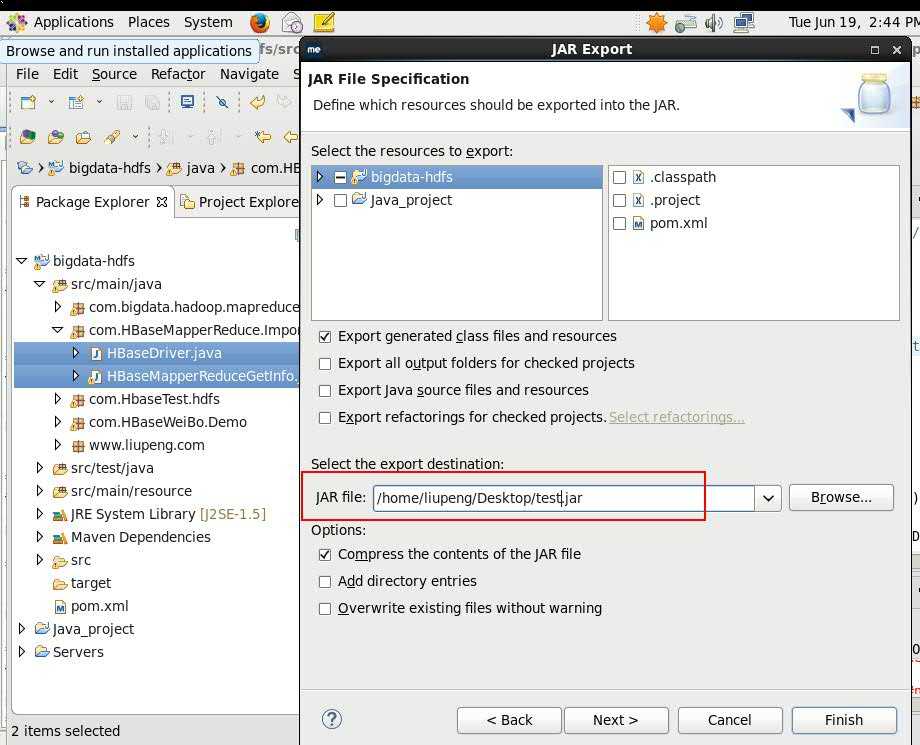

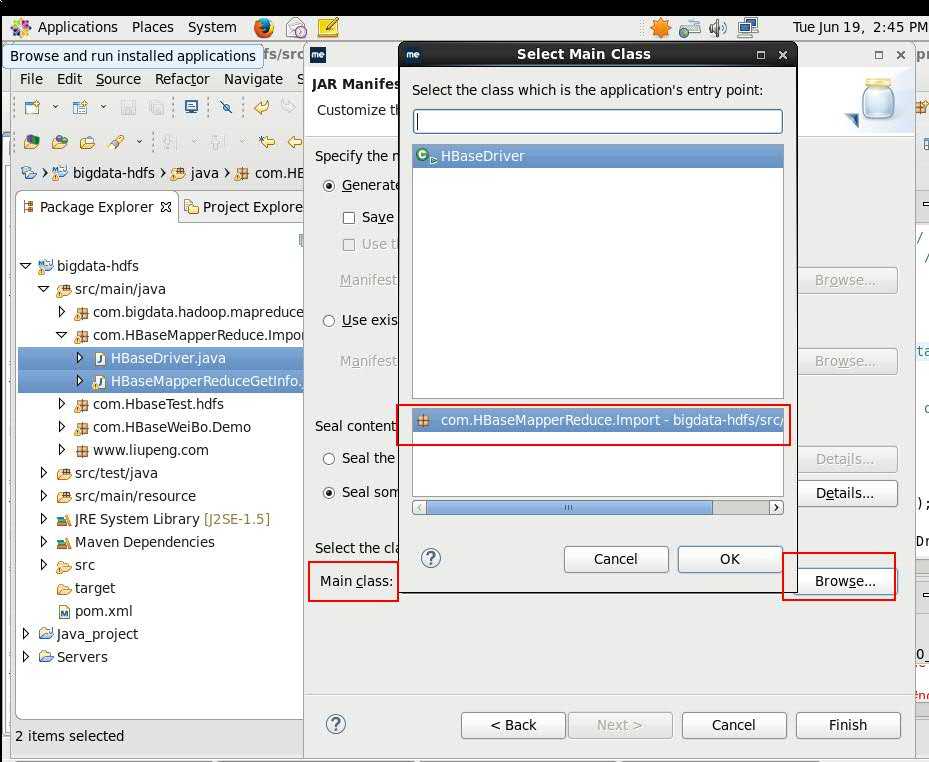

2. Build the JAR

Figure 1

Figure 2

Figure 3

Figure 4
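The run command passes only the JAR path to `yarn jar`, with no class name. That works only if the JAR's manifest names the entry point, meaning the JAR was exported with `com.HBaseMapperReduce.Import.HBaseDriver` as its main class. Without a `Main-Class` entry, `yarn jar` treats the next argument as the class name. The stdlib-only sketch below reads the `Main-Class` attribute so you can check the export before running it. `JarMainClass` is a hypothetical helper, and the default path is the one used in this tutorial.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

// Hypothetical helper: reports the Main-Class recorded in a JAR's manifest.
class JarMainClass {

    /** Returns the Main-Class attribute of a manifest, or null if it is absent. */
    static String mainClassOf(Manifest manifest) {
        if (manifest == null) {
            return null; // the JAR has no META-INF/MANIFEST.MF at all
        }
        return manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
    }

    /** Parses manifest text (the contents of META-INF/MANIFEST.MF) and returns its Main-Class. */
    static String mainClassOf(String manifestText) {
        try {
            byte[] raw = manifestText.getBytes(StandardCharsets.UTF_8);
            return mainClassOf(new Manifest(new ByteArrayInputStream(raw)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "/home/liupeng/Desktop/test.jar";
        try (JarFile jar = new JarFile(new File(path))) {
            String main = mainClassOf(jar.getManifest());
            System.out.println(main == null
                    ? "No Main-Class: pass the driver class name after the JAR path"
                    : "Main-Class: " + main);
        }
    }
}
```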

3. Run the job

[liupeng@www hbase-0.98.6-cdh5.3.6]$ /opt/modules/hadoop-2.5.0-cdh5.3.6/bin/yarn jar /home/liupeng/Desktop/test.jar

The command launches the MapReduce job. When the job finishes, output like the following appears:

[liupeng@www hbase-0.98.6-cdh5.3.6]$ /opt/modules/hadoop-2.5.0-cdh5.3.6/bin/yarn jar /home/liupeng/Desktop/test.jar SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 18/06/19 14:48:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 18/06/19 14:48:35 INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum 18/06/19 14:48:35 INFO client.RMProxy: Connecting to ResourceManager at www.hadoopresourcemanager.com/192.168.122.169:8032 18/06/19 14:48:35 INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. 
Instead, use dfs.bytes-per-checksum 18/06/19 14:48:36 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x7b205dbd connecting to ZooKeeper ensemble=localhost:2181 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-cdh5.3.6--1, built on 07/28/2015 18:35 GMT 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:host.name=www.hadoopsecondarynamenode.com 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_151 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.home=/opt/modules/jdk1.8.0_151/jre 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/opt/modules/hadoop-2.5.0-cdh5.3.6/etc/hadoop:/opt/modules/hadoop-2.5.0-cdh5.3.6/etc/hadoop:/opt/modules/hadoop-2.5.0-cdh5.3.6/etc/hadoop:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-net-3.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-framework-2.6.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/junit-4.11.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-math3-3.1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/opt/module
s/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-httpclient-3.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/hamcrest-core-1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jetty-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/activation-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/zookeeper-3.4.5-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/stax-api-1.0-2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/gson-2.2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/httpcore-4.2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jets3t-0.9.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsch-0.1.42.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-el-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/
asm-3.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jettison-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/avro-1.7.6-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/paranamer-2.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/httpclient-4.2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-client-2.6.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/hadoop-auth-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.
6/share/hadoop/common/lib/hadoop-annotations-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-collections-3.2.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-common-2.5.0-cdh5.3.6-tests.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-nfs-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jetty-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-el-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/opt/modules/had
oop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-2.5.0-cdh5.3.6-tests.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-nfs-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jline-0.9.94.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-jaxrs-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/activation-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/mo
dules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/zookeeper-3.4.5-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-xc-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-api-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-serve
r-applicationhistoryservice-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-client-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-tests-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/junit-4.11.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/javax.inject-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/avro-1.7.6-cdh5.3.6.jar:/opt/modules/hadoop
-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/guice-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/hadoop-annotations-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0-cdh5.3.6-tests.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0-cdh5.3.6.jar:/contrib/capacity-scheduler/*.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-hadoop2-compat-0.98.6-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hadoop-hdfs-2.5.0-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jackson-core-asl-1.8
.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hsqldb-1.8.0.10.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jamon-runtime-2.3.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-server-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-thrift-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-shell-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/api-util-1.0.0-M20.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-net-3.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-cli-1.2.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/curator-framework-2.6.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-daemon-1.0.3.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-hadoop2-compat-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/junit-4.11.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/high-scale-lib-1.1.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-server-0.98.6-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/netty-3.6.6.Final.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-common-0.98.6-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/guava-12.0.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-math3-3.1.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jersey-core-1.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-testing-util-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jersey-json-1.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/java-xmlbuilder-0.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/protobuf-java-2.5.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jsp-2.1-6.1.14.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-httpclient-3.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jsp-api-2.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jasper-compiler-5.5.23.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/slf4j-api-1.7.5.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hamcrest-core-1.3.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/servlet-
api-2.5-6.1.14.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jackson-jaxrs-1.8.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jetty-6.1.26.cloudera.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/activation-1.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/zookeeper-3.4.5-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/gson-2.2.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/httpcore-4.2.5.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-io-2.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jruby-complete-1.6.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jsp-api-2.1-6.1.14.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-it-0.98.6-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jsr305-1.3.9.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/htrace-core-2.04.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-hadoop-compat-0.98.6-cdh5.3.6-tests.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/apacheds-i18n-2.0.0-M15.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jets3t-0.9.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-logging-1.1.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jsch-0.1.42.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jetty-sslengine-6.1.26.cloudera.4.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-configuration-1.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jasper-runtime-5.5.23.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-el-1.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/core-3.1.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/findbugs-annotations-1.3.9-1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/asm-3.2.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/api-asn1-api-1.0.0-M20.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-examples-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-lang-2.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/log4j-1.2.17.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/slf4j-log4j12-1.7.5.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/avro-1.7.6-cdh5.3.6.jar:/opt/module
s/hbase-0.98.6-cdh5.3.6/lib/hbase-protocol-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hadoop-common-2.5.0-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-math-2.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-it-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/libthrift-0.9.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/snappy-java-1.0.4.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-client-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-hadoop-compat-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/metrics-core-2.2.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jettison-1.3.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jaxb-api-2.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/servlet-api-2.5.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/curator-recipes-2.6.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-codec-1.7.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-beanutils-1.7.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/paranamer-2.3.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/xmlenc-0.52.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-digester-1.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-beanutils-core-1.8.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jersey-server-1.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/httpclient-4.2.5.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-compress-1.4.1.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/xz-1.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/curator-client-2.6.0.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hadoop-hdfs-2.5.0-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-common-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jackson-xc-1.8.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hbase-prefix-tree-0.98.6-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hadoop-auth-2.5.0-cdh5.3.6.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/hadoop-annotations-2.5.0-cdh5.3.6.jar:
/opt/modules/hbase-0.98.6-cdh5.3.6/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hbase-0.98.6-cdh5.3.6/lib/commons-collections-3.2.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-api-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-client-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-tests-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-common-2.5.0-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jline-0.9.94.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0-c
dh5.3.6/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-jaxrs-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/activation-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/zookeeper-3.4.5-cdh5.3.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-3.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-xc-1.8.8.jar:/opt
/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/opt/modules/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-collections-3.2.1.jar 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/opt/modules/hadoop-2.5.0-cdh5.3.6/lib/native 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA> 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-696.el6.x86_64 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:user.name=liupeng 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/liupeng 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Client environment:user.dir=/opt/modules/hbase-0.98.6-cdh5.3.6 18/06/19 14:48:36 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=hconnection-0x7b205dbd, quorum=localhost:2181, baseZNode=/hbase 18/06/19 14:48:36 INFO zookeeper.ClientCnxn: Opening socket connection to server localhost6.localdomain6/0:0:0:0:0:0:0:1:2181. 
Will not attempt to authenticate using SASL (unknown error)
18/06/19 14:48:36 INFO zookeeper.ClientCnxn: Socket connection established, initiating session, client: /0:0:0:0:0:0:0:1:35986, server: localhost6.localdomain6/0:0:0:0:0:0:0:1:2181
18/06/19 14:48:36 INFO zookeeper.ClientCnxn: Session establishment complete on server localhost6.localdomain6/0:0:0:0:0:0:0:1:2181, sessionid = 0x36415671f800003, negotiated timeout = 40000
18/06/19 14:48:36 INFO mapreduce.TableOutputFormat: Created table instance for liupeng:DemoTest
18/06/19 14:48:39 INFO util.RegionSizeCalculator: Calculating region sizes for table "liupeng:Student".
18/06/19 14:48:40 INFO mapreduce.JobSubmitter: number of splits:1
18/06/19 14:48:40 INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum
18/06/19 14:48:40 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528678110864_0026
18/06/19 14:48:40 INFO impl.YarnClientImpl: Submitted application application_1528678110864_0026
18/06/19 14:48:40 INFO mapreduce.Job: The url to track the job: http://www.hadoopresourcemanager.com:8088/proxy/application_1528678110864_0026/
18/06/19 14:48:40 INFO mapreduce.Job: Running job: job_1528678110864_0026
18/06/19 14:48:50 INFO mapreduce.Job: Job job_1528678110864_0026 running in uber mode : false
18/06/19 14:48:50 INFO mapreduce.Job:  map 0% reduce 0%
18/06/19 14:49:00 INFO mapreduce.Job:  map 100% reduce 0%
18/06/19 14:49:09 INFO mapreduce.Job:  map 100% reduce 100%
18/06/19 14:49:09 INFO mapreduce.Job: Job job_1528678110864_0026 completed successfully
18/06/19 14:49:09 INFO mapreduce.Job: Counters: 59
    File System Counters
        FILE: Number of bytes read=882
        FILE: Number of bytes written=269857
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=98
        HDFS: Number of bytes written=0
        HDFS: Number of read operations=1
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=0
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=7496
        Total time spent by all reduces in occupied slots (ms)=5648
        Total time spent by all map tasks (ms)=7496
        Total time spent by all reduce tasks (ms)=5648
        Total vcore-seconds taken by all map tasks=7496
        Total vcore-seconds taken by all reduce tasks=5648
        Total megabyte-seconds taken by all map tasks=7675904
        Total megabyte-seconds taken by all reduce tasks=5783552
    Map-Reduce Framework
        Map input records=7
        Map output records=7
        Map output bytes=862
        Map output materialized bytes=882
        Input split bytes=98
        Combine input records=7
        Combine output records=7
        Reduce input groups=7
        Reduce shuffle bytes=882
        Reduce input records=7
        Reduce output records=7
        Spilled Records=14
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=171
        CPU time spent (ms)=2130
        Physical memory (bytes) snapshot=312143872
        Virtual memory (bytes) snapshot=4137783296
        Total committed heap usage (bytes)=170004480
    HBase Counters
        BYTES_IN_REMOTE_RESULTS=0
        BYTES_IN_RESULTS=1183
        MILLIS_BETWEEN_NEXTS=701
        NOT_SERVING_REGION_EXCEPTION=0
        NUM_SCANNER_RESTARTS=0
        REGIONS_SCANNED=1
        REMOTE_RPC_CALLS=0
        REMOTE_RPC_RETRIES=0
        RPC_CALLS=3
        RPC_RETRIES=0
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=0
[liupeng@www hbase-0.98.6-cdh5.3.6]$

4. Check whether the HBase tables now contain data. Here is the source table liupeng:Student:

hbase(main):253:0> scan "liupeng:Student"
ROW      COLUMN+CELL
 10001   column=contect:mail, timestamp=1528938151610, value=tony@cn.ibm.com
 10001   column=info:age, timestamp=1528938151610, value=23
 10001   column=info:name, timestamp=1528938151610, value=Tony
 10001   column=info:phone, timestamp=1528938151610, value=15995719964
 10002   column=contect:mail, timestamp=1528938151610, value=www.ivy@beifeng.com
 10002   column=info:age, timestamp=1528938151610, value=30
 10002   column=info:name, timestamp=1528938151610, value=Ivy
 10002   column=info:phone, timestamp=1528938151610, value=18665851937
 10003   column=contect:mail, timestamp=1528938151610, value=www.tom@beifeng.com
 10003   column=info:age, timestamp=1528938151610, value=28
 10003   column=info:name, timestamp=1528938151610, value=Tom
 10003   column=info:phone, timestamp=1528938151610, value=17933569972
 10004   column=contect:mail, timestamp=1528938151610, value=jack@alibaba.com
 10004   column=info:age, timestamp=1528938151610, value=24
 10004   column=info:name, timestamp=1528938151610, value=jack
 10004   column=info:phone, timestamp=1528938151610, value=13677543321
 10005   column=contect:mail, timestamp=1528938151610, value=kevin@cn.ibm.com
 10005   column=info:age, timestamp=1528938151610, value=27
 10005   column=info:name, timestamp=1528938151610, value=kevin
 10005   column=info:phone, timestamp=1528938151610, value=15999876653
 10006   column=contect:mail, timestamp=1528938151610, value=www.mdy@193.com
 10006   column=info:age, timestamp=1528938151610, value=20
 10006   column=info:name, timestamp=1528938151610, value=mevendy
 10006   column=info:phone, timestamp=1528938151610, value=1892287467
 10007   column=contect:mail, timestamp=1528938151610, value=www.sendy@163.com
 10007   column=info:age, timestamp=1528938151610, value=30
 10007   column=info:name, timestamp=1528938151610, value=Sendy
 10007   column=info:phone, timestamp=1528938151610, value=15973679981

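The import succeeded if scan "liupeng:DemoTest" shows the output below. It contains exactly the cells the code requested: the "name" and "age" columns of the "info" family, and the "mail" column of the "contect" family. The info:phone column from the source table was not copied.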

hbase(main):252:0> scan "liupeng:DemoTest"
ROW      COLUMN+CELL
 10001   column=contect:mail, timestamp=1528938151610, value=tony@cn.ibm.com
 10001   column=info:age, timestamp=1528938151610, value=23
 10001   column=info:name, timestamp=1528938151610, value=Tony
 10002   column=contect:mail, timestamp=1528938151610, value=www.ivy@beifeng.com
 10002   column=info:age, timestamp=1528938151610, value=30
 10002   column=info:name, timestamp=1528938151610, value=Ivy
 10003   column=contect:mail, timestamp=1528938151610, value=www.tom@beifeng.com
 10003   column=info:age, timestamp=1528938151610, value=28
 10003   column=info:name, timestamp=1528938151610, value=Tom
 10004   column=contect:mail, timestamp=1528938151610, value=jack@alibaba.com
 10004   column=info:age, timestamp=1528938151610, value=24
 10004   column=info:name, timestamp=1528938151610, value=jack
 10005   column=contect:mail, timestamp=1528938151610, value=kevin@cn.ibm.com
 10005   column=info:age, timestamp=1528938151610, value=27
 10005   column=info:name, timestamp=1528938151610, value=kevin
 10006   column=contect:mail, timestamp=1528938151610, value=www.mdy@193.com
 10006   column=info:age, timestamp=1528938151610, value=20
 10006   column=info:name, timestamp=1528938151610, value=mevendy
 10007   column=contect:mail, timestamp=1528938151610, value=www.sendy@163.com
 10007   column=info:age, timestamp=1528938151610, value=30
 10007   column=info:name, timestamp=1528938151610, value=Sendy
7 row(s) in 0.5030 seconds
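Comparing the two scans shows that each DemoTest row is the matching Student row with info:phone removed. The sketch below models that column selection in plain Java so the rule can be checked without a cluster. It makes several assumptions:

- The job copies exactly info:name, info:age and contect:mail. This is inferred from the scans, not read from the author's mapper.
- The `Cell` class is a hypothetical stand-in, not HBase's own `Cell` type.
- The sample data is the first two rows of the Student scan.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Plain-Java model (no HBase dependency) of the column selection seen in the
// scans: keep only the wanted family:qualifier pairs from each source row.
class ColumnProjection {

    // Hypothetical stand-in for an HBase cell; NOT org.apache.hadoop.hbase.Cell.
    static final class Cell {
        final String row;
        final String family;
        final String qualifier;
        final String value;

        Cell(String row, String family, String qualifier, String value) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
        }

        // "family:qualifier", the same notation the HBase shell prints.
        String column() {
            return family + ":" + qualifier;
        }
    }

    // Assumed column list, inferred from the liupeng:DemoTest scan.
    static final Set<String> WANTED = Set.of("info:name", "info:age", "contect:mail");

    // Keep only the cells whose column is in the wanted set, preserving order.
    static List<Cell> project(List<Cell> cells, Set<String> wanted) {
        List<Cell> out = new ArrayList<>();
        for (Cell c : cells) {
            if (wanted.contains(c.column())) {
                out.add(c);
            }
        }
        return out;
    }

    // First two rows of the liupeng:Student scan, in the shell's column order.
    static List<Cell> sampleStudentCells() {
        return List.of(
            new Cell("10001", "contect", "mail", "tony@cn.ibm.com"),
            new Cell("10001", "info", "age", "23"),
            new Cell("10001", "info", "name", "Tony"),
            new Cell("10001", "info", "phone", "15995719964"),
            new Cell("10002", "contect", "mail", "www.ivy@beifeng.com"),
            new Cell("10002", "info", "age", "30"),
            new Cell("10002", "info", "name", "Ivy"),
            new Cell("10002", "info", "phone", "18665851937"));
    }

    public static void main(String[] args) {
        for (Cell c : project(sampleStudentCells(), WANTED)) {
            System.out.println(c.row + " column=" + c.column() + ", value=" + c.value);
        }
    }
}
```

Running `main` prints six cells, three per row (mail, age, name), in the same order as the DemoTest scan. In HBase itself, a similar restriction can be pushed to the server with `Scan.addColumn(family, qualifier)`. This section does not show whether the author's job narrows the Scan that way or skips cells inside `map()`.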

HBase: fetching specific columns with a MyEclipse program (reading selected columns of a column family and their values)


Original post: https://www.cnblogs.com/liupengpengg/p/9198582.html