标签:github 1.9 ogre pre process efi 技术分享 cto folder

To perform object detection using ImageAI, all you need to do is

3. Download the Object Detection model file

4. Run the sample codes (which is as few as 10 lines)

Now let’s get started.

1) Download and install Python 3 from official Python Language website

2) Install the following dependencies via pip:

i. Tensorflow

pip install tensorflow

ii. Numpy

pip install numpy

iii. SciPy

pip install scipy

iv. OpenCV

pip install opencv-python

v. Pillow

pip install pillow

vi. Matplotlib

pip install matplotlib

vii. H5py

pip install h5py

viii. Keras

pip install keras

ix. ImageAI

pip installhttps://github.com/OlafenwaMoses/ImageAI/releases/download/2.0.1/imageai-2.0.1-py3-none-any.whl

3) Download the RetinaNet model file that will be used for object detection via this link.

Great. Now that you have installed the dependencies, you are ready to write your first object detection code. Create a Python file and give it a name (For example, FirstDetection.py), and then write the code below into it. Copy the RetinaNet model file and the image you want to detect to the folder that contains the python file.

FirstDetection.py

from imageai.Detection import ObjectDetection

import os

execution_path = os.getcwd()

detector = ObjectDetection()

detector.setModelTypeAsRetinaNet()

detector.setModelPath( os.path.join(execution_path , "resnet50_coco_best_v2.0.1.h5"))

detector.loadModel()

detections = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg"))

for eachObject in detections:

print(eachObject["name"] + " : " + eachObject["percentage_probability"] )

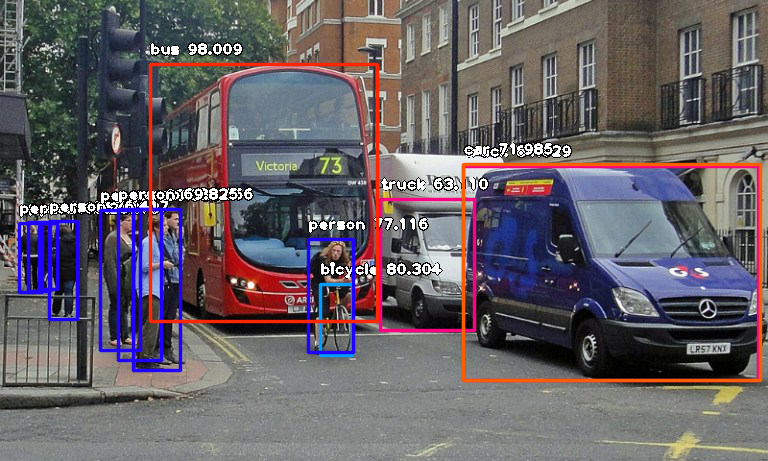

Then run the code and wait while the results prints in the console. Once the result is printed to the console, go to the folder in which your FirstDetection.py is and you will find a new image saved. Take a look at a 2 image samples below and the new images saved after detection.

Before Detection:

Image Credit: alzheimers.co.uk

Image Credit: Wikicommons

After Detection:

Console result for above image:

person : 55.8402955532074

person : 53.21805477142334

person : 69.25139427185059

person : 76.41745209693909

bicycle : 80.30363917350769

person : 83.58567953109741

person : 89.06581997871399

truck : 63.10953497886658

person : 69.82483863830566

person : 77.11606621742249

bus : 98.00949096679688

truck : 84.02870297431946

car : 71.98476791381836

Console result for above image:

person : 71.10445499420166

person : 59.28672552108765

person : 59.61582064628601

person : 75.86382627487183

motorcycle : 60.1050078868866

bus : 99.39600229263306

car : 74.05484318733215

person : 67.31776595115662

person : 63.53200078010559

person : 78.2265305519104

person : 62.880998849868774

person : 72.93365597724915

person : 60.01397967338562

person : 81.05944991111755

motorcycle : 50.591760873794556

motorcycle : 58.719027042388916

person : 71.69321775436401

bicycle : 91.86570048332214

motorcycle : 85.38855314254761

Now let us explain how the 10-line code works.

from imageai.Detection import ObjectDetection import os execution_path = os.getcwd()

In the above 3 lines, we imported the ImageAI object detection class in the first line, imported the python os class in the second line and defined a variable to hold the path to the folder where our python file, RetinaNet model file and images are in the third line.

detector = ObjectDetection() detector.setModelTypeAsRetinaNet() detector.setModelPath( os.path.join(execution_path , "resnet50_coco_best_v2.0.1.h5")) detector.loadModel() detections = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg"))

In the 5 lines of code above, we defined our object detection class in the first line, set the model type to RetinaNet in the second line, set the model path to the path of our RetinaNet model in the third line, load the model into the object detection class in the fourth line, then we called the detection function and parsed in the input image path and the output image path in the fifth line.

for eachObject in detections:

print(eachObject["name"] + " : " + eachObject["percentage_probability"] )

In the above 2 lines of code, we iterate over all the results returned by the detector.detectObjectsFromImage function in the first line, then print out the name and percentage probability of the model on each object detected in the image in the second line.

ImageAI supports many powerful customization of the object detection process. One of it is the ability to extract the image of each object detected in the image. By simply parsing the extra parameter extract_detected_objects=True into the detectObjectsFromImagefunction as seen below, the object detection class will create a folder for the image objects, extract each image, save each to the new folder created and return an extra array that contains the path to each of the images.

detections, extracted_images = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg"), extract_detected_objects=True)

Object Detection with 10 lines of code - Image AI

标签:github 1.9 ogre pre process efi 技术分享 cto folder

原文地址:https://www.cnblogs.com/Javi/p/9293404.html