Tags: depend UNC cal frame alt agg prot ide java

Working within these constraints, the author of an allocator attempts to meet the often conflicting performance goals of maximizing throughput and memory utilization.

Goal 1: Maximizing throughput. Given some sequence of n allocate and free requests

$R_0, R_1, \ldots, R_k, \ldots, R_{n-1}$

we would like to maximize an allocator’s throughput, which is defined as the number of requests that it completes per unit time.

For example, if an allocator completes 500 allocate requests and 500 free requests in 1 second, then its throughput is 1,000 operations per second.

In general, we can maximize throughput by minimizing the average time to satisfy allocate and free requests.

Goal 2: Maximizing memory utilization.

Naive programmers often incorrectly assume that virtual memory is an unlimited resource.

In fact, the total amount of virtual memory allocated by all of the processes in a system is limited by the amount of swap space on disk.

Good programmers know that virtual memory is a finite resource that must be used efficiently. This is especially true for a dynamic memory allocator that might be asked to allocate and free large blocks of memory.

There are a number of ways to characterize how efficiently an allocator uses the heap. In our experience, the most useful metric is peak utilization. As before, we are given some sequence of n allocate and free requests

$R_0, R_1, \ldots, R_k, \ldots, R_{n-1}$

If an application requests a block of p bytes, then the resulting allocated block has a payload of p bytes.

After request $R_k$ has completed, let the aggregate payload, denoted $P_k$, be the sum of the payloads of the currently allocated blocks, and let $H_k$ denote the current (monotonically nondecreasing) size of the heap.

Then the peak utilization over requests $R_0$ through $R_k$, denoted by $U_k$, is given by

$$U_k = \frac{\max_{i \le k} P_i}{H_k}$$

The objective of the allocator then is to maximize the peak utilization $U_{n-1}$ over the entire sequence.
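To make the metric concrete, here is a minimal sketch in C that computes the peak utilization after request k as the largest aggregate payload seen so far divided by the current heap size. The function name peak_utilization and the array-based trace are assumptions made for illustration; they are not part of any allocator API.

```c
#include <stddef.h>

/* Hypothetical helper: P[i] is the aggregate payload after request i and
 * H[k] is the heap size after request k. Returns max(P[0..k]) / H[k],
 * or 0.0 if the heap is empty. */
double peak_utilization(const size_t *P, const size_t *H, size_t k)
{
    size_t max_payload = 0;

    for (size_t i = 0; i <= k; i++) {
        if (P[i] > max_payload)
            max_payload = P[i];
    }
    if (H[k] == 0)
        return 0.0;
    return (double)max_payload / (double)H[k];
}
```

Because the numerator is a running maximum and the heap size never shrinks, freeing blocks does not raise the result; only a larger payload peak relative to the heap size does.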

As we will see, there is a tension between maximizing throughput and utilization.

One of the interesting challenges in any allocator design is finding an appropriate balance between the two goals.

Fragmentation

The primary cause of poor heap utilization is a phenomenon known as fragmentation, which occurs when otherwise unused memory is not available to satisfy allocate requests.

There are two forms of fragmentation: internal fragmentation and external fragmentation.

Internal fragmentation occurs when an allocated block is larger than the payload.

This might happen for a number of reasons. For example, the implementation of an allocator might impose a minimum size on allocated blocks that is greater than some requested payload.

Or, as we saw in Figure 9.34(b), the allocator might increase the block size in order to satisfy alignment constraints.

Internal fragmentation is straightforward to quantify.

It is simply the sum of the differences between the sizes of the allocated blocks and their payloads.

Thus, at any point in time, the amount of internal fragmentation depends only on the pattern of previous requests and the allocator implementation.
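The sum described above can be sketched in a few lines of C. The struct block descriptor and the function name are hypothetical and exist only for this illustration; a real allocator would read the sizes from its block headers.

```c
#include <stddef.h>

/* Hypothetical block descriptor: total block size and the payload the
 * application asked for, both in bytes. */
struct block {
    size_t size;
    size_t payload;
};

/* Sum of (block size - payload) over all currently allocated blocks. */
size_t internal_fragmentation(const struct block *blocks, size_t n)
{
    size_t total = 0;

    for (size_t i = 0; i < n; i++)
        total += blocks[i].size - blocks[i].payload;
    return total;
}
```

For instance, a 5-byte request served by a 16-byte block contributes 11 bytes of internal fragmentation.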

External fragmentation occurs when there is enough aggregate free memory to satisfy an allocate request, but no single free block is large enough to handle the request.

External fragmentation is much more difficult to quantify than internal fragmentation because it depends not only on the pattern of previous requests and the allocator implementation, but also on the pattern of future requests.

For example, suppose that after k requests all of the free blocks are exactly four words in size. Does this heap suffer from external fragmentation?

The answer depends on the pattern of future requests.

If all of the future allocate requests are for blocks that are smaller than or equal to four words, then there is no external fragmentation. On the other hand, if one or more requests ask for blocks larger than four words, then the heap does suffer from external fragmentation.
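The four-word example can be checked mechanically for one given request. The sketch below is an illustration only: the function name and the flat array of free-block sizes are assumptions, and it answers the question for a single request size rather than for an unknown future.

```c
#include <stddef.h>

/* Returns 1 if the total free memory would cover the request but no single
 * free block is large enough, 0 otherwise. Sizes are in words. */
int is_externally_fragmented(const size_t *free_blocks, size_t n,
                             size_t request)
{
    size_t total = 0;
    size_t largest = 0;

    for (size_t i = 0; i < n; i++) {
        total += free_blocks[i];
        if (free_blocks[i] > largest)
            largest = free_blocks[i];
    }
    return total >= request && largest < request;
}
```

With three free blocks of four words each, a four-word request fits, a six-word request is blocked by external fragmentation, and a sixteen-word request fails simply because there is not enough free memory in total.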

Allocators typically employ heuristics that attempt to maintain small numbers of larger free blocks rather than large numbers of smaller free blocks.


Garbage Collection

A garbage collector is a dynamic storage allocator that automatically frees allocated blocks that are no longer needed by the program.

Such blocks are known as garbage (hence the term garbage collector).

In a system that supports garbage collection, applications explicitly allocate heap blocks but never explicitly free them.

In the context of a C program, the application calls malloc, but never calls free.

Instead, the garbage collector periodically identifies the garbage blocks and makes the appropriate calls to free to place those blocks back on the free list.

Garbage Collector Basics

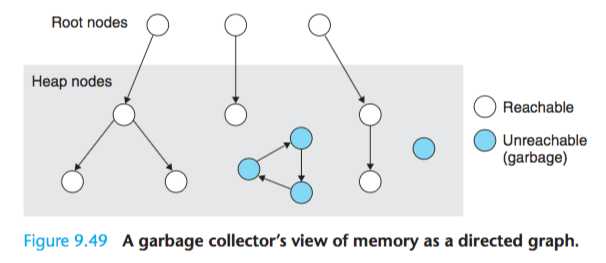

A garbage collector views memory as a directed reachability graph of the form shown in Figure 9.49.

The nodes of the graph are partitioned into a set of root nodes and a set of heap nodes.

Each heap node corresponds to an allocated block in the heap.

A directed edge p → q means that some location in block p points to some location in block q.

Root nodes correspond to locations not in the heap that contain pointers into the heap.

These locations can be registers, variables on the stack, or global variables in the read-write data area of virtual memory.

We say that a node p is reachable if there exists a directed path from any root node to p.

At any point in time, the unreachable nodes correspond to garbage that can never be used again by the application.

The role of a garbage collector is to maintain some representation of the reachability graph and periodically reclaim the unreachable nodes by freeing them and returning them to the free list.
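As a rough illustration of reachability, the sketch below marks every node that can be reached from a set of root nodes in a small graph. The adjacency-matrix representation, the MAX_NODES limit, and the function names are all assumptions made for this example; a real collector would follow pointers stored inside heap blocks instead.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_NODES 8

/* Toy reachability graph: edge[p][q] is true if node p points to node q. */
struct graph {
    size_t n;
    bool edge[MAX_NODES][MAX_NODES];
};

/* Depth-first traversal that marks every node reachable from p. */
static void mark_from(const struct graph *g, size_t p, bool *reachable)
{
    if (reachable[p])
        return;
    reachable[p] = true;
    for (size_t q = 0; q < g->n; q++) {
        if (g->edge[p][q])
            mark_from(g, q, reachable);
    }
}

/* Marks everything reachable from the given roots. Nodes left unmarked
 * correspond to garbage. */
void mark_reachable(const struct graph *g, const size_t *roots,
                    size_t nroots, bool *reachable)
{
    for (size_t i = 0; i < g->n; i++)
        reachable[i] = false;
    for (size_t i = 0; i < nroots; i++)
        mark_from(g, roots[i], reachable);
}
```

In a graph with edges 0 → 1, 1 → 2, and 3 → 4 and a single root at node 0, nodes 3 and 4 stay unmarked even though one points to the other, because no path from a root leads to them.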

Garbage collectors for languages like ML and Java, which exert tight control over how applications create and use pointers, can maintain an exact representation of the reachability graph, and thus can reclaim all garbage.

However, collectors for languages like C and C++ cannot in general maintain exact representations of the reachability graph.

Such collectors are known as conservative garbage collectors.

They are conservative in the sense that each reachable block is correctly identified as reachable, while some unreachable nodes might be incorrectly identified as reachable.

Collectors can provide their service on demand, or they can run as separate threads in parallel with the application, continuously updating the reachability graph and reclaiming garbage.

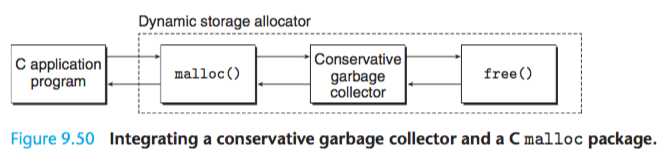

For example, consider how we might incorporate a conservative collector for C programs into an existing malloc package, as shown in Figure 9.50.

The application calls malloc in the usual manner whenever it needs heap space.

If malloc is unable to find a free block that fits, then it calls the garbage collector in hopes of returning some garbage to the free list.

The collector identifies the garbage blocks and returns them to the heap by calling the free function.

The key idea is that the collector calls free instead of the application.

When the call to the collector returns, malloc tries again to find a free block that fits.

If that fails, then it can ask the operating system for additional memory.

Eventually malloc returns a pointer to the requested block (if successful) or the NULL pointer (if unsuccessful).
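The control flow just described (try the free list, collect, retry, then ask the operating system) can be sketched with a toy model. Everything here is hypothetical: the struct toy_heap tracks byte counts only, treats free memory as one pool (so it ignores fragmentation), and toy_collect stands in for a real collector.

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy heap model that tracks byte counts only, not real blocks. */
struct toy_heap {
    size_t free_bytes;     /* bytes currently on the free list */
    size_t garbage_bytes;  /* allocated bytes that are unreachable */
    size_t os_bytes_left;  /* bytes the OS is still willing to provide */
};

/* Stand-in for the collector: moves all garbage back to the free list. */
static void toy_collect(struct toy_heap *h)
{
    h->free_bytes += h->garbage_bytes;
    h->garbage_bytes = 0;
}

/* Returns true if the request is satisfied, false for the NULL case. */
bool toy_malloc(struct toy_heap *h, size_t size)
{
    if (h->free_bytes < size)
        toy_collect(h);                 /* first fallback: collect garbage */
    if (h->free_bytes < size) {
        size_t needed = size - h->free_bytes;

        if (needed > h->os_bytes_left)
            return false;               /* out of memory */
        h->os_bytes_left -= needed;     /* second fallback: grow the heap */
        h->free_bytes += needed;
    }
    h->free_bytes -= size;
    return true;
}
```

Note that the collector runs only when the free list cannot satisfy a request, which matches the on-demand arrangement described in the text.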

Mark&Sweep Garbage Collectors

Common Memory-Related Bugs in C Programs

Dereferencing Bad Pointers

As we learned in Section 9.7.2, there are large holes in the virtual address space of a process that are not mapped to any meaningful data.

If we attempt to dereference a pointer into one of these holes, the operating system will terminate our program with a segmentation exception.

Also, some areas of virtual memory are read-only. Attempting to write to one of these areas terminates the program with a protection exception.

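A common example is the classic scanf bug. To read an integer from stdin into a variable val, the correct call passes scanf the address of the variable, as in scanf("%d", &val). However, it is easy for new C programmers (and experienced ones too!) to pass the contents of val instead of its address:

    scanf("%d", val)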

In this case, scanf will interpret the contents of val as an address and attempt to write a word to that location.

In the best case, the program terminates immediately with an exception.

In the worst case, the contents of val correspond to some valid read/write area of virtual memory, and we overwrite memory, usually with disastrous and baffling consequences much later.
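For contrast, a correct call passes the address of the destination variable. The sketch below uses sscanf on a string rather than scanf on stdin so that it is easy to try in isolation; the helper name parse_int is an assumption made for this example.

```c
#include <stdio.h>

/* Hypothetical helper: parses a decimal integer from text into *out.
 * Returns 1 on success, 0 if no integer could be read. */
int parse_int(const char *text, int *out)
{
    int val;

    /* Pass the ADDRESS of val so sscanf knows where to store the result. */
    if (sscanf(text, "%d", &val) != 1)
        return 0;
    *out = val;
    return 1;
}
```

Checking the return value also catches input that does not contain an integer at all. Many compilers can warn about a mismatched scanf argument when format-string warnings are enabled.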

Reading Uninitialized Memory

While bss memory locations (such as uninitialized global C variables) are always initialized to zeros by the loader, this is not true for heap memory.

A common error is to assume that heap memory is initialized to zero.

A correct implementation would explicitly zero y[i], or use calloc.
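The listing this passage refers to is not reproduced here. As a hedged sketch, assume a matrix-vector product that accumulates into a heap-allocated result y; the function name matvec and its signature are assumptions. Using calloc guarantees that each y[i] starts at zero before the accumulation.

```c
#include <stdlib.h>

/* Returns y = A*x for an n-by-n matrix A, or NULL if allocation fails.
 * The caller owns the result and must free it. */
int *matvec(int **A, const int *x, int n)
{
    /* calloc zero-fills the block, so every y[i] starts at 0. */
    int *y = calloc((size_t)n, sizeof *y);

    if (y == NULL)
        return NULL;
    for (int i = 0; i < n; i++) {
        for (int j = 0; j < n; j++)
            y[i] += A[i][j] * x[j];
    }
    return y;
}
```

With malloc in place of calloc, the += would add to whatever bytes happened to be in the block.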

Allowing Stack Buffer Overflows

As we saw in Section 3.12, a program has a buffer overflow bug if it writes to a target buffer on the stack without examining the size of the input string.

For example, the following function has a buffer overflow bug because the gets function copies an arbitrary length string to the buffer.

To fix this, we would need to use the fgets function, which limits the size of the input string.

    void bufoverflow()
    {
        char buf[64];

        gets(buf); /* Here is the stack buffer overflow bug */
        return;
    }
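A bounded version based on fgets might look like the following sketch. The helper name read_line and its return convention are assumptions for illustration; the key point is that fgets is told the buffer size and never writes more than that many bytes, including the terminating null.

```c
#include <stdio.h>
#include <string.h>

/* Reads at most size-1 characters of one line from in into buf.
 * Returns 1 on success, 0 on end of file or error. */
int read_line(FILE *in, char *buf, size_t size)
{
    if (fgets(buf, (int)size, in) == NULL)
        return 0;
    buf[strcspn(buf, "\n")] = '\0';   /* drop the trailing newline, if any */
    return 1;
}
```

A caller would typically declare char buf[64] and call read_line(stdin, buf, sizeof buf). Input longer than the buffer is truncated rather than written past the end, and the remainder stays in the stream for the next read.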

Assuming that Pointers and the Objects They Point to Are the Same Size

...

This code will run fine on machines where ints and pointers to ints are the same size.

But if we run this code on a machine like the Core i7, where a pointer is larger than an int, then the loop in lines 7–8 will write past the end of the A array.
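The listing discussed here is elided above, so the following is only a hedged sketch of the safe pattern, not a reconstruction of it. The function name make_array is hypothetical. The outer array holds pointers, so it is sized with the size of one of its own elements (sizeof *A, which is sizeof(int *)) rather than sizeof(int).

```c
#include <stdlib.h>

/* Allocates an array of n pointers, each pointing to an array of m ints
 * initialized to zero. Returns NULL on failure. */
int **make_array(int n, int m)
{
    /* sizeof *A is sizeof(int *), the size of one element of A. */
    int **A = malloc((size_t)n * sizeof *A);

    if (A == NULL)
        return NULL;
    for (int i = 0; i < n; i++) {
        A[i] = calloc((size_t)m, sizeof *A[i]);
        if (A[i] == NULL) {
            while (i-- > 0)       /* release the rows allocated so far */
                free(A[i]);
            free(A);
            return NULL;
        }
    }
    return A;
}
```

Writing the size as sizeof *A keeps the allocation correct even if the element type of A changes later.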

...

Referencing Nonexistent Variables

    int *stackref()
    {
        int val;

        return &val;
    }

This function returns a pointer (say, p) to a local variable on the stack and then pops its stack frame.

Although p still points to a valid memory address, it no longer points to a valid variable.

When other functions are called later in the program, the memory will be reused for their stack frames.

Later, if the program assigns some value to *p, then it might actually be modifying an entry in another function’s stack frame, with potentially disastrous and baffling consequences.
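One way to avoid this bug, sketched below, is to return storage that outlives the call, such as a heap block. The function name heapref is an assumption for this example; passing in a pointer to caller-owned storage would work as well.

```c
#include <stdlib.h>

/* Returns a pointer to a heap-allocated int holding value, or NULL on
 * failure. The storage outlives the call; the caller must free it. */
int *heapref(int value)
{
    int *p = malloc(sizeof *p);

    if (p != NULL)
        *p = value;
    return p;
}
```

The trade-off is that the caller now owns the block and is responsible for calling free on it.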

Referencing Data in Free Heap Blocks

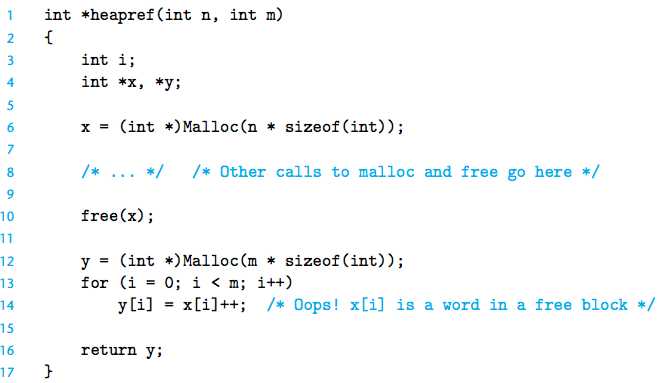

A similar error is to reference data in heap blocks that have already been freed.

Depending on the pattern of malloc and free calls that occur between lines 6 and 10, when the program references x[i] in line 14, the array x might be part of some other allocated heap block and have been overwritten.

Introducing Memory Leaks

Memory leaks are slow, silent killers that occur when programmers inadvertently create garbage in the heap by forgetting to free allocated blocks.

For example, the following function allocates a heap block x and then returns without freeing it:

    void leak(int n)
    {
        int *x = (int *)Malloc(n * sizeof(int));

        return; /* x is garbage at this point */
    }

If leak is called frequently, then the heap will gradually fill up with garbage, in the worst case consuming the entire virtual address space.

Memory leaks are particularly serious for programs such as daemons and servers, which by definition never terminate.
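A leak-free counterpart simply releases the block on every path out of the function. The sketch below is an illustration under assumptions: the name no_leak and the integer return code are hypothetical, and it calls plain malloc with an explicit check instead of the Malloc wrapper used above.

```c
#include <stdlib.h>

/* Same shape as leak, but releases the block before returning.
 * Returns 0 on success, -1 if n is not positive or allocation fails. */
int no_leak(int n)
{
    if (n <= 0)
        return -1;

    int *x = malloc((size_t)n * sizeof *x);

    if (x == NULL)
        return -1;
    x[0] = 0;   /* placeholder for real work on x */
    free(x);    /* x goes back to the allocator instead of becoming garbage */
    return 0;
}
```

Tools such as Valgrind or AddressSanitizer's leak checker can help find blocks that are never freed in larger programs.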


Original source: https://www.cnblogs.com/geeklove01/p/9340931.html