
A hand-written serial BP (backpropagation) algorithm with an adjustable batch_size

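Key points: 1. The input layer uses f(x)=x, the hidden layer uses sigmoid, and the output layer uses f(x)=x.

2. The run function performs one forward pass for a single record.

3. The train function takes all the records and processes them one at a time in a for loop.

Inside the loop:

First call run to obtain the values of every layer:

self.input_nodes_value

self.hidden_nodes_value

self.output_nodes_value

Then compute the output-layer error and delta, propagate them back to the hidden layer, and accumulate the weight changes. The weights are updated once every batch_size records.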

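4. Key functions: the sigmoid used in the forward pass and the sigmoid derivative used in the backward pass. Note that the derivative is written in terms of the sigmoid output y, that is y(1-y) = y-y**2, so it must be given the node value rather than the pre-activation sum.

    self.activation_function = lambda x : 1/(1+np.exp(-x)) # sigmoid, used in the forward pass

    self.delta_activation_function = lambda x: x-x**2 # first derivative of sigmoid in terms of its output, used in the backward pass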
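The four points above can be sketched in vectorized form for a single record. This is only an illustrative cross-check, not the author's implementation: the helper names `sigmoid`, `sigmoid_prime_from_output` and `train_step` are hypothetical, and the inputs and weights are the test values used later in this post. Under these assumptions, one step with learning rate 0.5 should reproduce the expected weights listed in test_train.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid, used by the hidden layer."""
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_prime_from_output(y):
    """Sigmoid derivative expressed through the sigmoid output y = sigmoid(x)."""
    return y - y ** 2


def train_step(x, target, w_i_h, w_h_o, learning_rate):
    """One forward and backward pass for a single record (hypothetical helper)."""
    # Forward pass: identity input, sigmoid hidden layer, linear output layer.
    hidden = sigmoid(x @ w_i_h)
    output = hidden @ w_h_o
    # The output layer is f(x) = x, so its derivative is 1 and delta equals the error.
    output_delta = target - output
    # Propagate the delta back through the hidden-to-output weights.
    hidden_error = w_h_o @ output_delta
    hidden_delta = sigmoid_prime_from_output(hidden) * hidden_error
    # Weight changes are outer products of a layer's input and that layer's delta.
    new_w_h_o = w_h_o + learning_rate * np.outer(hidden, output_delta)
    new_w_i_h = w_i_h + learning_rate * np.outer(x, hidden_delta)
    return output, new_w_i_h, new_w_h_o


x = np.array([0.5, -0.2, 0.1])
target = np.array([0.4])
w_i_h = np.array([[0.1, -0.2], [0.4, 0.5], [-0.3, 0.2]])
w_h_o = np.array([[0.3], [-0.1]])

output, new_w_i_h, new_w_h_o = train_step(x, target, w_i_h, w_h_o, 0.5)
print(output)
print(new_w_h_o)
print(new_w_i_h)
```

The sketch returns new weight arrays instead of modifying the inputs in place, which makes it easy to compare the result against the loop-based class.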

    import numpy as np

    inputs = np.array([[0.5, -0.2, 0.1]])
    targets = np.array([[0.4]])
    test_w_i_h = np.array([[0.1, -0.2], [0.4, 0.5], [-0.3, 0.2]])
    test_w_h_o = np.array([[0.3], [-0.1]])
    batch_size = 1


    # The input layer has no activation (f(x)=x), the hidden layer uses sigmoid,
    # and the output layer is linear (f(x)=x).
    class NeuralNetwork(object):
        def __init__(self, input_nodes, hidden_nodes, output_nodes, learning_rate):
            # Set number of nodes in input, hidden and output layers.
            self.input_nodes = input_nodes
            self.hidden_nodes = hidden_nodes
            self.output_nodes = output_nodes

            # Three 1-D lists that hold the node values of the three layers.
            self.input_nodes_value = [1.0] * input_nodes
            self.hidden_nodes_value = [1.0] * hidden_nodes
            self.output_nodes_value = [1.0] * output_nodes

            # Initialize weights
            self.weights_input_to_hidden = np.random.normal(
                0.0, self.input_nodes ** -0.5, (self.input_nodes, self.hidden_nodes))
            self.weights_hidden_to_output = np.random.normal(
                0.0, self.hidden_nodes ** -0.5, (self.hidden_nodes, self.output_nodes))
            self.learning_rate = learning_rate

            # Sigmoid, used in the forward pass.
            self.activation_function = lambda x: 1 / (1 + np.exp(-x))
            # Sigmoid derivative in terms of the sigmoid output y: y*(1-y) = y - y**2.
            self.delta_activation_function = lambda x: x - x ** 2

            # Weight changes accumulated over the current batch.
            # np.zeros gives independent rows; [[0.0]*n]*m would repeat one row object.
            self.change_to_fix_weights_h2o = np.zeros((self.hidden_nodes, self.output_nodes))
            self.change_to_fix_weights_i2h = np.zeros((self.input_nodes, self.hidden_nodes))

        def train(self, features, targets):  # one training pass over n records
            ''' Train the network on batch of features and targets.

                Arguments
                ---------
                features: 2D array, each row is one data record, each column is a feature
                targets: 2D array, each row holds the target values of one record
            '''
            # modified by zss
            n = features.shape[0]  # number of records
            counter = batch_size
            for ii in range(0, n):
                print("######", ii)
                print(self.weights_input_to_hidden)
                print(self.weights_hidden_to_output)

                # Forward pass: refresh the node values of every layer for this record.
                self.run(features[ii])

                error_o = [0.0] * self.output_nodes  # output-layer error
                error_h = [0.0] * self.hidden_nodes  # hidden-layer error
                output_deltas = [0.0] * self.output_nodes
                hidden_deltas = [0.0] * self.hidden_nodes

                for o in range(self.output_nodes):  # output layer
                    error_o[o] = targets[ii][o] - self.output_nodes_value[o]
                    # The output layer is f(x)=x, so its derivative is 1
                    # and the delta equals the error.
                    output_deltas[o] = error_o[o]

                for h in range(self.hidden_nodes):  # hidden layer
                    for o in range(self.output_nodes):
                        error_h[h] += output_deltas[o] * self.weights_hidden_to_output[h][o]
                    hidden_deltas[h] = self.delta_activation_function(self.hidden_nodes_value[h]) * error_h[h]

                # Accumulate the weight changes for this record.
                for h in range(self.hidden_nodes):
                    for o in range(self.output_nodes):
                        self.change_to_fix_weights_h2o[h][o] += output_deltas[o] * self.hidden_nodes_value[h]
                for i in range(self.input_nodes):
                    for h in range(self.hidden_nodes):
                        self.change_to_fix_weights_i2h[i][h] += hidden_deltas[h] * self.input_nodes_value[i]

                counter -= 1
                if counter == 0:  # a full batch has been accumulated: update the weights once
                    # Adjust the hidden-to-output weights.
                    for h in range(self.hidden_nodes):
                        for o in range(self.output_nodes):
                            self.weights_hidden_to_output[h][o] += self.learning_rate * self.change_to_fix_weights_h2o[h][o]
                    # Adjust the input-to-hidden weights.
                    for i in range(self.input_nodes):
                        for h in range(self.hidden_nodes):
                            self.weights_input_to_hidden[i][h] += self.learning_rate * self.change_to_fix_weights_i2h[i][h]
                    # Reset the accumulators and the batch counter.
                    self.change_to_fix_weights_h2o = np.zeros((self.hidden_nodes, self.output_nodes))
                    self.change_to_fix_weights_i2h = np.zeros((self.input_nodes, self.hidden_nodes))
                    counter = batch_size
            return self.weights_hidden_to_output

        def run(self, features):  # one forward pass for a single record
            ''' features: 1D array of feature values '''
            print(features)
            # Input layer: f(x)=x.
            for i in range(self.input_nodes):
                self.input_nodes_value[i] = features[i]
            # Hidden layer: weighted sum followed by sigmoid.
            for h in range(self.hidden_nodes):
                temp = 0
                for i in range(self.input_nodes):
                    temp += self.input_nodes_value[i] * self.weights_input_to_hidden[i][h]
                self.hidden_nodes_value[h] = self.activation_function(temp)
            # Output layer: weighted sum only, f(x)=x.
            for o in range(self.output_nodes):
                temp = 0
                for h in range(self.hidden_nodes):
                    temp += self.hidden_nodes_value[h] * self.weights_hidden_to_output[h][o]
                self.output_nodes_value[o] = temp
            # Return the outputs so that callers such as test_run can check them.
            return np.array(self.output_nodes_value)


    def test_run():
        # Test correctness of run method
        network = NeuralNetwork(3, 2, 1, 0.5)
        network.weights_input_to_hidden = test_w_i_h.copy()
        network.weights_hidden_to_output = test_w_h_o.copy()
        print("#####" + str(network.run(np.array([0.5, -0.2, 0.1]))))
        print(np.allclose(network.run(np.array([0.5, -0.2, 0.1])), 0.09998924))


    def test_train():
        # Test that weights are updated correctly on training
        network = NeuralNetwork(3, 2, 1, 0.5)
        network.weights_input_to_hidden = test_w_i_h.copy()
        network.weights_hidden_to_output = test_w_h_o.copy()
        network.train(inputs, targets)
        print(np.allclose(network.weights_hidden_to_output,
                          np.array([[0.37275328], [-0.03172939]])))
        print(np.allclose(network.weights_input_to_hidden,
                          np.array([[0.10562014, -0.20185996],
                                    [0.39775194, 0.50074398],
                                    [-0.29887597, 0.19962801]])))


    test_train()
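One detail worth illustrating is how the per-weight accumulators such as change_to_fix_weights_h2o are allocated. A nested list built as `[[0.0] * n] * m` repeats a single inner list m times, so an update to one row shows up in every row. The following is a minimal sketch of that pitfall and two alternatives; the variable names are hypothetical and chosen only for this example.

```python
import numpy as np

rows, cols = 2, 1

# Outer multiplication copies the reference, so both rows are the same list object.
aliased = [[0.0] * cols] * rows
aliased[0][0] += 1.0
print(aliased)  # the change appears in both rows

# A list comprehension builds a fresh inner list for each row.
independent = [[0.0] * cols for _ in range(rows)]
independent[0][0] += 1.0
print(independent)  # only the first row changes

# A NumPy array stores every cell separately and is convenient to reset per batch.
accumulator = np.zeros((rows, cols))
accumulator[0, 0] += 1.0
print(accumulator)
```

For a network with several hidden or input nodes, aliased rows would mix the accumulated changes of different weights, so either of the last two forms is the safer choice.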

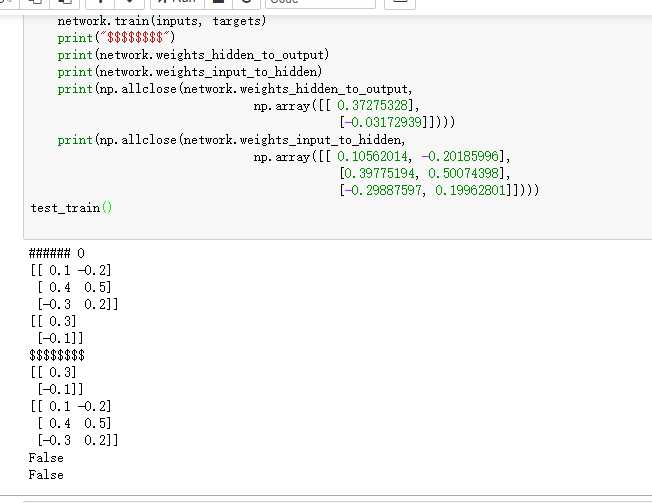

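In the run shown in the screenshot, the results are fairly close to the expected values but do not pass np.allclose. Note that np.allclose defaults to rtol=1e-05 and atol=1e-08, which is far stricter than 5%. The expected weights in test_train are what a single update produces when the output-layer delta equals the raw error (the output layer is f(x)=x) and train refreshes the node values with run for each record. Extra iterations would therefore not close the gap; any deviation in the delta computation, such as applying the sigmoid derivative at the linear output layer, makes the check fail. To be continued.

In that run, allclose returned False.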
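To make the tolerance concrete, here is a small sketch of how np.allclose behaves with its default arguments compared with an explicit 5% relative tolerance. The 1% perturbation is an arbitrary value chosen for illustration and is not taken from the run above.

```python
import numpy as np

expected = np.array([0.37275328, -0.03172939])
# Hypothetical result that is off by 1% in every entry.
perturbed = expected * 1.01

# Default tolerances: |a - b| <= atol + rtol * |b| with rtol=1e-05, atol=1e-08.
strict = np.allclose(perturbed, expected)
# A 5% relative tolerance has to be requested explicitly.
loose = np.allclose(perturbed, expected, rtol=0.05)

print(strict)  # False
print(loose)   # True
```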


Original post: https://www.cnblogs.com/zealousness/p/9351799.html