Tags: contains figure ensure name end init ges mutex other

Shared Variables in Threaded Programs

Threads Memory Model

Thus, registers are never shared, whereas virtual memory is always shared.

The memory model for the separate thread stacks is not as clean.

These stacks are contained in the stack area of the virtual address space, and are usually accessed independently by their respective threads.

We say usually rather than always, because different thread stacks are not protected from other threads.

So if a thread somehow manages to acquire a pointer to another thread’s stack, then it can read and write any part of that stack.

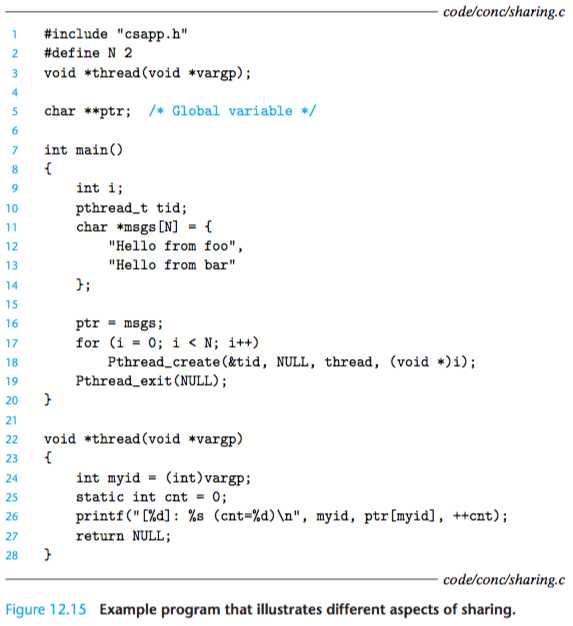

Our example program shows this in line 26, where the peer threads reference the contents of the main thread’s stack indirectly through the global ptr variable.
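To make the point concrete, here is a minimal sketch (not the figure's program itself) in which two peer threads read an array that lives on another thread's stack through a global pointer. The names `run_stack_sharing_demo`, `peer`, and `seen` are hypothetical and chosen only for illustration.

```c
#include <assert.h>
#include <pthread.h>

#define N 2

/* Global pointer: one instance, visible to every thread. */
static char **ptr;

/* Records what each peer read, so the caller can inspect it afterwards. */
static const char *seen[N];

static void *peer(void *vargp)
{
    long myid = (long)vargp;   /* local automatic: one instance per peer stack */
    seen[myid] = ptr[myid];    /* reads msgs[], which lives on the caller's stack */
    return NULL;
}

/* Hypothetical driver: returns 1 if both peers read the caller's stack array. */
int run_stack_sharing_demo(void)
{
    char *msgs[N] = { "Hello from foo", "Hello from bar" }; /* on this thread's stack */
    pthread_t tid[N];

    ptr = msgs;  /* publish a pointer into our own stack */
    for (long i = 0; i < N; i++) {
        int rc = pthread_create(&tid[i], NULL, peer, (void *)i);
        assert(rc == 0);
    }
    for (long i = 0; i < N; i++)
        pthread_join(tid[i], NULL);  /* msgs must stay alive until the peers finish */

    return seen[0] == msgs[0] && seen[1] == msgs[1];
}
```

Nothing stops the peers from writing through `ptr` as well, which is why the stack model is described as "not as clean".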

Mapping Variables to Memory

Variables in threaded C programs are mapped to virtual memory according to their storage classes:

- Global variables. A global variable is any variable declared outside of a function. At run time, the read/write area of virtual memory contains exactly one instance of each global variable that can be referenced by any thread. For example, the global ptr variable declared in line 5 has one run-time instance in the read/write area of virtual memory. When there is only one instance of a variable, we will denote the instance by simply using the variable name (in this case, ptr).

- Local automatic variables. A local automatic variable is one that is declared inside a function without the static attribute. At run time, each thread’s stack contains its own instances of any local automatic variables. This is true even if multiple threads execute the same thread routine. For example, there is one instance of the local variable tid, and it resides on the stack of the main thread. We will denote this instance as tid.m. As another example, there are two instances of the local variable myid, one instance on the stack of peer thread 0, and the other on the stack of peer thread 1. We will denote these instances as myid.p0 and myid.p1, respectively.

- Local static variables. A local static variable is one that is declared inside a function with the static attribute. As with global variables, the read/write area of virtual memory contains exactly one instance of each local static variable declared in a program. For example, even though each peer thread in our example program declares cnt in line 25, at run time there is only one instance of cnt residing in the read/write area of virtual memory. Each peer thread reads and writes this instance.
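The three storage classes can be observed by counting. In the sketch below, each peer increments its own local automatic variable and a local static variable the same number of times. The private totals stay separate while the static total accumulates both peers' work. The names `run_storage_class_demo`, `mine`, and `STEPS` are assumptions made for this illustration, and a pthread mutex is used here only to keep the shared increment deterministic.

```c
#include <assert.h>
#include <pthread.h>

#define NPEERS 2
#define STEPS 1000

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER; /* global: one instance */
static long local_totals[NPEERS];                        /* global: one instance */
static long static_total;                                /* global: one instance */

static void *peer(void *vargp)
{
    long myid = (long)vargp;  /* local automatic: myid.p0 and myid.p1 */
    long mine = 0;            /* local automatic: one instance per peer stack */
    static long cnt = 0;      /* local static: a single instance for all peers */

    for (int i = 0; i < STEPS; i++) {
        mine++;                      /* private to this thread, no lock needed */
        pthread_mutex_lock(&lock);   /* cnt is shared, so serialize access */
        cnt++;
        static_total = cnt;          /* expose the latest value to the driver */
        pthread_mutex_unlock(&lock);
    }
    local_totals[myid] = mine;
    return NULL;
}

/* Hypothetical driver; call it once, since the static cnt is never reset. */
void run_storage_class_demo(long *peer0, long *peer1, long *shared)
{
    pthread_t tid[NPEERS];

    for (long i = 0; i < NPEERS; i++) {
        int rc = pthread_create(&tid[i], NULL, peer, (void *)i);
        assert(rc == 0);
    }
    for (int i = 0; i < NPEERS; i++)
        pthread_join(tid[i], NULL);

    *peer0 = local_totals[0];
    *peer1 = local_totals[1];
    *shared = static_total;
}
```

After one call, each peer's private total is `STEPS` and the static counter is `2 * STEPS`.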

Shared Variables

We say that a variable v is shared if and only if one of its instances is referenced by more than one thread.

Synchronizing Threads with Semaphores

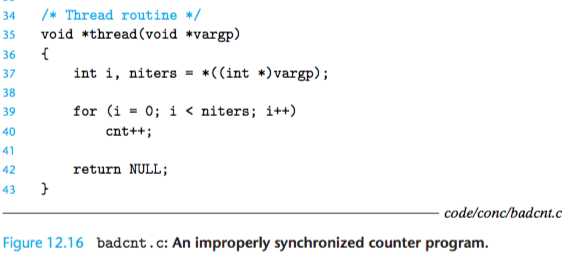

Code excerpt (note: vargp holds the address of a variable defined in the main thread, and cnt is a global variable).

The thread routine is below:

and:

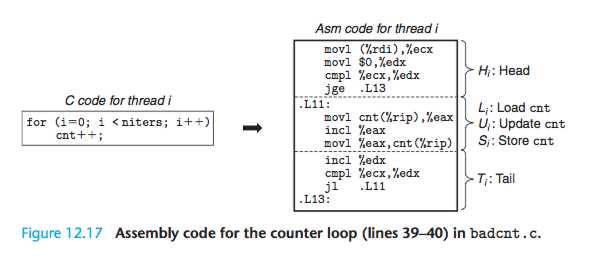

Here, %eax holds cnt, %ecx holds niters, and %edx holds i.

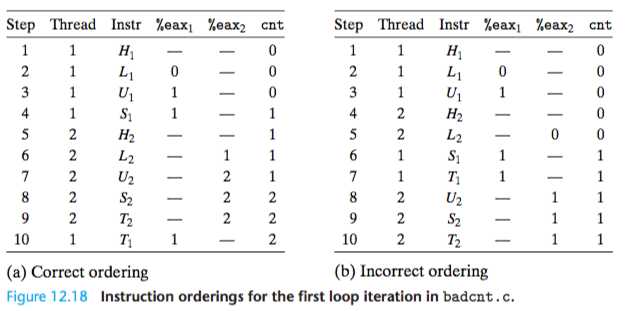

Notice that the head and tail manipulate only local stack variables, while Li, Ui, and Si manipulate the contents of the shared counter variable.

Here is the crucial point: In general, there is no way for you to predict whether the operating system will choose a correct ordering for your threads.
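The effect of an ordering can be replayed deterministically without any threads. The sketch below simulates the load (L), update (U), and store (S) steps of two threads on one shared counter, with one private "register" per thread standing in for %eax. The schedule string format and the function name `run_schedule` are assumptions invented for this illustration.

```c
#include <assert.h>

/* Simulated state: one shared counter, one private register per thread. */
struct machine {
    int cnt;      /* shared variable in memory */
    int reg[2];   /* reg[t] stands in for thread t's %eax */
};

/* Run a schedule such as "L0 U0 S0 L1 U1 S1" and return the final cnt.
 * Each step is a letter (L, U, or S) followed by a thread digit (0 or 1). */
int run_schedule(const char *schedule)
{
    struct machine m = { 0, { 0, 0 } };

    for (const char *p = schedule; *p != '\0'; p++) {
        if (*p != 'L' && *p != 'U' && *p != 'S')
            continue;                      /* skip separators */
        int t = p[1] - '0';
        assert(t == 0 || t == 1);          /* malformed schedule otherwise */
        switch (*p) {
        case 'L': m.reg[t] = m.cnt; break; /* load cnt into the register */
        case 'U': m.reg[t] += 1;    break; /* update the register */
        case 'S': m.cnt = m.reg[t]; break; /* store the register back to cnt */
        }
        p++;                               /* consume the thread digit */
    }
    return m.cnt;
}
```

With the schedule `"L0 U0 S0 L1 U1 S1"` the counter ends at 2. With `"L0 U0 L1 S0 U1 S1"` thread 1 loads a stale value and the counter ends at 1, even though both threads performed an increment.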

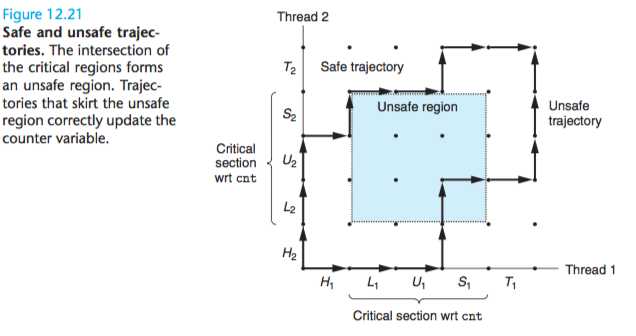

Progress Graphs

A progress graph models the execution of n concurrent threads as a trajectory through an n-dimensional Cartesian space.

For thread i, the instructions (Li, Ui, Si) that manipulate the contents of the shared variable cnt constitute a critical section (with respect to shared variable cnt) that should not be interleaved with the critical section of the other thread.

The phenomenon in general is known as mutual exclusion.

Notice that the unsafe region abuts, but does not include, the states along its perimeter.
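A two-thread progress graph can be walked in code. The sketch below assumes each thread executes five instructions in order (H, L, U, S, T) and tracks how many each thread has completed. A state counts as unsafe when both threads have completed L but not yet S, which matches the rule that perimeter states are excluded. The encoding of a trajectory as a string of '1' and '2' and the name `trajectory_is_safe` are assumptions made for this illustration.

```c
#include <assert.h>
#include <stdbool.h>

/* Each thread executes H, L, U, S, T in order; progress counts the
 * instructions completed so far (0 through 5). */
enum { NSTEPS = 5 };

/* A thread is strictly inside its critical section once L has completed
 * and until S has completed, that is, at progress 2 or 3. */
static bool in_critical(int progress)
{
    return progress >= 2 && progress <= 3;
}

/* steps is a string of '1' and '2' naming which thread executes its next
 * instruction. Returns false as soon as the trajectory enters a state in
 * which both threads are inside their critical sections. */
bool trajectory_is_safe(const char *steps)
{
    int progress[2] = { 0, 0 };

    for (const char *p = steps; *p != '\0'; p++) {
        int t = *p - '1';
        assert(t == 0 || t == 1);        /* only threads 1 and 2 exist */
        assert(progress[t] < NSTEPS);    /* a thread cannot run past T */
        progress[t]++;
        if (in_critical(progress[0]) && in_critical(progress[1]))
            return false;                /* entered the unsafe region */
    }
    return true;
}
```

For example, `"1112211222"` (H1, L1, U1, H2, L2, ...) enters the unsafe region at L2, while `"1112112222"` only skirts its edge and is safe.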

Semaphores

A semaphore, s, is a global variable with a nonnegative integer value that can only be manipulated by two special operations, called P and V:

P(s): If s is nonzero, then P decrements s and returns immediately. If s is zero, then suspend the thread until s becomes nonzero and the thread is restarted by a V operation. After restarting, the P operation decrements s and returns control to the caller.

V(s): The V operation increments s by 1. If there are any threads blocked at a P operation waiting for s to become nonzero, then the V operation restarts exactly one of these threads, which then completes its P operation by decrementing s.

The test and decrement operations in P occur indivisibly, in the sense that once the semaphore s becomes nonzero, the decrement of s occurs without interruption.

The increment operation in V also occurs indivisibly, in that it loads, increments, and stores the semaphore without interruption.

Notice that the definition of V does not define the order in which waiting threads are restarted.

The only requirement is that the V must restart exactly one waiting thread.

Thus, when several threads are waiting at a semaphore, you cannot predict which one will be restarted as a result of the V.

The definitions of P and V ensure that a running program can never enter a state where a properly initialized semaphore has a negative value.

This property, known as the semaphore invariant, provides a powerful tool for controlling the trajectories of concurrent programs.
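One way to see how P and V keep the value nonnegative is to build them from a lock and a condition variable. The following is a minimal sketch under that assumption, not how any particular system implements semaphores. The type `sketch_sem_t` and the functions `sketch_sem_init`, `sketch_P`, and `sketch_V` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>

typedef struct {
    int value;                 /* never negative while the invariant holds */
    pthread_mutex_t lock;      /* makes test-and-decrement indivisible */
    pthread_cond_t nonzero;    /* waiters sleep here while value == 0 */
} sketch_sem_t;

void sketch_sem_init(sketch_sem_t *s, int value)
{
    assert(value >= 0);        /* "properly initialized" */
    s->value = value;
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->nonzero, NULL);
}

void sketch_P(sketch_sem_t *s)
{
    pthread_mutex_lock(&s->lock);
    while (s->value == 0)                          /* suspend until nonzero */
        pthread_cond_wait(&s->nonzero, &s->lock);
    s->value--;                                    /* decrement only when > 0 */
    pthread_mutex_unlock(&s->lock);
}

void sketch_V(sketch_sem_t *s)
{
    pthread_mutex_lock(&s->lock);
    s->value++;
    pthread_cond_signal(&s->nonzero);  /* wake a waiter, if any; which one is unspecified */
    pthread_mutex_unlock(&s->lock);
}
```

Because the decrement happens only after the `while` test succeeds under the lock, `value` cannot drop below zero. Note that `pthread_cond_signal` may wake more than one waiter on some systems, so the `while` loop rechecks the condition; this differs slightly from the "exactly one" wording above.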

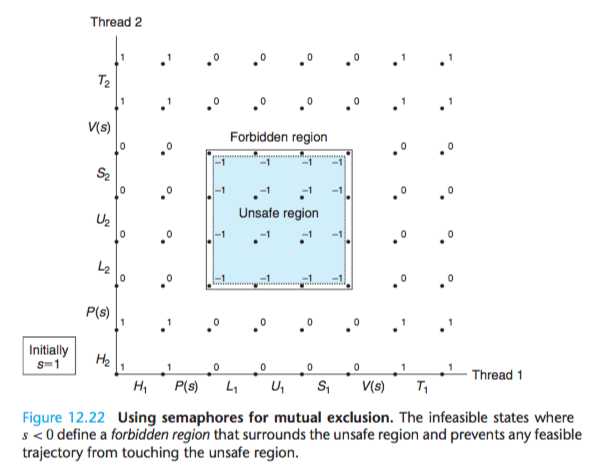

Using Semaphores for Mutual Exclusion

The basic idea is to associate a semaphore s, initially 1, with each shared variable (or related set of shared variables) and then surround the corresponding critical section with P(s) and V(s) operations.

A semaphore that is used in this way to protect shared variables is called a binary semaphore because its value is always 0 or 1.

Binary semaphores whose purpose is to provide mutual exclusion are often called mutexes.

Performing a P operation on a mutex is called locking the mutex. Similarly, performing the V operation is called unlocking the mutex.

A thread that has locked but not yet unlocked a mutex is said to be holding the mutex.

A semaphore that is used as a counter for a set of available resources is called a counting semaphore.
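As a small illustration of a counting semaphore, the sketch below initializes a POSIX semaphore to the number of available units and claims units with the nonblocking `sem_trywait`, which fails once the count reaches zero instead of suspending. The function name `claim_without_blocking` is hypothetical, and the example assumes a platform where unnamed POSIX semaphores (`sem_init`) are supported, such as Linux.

```c
#include <assert.h>
#include <semaphore.h>

/* Try to claim up to `wanted` units from a pool of `available` units.
 * Returns how many units were granted without blocking. */
int claim_without_blocking(unsigned available, int wanted)
{
    sem_t slots;
    int granted = 0;

    int rc = sem_init(&slots, 0, available);  /* count = free units */
    assert(rc == 0);

    for (int i = 0; i < wanted; i++) {
        if (sem_trywait(&slots) == 0)
            granted++;        /* like P, but only when the count is nonzero */
        else
            break;            /* count is zero (or the call failed): stop */
    }

    sem_destroy(&slots);
    return granted;
}
```

With three units available, asking for five grants three; asking for two grants two.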

The semaphore operations ensure mutually exclusive access to the critical region.

Putting it all together, to properly synchronize the example counter program in Figure 12.16 using semaphores, we first declare a semaphore called mutex:

    volatile int cnt = 0; /* Counter */
    sem_t mutex;          /* Semaphore that protects counter */

and then initialize it to unity in the main routine:

    Sem_init(&mutex, 0, 1); /* mutex = 1 */

Finally, we protect the update of the shared cnt variable in the thread routine by surrounding it with P and V operations:

    for (i = 0; i < niters; i++) {
        P(&mutex);
        cnt++;
        V(&mutex);
    }
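The three fragments can be assembled into one compilable sketch. It calls the POSIX functions `sem_init`, `sem_wait`, and `sem_post` directly, on the assumption that `Sem_init`, `P`, and `V` are thin wrappers around them. The names `count_thread` and `run_counter` are hypothetical, and unnamed POSIX semaphores are assumed to be available (as on Linux).

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

static volatile int cnt;   /* Counter shared by both threads */
static sem_t mutex;        /* Semaphore that protects counter */

static void *count_thread(void *vargp)
{
    int niters = *(int *)vargp;   /* vargp points at a variable in the caller */

    for (int i = 0; i < niters; i++) {
        sem_wait(&mutex);         /* P(&mutex): lock */
        cnt++;                    /* critical section */
        sem_post(&mutex);         /* V(&mutex): unlock */
    }
    return NULL;
}

/* Hypothetical driver: runs two counting threads and returns the final cnt. */
int run_counter(int niters)
{
    pthread_t tid1, tid2;
    int rc;

    cnt = 0;
    rc = sem_init(&mutex, 0, 1);  /* mutex = 1 */
    assert(rc == 0);

    rc = pthread_create(&tid1, NULL, count_thread, &niters);
    assert(rc == 0);
    rc = pthread_create(&tid2, NULL, count_thread, &niters);
    assert(rc == 0);

    pthread_join(tid1, NULL);     /* niters stays alive until both joins return */
    pthread_join(tid2, NULL);

    sem_destroy(&mutex);
    return cnt;
}
```

With the P and V calls in place, `run_counter(n)` returns `2 * n` regardless of how the threads are scheduled. Removing them reintroduces the lost-update problem.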

Limitations of Progress Graphs

Multiprocessors behave in ways that cannot be explained by progress graphs.

In particular, a multiprocessor memory system can be in a state that does not correspond to any trajectory in a progress graph.


Original source: https://www.cnblogs.com/geeklove01/p/9367138.html