标签:int new 分享 方式 amp ssi 设置 变量 googl

pip install requestsimport requests

postdata= {‘key‘:‘value‘}

r = requests.post(‘http://www.cnblogs.com/login‘,data=postdata)

print(r.content)payload = {‘opt‘:1}

r = requests.get(‘https://i.cnblogs.com/EditPosts.aspx‘,params=payload)

print r.urlimport requests

r = requests.get(‘http://www.baidu.com‘)

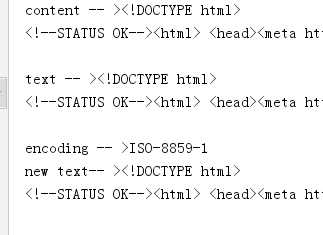

print ‘content -- >‘+ r.content

print ‘text -- >‘+ r.text

print ‘encoding -- >‘+ r.encoding

r.encoding=‘utf-8‘

print ‘new text-- >‘+r.textuploading-image-85581.png

r.content 返回是字节,text返回文本形式

如果输出结果为encoding -->encoding -- >ISO-8859-1,则说明实际的编码格式是UTF-8,由于Requests猜测错误,导致解析文本出现乱码。Requests提供解决方案,可以自行设置编码格式,r.encoding=‘utf-8‘设置成UTF-8之后,“new text -->”就不会出现乱码。但这种方法笨拙。因此就有了:chardet,优秀的字符串/文件编码检测模块。

安装pip install chardet

安装完成后,使用chardet.detect()返回字典,其中confidence是检测精确度,encoding是编码方式

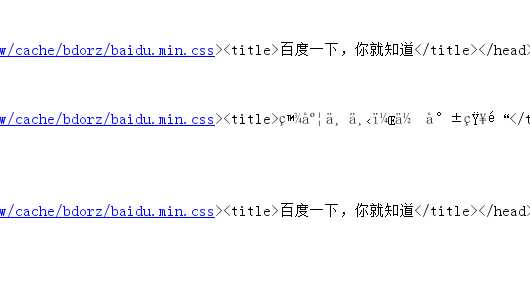

import requests,chardet

r = requests.get(‘http://www.baidu.com‘)

print chardet.detect(r.content)

r.encoding = chardet.detect(r.content)[‘encoding‘]

print(r.text)C:\Python27\python.exe F:/python_scrapy/ch03/3.2.3_3.py

{‘confidence‘: 0.99, ‘language‘: ‘‘, ‘encoding‘: ‘utf-8‘}

<!DOCTYPE html>

<!--STATUS OK--><html> <head><meta http-equiv=content-type content=text/html;charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content=always name=referrer><link rel=stylesheet type=text/css href=http://s1.bdstatic.com/r/www/cache/bdorz/baidu.min.css><title>百度一下,你就知道</title></head> <body link=#0000cc> <div id=wrapper> <div id=head> <div class=head_wrapper> <div ..

.................

Process finished with exit code 0

import requests

user_agent = ‘Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)‘

headers={‘User-Agent‘:user_agent}

r = requests.get(‘http://www.baidu.com‘,headers=headers)

print r.content

import requests

r = requests.get(‘http://www.baidu.com‘)

if r.status_code == requests.codes.ok:

print r.status_code#响应码

print r.headers#响应头

print r.headers.get(‘content-type‘)#推荐使用这种获取方式,获取其中的某个字段

print r.headers[‘content-type‘]#不推荐使用这种获取方式,因为不存在会抛出异常

else:

r.raise_for_status()import requests

user_agent = ‘Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)‘

headers={‘User-Agent‘:user_agent}

r = requests.get(‘http://www.baidu.com‘,headers=headers)

#遍历出所有的cookie字段的值

for cookie in r.cookies.keys():

print cookie+‘:‘+r.cookies.get(cookie)import requests

user_agent = ‘Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)‘

headers={‘User-Agent‘:user_agent}

cookies = dict(name=‘guguobao‘,age=‘10‘)

r = requests.get(‘http://www.baidu.com‘,headers=headers,cookies=cookies)

print r.textimport requests

loginUrl= ‘http://www.xxx.com/login‘

s = requests.Session()

#首先访问登录界面,作为游客,服务器会先分配一个cookie

r = s.get(loginUrl,allow_redirects=True)

datas={‘name‘:‘guguobao‘,‘passwd‘:‘guguobao‘}

#向登录链接发送post请求,验证成功,游客权限转为会员权限

r =s.post(loginUrl,data=datas,allow_redirects=True)

print r.text

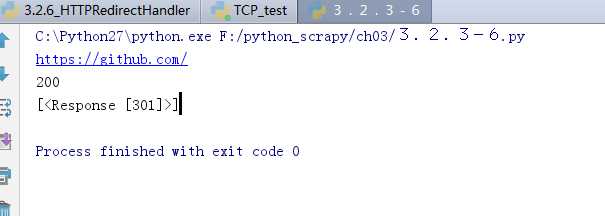

import requests

r = requests.get(‘http://github.com‘)

print r.url

print r.status_code

print r.history

r = requests.get(‘http://github.com‘,timeout=2)import requests

proxies = {

"http": "http://127.0.0.1:1080",

"https": "http://127.0.0.1:1080",

}

r = requests.get("http://www.google.com", proxies=proxies)

print r.text

proxies = {

"http": "http://user:password@127.0.0.1:1080",

]人性化的Requests模块(响应与编码、header处理、cookie处理、重定向与历史记录、代理设置)

标签:int new 分享 方式 amp ssi 设置 变量 googl

原文地址:https://www.cnblogs.com/guguobao/p/9404614.html