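OpenStack Manual Installation Guide and Troubleshooting (Icehouse)

1 Keystone Manual Installation

1.1 Preparation Before Installing Keystone

1.1.1 Environment

This lab uses VirtualBox 5.2.12 as the virtualization platform to simulate the physical network and the physical servers. To deploy on real hardware, apply the equivalent configuration directly on the physical machines; the principles are the same.

VirtualBox download: https://www.virtualbox.org/wiki/Downloads

1.1.2 Virtual Networks

Create three virtual networks, Net0, Net1, and Net2, configured in VirtualBox as follows: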

Net0:

Network name: VirtualBox host-only Ethernet Adapter#2

Purpose: administrator / management network

IP block: 10.20.0.0/24

DHCP: disable

Linux device: eth0

Net1:

Network name: VirtualBox host-only Ethernet Adapter#3

Purpose: public network

DHCP: disable

IP block: 172.16.0.0/24

Linux device: eth1

Net2:

Network name: VirtualBox host-only Ethernet Adapter#4

Purpose: Storage/private network

DHCP: disable

IP block: 192.168.4.0/24

Linux device: eth2

1.1.3 Virtual Machines

Create three virtual machines, VM0, VM1, and VM2, configured as follows:

VM0:

Name: controller0

vCPU:1

Memory :1G

Disk:30G

Networks: Net0

VM1:

Name : network0

vCPU:1

Memory :1G

Disk:30G

Networks: Net0, Net1, Net2

VM2:

Name: compute0

vCPU:2

Memory :2G

Disk:30G

Networks: Net0, Net2

1.1.4 Network Settings

controller0

eth0:10.20.0.10 (management network)

eth1:(disabled)

eth2:(disabled)

network0

eth0:10.20.0.20 (management network)

eth1:172.16.0.20 (public/external network)

eth2:192.168.4.20 (private network)

compute0

eth0:10.20.0.30 (management network)

eth1:(disabled)

eth2:192.168.4.30 (private network)

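1.1.5 Operating System

This lab uses the CentOS 6.5 x86_64 Linux distribution. During installation, select the "Basic" package set. After installation the system still needs extra configuration, such as setting up the YUM repositories.

CentOS 6.5 image download: http://archive.kernel.org/centos-vault/6.5/isos/x86_64/CentOS-6.5-x86_64-bin-DVD1.iso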

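1.2 Building the VM Template

1.2.1 Virtual Machine Settings

When creating the VM, attach adapters 1 to 3 to VirtualBox host-only Ethernet Adapter#2, VirtualBox host-only Ethernet Adapter#3, and VirtualBox host-only Ethernet Adapter#4, in that order. Under the advanced settings, set the adapter type of each to PCnet-PCI II (Am79C970A) and promiscuous mode to Allow All. To give the VM Internet access, enable a fourth adapter, attach it to a NAT network, leave its adapter type at the default, and set promiscuous mode to Allow All.

1.2.2 Network Interface Configuration

Once the VM has been created and booted, edit its interface configuration: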

[root@controller0~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=10.20.0.10

NETMASK=255.255.255.0

[root@controller0~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=no

NM_CONTROLLED=yes

BOOTPROTO=static

[root@controller0~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=no

NM_CONTROLLED=yes

BOOTPROTO=static

[root@controller0~]# vi /etc/sysconfig/network-scripts/ifcfg-eth3

DEVICE=eth3

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=dhcp
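The three nodes differ only in their eth0 address, so the static interface file can also be generated instead of typed by hand. The following is a minimal sketch under stated assumptions: render_ifcfg is a hypothetical helper name that is not part of the original procedure, and it only prints the file content so that it can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: print a static ifcfg file for a device and IPv4 address.
# The keys mirror the ifcfg-eth0 file shown above; the /24 netmask is the one
# used throughout this guide.
render_ifcfg() {
    device="$1"
    ipaddr="$2"
    cat <<EOF
DEVICE=$device
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=static
IPADDR=$ipaddr
NETMASK=255.255.255.0
EOF
}

# Preview the management interface of controller0.
render_ifcfg eth0 10.20.0.10
```

To apply it, the output would be redirected as root into /etc/sysconfig/network-scripts/ifcfg-eth0 on the node in question.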

1.2.3 Testing Internet Connectivity

eth3 is set to DHCP with ONBOOT enabled so that the VM can reach the Internet. Test connectivity with the following command:

[root@controller0~]# ping www.baidu.com

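1.2.4 Adding Repositories and Updating

Once the interfaces are configured and the VM can reach the Internet, install the required repositories. The following command is meant to configure the RDO Icehouse repository automatically: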

[root@controller0~]# yum install -y http://repos.fedorapeople.org/repos/openstack/openstack-icehouse/rdo-release-icehouse-4.noarch.rpm

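This command now fails with an error saying that the package does not exist and cannot be downloaded. OpenStack releases move quickly and there are many of them (the O, I, and K releases, for example), and the official repository for the I release (Icehouse) is no longer at that location. The workaround is to define the repository by hand: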

[root@controller0~]# vi /etc/yum.repos.d/icehouse.repo

[icehouse]

name=Extra icehouse Packages for Enterprise Linux 6 - $basearch

baseurl=https://repos.fedorapeople.org/repos/openstack/EOL/openstack-icehouse/epel-6/

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-6&arch=$basearch

failovermethod=priority

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-6

Install the second repository (EPEL):

[root@controller0~]# yum install -y http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

After adding both repositories, update all RPM packages to avoid problems with outdated package versions later in the deployment:

[root@controller0~]# yum -y update

1.2.5 Common Configuration (all nodes)

Run the following commands on every node.

Edit the hosts file so that each node name resolves to its management address:

[root@controller0~]# vi /etc/hosts

127.0.0.1 localhost

::1 localhost

10.20.0.10 controller0

10.20.0.20 network0

10.20.0.30 compute0
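Because this step is repeated on every node, it can help to add the entries idempotently rather than editing the file each time. The sketch below is an illustration, not the original author's method: add_host and HOSTS_FILE are hypothetical names, and the script defaults to a scratch file so it is safe to try as a normal user.

```shell
#!/bin/sh
# Sketch: append the three node entries to a hosts file only if they are missing.
# HOSTS_FILE defaults to a temporary file; set HOSTS_FILE=/etc/hosts (as root)
# to apply the entries for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    ip="$1"
    name="$2"
    # -w matches the name as a whole word, so controller0 does not match controller01.
    if ! grep -qw "$name" "$HOSTS_FILE"; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host 10.20.0.10 controller0
add_host 10.20.0.20 network0
add_host 10.20.0.30 compute0
```

Running the script a second time leaves the file unchanged, which makes it suitable for a template that is cloned and re-provisioned.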

Disable SELinux:

[root@controller0~]# vi /etc/selinux/config

SELINUX=disabled

Install the NTP service:

[root@controller0~]# yum install ntp -y

[root@controller0~]# service ntpd start

[root@controller0~]# chkconfig ntpd on

Edit the NTP configuration so that the node synchronizes its time from controller0 (on every node except controller0):

[root@controller0~]# vi /etc/ntp.conf

server 10.20.0.10

fudge 10.20.0.10 stratum 10 # LCL is unsynchronized

Synchronize immediately and check that time synchronization is configured correctly (on every node except controller0):

[root@controller0~]# ntpdate -u 10.20.0.10

[root@controller0~]# service ntpd restart

[root@controller0~]# ntpq -p

Install openstack-utils so that configuration files can be edited from the command line in later steps:

[root@controller0~]# yum install -y openstack-utils

1.2.6 Firewall Settings

The firewall rules must be cleared; otherwise the compute node, and likewise the network node, cannot communicate with the controller node.

Clear the firewall rules:

[root@controller0~]# vi /etc/sysconfig/iptables

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

COMMIT

Restart the firewall and check that the change took effect:

[root@controller0~]# service iptables restart

[root@controller0~]# iptables -L

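1.2.7 Creating the Template

Delete the persistent network rules so that clones, which receive new MAC addresses, still name their interfaces eth0 to eth3, then shut the VM down:

[root@controller0~]# rm -r /etc/udev/rules.d/70-persistent-net.rules

[root@controller0~]# shutdown -h 0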

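1.3 Basic Service Installation and Configuration (controller0 node)

Clone a new VM from the finished template and configure it as the controller node.

1.3.1 Controller Node Setup (controller0)

Set the hostname: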

[root@controller0~]# vi /etc/sysconfig/network

HOSTNAME=controller0

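The interface and network configuration files would normally be edited next, followed by a restart of the network service. Because this VM was cloned from the template configured above, neither step is needed here.

1.3.2 Basic Service Installation and Configuration (controller0 node)

Install MySQL: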

[root@controller0~]# yum install -y mysql mysql-server MySQL-python

Start MySQL and enable it at boot:

[root@controller0~]# service mysqld start

[root@controller0~]# chkconfig mysqld on

Set the MySQL root password interactively; this guide uses "openstack":

[root@controller0~]# mysql_secure_installation

Set root password? [Y/n] y (then enter the password chosen for MySQL; this guide uses openstack)

Remove anonymous users? [Y/n] y

Disallow root login remotely? [Y/n] n

Remove test database and access to it? [Y/n] n

Reload privilege tables now? [Y/n] y

Check the databases:

[root@controller0~]# mysql -uroot -popenstack

mysql> show databases;

mysql> exit

Install the Qpid message broker and allow clients to use it without authentication:

[root@controller0~]# yum install -y qpid-cpp-server

[root@controller0~]# vi /etc/qpidd.conf

auth=no

After changing the configuration, start the Qpid daemon and enable it at boot:

[root@controller0~]# service qpidd start

[root@controller0~]# chkconfig qpidd on

1.4 Keystone Installation and Configuration

1.4.1 Installing Keystone and Configuring Its Database

Install the Keystone packages:

[root@controller0~]# yum install openstack-keystone python-keystoneclient -y

Set the token for the Keystone admin account:

[root@controller0~]# ADMIN_TOKEN=$(openssl rand -hex 10)

[root@controller0~]# echo $ADMIN_TOKEN

[root@controller0~]# openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token $ADMIN_TOKEN
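The token is an ordinary shell variable, so it exists only in the current session, and a malformed value would be written into keystone.conf without complaint. The sketch below checks the token before it is used. The od-based fallback and the format check are assumptions added for illustration; the guide itself relies on openssl only.

```shell
#!/bin/sh
# Generate a 10-byte random token rendered as 20 hexadecimal characters.
# openssl is what the guide uses; the od fallback is an assumption for hosts
# where openssl is not installed.
if command -v openssl >/dev/null 2>&1; then
    ADMIN_TOKEN="$(openssl rand -hex 10)"
else
    ADMIN_TOKEN="$(head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n')"
fi

# Sanity check before the value is written into keystone.conf.
case "$ADMIN_TOKEN" in
    *[!0-9a-f]*|"") echo "unexpected token format" >&2 ;;
    *) echo "token length: ${#ADMIN_TOKEN}" ;;
esac
```

If the check passes, the openstack-config command shown above can store the value in keystone.conf, from where it can be read back in a later session.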

Configure the database connection:

[root@controller0~]# openstack-config --set /etc/keystone/keystone.conf sql connection mysql://keystone:openstack@controller0/keystone

Configure Keystone to use PKI tokens:

[root@controller0~]# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

[root@controller0~]# chown -R keystone:keystone /etc/keystone/ssl

[root@controller0~]# chmod -R o-rwx /etc/keystone/ssl

1.4.2 Basic Keystone Operations

1.4.2.1 Creating the Keystone Tables

Initialize the database with the openstack-db tool:

[root@controller0~]# openstack-db --init --service keystone --password openstack

1.4.2.2 Problems Creating the Keystone Tables and How to Fix Them

Running this command may produce the following error:

IOError: [Errno 13] Permission denied: '/var/log/keystone/keystone.log'

Error updating the database. Please see /var/log/keystone/ logs for details.

To fix it:

First delete the log file:

[root@controller0~]# rm /var/log/keystone/keystone.log

Then drop the Keystone database:

[root@controller0~]# /usr/bin/openstack-db --drop --service keystone

Then initialize it again:

[root@controller0~]# openstack-db --init --service keystone --password openstack

1.4.2.3 Starting Keystone and Enabling It at Boot

[root@controller0~]# service openstack-keystone start

[root@controller0~]# chkconfig openstack-keystone on

Check that Keystone is running by listing its processes:

[root@controller0~]# ps -ef | grep keystone

1.4.2.4 Setting the Authentication Variables

[root@controller0~]# export OS_SERVICE_TOKEN=`echo $ADMIN_TOKEN`

If ADMIN_TOKEN is no longer set in the current shell, look up its value in the configuration file:

[root@controller0~]# vi /etc/keystone/keystone.conf

The value is on the admin_token = line.

[root@controller0~]# export OS_SERVICE_ENDPOINT=http://controller0:35357/v2.0

1.4.3 Creating Keystone Tenants, Users, and Roles

Create the tenants used by the administrator and by the system services:

[root@controller0~]# keystone tenant-create --name=admin --description="Admin Tenant"

[root@controller0~]# keystone tenant-create --name=service --description="Service Tenant"

List the tenants:

[root@controller0~]# keystone tenant-list

Create the admin user:

[root@controller0~]# keystone user-create --name=admin --pass=admin --email=admin@example.com

List the users:

[root@controller0~]# keystone user-list

Create the admin role:

[root@controller0~]# keystone role-create --name=admin

List the roles:

[root@controller0~]# keystone role-list

Assign the admin role to the admin user in the admin tenant:

[root@controller0~]# keystone user-role-add --user=admin --tenant=admin --role=admin

1.4.4 Registering the Keystone Service

Create the service entry for Keystone:

[root@controller0~]# keystone service-create --name=keystone --type=identity --description="Keystone Identity Service"

List the services:

[root@controller0~]# keystone service-list

Create the endpoint and associate it with the Keystone service:

[root@controller0~]# keystone endpoint-create \

--service-id=$(keystone service-list | awk '/ identity / {print $2}') \

--publicurl=http://controller0:5000/v2.0 \

--internalurl=http://controller0:5000/v2.0 \

--adminurl=http://controller0:35357/v2.0
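The awk expression in the --service-id argument picks the service ID out of the table printed by keystone service-list. The sketch below runs the same expression against a sample table so the extraction can be seen in isolation. The table layout follows the usual client output, but the ID value is made up for illustration.

```shell
#!/bin/sh
# Hypothetical sample of the table printed by `keystone service-list`;
# the id value is invented for this example.
sample_output='+----------------------------------+----------+----------+---------------------------+
|                id                |   name   |   type   |        description        |
+----------------------------------+----------+----------+---------------------------+
| 15c11a23667e427e91bc31335b45f4bd | keystone | identity | Keystone Identity Service |
+----------------------------------+----------+----------+---------------------------+'

# awk splits on whitespace, so $1 is the leading "|" and $2 is the id.
# The pattern / identity / (with surrounding spaces) matches the type column;
# "Identity" in the description does not match because awk is case-sensitive.
service_id="$(printf '%s\n' "$sample_output" | awk '/ identity / {print $2}')"
echo "$service_id"
```

The quotes around the awk program must be plain ASCII single quotes; typographic quotes pasted from a web page make the shell pass a broken program to awk.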

1.4.5 Verifying the Keystone Installation

Unset the token variables set earlier; otherwise they interfere with authenticating as the new user:

[root@controller0~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

First verify from the command line:

[root@controller0~]# keystone --os-username=admin --os-password=admin --os-auth-url=http://controller0:35357/v2.0 token-get

[root@controller0~]# keystone --os-username=admin --os-password=admin --os-tenant-name=admin --os-auth-url=http://controller0:35357/v2.0 token-get

Then authenticate through environment variables. Save the credentials to a file:

[root@controller0~]# vi ~/keystonerc

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_TENANT_NAME=admin

export OS_AUTH_URL=http://controller0:35357/v2.0

Source the file to load the variables:

[root@controller0~]# source ~/keystonerc

[root@controller0~]# keystone token-get
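The same credentials file can be written without an editor, which is convenient when the steps are scripted. The following is a sketch under stated assumptions: RC_FILE is a hypothetical variable that defaults to a temporary path so the example can be tried safely, whereas the guide itself uses ~/keystonerc.

```shell
#!/bin/sh
# Sketch: write the credentials file non-interactively and load it.
# RC_FILE defaults to a scratch path; set RC_FILE="$HOME/keystonerc" to
# match the guide.
RC_FILE="${RC_FILE:-$(mktemp)}"

cat > "$RC_FILE" <<'EOF'
export OS_USERNAME=admin
export OS_PASSWORD=admin
export OS_TENANT_NAME=admin
export OS_AUTH_URL=http://controller0:35357/v2.0
EOF

# Clear the bootstrap token variables so they do not override the credentials.
unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

# "." is the POSIX spelling of "source".
. "$RC_FILE"
echo "authenticating as $OS_USERNAME against $OS_AUTH_URL"
```

Because the file stores the password in plain text, restricting it with chmod 600 is a reasonable precaution on a shared machine.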

1.4.6 Handling keystone token-get Errors

If the VM is rebooted after Keystone has been installed successfully, keystone token-get reports the following error:

[root@controller0~]# keystone token-get

WARNING: Bypassing authentication using a token & endpoint (authentication credentials are being ignored).

'NoneType' object has no attribute 'has_service_catalog'

To fix it, run the following command:

[root@controller0~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

Then run the command again:

[root@controller0~]# keystone token-get

1.5 Common Keystone Operations

1.5.1 Service Commands (see keystone help <command>)

keystone service-list | service-get | service-create | service-delete

1.5.2 Endpoint Commands

keystone endpoint-list | endpoint-get | endpoint-create | endpoint-delete

1.5.3 Tenant Commands

keystone tenant-create | tenant-delete | tenant-get | tenant-list | tenant-update

1.5.4 User Commands

keystone user-create | user-get | user-password-update | user-role-list | user-update | user-delete | user-list | user-role-add | user-role-remove

1.5.5 Role Commands

keystone role-create | role-delete | role-get | role-list

2 Glance Installation and Configuration

2.1 Installing Glance and Configuring Its Database

Install the Glance packages:

[root@controller0~]# yum install openstack-glance python-glanceclient -y

Configure the Glance database connection:

[root@controller0~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT sql_connection mysql://glance:openstack@controller0/glance

[root@controller0~]# openstack-config --set /etc/glance/glance-registry.conf DEFAULT sql_connection mysql://glance:openstack@controller0/glance

Initialize the Glance database:

[root@controller0~]# openstack-db --init --service glance --password openstack

2.2 Creating the Glance User and Service

Create the Glance user:

[root@controller0~]# keystone user-create --name=glance --pass=glance --email=glance@example.com

Assign it the admin role in the service tenant:

[root@controller0~]# keystone user-role-add --user=glance --tenant=service --role=admin

Create the Glance service:

[root@controller0~]# keystone service-create --name=glance --type=image --description="Glance Image Service"

Create the Glance endpoint in Keystone:

[root@controller0~]# keystone endpoint-create \

--service-id=$(keystone service-list | awk '/ image / {print $2}') \

--publicurl=http://controller0:9292 \

--internalurl=http://controller0:9292 \

--adminurl=http://controller0:9292

Use openstack-config (from openstack-utils) to edit the Glance API and registry configuration files:

[root@controller0~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT debug True

openstack-config --set /etc/glance/glance-api.conf DEFAULT verbose True

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_user glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_password glance

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-registry.conf DEFAULT debug True

openstack-config --set /etc/glance/glance-registry.conf DEFAULT verbose True

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_user glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_password glance

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone
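The keystone_authtoken and paste_deploy settings above are identical for both files, so they can be applied in a loop. The sketch below is one way to do that, not the original author's method: set_opt and APPLY are hypothetical names, and by default the commands are only printed so the script can be reviewed anywhere.

```shell
#!/bin/sh
# Sketch: apply identical authentication settings to both Glance files.
# By default the commands are only printed; set APPLY=1 on the controller
# to actually run openstack-config.
set_opt() {
    if [ "${APPLY:-0}" = "1" ]; then
        openstack-config --set "$@"
    else
        echo "openstack-config --set $*"
    fi
}

for conf in /etc/glance/glance-api.conf /etc/glance/glance-registry.conf; do
    set_opt "$conf" keystone_authtoken auth_uri http://controller0:5000
    set_opt "$conf" keystone_authtoken auth_host controller0
    set_opt "$conf" keystone_authtoken auth_port 35357
    set_opt "$conf" keystone_authtoken auth_protocol http
    set_opt "$conf" keystone_authtoken admin_tenant_name service
    set_opt "$conf" keystone_authtoken admin_user glance
    set_opt "$conf" keystone_authtoken admin_password glance
    set_opt "$conf" paste_deploy flavor keystone
done
```

The same pattern fits the Nova and Neutron sections later in this guide, where the keystone_authtoken block is repeated on several nodes with only the user name and password changed.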

Start the two Glance services and enable them at boot:

[root@controller0~]# service openstack-glance-api start

[root@controller0~]# service openstack-glance-registry start

[root@controller0~]# chkconfig openstack-glance-api on

[root@controller0~]# chkconfig openstack-glance-registry on

Check that Glance is running:

[root@controller0~]# ps -ef | grep glance

2.3 Verifying the Glance Installation with a CirrOS Image

Download the image:

[root@controller0~]# wget https://download.cirros-cloud.net/0.3.2/cirros-0.3.2-x86_64-disk.img

Create the image:

[root@controller0~]# glance image-create --progress --name="CirrOS 0.3.2" --disk-format=qcow2 --container-format=ovf --is-public=true < cirros-0.3.2-x86_64-disk.img

List the image just uploaded:

[root@controller0~]# glance image-list

If the image information is displayed, the installation succeeded.

2.4 Glance Image Management Examples

The basic Glance operations are listed by the help command:

[root@controller0~]# glance help

For example, show the details of an image:

[root@controller0~]# glance image-show <image ID>

List the tenants that can see an image:

[root@controller0~]# glance member-list --image-id <image ID>

[root@controller0~]# glance member-list --tenant-id <tenant ID>

Share an image with a tenant:

[root@controller0~]# glance member-add <image ID> <tenant ID>

Check the result:

[root@controller0~]# glance member-list --image-id <image ID>

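3 Nova Installation and Configuration

3.1 Changing the NTP Server Setting Made Earlier

Edit ntp.conf so that controller0 uses its local clock (127.127.1.0) as the time source: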

[root@controller0~]# vi /etc/ntp.conf

server 127.127.1.0

Restart the NTP service:

[root@controller0~]# /etc/init.d/ntpd restart

List the NTP peers:

[root@controller0~]# ntpq -p

3.2 Installing Nova

3.2.1 Installing the Nova Packages and Creating the User and Service

Install the Nova packages:

[root@controller0~]# yum install -y openstack-nova-api openstack-nova-cert openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient

Create the Nova user in Keystone and assign its role:

[root@controller0~]# keystone user-create --name=nova --pass=nova --email=nova@example.com

[root@controller0~]# keystone user-role-add --user=nova --tenant=service --role=admin

Register the service in Keystone:

[root@controller0~]# keystone service-create --name=nova --type=compute --description="Nova Compute Service"

Register the endpoint in Keystone:

[root@controller0~]# keystone endpoint-create \

--service-id=$(keystone service-list | awk '/ compute / {print $2}') \

--publicurl=http://controller0:8774/v2/%\(tenant_id\)s \

--internalurl=http://controller0:8774/v2/%\(tenant_id\)s \

--adminurl=http://controller0:8774/v2/%\(tenant_id\)s

3.2.2 Configuring the Nova Database Connection and Keystone Authentication

Configure the Nova MySQL connection:

[root@controller0~]# openstack-config --set /etc/nova/nova.conf database connection mysql://nova:openstack@controller0/nova

Initialize the database:

[root@controller0~]# openstack-db --init --service nova --password openstack

Configure nova.conf:

[root@controller0~]# openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend qpid

openstack-config --set /etc/nova/nova.conf DEFAULT qpid_hostname controller0

[root@controller0~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.20.0.10

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_listen 10.20.0.10

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_proxyclient_address 10.20.0.10

[root@controller0~]# openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_user nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_password nova

Add the Keystone authentication settings to api-paste.ini:

[root@controller0~]# openstack-config --set /etc/nova/api-paste.ini filter:authtoken paste.filter_factory keystoneclient.middleware.auth_token:filter_factory

openstack-config --set /etc/nova/api-paste.ini filter:authtoken auth_host controller0

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_tenant_name service

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_user nova

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_password nova

3.2.3 Starting the Services and Enabling Them at Boot

Start the services:

[root@controller0~]# service openstack-nova-api start

service openstack-nova-cert start

service openstack-nova-consoleauth start

service openstack-nova-scheduler start

service openstack-nova-conductor start

service openstack-nova-novncproxy start

Enable them at boot:

[root@controller0~]# chkconfig openstack-nova-api on

chkconfig openstack-nova-cert on

chkconfig openstack-nova-consoleauth on

chkconfig openstack-nova-scheduler on

chkconfig openstack-nova-conductor on

chkconfig openstack-nova-novncproxy on

3.2.4对Nova 服务进行检查

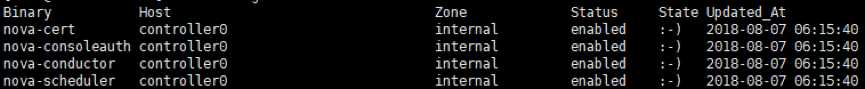

检查服务是否正常:

[root@controller0~]# nova-manage service list

查询所启动的服务有:

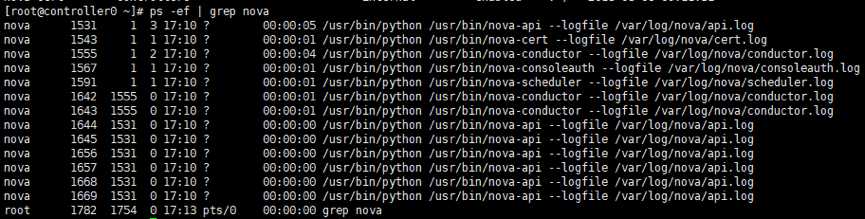

检查进程:

[root@controller0 ~]# ps -ef|grep nova

查询所启动的服务有:

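3.3 Basic Configuration of compute0

3.3.1 Cloning the compute0 VM

Clone a new VM from the template created earlier, name it compute0, and then change its interface configuration and hostname.

Change the interface configuration: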

[root@compute0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

IPADDR=10.20.0.30

Restart networking to apply the change:

[root@compute0 ~]# service network restart

Change the hostname:

[root@compute0 ~]# vi /etc/sysconfig/network

HOSTNAME=compute0

Reboot the machine so that the new hostname takes effect:

[root@compute0 ~]# reboot

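The network service must be restarted after every change to the interface configuration, and the host must be rebooted after the hostname is changed.

3.3.2 Installing and Configuring the Compute Node

Install the Nova packages: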

[root@compute0 ~]# yum install -y openstack-nova-compute

Configure the Nova database connection:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf database connection mysql://nova:openstack@controller0/nova

Configure Keystone authentication for Nova:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_user nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_password nova

Configure the Nova message service:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend qpid

openstack-config --set /etc/nova/nova.conf DEFAULT qpid_hostname controller0

Configure the Nova VNC server:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.20.0.30

openstack-config --set /etc/nova/nova.conf DEFAULT vnc_enabled True

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_proxyclient_address 10.20.0.30

openstack-config --set /etc/nova/nova.conf DEFAULT novncproxy_base_url http://controller0:6080/vnc_auto.html

Select the hypervisor type for compute0:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu

Set the location of the Glance host:

[root@compute0 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT glance_host controller0

3.3.3 Synchronizing with the NTP Server

Set the IP address to synchronize from:

[root@compute0 ~]# vi /etc/ntp.conf

fudge 10.20.0.10 stratum 10 # LCL is unsynchronized

Synchronize with the NTP server:

[root@compute0 ~]# ntpdate -u 10.20.0.10

Restart the NTP service:

[root@compute0 ~]# /etc/init.d/ntpd restart

Check synchronization:

[root@compute0 ~]# ntpq -p

3.3.4 Starting the Compute Node Services and Enabling Them at Boot

Start the compute node services:

[root@compute0 ~]# service libvirtd start

service messagebus start

service openstack-nova-compute start

Enable the compute node services at boot:

[root@compute0 ~]# chkconfig libvirtd on

chkconfig messagebus on

chkconfig openstack-nova-compute on

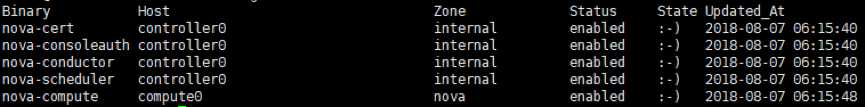

在 controller 节点检查 compute 服务是否启动:

[root@controller0 ~]# nova-manage service list

所检查结果如下所示,可以看出多出计算节点服务:

4 Neutron Server Installation and Configuration

4.1 Installing Neutron

4.1.1 Installing the Neutron Packages and Creating the User and Service

Install the Neutron server packages:

[root@controller0 ~]# yum install -y openstack-neutron openstack-neutron-ml2 python-neutronclient

Create the Neutron user and service in Keystone:

[root@controller0 ~]# keystone user-create --name neutron --pass neutron --email neutron@example.com

[root@controller0 ~]# keystone user-role-add --user neutron --tenant service --role admin

[root@controller0 ~]# keystone service-create --name neutron --type network --description "OpenStack Networking"

[root@controller0 ~]# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ network / {print $2}') \

--publicurl http://controller0:9696 \

--adminurl http://controller0:9696 \

--internalurl http://controller0:9696

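4.1.2 Changing the MySQL Configuration

Change the MySQL configuration before configuring the database connection. Otherwise the Neutron database import fails with missing tables, leaving the Neutron database unusable.

Edit the MySQL configuration: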

[root@controller0~]# vi /etc/my.cnf

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

Restart MySQL after the change:

[root@controller0~]# /etc/init.d/mysqld restart

4.1.3 Configuring the Neutron Database Connection and Keystone Authentication

Create the Neutron database in MySQL:

[root@controller0~]# mysql -uroot -popenstack -e "CREATE DATABASE neutron;"

mysql -uroot -popenstack -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'openstack';"

mysql -uroot -popenstack -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'openstack';"

mysql -uroot -popenstack -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'controller0' IDENTIFIED BY 'openstack';"
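The database and its three grants follow a fixed pattern, so the statements can be generated and reviewed before they are sent to MySQL. The sketch below only prints SQL. grant_sql is a hypothetical helper, and it assumes, as in this guide, that the database user has the same name as the database.

```shell
#!/bin/sh
# Sketch: print the CREATE and GRANT statements for one service database.
# This does not connect to MySQL; it only writes SQL to standard output.
grant_sql() {
    db="$1"
    password="$2"
    echo "CREATE DATABASE $db;"
    for host in localhost % controller0; do
        echo "GRANT ALL PRIVILEGES ON $db.* TO '$db'@'$host' IDENTIFIED BY '$password';"
    done
}

grant_sql neutron openstack
```

Once the output looks right, it could be piped into the mysql client, for example with mysql -uroot -popenstack reading from standard input.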

Configure the Neutron database connection:

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf database connection mysql://neutron:openstack@controller0/neutron

Configure Keystone authentication for Neutron:

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_user neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_password neutron

Configure Qpid for Neutron:

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend neutron.openstack.common.rpc.impl_qpid

openstack-config --set /etc/neutron/neutron.conf DEFAULT qpid_hostname controller0

Configure Neutron to notify Nova of port changes:

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_url http://controller0:8774/v2

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_admin_username nova

openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_admin_tenant_id $(keystone tenant-list | awk '/ service / { print $2 }')

openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_admin_password nova

openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_admin_auth_url http://controller0:35357/v2.0

Configure the Neutron ML2 plugin with Open vSwitch:

First create a symbolic link to the plugin configuration at /etc/neutron/plugin.ini:

[root@controller0~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

[root@controller0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

Configure ML2 and the Open vSwitch mechanism driver:

[root@controller0~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers openvswitch

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre tunnel_id_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_security_group True

Configure Nova to use Neutron as its network service:

[root@controller0~]# openstack-config --set /etc/nova/nova.conf DEFAULT network_api_class nova.network.neutronv2.api.API

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_url http://controller0:9696

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_tenant_name service

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_username neutron

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_password neutron

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_auth_url http://controller0:35357/v2.0

openstack-config --set /etc/nova/nova.conf DEFAULT linuxnet_interface_driver nova.network.linux_net.LinuxOVSInterfaceDriver

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT security_group_api neutron

4.1.4 Starting the Services and Enabling Them at Boot

Restart the Nova services on the controller:

[root@controller0~]# service openstack-nova-api restart

[root@controller0~]# service openstack-nova-scheduler restart

[root@controller0~]# service openstack-nova-conductor restart

Start the Neutron server:

[root@controller0~]# service neutron-server start

[root@controller0~]# chkconfig neutron-server on

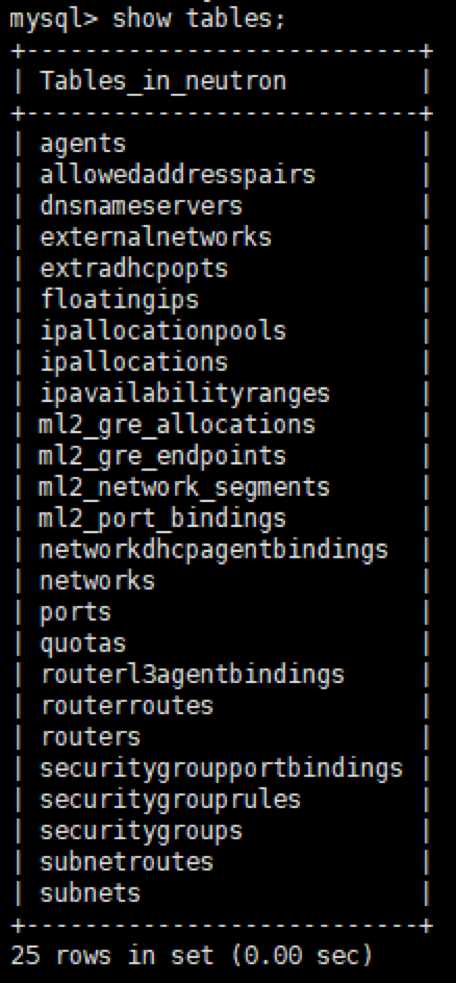

查看 Neutron 所创建的表是否成功:

[root@controller0~]# mysql -uroot-popenstack

[root@controller0~]# use neutron

[root@controller0~]# show tables;

[root@controller0~]# exit

所创建的表如下:

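4.2 Basic Configuration of network0

4.2.1 Cloning the network0 VM

Clone a new VM from the template created earlier, name it network0, and then change its interface configuration and hostname.

Change the interface configuration: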

[root@network0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

IPADDR=10.20.0.20

Restart networking to apply the change:

[root@network0 ~]# service network restart

Change the hostname:

[root@network0 ~]# vi /etc/sysconfig/network

HOSTNAME=network0

Reboot the machine so that the new hostname takes effect:

[root@network0 ~]# reboot

4.2.2 Network Node Interface Configuration

eth1 configuration:

[root@network0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=172.16.0.20

NETMASK=255.255.255.0

eth2 configuration:

[root@network0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=192.168.4.20

NETMASK=255.255.255.0

Restart the network service after editing the configuration files:

[root@network0 ~]# service network restart

4.2.3 Synchronizing with the NTP Server

Set the IP address to synchronize from:

[root@network0 ~]# vi /etc/ntp.conf

fudge 10.20.0.10 stratum 10 # LCL is unsynchronized

Synchronize with the NTP server:

[root@network0 ~]# ntpdate -u 10.20.0.10

Restart the NTP service:

[root@network0 ~]# /etc/init.d/ntpd restart

Check synchronization:

[root@network0 ~]# ntpq -p

4.2.4 Installing and Configuring the Network Node

First install the Neutron packages:

[root@network0 ~]# yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

Enable IP forwarding (after saving the file, running sysctl -p applies the settings without a reboot):

[root@network0 ~]# vi /etc/sysctl.conf

net.ipv4.ip_forward=1

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

Configure Keystone authentication for Neutron:

[root@network0 ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_user neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_password neutron

Configure the message service:

[root@network0 ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend neutron.openstack.common.rpc.impl_qpid

openstack-config --set /etc/neutron/neutron.conf DEFAULT qpid_hostname controller0

Configure Neutron to use ML2 with Open vSwitch and GRE:

[root@network0 ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

[root@network0 ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers openvswitch

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre tunnel_id_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs local_ip 192.168.4.20

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs tunnel_type gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs enable_tunneling True

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_security_group True

[root@network0 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@network0 ~]# cp /etc/init.d/neutron-openvswitch-agent /etc/init.d/neutron-openvswitch-agent.orig

[root@network0 ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /etc/init.d/neutron-openvswitch-agent

Configure the L3 agent:

[root@network0 ~]# openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT use_namespaces True

Configure the DHCP agent:

[root@network0 ~]# openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT use_namespaces True

Configure the metadata agent:

[root@network0 ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT auth_url http://controller0:5000/v2.0

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT auth_region regionOne

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_tenant_name service

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_user neutron

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_password neutron

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip controller0

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

Start Open vSwitch and enable it at boot:

[root@network0 ~]# service openvswitch start

[root@network0 ~]# chkconfig openvswitch on

Create the bridges and add eth1 as a port on br-ex:

[root@network0 ~]# ovs-vsctl add-br br-int

[root@network0 ~]# ovs-vsctl add-br br-ex

[root@network0 ~]# ovs-vsctl add-port br-ex eth1

Edit the network configuration of eth1 and br-ex:

[root@network0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

ONBOOT=yes

BOOTPROTO=none

PROMISC=yes

[root@network0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-br-ex

DEVICE=br-ex

TYPE=Bridge

ONBOOT=no

BOOTPROTO=none

Restart the network service:

[root@network0 ~]# service network restart

Bring up the br-ex interface:

[root@network0 ~]# ifconfig br-ex up

Add an IP address to br-ex:

[root@network0 ~]# ip addr add 172.16.0.20/24 dev br-ex

Start the Neutron services and enable them at boot:

[root@network0 ~]# service neutron-openvswitch-agent start

[root@network0 ~]# service neutron-l3-agent start

[root@network0 ~]# service neutron-dhcp-agent start

[root@network0 ~]# service neutron-metadata-agent start

[root@network0 ~]# chkconfig neutron-openvswitch-agent on

[root@network0 ~]# chkconfig neutron-l3-agent on

[root@network0 ~]# chkconfig neutron-dhcp-agent on

[root@network0 ~]# chkconfig neutron-metadata-agent on

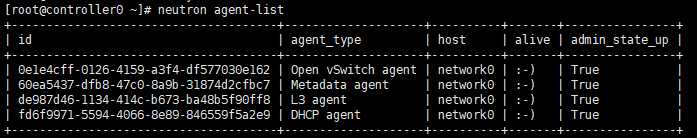

On the controller node, list the agents:

[root@controller0~]# neutron agent-list

Check the running processes to confirm that the services started:

[root@network0 ~]# ps -ef | grep neutron
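The agent list can also be checked from a script. The sketch below counts agents whose `alive` column shows `xxx`. It assumes the five-column table layout (id, agent_type, host, alive, admin_state_up) printed by the Icehouse-era `neutron agent-list`; `count_dead_agents` is a hypothetical helper name, not part of any OpenStack tool.

```shell
# Hypothetical helper: reads an agent table on stdin and prints how many
# rows have "xxx" in the fourth data column (alive).
# Assumes rows look like: | id | agent_type | host | alive | admin_state_up |
count_dead_agents() {
    awk -F'|' '
        NF >= 6 {
            alive = $5
            gsub(/[ \t]/, "", alive)   # strip padding around the cell
            if (alive == "xxx") n++
        }
        END { print n + 0 }
    '
}

# Intended use on the controller (not executed here):
#   neutron agent-list | count_dead_agents
```

A result of 0 means every listed agent reported in as alive.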

4.2.5 Verify network connectivity

Configure the eth1 interface on the controller node:

[root@controller0~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=172.16.0.10

NETMASK=255.255.255.0

Restart the network service:

[root@controller0~]# service network restart

Ping 172.16.0.20 and check the result:

[root@controller0~]# ping 172.16.0.20

4.3 Install and configure Neutron on the compute node

Install the Neutron ML2 plugin and the Open vSwitch agent:

[root@compute0~]# yum install openstack-neutron-ml2 openstack-neutron-openvswitch -y

Configure Keystone authentication for Neutron:

[root@compute0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_user neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_password neutron

Configure qpid for Neutron:

[root@compute0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend neutron.openstack.common.rpc.impl_qpid

openstack-config --set /etc/neutron/neutron.conf DEFAULT qpid_hostname controller0

Configure Neutron to use ML2 with Open vSwitch and GRE:

[root@compute0~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

[root@compute0~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers openvswitch

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre tunnel_id_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs local_ip 192.168.4.30

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs tunnel_type gre

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs enable_tunneling True

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_security_group True

[root@compute0~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@compute0~]# cp /etc/init.d/neutron-openvswitch-agent /etc/init.d/neutron-openvswitch-agent.orig

[root@compute0~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /etc/init.d/neutron-openvswitch-agent
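After a long run of `openstack-config --set` calls it can be worth reading the values back. The sketch below is a small awk-based reader for INI files; `ini_get` is a hypothetical helper written for this illustration and handles only simple `key = value` lines inside `[section]` headers.

```shell
# Hypothetical helper: print the value of KEY in SECTION of an INI file.
# usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        # Section header: remember the current section name.
        /^[ \t]*\[/ {
            gsub(/^[ \t]*\[|\][ \t]*$/, "")
            current = $0
            next
        }
        current == section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Intended use on compute0 (not executed here):
#   ini_get /etc/neutron/plugins/ml2/ml2_conf.ini ovs local_ip
```

On compute0 the `local_ip` read back this way should be 192.168.4.30, the address set above; on network0 it should be 192.168.4.20.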

Configure Nova to use Neutron for networking:

[root@compute0~]# openstack-config --set /etc/nova/nova.conf DEFAULT network_api_class nova.network.neutronv2.api.API

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_url http://controller0:9696

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_tenant_name service

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_username neutron

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_password neutron

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_auth_url http://controller0:35357/v2.0

openstack-config --set /etc/nova/nova.conf DEFAULT linuxnet_interface_driver nova.network.linux_net.LinuxOVSInterfaceDriver

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT security_group_api neutron

[root@compute0~]# openstack-config --set /etc/nova/nova.conf DEFAULT service_neutron_metadata_proxy true

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_metadata_proxy_shared_secret METADATA_SECRET

[root@compute0~]# service openvswitch start

[root@compute0~]# chkconfig openvswitch on

[root@compute0~]# ovs-vsctl add-br br-int

[root@compute0~]# service openvswitch restart

Restart the Nova compute service, then start the Neutron Open vSwitch agent and enable it at boot:

[root@compute0~]# service openstack-nova-compute restart

[root@compute0~]# service neutron-openvswitch-agent start

[root@compute0~]# chkconfig neutron-openvswitch-agent on

Restart the messagebus service:

[root@compute0~]# /etc/init.d/messagebus restart

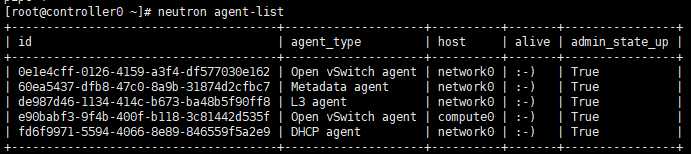

On the controller node, check that the agents started correctly:

[root@controller0~]# neutron agent-list

4.4 Create the initial networks

Create the external network:

[root@controller0~]# neutron net-create ext-net --shared --router:external=True

Add a subnet to the external network:

[root@controller0~]# neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=172.16.0.100,end=172.16.0.200 \
--disable-dhcp --gateway 172.16.0.1 172.16.0.0/24
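The allocation pool bounds how many addresses on the external network Neutron can hand out for router gateways and floating IPs. As a quick arithmetic check, the sketch below counts the addresses in a pool. `pool_size` is a hypothetical helper and only works when both addresses lie in the same /24, as they do here.

```shell
# Hypothetical helper: number of addresses between START_IP and END_IP,
# inclusive. Assumes both addresses share the same first three octets.
# usage: pool_size START_IP END_IP
pool_size() {
    start_octet=${1##*.}   # last octet of the start address
    end_octet=${2##*.}     # last octet of the end address
    echo $(( end_octet - start_octet + 1 ))
}

pool_size 172.16.0.100 172.16.0.200   # prints 101
```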

To create the tenant network, first create the demo user and tenant and assign the role:

[root@controller0~]# keystone user-create --name=demo --pass=demo --email=demo@example.com

[root@controller0~]# keystone tenant-create --name=demo --description="Demo Tenant"

[root@controller0~]# keystone user-role-add --user=demo --role=_member_ --tenant=demo

Create a new openrc file and edit it:

[root@controller0~]# cp keystonerc demo-openrc

[root@controller0~]# vi demo-openrc

export OS_USERNAME=demo

export OS_PASSWORD=demo

export OS_TENANT_NAME=demo

export OS_AUTH_URL=http://controller0:35357/v2.0

Switch to the demo user:

[root@controller0~]# . demo-openrc

Create the tenant network demo-net:

[root@controller0~]# neutron net-create demo-net

Add a subnet to the tenant network:

[root@controller0~]# neutron subnet-create demo-net --name demo-subnet --gateway 192.168.1.1 192.168.1.0/24

Create a router for the tenant network; it is connected to the external network in the steps that follow:

[root@controller0~]# neutron router-create demo-router

Attach demo-net to the router:

[root@controller0~]# neutron router-interface-add demo-router $(neutron net-show demo-net|awk '/ subnets / { print $4 }')
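The awk filter in the command above picks the subnet ID out of the `neutron net-show` table. The sketch below runs the same filter on a made-up table row to show which field it selects; the UUID is a placeholder and `extract_subnet_id` is a hypothetical helper name.

```shell
# Hypothetical helper: same awk program as in the command above.
# With whitespace splitting, a row "| subnets | <uuid> |" has fields
# $1="|", $2="subnets", $3="|", $4="<uuid>", so $4 is the subnet ID.
extract_subnet_id() {
    awk '/ subnets / { print $4 }'
}

# Illustrative rows (placeholder values, not real output).
printf '%s\n' \
    '| name    | demo-net                             |' \
    '| subnets | 11111111-2222-3333-4444-555555555555 |' \
    | extract_subnet_id
# prints: 11111111-2222-3333-4444-555555555555
```

The quotes around the awk program must be plain ASCII single quotes; typographic quotes pasted from a web page make the shell reject or misparse the command.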

Set the default gateway of demo-router:

[root@controller0~]# neutron router-gateway-set demo-router ext-net

Boot an instance:

[root@controller0~]# nova boot --flavor m1.tiny --image $(nova image-list|awk '/ CirrOS / { print $2 }') --nic net-id=$(neutron net-list|awk '/ demo-net / { print $2 }') --security-group default demo-instance1

Run the following command repeatedly to check the instance status:

[root@controller0~]# nova show <id>
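Instead of re-running the command by hand, the status check can be wrapped in a polling loop. This is a minimal sketch: `wait_for_active` and `instance_status` are hypothetical helper names, and the loop assumes the status command prints a single word such as BUILD, ACTIVE or ERROR.

```shell
# Hypothetical helper: run COMMAND up to MAX_TRIES times, sleeping
# SLEEP_SECONDS between attempts, until it prints ACTIVE.
# Returns 0 on ACTIVE, 1 on ERROR, 2 if the tries run out.
# usage: wait_for_active MAX_TRIES SLEEP_SECONDS COMMAND [ARGS...]
wait_for_active() {
    tries=$1
    delay=$2
    shift 2
    i=0
    while [ "$i" -lt "$tries" ]; do
        status=$("$@")
        [ "$status" = "ACTIVE" ] && return 0
        [ "$status" = "ERROR" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
    return 2
}

# Assumed wrapper: extract the status cell from the `nova show` table.
instance_status() {
    nova show "$1" | awk '/ status / { print $4 }'
}

# Intended use on the controller (not executed here):
#   wait_for_active 30 5 instance_status demo-instance1
```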

Check that the router's gateway address on the external network is reachable:

[root@controller0~]# ping 172.16.0.100

5 Cinder installation

5.1 Cinder controller installation

First install the Cinder API on the controller0 node:

[root@controller0~]# yum install openstack-cinder -y

Configure the Cinder database connection:

[root@controller0~]# openstack-config --set /etc/cinder/cinder.conf database connection mysql://cinder:openstack@controller0/cinder

Initialize the database with the openstack-db tool:

[root@controller0~]# openstack-db --init --service cinder --password openstack

Create the cinder service user in Keystone:

[root@controller0~]# keystone user-create --name=cinder --pass=cinder --email=cinder@example.com

Grant the cinder user the admin role in Keystone:

[root@controller0~]# keystone user-role-add --user=cinder --tenant=service --role=admin

Register a cinder service in Keystone:

[root@controller0~]# keystone service-create --name=cinder --type=volume --description="OpenStack Block Storage"

Create an endpoint for cinder:

[root@controller0~]# keystone endpoint-create \
--service-id=$(keystone service-list | awk '/ volume / {print $2}') \
--publicurl=http://controller0:8776/v1/%\(tenant_id\)s \
--internalurl=http://controller0:8776/v1/%\(tenant_id\)s \
--adminurl=http://controller0:8776/v1/%\(tenant_id\)s

Register a cinderv2 service in Keystone:

[root@controller0~]# keystone service-create --name=cinderv2 --type=volumev2 --description="OpenStack Block Storage v2"

Create an endpoint for cinderv2:

[root@controller0~]# keystone endpoint-create \
--service-id=$(keystone service-list | awk '/ volumev2 / {print $2}') \
--publicurl=http://controller0:8776/v2/%\(tenant_id\)s \
--internalurl=http://controller0:8776/v2/%\(tenant_id\)s \
--adminurl=http://controller0:8776/v2/%\(tenant_id\)s

Configure Keystone authentication for Cinder:

[root@controller0~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_user cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_password cinder

Configure qpid:

[root@controller0~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT rpc_backend cinder.openstack.common.rpc.impl_qpid

openstack-config --set /etc/cinder/cinder.conf DEFAULT qpid_hostname controller0

Start the Cinder controller services:

[root@controller0~]# service openstack-cinder-api start

[root@controller0~]# service openstack-cinder-scheduler start

Enable the Cinder controller services at boot:

[root@controller0~]# chkconfig openstack-cinder-api on

[root@controller0~]# chkconfig openstack-cinder-scheduler on

5.2 Cinder block storage node installation

5.2.1 Cloning the cinder0 virtual machine

Clone a new virtual machine from the template created earlier, name it cinder0, then change its network interface configuration and hostname.

Change the network interface configuration:

[root@cinder0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

IPADDR=10.20.0.40

Restart the network service for the change to take effect:

[root@cinder0 ~]# service network restart

Change the hostname:

[root@cinder0 ~]# vi /etc/sysconfig/network

HOSTNAME=cinder0

Reboot the machine for the hostname to take effect:

[root@cinder0 ~]# reboot

5.2.2 Update the common configuration

Edit the hosts file:

[root@cinder0 ~]# vi /etc/hosts

Add cinder0:

10.20.0.40 cinder0

Disable SELinux:

[root@cinder0 ~]# vi /etc/selinux/config

SELINUX=disabled

Check that the NTP server entry has been updated:

[root@cinder0 ~]# vi /etc/ntp.conf

server 10.20.0.10

fudge 10.20.0.10 stratum 10 # LCL is unsynchronized

Synchronize the time and check that the NTP configuration is correct:

[root@cinder0 ~]# ntpdate -u 10.20.0.10

[root@cinder0 ~]# service ntpd restart

[root@cinder0 ~]# ntpq -p

Clear the firewall rules:

[root@cinder0 ~]# vi /etc/sysconfig/iptables

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

COMMIT

Restart the firewall and check that the change took effect:

[root@cinder0 ~]# service iptables restart

[root@cinder0 ~]# iptables -L

5.2.3 Create a new disk for cinder0 to back block storage allocation

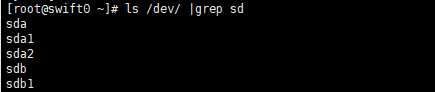

List the current disks on cinder0:

[root@cinder0 ~]# cd /dev/

[root@cinder0 ~]# ls |grep sd

sda

sda1

sda2

As shown, the cinder0 node currently has a single disk with two partitions.

Shut down the virtual machine before adding the disk:

[root@cinder0 ~]# shutdown -h 0

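Add the disk:

In the VirtualBox settings for the cinder0 virtual machine, open the storage configuration and add a virtual hard disk under Controller: SATA, with a size of 10 GB.

Start the virtual machine again and check that the disk was added: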

[root@cinder0 ~]# cd /dev/

[root@cinder0 ~]# ls |grep sd

sda

sda1

sda2

sdb

An additional disk, sdb, now appears, which shows that the disk was created successfully.

5.2.4 Configure the network interfaces of cinder0

Interface configuration:

[root@cinder0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=172.16.0.40

NETMASK=255.255.255.0

[root@cinder0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=192.168.4.40

NETMASK=255.255.255.0

After editing the network configuration files, restart the network service:

[root@cinder0 ~]# service network restart

5.3 Install the Cinder packages

[root@cinder0 ~]# yum install -y openstack-cinder scsi-target-utils

Create the LVM physical volume and volume group that back Cinder block storage:

[root@cinder0 ~]# pvcreate /dev/sdb

[root@cinder0 ~]# vgcreate cinder-volumes /dev/sdb

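Add a filter so that LVM scans only the listed devices and ignores everything else, including devices used by virtual machines: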

[root@cinder0 ~]# vi /etc/lvm/lvm.conf

devices {

...

filter = [ "a/sda1/", "a/sdb/", "r/.*/"]

...

}

Configure Keystone authentication:

[root@cinder0 ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller0:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_host controller0

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_user cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_tenant_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_password cinder

Configure qpid:

[root@cinder0 ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT rpc_backend cinder.openstack.common.rpc.impl_qpid

[root@cinder0 ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT qpid_hostname controller0

Configure the database connection:

[root@cinder0 ~]# openstack-config --set /etc/cinder/cinder.conf database connection mysql://cinder:openstack@controller0/cinder

Configure the Glance server:

[root@cinder0 ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT glance_host controller0

Configure the iSCSI target service to discover Block Storage volumes:

[root@cinder0 ~]# vi /etc/tgt/targets.conf

include /etc/cinder/volumes/*

Start the cinder-volume and tgtd services and enable them at boot:

[root@cinder0 ~]# service openstack-cinder-volume start

[root@cinder0 ~]# service tgtd start

[root@cinder0 ~]# chkconfig openstack-cinder-volume on

[root@cinder0 ~]# chkconfig tgtd on

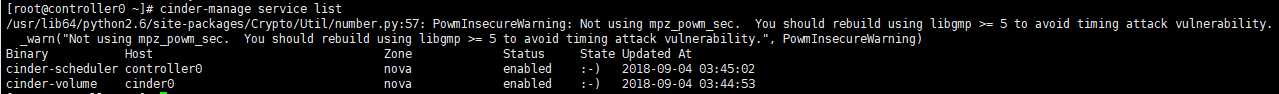

On the controller0 node, check that Cinder was installed successfully:

[root@controller0 ~]# cinder-manage service list

6 Swift installation

6.1 Install the storage node

6.1.1 Cloning the swift0 virtual machine

Clone a new virtual machine from the template created earlier, name it swift0, then change its network interface configuration and hostname.

Change the network interface configuration:

[root@swift0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

IPADDR=10.20.0.50

Restart the network service for the change to take effect:

[root@swift0 ~]# service network restart

Change the hostname:

[root@swift0 ~]# vi /etc/sysconfig/network

HOSTNAME=swift0

Reboot the machine for the hostname to take effect:

[root@swift0 ~]# reboot

6.1.2 Update the common configuration

Edit the hosts file:

[root@swift0 ~]# vi /etc/hosts

Add cinder0 and swift0:

10.20.0.40 cinder0

10.20.0.50 swift0

Disable SELinux:

[root@swift0 ~]# vi /etc/selinux/config

SELINUX=disabled

Check that the NTP server entry has been updated:

[root@swift0 ~]# vi /etc/ntp.conf

server 10.20.0.10

fudge 10.20.0.10 stratum 10 # LCL is unsynchronized

Synchronize the time and check that the NTP configuration is correct:

[root@swift0 ~]# ntpdate -u 10.20.0.10

[root@swift0 ~]# service ntpd restart

[root@swift0 ~]# ntpq -p

Clear the firewall rules:

[root@swift0 ~]# vi /etc/sysconfig/iptables

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

COMMIT

Restart the firewall and check that the change took effect:

[root@swift0 ~]# service iptables restart

[root@swift0 ~]# iptables -L

6.1.3 Create a new disk for swift0

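Add the disk:

In the VirtualBox settings for the swift0 virtual machine, open the storage configuration and add a virtual hard disk under Controller: SATA, with a size of 10 GB.

Check that the disk was added: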

[root@swift0 ~]# cd /dev/

[root@swift0 ~]# ls |grep sd

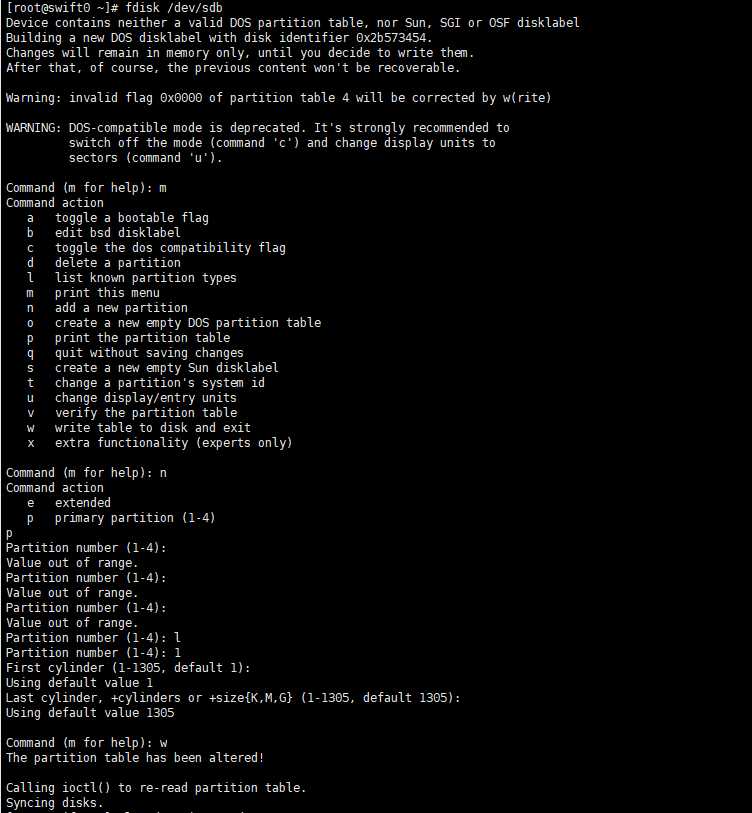

6.1.4 Once the disk is created, boot the OS and partition the new disk

Partition the disk:

[root@swift0 ~]# fdisk /dev/sdb

Check the disk after partitioning:

[root@swift0 ~]# ls /dev/ | grep sd

Create the file system:

[root@swift0 ~]# mkfs.xfs /dev/sdb1

Add it to fstab, then create the mount point, mount it and set its ownership:

[root@swift0 ~]# echo "/dev/sdb1 /srv/node/sdb1 xfs noatime,nodiratime,nobarrier,logbufs=8 0 0" >> /etc/fstab

[root@swift0 ~]# mkdir -p /srv/node/sdb1

[root@swift0 ~]# mount /srv/node/sdb1

[root@swift0 ~]# chown -R swift:swift /srv/node
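Appending to /etc/fstab with `echo ... >>` adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent alternative follows; `add_fstab_entry` is a hypothetical helper name, and it takes the target file as an argument so it can be tried on a scratch file first.

```shell
# Hypothetical helper: append ENTRY to FILE only if that exact line is
# not already present.
# usage: add_fstab_entry FILE "ENTRY"
add_fstab_entry() {
    grep -qxF "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Intended use on swift0 (not executed here):
#   add_fstab_entry /etc/fstab \
#     "/dev/sdb1 /srv/node/sdb1 xfs noatime,nodiratime,nobarrier,logbufs=8 0 0"
```

`grep -x` matches whole lines and `-F` treats the entry as a fixed string, so the commas and slashes in the mount options need no escaping.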

6.1.5 Configure the network interfaces of swift0

Interface configuration:

[root@swift0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=172.16.0.50

NETMASK=255.255.255.0

[root@swift0 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=192.168.4.50

NETMASK=255.255.255.0

After editing the network configuration files, restart the network service:

[root@swift0 ~]# service network restart

6.2 Install the Swift storage node packages

Install the required packages:

[root@swift0 ~]# yum install -y openstack-swift-account openstack-swift-container openstack-swift-object xfsprogs xinetd

Set bind_ip in the account server configuration file:

[root@swift0 ~]# openstack-config --set /etc/swift/account-server.conf DEFAULT bind_ip 10.20.0.50

Set bind_ip in the container server configuration file:

[root@swift0 ~]# openstack-config --set /etc/swift/container-server.conf DEFAULT bind_ip 10.20.0.50

Set bind_ip in the object server configuration file:

[root@swift0 ~]# openstack-config --set /etc/swift/object-server.conf DEFAULT bind_ip 10.20.0.50

Configure the directories to synchronize in the rsyncd configuration file:

[root@swift0 ~]# vi /etc/rsyncd.conf

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 192.168.4.50

[account]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/object.lock
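The three module sections above differ only in the module name and the lock file. As a sketch, they can be generated with a loop rather than typed three times; `print_rsync_modules` is a hypothetical helper name, and it writes to stdout so the result can be reviewed before it is placed in /etc/rsyncd.conf.

```shell
# Hypothetical helper: print the [account], [container] and [object]
# module sections with the same settings used above.
print_rsync_modules() {
    for module in account container object; do
        printf '[%s]\n' "$module"
        printf 'max connections = 2\n'
        printf 'path = /srv/node/\n'
        printf 'read only = false\n'
        printf 'lock file = /var/lock/%s.lock\n\n' "$module"
    done
}

print_rsync_modules
```

The global settings (uid, gid, log file, pid file, address) still have to be written at the top of the file by hand.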

[root@swift0 ~]# vi /etc/xinetd.d/rsync

disable = no

Start rsyncd (through xinetd) and enable it at boot:

[root@swift0 ~]# service xinetd start

[root@swift0 ~]# chkconfig xinetd on

Create the Swift recon cache directory and set its ownership:

[root@swift0 ~]# mkdir -p /var/swift/recon

[root@swift0 ~]# chown -R swift:swift /var/swift/recon

6.3 Install the swift-proxy service

Edit the hosts file:

[root@controller0 ~]# vi /etc/hosts

10.20.0.40 cinder0

10.20.0.50 swift0

Create a swift user in Keystone:

[root@controller0 ~]# keystone user-create --name=swift --pass=swift --email=swift@example.com

Grant the swift user the admin role:

[root@controller0 ~]# keystone user-role-add --user=swift --tenant=service --role=admin

Register an object storage service for Swift:

[root@controller0 ~]# keystone service-create --name=swift --type=object-store --description="OpenStack Object Storage"

Add an endpoint for Swift:

[root@controller0 ~]# keystone endpoint-create \
--service-id=$(keystone service-list | awk '/ object-store / {print $2}') \
--publicurl='http://controller0:8080/v1/AUTH_%(tenant_id)s' \
--internalurl='http://controller0:8080/v1/AUTH_%(tenant_id)s' \
--adminurl=http://controller0:8080

6.4 Install the swift-proxy packages

Install the required packages:

[root@controller0 ~]# yum install -y openstack-swift-proxy memcached python-swiftclient python-keystone-auth-token

Set up the configuration file, then copy it to every storage node:

[root@controller0 ~]# openstack-config --set /etc/swift/swift.conf swift-hash swift_hash_path_prefix xrfuniounenqjnw

[root@controller0 ~]# openstack-config --set /etc/swift/swift.conf swift-hash swift_hash_path_suffix fLIbertYgibbitZ

[root@controller0 ~]# scp /etc/swift/swift.conf root@10.20.0.50:/etc/swift/

Change the default listen address of memcached:

[root@controller0 ~]# vi /etc/sysconfig/memcached

OPTIONS="-l 10.20.0.10"

Start memcached and enable it at boot:

[root@controller0 ~]# service memcached restart

[root@controller0 ~]# chkconfig memcached on

Edit the proxy server configuration:

[root@controller0 ~]# vi /etc/swift/proxy-server.conf

[root@controller0 ~]# openstack-config --set /etc/swift/proxy-server.conf filter:keystone operator_roles Member,admin,swiftoperator

[root@controller0 ~]# openstack-config --set /etc/swift/proxy-server.conf filter:authtoken auth_host controller0

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken auth_port 35357

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_user swift

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_tenant_name service

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_password swift

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken delay_auth_decision true

Build the ring files:

[root@controller0 ~]# cd /etc/swift

[root@controller0 ~]# swift-ring-builder account.builder create 18 3 1

[root@controller0 ~]# swift-ring-builder container.builder create 18 3 1

[root@controller0 ~]# swift-ring-builder object.builder create 18 3 1
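In `create 18 3 1`, the three numbers are the partition power, the replica count, and the minimum number of hours before a partition may be moved again. The partition power is an exponent: the ring is split into 2 to that power partitions. The one-line sketch below does the arithmetic; `ring_partitions` is a hypothetical helper name.

```shell
# Hypothetical helper: number of ring partitions for a given partition power.
# usage: ring_partitions PART_POWER
ring_partitions() {
    echo $(( 1 << $1 ))
}

ring_partitions 18   # prints 262144
```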

Add the storage device to each ring:

[root@controller0 ~]# swift-ring-builder account.builder add z1-10.20.0.50:6002R10.20.0.50:6005/sdb1 100

[root@controller0 ~]# swift-ring-builder container.builder add z1-10.20.0.50:6001R10.20.0.50:6004/sdb1 100

[root@controller0 ~]# swift-ring-builder object.builder add z1-10.20.0.50:6000R10.20.0.50:6003/sdb1 100
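The device argument in the commands above packs several values into one string: zone, service IP and port, then `R` followed by the replication IP and port, then the device name. The sketch below assembles that string from its parts so the layout is easier to read; `ring_device` is a hypothetical helper name and assumes the replication IP equals the service IP, as it does in this guide.

```shell
# Hypothetical helper: build a swift-ring-builder device string.
# usage: ring_device ZONE IP PORT REPLICATION_PORT DEVICE
ring_device() {
    printf 'z%s-%s:%sR%s:%s/%s\n' "$1" "$2" "$3" "$2" "$4" "$5"
}

ring_device 1 10.20.0.50 6002 6005 sdb1
# prints: z1-10.20.0.50:6002R10.20.0.50:6005/sdb1
```

The trailing `100` in the original commands is the device weight and is passed as a separate argument.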

Check that the device was added to each ring, then rebalance the rings:

[root@controller0 ~]# swift-ring-builder account.builder

[root@controller0 ~]# swift-ring-builder container.builder

[root@controller0 ~]# swift-ring-builder object.builder

[root@controller0 ~]# swift-ring-builder account.builder rebalance

[root@controller0 ~]# swift-ring-builder container.builder rebalance

[root@controller0 ~]# swift-ring-builder object.builder rebalance

Copy the ring files to the storage node:

[root@controller0 ~]# scp *ring.gz root@10.20.0.50:/etc/swift/

Set the ownership of the Swift configuration files on the proxy server and the storage node:

[root@controller0 ~]# ssh root@10.20.0.50 "chown -R swift:swift /etc/swift"

[root@controller0 ~]# chown -R swift:swift /etc/swift

Start the proxy service on controller0 and enable it at boot:

[root@controller0 ~]# service openstack-swift-proxy start

[root@controller0 ~]# chkconfig openstack-swift-proxy on

Start the storage services on swift0:

[root@swift0 ~]# service openstack-swift-object start

[root@swift0 ~]# service openstack-swift-object-replicator start

[root@swift0 ~]# service openstack-swift-object-updater start

[root@swift0 ~]# service openstack-swift-object-auditor start

[root@swift0 ~]# service openstack-swift-container start

[root@swift0 ~]# service openstack-swift-container-replicator start

[root@swift0 ~]# service openstack-swift-container-updater start

[root@swift0 ~]# service openstack-swift-container-auditor start

[root@swift0 ~]# service openstack-swift-account start

[root@swift0 ~]# service openstack-swift-account-replicator start

[root@swift0 ~]# service openstack-swift-account-reaper start

[root@swift0 ~]# service openstack-swift-account-auditor start

Enable them at boot:

[root@swift0 ~]# chkconfig openstack-swift-object on

[root@swift0 ~]# chkconfig openstack-swift-object-replicator on

[root@swift0 ~]# chkconfig openstack-swift-object-updater on

[root@swift0 ~]# chkconfig openstack-swift-object-auditor on

[root@swift0 ~]# chkconfig openstack-swift-container on

[root@swift0 ~]# chkconfig openstack-swift-container-replicator on

[root@swift0 ~]# chkconfig openstack-swift-container-updater on

[root@swift0 ~]# chkconfig openstack-swift-container-auditor on

[root@swift0 ~]# chkconfig openstack-swift-account on

[root@swift0 ~]# chkconfig openstack-swift-account-replicator on

[root@swift0 ~]# chkconfig openstack-swift-account-reaper on

[root@swift0 ~]# chkconfig openstack-swift-account-auditor on
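The twelve start commands and twelve chkconfig commands above follow one pattern, so they can be driven by a loop. The sketch below is a dry run by default: it prints each command instead of executing it. `manage_swift_services` and the `RUN` variable are hypothetical names introduced for this illustration.

```shell
# Dry-run switch (assumed convention): when RUN is unset, commands are
# echoed rather than executed. Set RUN to an empty string to run them.
RUN=${RUN-echo}

# Hypothetical helper: start every Swift storage service and enable it
# at boot, using the same service names as the commands above.
manage_swift_services() {
    for svc in object object-replicator object-updater object-auditor \
               container container-replicator container-updater container-auditor \
               account account-replicator account-reaper account-auditor; do
        $RUN service "openstack-swift-${svc}" start
        $RUN chkconfig "openstack-swift-${svc}" on
    done
}

manage_swift_services
```

On swift0, review the printed commands first, then run `RUN=` followed by `manage_swift_services` to execute them for real.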

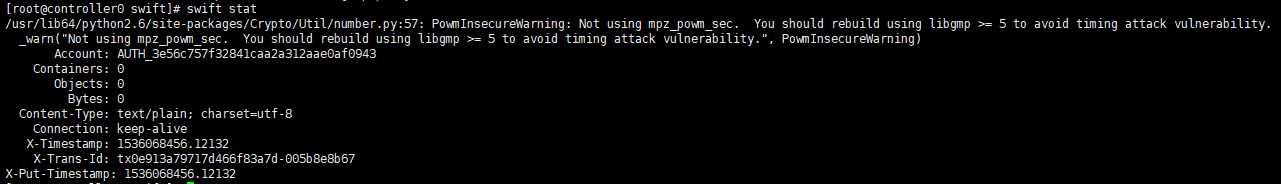

Verify the Swift installation on the controller node:

[root@controller0 ~]# swift stat

Upload two files as a test:

[root@controller0 ~]# swift upload myfiles test.txt

[root@controller0 ~]# swift upload myfiles test2.txt

Download the files that were just uploaded:

[root@controller0 ~]# swift download myfiles

7 Dashboard installation

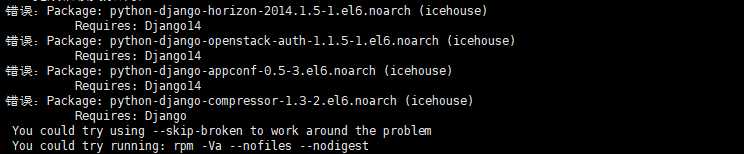

Install the Dashboard packages:

[root@controller0~]# yum install memcached python-memcached mod_wsgi openstack-dashboard

At this point a Django dependency error appears while the Dashboard packages are being installed.

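The error comes from the package repository: Django14 was available in earlier versions of EPEL but has since been removed. The fix is to install it manually with rpm, as follows: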

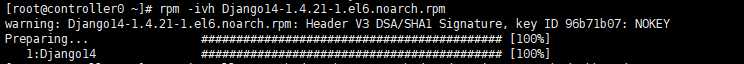

First download a Django14 package, then install the downloaded package manually.

Download the Django14 package:

[root@controller0~]# wget ftp://bo.mirror.garr.it/1/egi/production/umd/4/sl6/x86_64/updates/Django14-1.4.21-1.el6.noarch.rpm

Install the Django package:

[root@controller0~]# rpm -ivh Django14-1.4.21-1.el6.noarch.rpm

With the error resolved, continue installing the Dashboard packages:

[root@controller0~]# yum install memcached python-memcached mod_wsgi openstack-dashboard

Configure memcached: open the configuration file, find the corresponding settings and change them:

[root@controller0~]# vi /etc/openstack-dashboard/local_settings

DEBUG = True

ALLOWED_HOSTS = ['localhost']

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211'
    }
}

Configure the Keystone hostname:

[root@controller0~]# vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller0"

Start the Dashboard services and enable them at boot:

[root@controller0~]# service httpd start

[root@controller0~]# service memcached start

[root@controller0~]# chkconfig httpd on

[root@controller0~]# chkconfig memcached on

Open a browser to verify. Username: admin, password: admin

http://10.20.0.10/dashboard

This article is mainly based on: https://github.com/yongluo2013/osf-openstack-training/blob/master/installation/openstack-icehouse-for-centos65.md


Original article: https://www.cnblogs.com/chenjin2018/p/9600114.html