
Baidu Tieba: scraping comments from the 中国好声音 (The Voice of China) forum

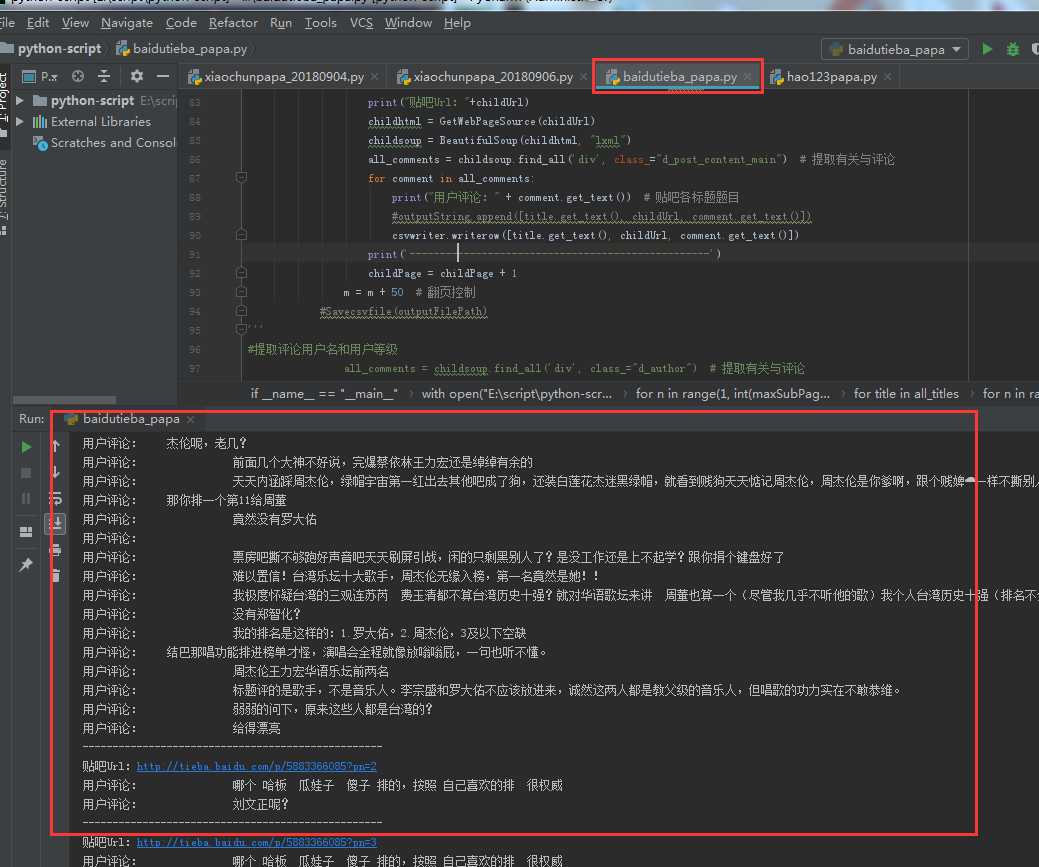

    # coding=utf-8
    import csv
    import http.cookiejar
    import urllib.request

    from bs4 import BeautifulSoup

    # URLs to crawl
    # homeUrl = "http://tieba.baidu.com"  # Tieba home page
    subUrl = "http://tieba.baidu.com/f?kw=%E4%B8%AD%E5%9B%BD%E5%A5%BD%E5%A3%B0%E9%9F%B3&ie=utf-8&pn=0"  # 中国好声音 forum listing page
    # childUrl = "http://tieba.baidu.com/p/5825125387"  # first thread in the 中国好声音 forum

    # Path of the output CSV file (raw string, so the backslashes are not read as escapes)
    outputFilePath = r"E:\script\python-script\laidutiebapapa\csvfile_output.csv"


    def GetWebPageSource(url):
        # Request headers; the User-Agent value is empty in the original post
        user_agent = ""
        headers = {'User-Agent': user_agent, 'Connection': 'keep-alive'}

        # Set up the cookie jar and the opener
        cookie_filename = 'cookie.txt'
        cookie = http.cookiejar.MozillaCookieJar(cookie_filename)
        handler = urllib.request.HTTPCookieProcessor(cookie)
        opener = urllib.request.build_opener(handler)

        # Build the request. The original passed an empty urlencoded body, which
        # makes urllib send a POST; leaving it out sends a plain GET.
        req = urllib.request.Request(url, headers=headers)
        # Fetch the response
        response = opener.open(req)
        html = response.read().decode('utf-8')
        # Save the cookies
        cookie.save(ignore_discard=True, ignore_expires=True)

        return html


    # Write the scraped content to a new CSV file
    # Main entry point
    if __name__ == "__main__":
        # Listing pages to crawl; range(1, maxSubPage) below visits maxSubPage - 1 pages
        maxSubPage = 2
        m = 0  # starting offset
        with open(outputFilePath, "w", newline="", encoding='utf-8') as datacsv:
            headers = ['title', 'url', 'comment']
            # csv.writer has no header parameter, so write the header row explicitly
            csvwriter = csv.writer(datacsv)
            csvwriter.writerow(headers)
            for _ in range(1, int(maxSubPage)):
                subUrl = "http://tieba.baidu.com/f?kw=%E4%B8%AD%E5%9B%BD%E5%A5%BD%E5%A3%B0%E9%9F%B3&ie=utf-8&pn=" + str(m) + ".html"
                print(subUrl)
                subhtml = GetWebPageSource(subUrl)
                # Use BeautifulSoup to pick out the elements we want
                subsoup = BeautifulSoup(subhtml, "lxml")
                # Print the title of the forum listing page
                print(subsoup.title)
                # Collect the thread titles on this listing page
                all_titles = subsoup.find_all('div', class_="threadlist_title pull_left j_th_tit ")
                for title in all_titles:
                    print('--------------------------------------------------')
                    print("Thread title: " + title.get_text())
                    commentUrl = str(title.a['href'])
                    # The comment-page loop still needs tuning (see maxChildPage)
                    childPage = 1  # current comment page
                    # Comment pages per thread; range(1, maxChildPage) visits maxChildPage - 1 pages
                    maxChildPage = 6
                    for _ in range(1, int(maxChildPage)):
                        childUrl = "http://tieba.baidu.com" + commentUrl + "?pn=" + str(childPage)
                        print("Thread URL: " + childUrl)
                        childhtml = GetWebPageSource(childUrl)
                        childsoup = BeautifulSoup(childhtml, "lxml")
                        # Collect the comments on this page
                        all_comments = childsoup.find_all('div', class_="d_post_content_main")
                        for comment in all_comments:
                            print("User comment: " + comment.get_text())
                            csvwriter.writerow([title.get_text(), childUrl, comment.get_text()])
                        print('--------------------------------------------------')
                        childPage = childPage + 1
                m = m + 50  # paging control: by observation, each listing page is offset by 50
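The listing URLs in the script are built by hand from a pre-encoded keyword and a `pn` offset that grows by 50 per page. As a rough sketch of the same idea, the standard library's `urlencode` can produce the query string directly from the forum name. The helper name `build_list_urls` and the constants are made up for illustration and are not part of the original script.

```python
from urllib.parse import urlencode

BASE_URL = "http://tieba.baidu.com/f"
THREADS_PER_PAGE = 50  # offset step observed in the script above


def build_list_urls(keyword, pages):
    """Return the listing URLs for the first `pages` pages of a forum.

    Hypothetical helper: percent-encodes the keyword as UTF-8 and steps
    the `pn` offset by THREADS_PER_PAGE for each page.
    """
    urls = []
    for page in range(pages):
        query = urlencode({"kw": keyword, "ie": "utf-8", "pn": page * THREADS_PER_PAGE})
        urls.append(BASE_URL + "?" + query)
    return urls


# Print the first three listing URLs for the 中国好声音 forum
for url in build_list_urls("中国好声音", 3):
    print(url)
```

The first URL this produces matches the `subUrl` used in the script, so the hand-encoded `%E4%B8%AD...` string is just the UTF-8 percent-encoding of the forum name.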

Output after the run finished:

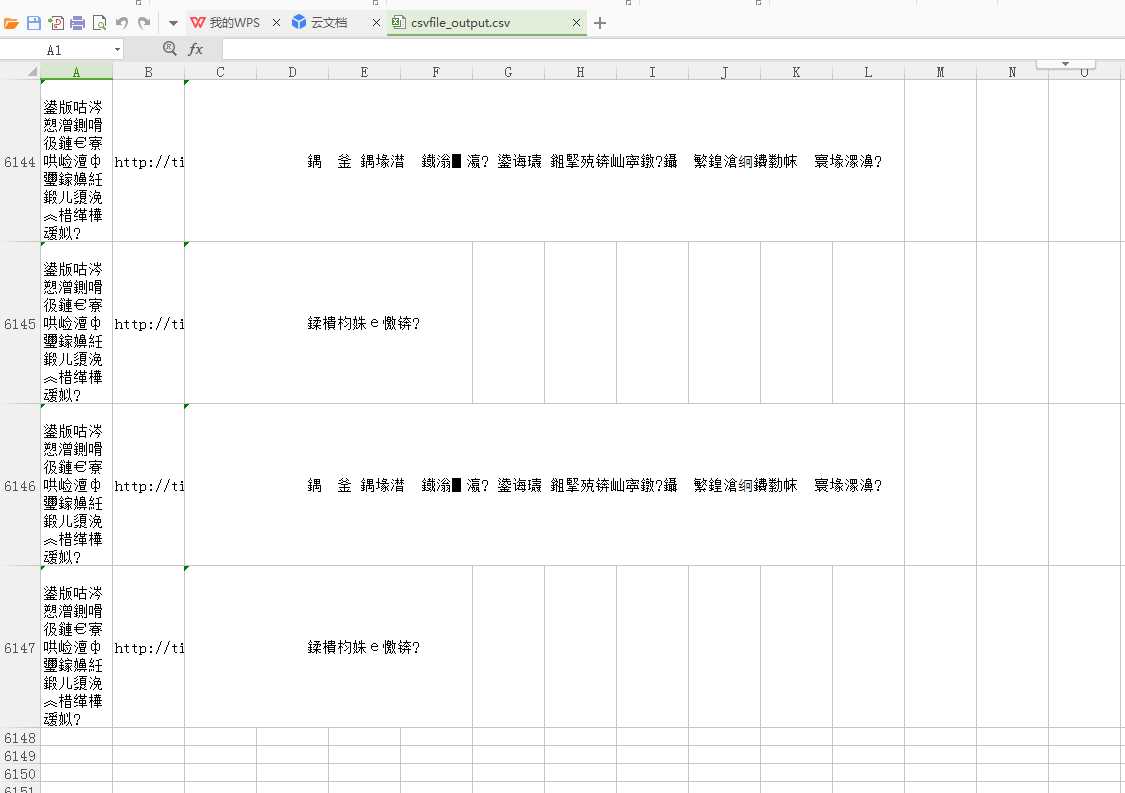

How it looks in the CSV file

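The CSV file generated above shows garbled characters when opened directly as CSV. Open it in Notepad, save it again with ANSI encoding, and then open it as CSV; the text is no longer garbled. For more on fixing garbled CSV files, see this post: https://www.cnblogs.com/zhuzhubaoya/p/9675203.html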
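A possible alternative to re-saving by hand, which is not from the original post: write the file with Python's `utf-8-sig` codec, which puts a UTF-8 byte order mark at the start of the file. Excel usually relies on that mark to recognise the file as UTF-8. The function name `write_rows_for_excel` and the sample row below are illustrative only.

```python
import csv
import os
import tempfile


def write_rows_for_excel(path, header, rows):
    """Write a CSV file that starts with a UTF-8 byte order mark (BOM).

    Hypothetical helper: the "utf-8-sig" codec emits the BOM bytes
    EF BB BF before the first character written to the file.
    """
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


# Example: write one sample row to a temporary file
sample_path = os.path.join(tempfile.gettempdir(), "csvfile_output_sample.csv")
write_rows_for_excel(
    sample_path,
    ["title", "url", "comment"],
    [["中国好声音", "http://tieba.baidu.com/p/5825125387?pn=1", "示例评论"]],
)
```

In the script above this would amount to changing `encoding='utf-8'` to `encoding='utf-8-sig'` in the `open(...)` call. Whether a given spreadsheet program honours the mark depends on the program and version, so it is worth checking on the target machine.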


Original post: https://www.cnblogs.com/zhuzhubaoya/p/9675376.html