标签:ever str 文本文件 ace 字符 lower 分词 rdl 切片

1.准备utf-8编码的文本文件file

2.通过文件读取字符串 str

3.对文本进行预处理

4.分解提取单词 list

5.单词计数字典 set , dict

6.按词频排序 list.sort(key=)

7.排除语法型词汇,代词、冠词、连词等无语义词

8.输出TOP(20)

with open (‘English.txt‘,‘r‘) as fb:

content = fb.read()

# 清洗数据

import string

content = content.lower() # 格式化数据,转为小写

for i in string.punctuation : # 去除所有标点符号

content = content.replace(i,‘ ‘)

wordList = content.split() # 切片分词

# 统计单词数量

data = {}

for word in wordList :

data[word] = data.get(word,0) +1

# 排序

hist = []

for key,value in data.items():

hist.append([value,key])

hist.sort(reverse = True) # 降序

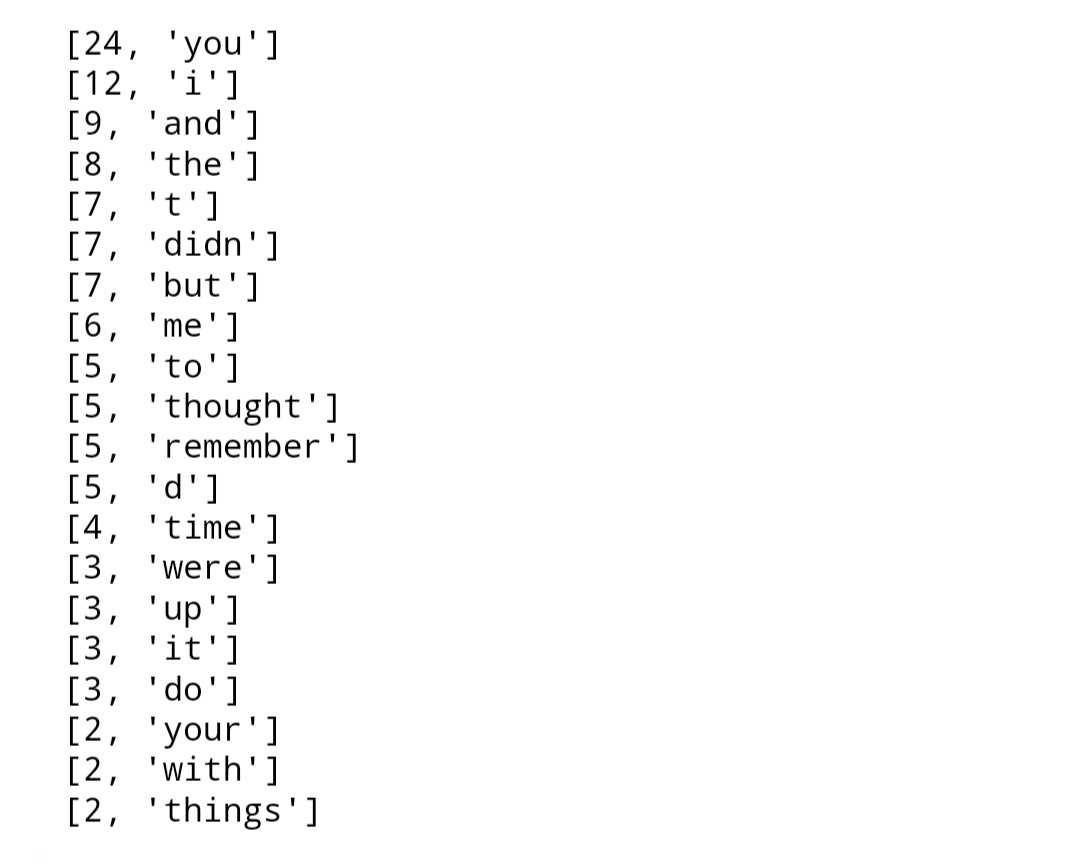

# 前20个

for i in range(20):

print(hist[i])

with open (‘Chinese.txt‘,‘r‘) as fb:

content = fb.read()

# 清洗数据

bd = ‘,。?!;:‘’“”【】‘

for word in content :

content = content.replace(bd,‘ ‘)

# 统计出词频字典

wordDict = {}

for word in content :

wordDict[word] = content.count(word)

wordList = list(wordDict.items())

# 排序

wordList.sort(key=lambda x: x[1], reverse=True)

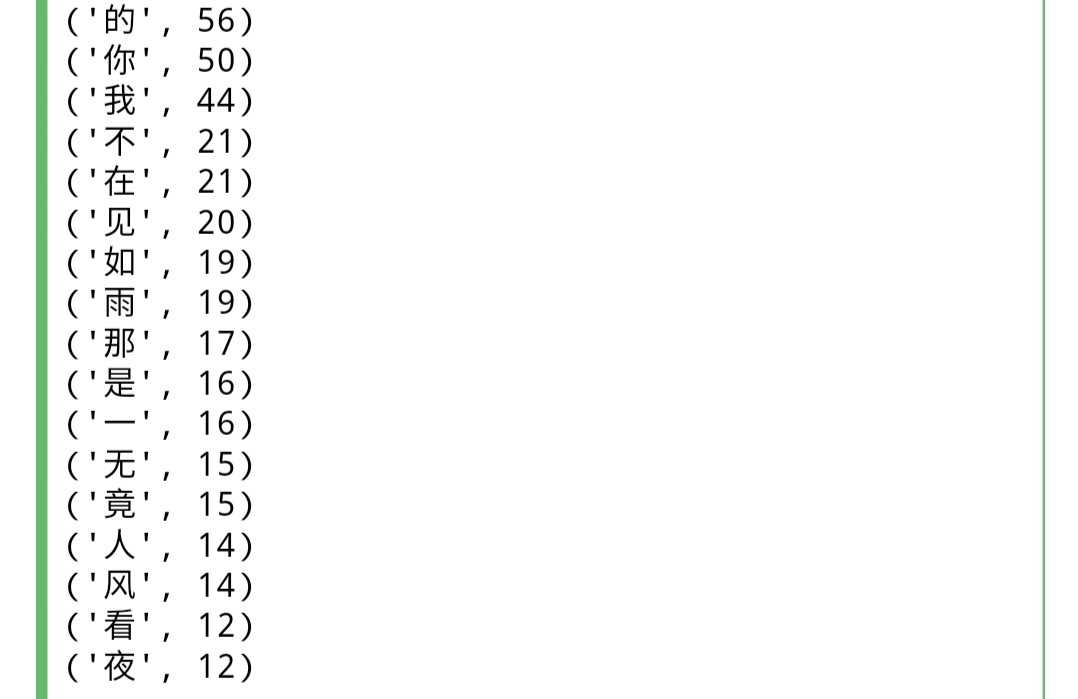

# TOP20

for i in range(20):

print(wordList[i])

标签:ever str 文本文件 ace 字符 lower 分词 rdl 切片

原文地址:https://www.cnblogs.com/vitan/p/9722046.html