标签:nested 原因 maximum except 数据 date man sha def

正常情况下,各个分片的分布如下:

可见,user 索引的三个分片平均分布在各台机器上,可以完全容忍一台机器宕机,而不丢失任何数据。

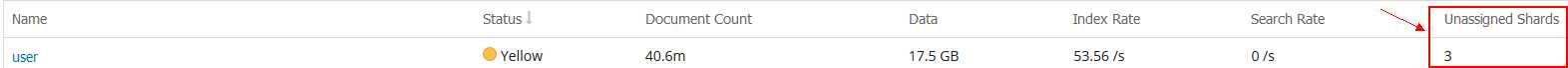

由于一次故障(修改了一个分词插件,但是这个插件未能正确加载),导致 node-151 节点宕机了。修复问题后,执行./bin/elasticsearch -d正常启动,但是发现集群中存在三个未分配的shards。本以为这些未分配的shards在node-151正常启动后能够自动分配,但是却发现它一直没有自动分配。

首先:GET user/_recovery?active_only=true 发现集群并没有进行副本恢复。

执行GET _cluster/allocation/explain?pretty发现:

"explanation": "shard has exceeded the maximum number of retries [5] on failed allocation attempts - manually call [/_cluster/reroute?retry_failed=true] to retry, [unassigned_info[[reason=ALLOCATION_FAILED], at[2018-09-29T08:02:03.794Z], failed_attempts[5], delayed=false, details[failed shard on node [mKkj4112T7aLeC2oNouOrg]: failed to update mapping for index, failure MapperParsingException[Failed to parse mapping [profile]: analyzer [hanlp_standard] not found for field [details]]; nested: MapperParsingException[analyzer [hanlp_standard] not found for field [details]]; ]

原来是分词插件错误导致。再仔细看日志,有一行:

allocation_status: "no_attempt"

原因是:shard 自动分配 已经达到最大重试次数5次,仍然失败了,所以导致"shard的分配状态已经是:no_attempt"。这时在Kibana Dev Tools,执行命令:POST /_cluster/reroute?retry_failed=true即可。由index.allocation.max_retries参数来控制最大重试次数。

The cluster will attempt to allocate a shard a maximum of index.allocation.max_retries times in a row (defaults to 5), before giving up and leaving the shard unallocated.

当执行reroute命令对分片重新路由后,ElasticSearch会自动进行负载均衡,负载均衡参数cluster.routing.rebalance.enable默认为true。

It is important to note that after processing any reroute commands Elasticsearch will perform rebalancing as normal (respecting the values of settings such as cluster.routing.rebalance.enable) in order to remain in a balanced state.

过一段时间后:执行 GET /_cat/shards?index=user 可查看 user 索引中所有的分片分配情况已经正常了。

user 1 p STARTED 13610428 2.6gb node-248

user 1 r STARTED 13610428 2.5gb node-151

user 1 r STARTED 13610428 2.8gb node-140

user 2 p STARTED 13606674 2.8gb node-248

user 2 r STARTED 13606674 2.7gb node-151

user 2 r STARTED 13606684 3.8gb node-140

user 0 p STARTED 13603429 2.6gb node-248

user 0 r STARTED 13603429 2.6gb node-151

user 0 r STARTED 13603429 2.7gb node-140

第一列:索引名称;第二列标识 shard 是primary(p) 还是 replica(r);第三列 shard的状态;第四列:该shard上的文档数量;最后一列 节点名称。

一般来说,ElasticSearch会自动分配 那些 unassigned shards,当发现某些shards长期未分配时,首先看下是否是因为:为索引指定了过多的primary shard 和 replica 数量,然后集群中机器数量又不够。另一个原因就是本文中提到的:由于故障,shard自动分配达到了最大重试次数了,这时执行 reroute 就可以了。

/_cat/shards 命令:https://www.elastic.co/guide/en/elasticsearch/reference/current/cat-shards.html

2018.9.30

原文:https://www.cnblogs.com/hapjin/p/9726469.html

记一次ElasticSearch重启之后shard未分配问题的解决

标签:nested 原因 maximum except 数据 date man sha def

原文地址:https://www.cnblogs.com/hapjin/p/9726469.html