标签:ack att 因此 app 实战 load ensure pre fse

(一)目标站点的分析

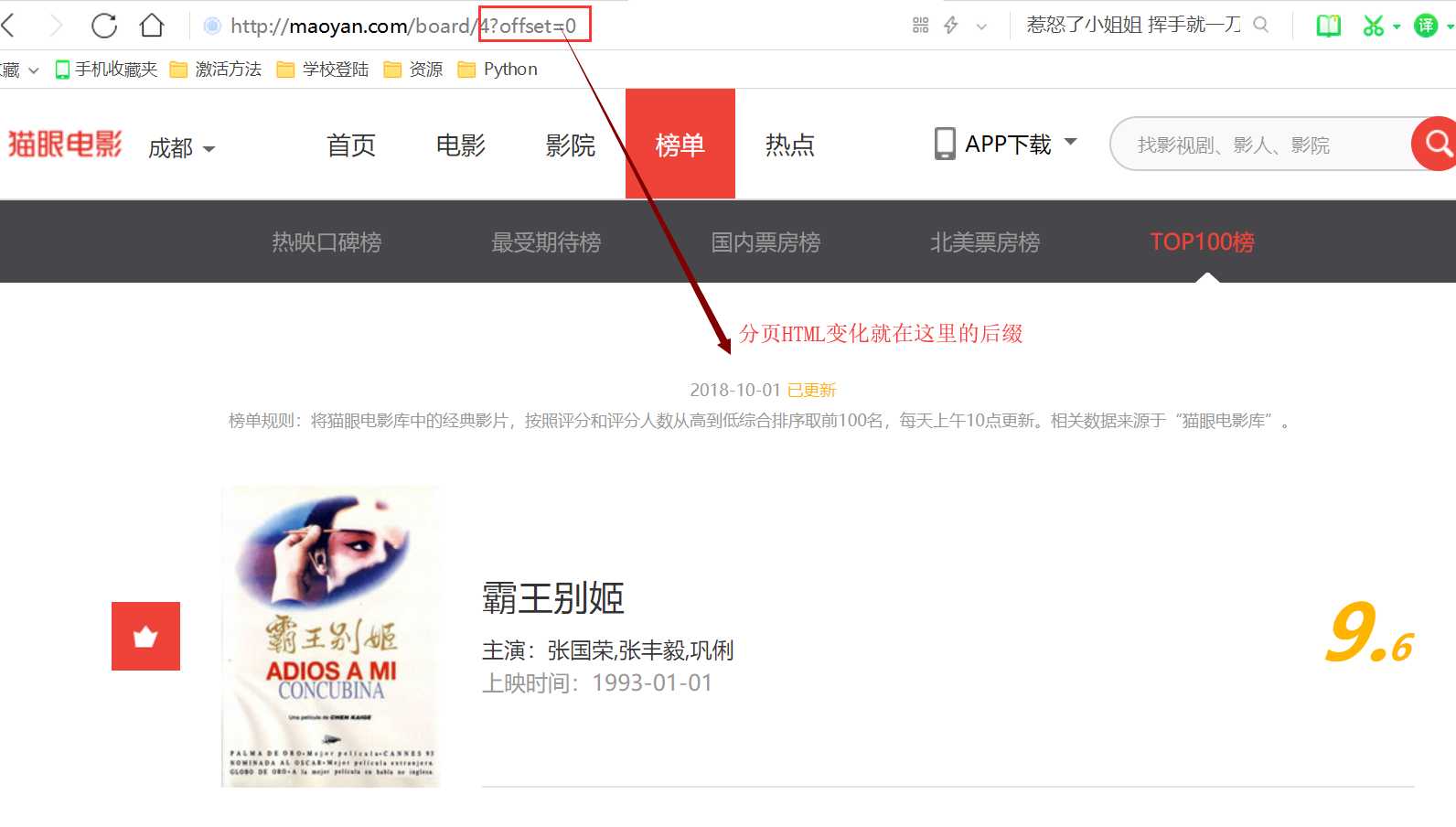

首先打开我们的目标网站,发现每一页有十个电影,最下面有分页标志,而分页只改变的是标签后缀,如下:

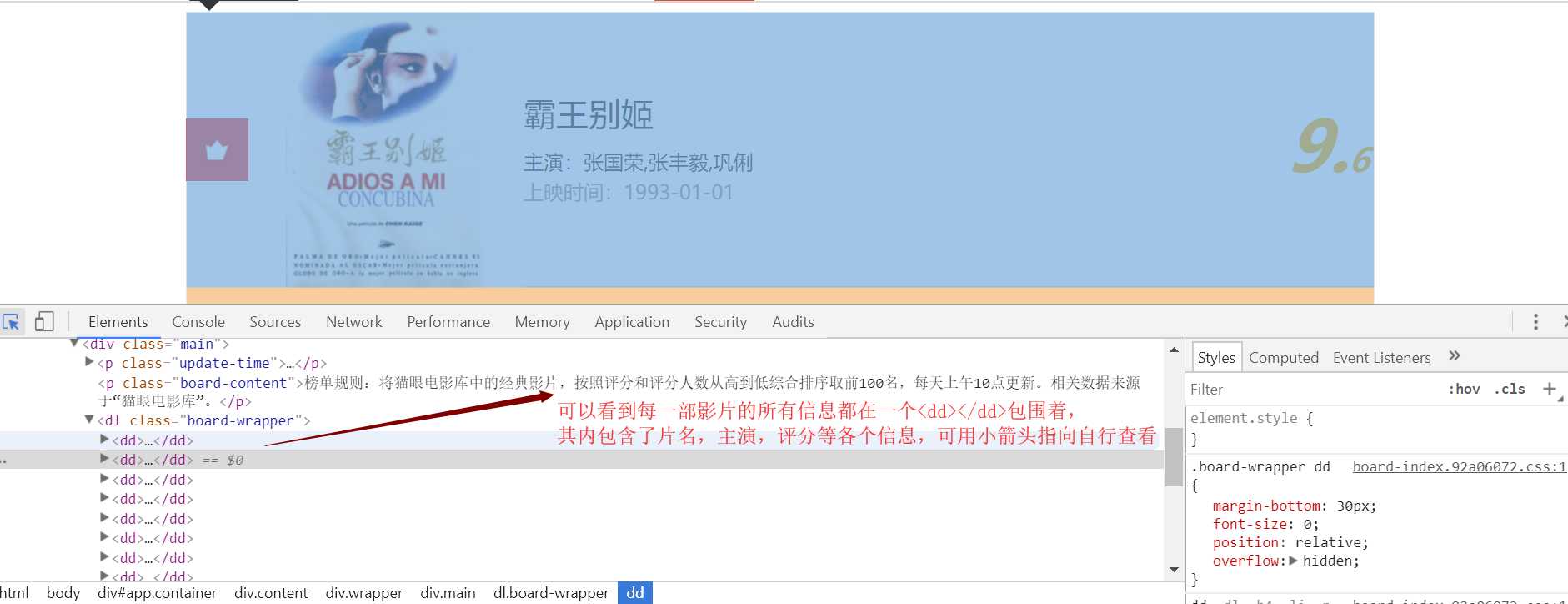

而后可以在网页按f12打开源代码管理,查看网页每处信息对应的源代码形式,如下图:

(二)流程框架

经过简单分析后,我们可以整理一下总的流程分为四步:

(三)实战编码

1.我们首先完成获取一页html信息的函数--抓取单页内容:

1 import requests 2 from requests.exceptions import RequestException 3 4 5 def get_one_page(url, headers): 6 try: 7 response = requests.get(url, headers=headers) 8 if response.status_code == 403: 9 return ‘需要设置headers信息‘ 10 elif response.status_code == 200: 11 return response.text 12 else: 13 return None 14 except RequestException: 15 return ‘Fault‘ 16 17 18 def main(): 19 url = ‘http://maoyan.com/board/4?offset=0‘ 20 headers = { 21 ‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ‘ 22 ‘Chrome/63.0.3239.132 Safari/537.36‘ 23 } 24 response = get_one_page(url, headers) 25 print(response) 26 27 28 if __name__ == ‘__main__‘: 29 main()

注意,在这里我们会发现,如果不设置headers信息,我们会无法获取html信息,所以需要设置一个headers信息才能打开网页。

2.接下来,我们要对内容进行整理和抓取--正则分析:

1 import requests 2 from requests.exceptions import RequestException 3 import re 4 5 6 def get_one_page(url, headers): 7 try: 8 response = requests.get(url, headers=headers) 9 if response.status_code == 403: 10 return ‘需要设置headers信息‘ 11 elif response.status_code == 200: 12 return response.text 13 else: 14 return None 15 except RequestException: 16 return ‘Fault‘ 17 18 19 def parse_one_page(html): 20 pattern = re.compile(‘<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)".*?name"><a‘ 21 ‘.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>‘ 22 ‘.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>‘, re.S) 23 items = re.findall(pattern, html) 24 #利用生成器实现格式化输出: 25 for i in items: 26 yield { 27 ‘index‘: i[0], 28 ‘image‘: i[1], 29 ‘name‘: i[2], 30 ‘actor‘: i[3].strip()[3:], 31 ‘tmie‘: i[4].strip()[5:], 32 ‘score‘: i[5]+i[6] 33 } 34 35 36 def main(): 37 url = ‘http://maoyan.com/board/4?offset=0‘ 38 headers = { 39 ‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ‘ 40 ‘Chrome/63.0.3239.132 Safari/537.36‘ 41 } 42 html = get_one_page(url, headers) 43 #由于分析函数已经是一个迭代器了,所以可以迭代访问: 44 for i in parse_one_page(html): 45 print(i) 46 47 48 if __name__ == ‘__main__‘: 49 main()

这一步的重点就是第20-22的正则匹配,关键在于如何去定位我们所需要的信息,关于输出这里用了生成器,其实不懂的话没关系,可以直接输出,这里只是为了方便观看结果,下面是输出结果:

1 {‘index‘: ‘1‘, ‘image‘: ‘http://p1.meituan.net/movie/20803f59291c47e1e116c11963ce019e68711.jpg@160w_220h_1e_1c‘, ‘name‘: ‘霸王别姬‘, ‘actor‘: ‘张国荣,张丰毅,巩俐‘, ‘tmie‘: ‘1993-01-01‘, ‘score‘: ‘9.6‘} 2 {‘index‘: ‘2‘, ‘image‘: ‘http://p0.meituan.net/movie/54617769d96807e4d81804284ffe2a27239007.jpg@160w_220h_1e_1c‘, ‘name‘: ‘罗马假日‘, ‘actor‘: ‘格利高里·派克,奥黛丽·赫本,埃迪·艾伯特‘, ‘tmie‘: ‘1953-09-02(美国)‘, ‘score‘: ‘9.1‘} 3 {‘index‘: ‘3‘, ‘image‘: ‘http://p0.meituan.net/movie/283292171619cdfd5b240c8fd093f1eb255670.jpg@160w_220h_1e_1c‘, ‘name‘: ‘肖申克的救赎‘, ‘actor‘: ‘蒂姆·罗宾斯,摩根·弗里曼,鲍勃·冈顿‘, ‘tmie‘: ‘1994-10-14(美国)‘, ‘score‘: ‘9.5‘} 4 {‘index‘: ‘4‘, ‘image‘: ‘http://p0.meituan.net/movie/e55ec5d18ccc83ba7db68caae54f165f95924.jpg@160w_220h_1e_1c‘, ‘name‘: ‘这个杀手不太冷‘, ‘actor‘: ‘让·雷诺,加里·奥德曼,娜塔莉·波特曼‘, ‘tmie‘: ‘1994-09-14(法国)‘, ‘score‘: ‘9.5‘} 5 {‘index‘: ‘5‘, ‘image‘: ‘http://p1.meituan.net/movie/f5a924f362f050881f2b8f82e852747c118515.jpg@160w_220h_1e_1c‘, ‘name‘: ‘教父‘, ‘actor‘: ‘马龙·白兰度,阿尔·帕西诺,詹姆斯·肯恩‘, ‘tmie‘: ‘1972-03-24(美国)‘, ‘score‘: ‘9.3‘} 6 {‘index‘: ‘6‘, ‘image‘: ‘http://p1.meituan.net/movie/0699ac97c82cf01638aa5023562d6134351277.jpg@160w_220h_1e_1c‘, ‘name‘: ‘泰坦尼克号‘, ‘actor‘: ‘莱昂纳多·迪卡普里奥,凯特·温丝莱特,比利·赞恩‘, ‘tmie‘: ‘1998-04-03‘, ‘score‘: ‘9.5‘} 7 {‘index‘: ‘7‘, ‘image‘: ‘http://p0.meituan.net/movie/da64660f82b98cdc1b8a3804e69609e041108.jpg@160w_220h_1e_1c‘, ‘name‘: ‘唐伯虎点秋香‘, ‘actor‘: ‘周星驰,巩俐,郑佩佩‘, ‘tmie‘: ‘1993-07-01(中国香港)‘, ‘score‘: ‘9.2‘} 8 {‘index‘: ‘8‘, ‘image‘: ‘http://p0.meituan.net/movie/b076ce63e9860ecf1ee9839badee5228329384.jpg@160w_220h_1e_1c‘, ‘name‘: ‘千与千寻‘, ‘actor‘: ‘柊瑠美,入野自由,夏木真理‘, ‘tmie‘: ‘2001-07-20(日本)‘, ‘score‘: ‘9.3‘} 9 {‘index‘: ‘9‘, ‘image‘: ‘http://p0.meituan.net/movie/46c29a8b8d8424bdda7715e6fd779c66235684.jpg@160w_220h_1e_1c‘, ‘name‘: ‘魂断蓝桥‘, ‘actor‘: ‘费雯·丽,罗伯特·泰勒,露塞尔·沃特森‘, ‘tmie‘: ‘1940-05-17(美国)‘, ‘score‘: ‘9.2‘} 10 {‘index‘: ‘10‘, ‘image‘: ‘http://p0.meituan.net/movie/230e71d398e0c54730d58dc4bb6e4cca51662.jpg@160w_220h_1e_1c‘, ‘name‘: ‘乱世佳人‘, ‘actor‘: ‘费雯·丽,克拉克·盖博,奥利维娅·德哈维兰‘, ‘tmie‘: ‘1939-12-15(美国)‘, ‘score‘: ‘9.1‘}

3.接下来就需要把信息保存至我们的文件--保存至文件:

1 import requests 2 from requests.exceptions import RequestException 3 import re 4 import json 5 6 def get_one_page(url, headers): 7 try: 8 response = requests.get(url, headers=headers) 9 if response.status_code == 403: 10 return ‘需要设置headers信息‘ 11 elif response.status_code == 200: 12 return response.text 13 else: 14 return None 15 except RequestException: 16 return ‘Fault‘ 17 18 19 def parse_one_page(html): 20 pattern = re.compile(‘<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)".*?name"><a‘ 21 ‘.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>‘ 22 ‘.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>‘, re.S) 23 items = re.findall(pattern, html) 24 #利用生成器实现格式化输出: 25 for i in items: 26 yield { 27 ‘index‘: i[0], 28 ‘image‘: i[1], 29 ‘name‘: i[2], 30 ‘actor‘: i[3].strip()[3:], 31 ‘tmie‘: i[4].strip()[5:], 32 ‘score‘: i[5]+i[6] 33 } 34 35 36 def write_to_file(content): 37 with open(‘result.txt‘, ‘a‘, encoding=‘utf-8‘) as f: 38 f.write(json.dumps(content, ensure_ascii=False) + ‘\n‘) 39 f.close() 40 41 42 def main(): 43 url = ‘http://maoyan.com/board/4?offset=0‘ 44 headers = { 45 ‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ‘ 46 ‘Chrome/63.0.3239.132 Safari/537.36‘ 47 } 48 html = get_one_page(url, headers) 49 #由于分析函数已经是一个迭代器了,所以可以迭代访问: 50 for i in parse_one_page(html): 51 write_to_file(i) 52 53 54 if __name__ == ‘__main__‘: 55 main()

这里要注意37,38行的两处转码操作,因为content是一个字典,我们需要将其转成字符串,然后需要转换成中文,因此有了如上两步。

4.循环爬取所有页数,采用往main中传参数:

1 import requests 2 from requests.exceptions import RequestException 3 import re 4 import json 5 6 def get_one_page(url, headers): 7 try: 8 response = requests.get(url, headers=headers) 9 if response.status_code == 403: 10 return ‘需要设置headers信息‘ 11 elif response.status_code == 200: 12 return response.text 13 else: 14 return None 15 except RequestException: 16 return ‘Fault‘ 17 18 19 def parse_one_page(html): 20 pattern = re.compile(‘<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)".*?name"><a‘ 21 ‘.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>‘ 22 ‘.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>‘, re.S) 23 items = re.findall(pattern, html) 24 #利用生成器实现格式化输出: 25 for i in items: 26 yield { 27 ‘index‘: i[0], 28 ‘image‘: i[1], 29 ‘name‘: i[2], 30 ‘actor‘: i[3].strip()[3:], 31 ‘tmie‘: i[4].strip()[5:], 32 ‘score‘: i[5]+i[6] 33 } 34 35 36 def write_to_file(content): 37 with open(‘result.txt‘, ‘a‘, encoding=‘utf-8‘) as f: 38 f.write(json.dumps(content, ensure_ascii=False) + ‘\n‘) 39 f.close() 40 41 42 def main(offset): 43 url = ‘http://maoyan.com/board/4?offset=‘ + str(offset) 44 headers = { 45 ‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ‘ 46 ‘Chrome/63.0.3239.132 Safari/537.36‘ 47 } 48 html = get_one_page(url, headers) 49 #由于分析函数已经是一个迭代器了,所以可以迭代访问: 50 for i in parse_one_page(html): 51 write_to_file(i) 52 53 54 if __name__ == ‘__main__‘: 55 for i in range(10): 56 main(i*10)

5.使用多线程进行抓取,提高效率:

主要就是引入一个进程池即可,这里了解一下其用法:

1 import requests 2 from requests.exceptions import RequestException 3 import re 4 import json 5 from multiprocessing import Pool 6 7 8 def get_one_page(url, headers): 9 try: 10 response = requests.get(url, headers=headers) 11 if response.status_code == 403: 12 return ‘需要设置headers信息‘ 13 elif response.status_code == 200: 14 return response.text 15 else: 16 return None 17 except RequestException: 18 return ‘Fault‘ 19 20 21 def parse_one_page(html): 22 pattern = re.compile(‘<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)".*?name"><a‘ 23 ‘.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>‘ 24 ‘.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>‘, re.S) 25 items = re.findall(pattern, html) 26 #利用生成器实现格式化输出: 27 for i in items: 28 yield { 29 ‘index‘: i[0], 30 ‘image‘: i[1], 31 ‘name‘: i[2], 32 ‘actor‘: i[3].strip()[3:], 33 ‘tmie‘: i[4].strip()[5:], 34 ‘score‘: i[5]+i[6] 35 } 36 37 38 def write_to_file(content): 39 with open(‘result.txt‘, ‘a‘, encoding=‘utf-8‘) as f: 40 f.write(json.dumps(content, ensure_ascii=False) + ‘\n‘) 41 f.close() 42 43 44 def main(offset): 45 url = ‘http://maoyan.com/board/4?offset=‘ + str(offset) 46 headers = { 47 ‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ‘ 48 ‘Chrome/63.0.3239.132 Safari/537.36‘ 49 } 50 html = get_one_page(url, headers) 51 #由于分析函数已经是一个迭代器了,所以可以迭代访问: 52 for i in parse_one_page(html): 53 write_to_file(i) 54 55 56 if __name__ == ‘__main__‘: 57 pool = Pool() 58 pool.map(main, [i*10 for i in range(10)])

OK,到此,这个简单的爬虫就结束了。

标签:ack att 因此 app 实战 load ensure pre fse

原文地址:https://www.cnblogs.com/boru-computer/p/9736780.html