Tags: hosts uri other deny link schedule drivers ali memcache

This post records one of my experiments. I ran into a few pitfalls along the way, so I am writing them down for future reference.

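To save space, some content (mostly configuration) is left out here. All of it is in the official documentation. If you are actually installing and deploying OpenStack, follow the official guide rather than this post.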

The official installation guide for the Ocata release:

https://docs.openstack.org/ocata/zh_CN/install-guide-rdo/common/conventions.html

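This post deploys OpenStack on CentOS 7. Following the official guide, only a controller node and a compute node are needed, and the network node is installed together with the controller node.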

Release: openstack-ocata

/etc/hosts

# controller
192.168.2.19 controller
# compute1
192.168.2.21 compute1
# block1
192.168.2.21 block1
# object1
192.168.2.21 object1
# object2
192.168.2.21 object2
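The hosts entries above can be added idempotently with a small shell function. This is a minimal sketch and is not part of the official guide. `add_host_entry` and `add_hosts_for_lab` are hypothetical helper names. The file path is a parameter, so you can try the function on a copy before running it against /etc/hosts as root.

```shell
# Minimal sketch: add "IP NAME" to a hosts-format file only if that exact line is missing.
# add_host_entry / add_hosts_for_lab are hypothetical helpers, not OpenStack tools.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -q quiet, -x whole-line match, -F fixed string (dots are not regex wildcards)
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# The five entries used in this lab; compute1, block1, object1 and object2
# all point at the same machine (192.168.2.21).
# usage (as root): add_hosts_for_lab /etc/hosts
add_hosts_for_lab() {
    local file="$1" name
    add_host_entry "$file" 192.168.2.19 controller
    for name in compute1 block1 object1 object2; do
        add_host_entry "$file" 192.168.2.21 "$name"
    done
}
```

Running it twice still leaves exactly one line per host.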

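Both the controller node and the compute node need two network interfaces: one for the management network and one for the external network. The interfaces are configured as follows: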

| Node | Network | IP address | Subnet mask | Default gateway |
|---|---|---|---|---|
| Controller | Management | 192.168.2.19 | 255.255.255.0 | 192.168.2.1 |
| Controller | External | 10.1.12.10 | 255.255.255.0 | 10.1.12.1 |
| Compute | Management | 192.168.2.21 | 255.255.255.0 | 192.168.2.1 |
| Compute | External | 10.1.12.11 | 255.255.255.0 | 10.1.12.1 |

Disable SELinux

Permanently (takes effect after a reboot): run vi /etc/selinux/config and set

SELINUX=disabled

or make the same change with sed:

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

Temporarily (until the next reboot): setenforce 0

Disable the firewall (firewalld)

Permanently: systemctl disable firewalld.service

Temporarily: systemctl stop firewalld.service

Set the time zone:

timedatectl set-timezone Asia/Shanghai

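| Password name | Description |
|---|---|
| Database password (no variable used) | Root password for the database (empty) |
| ADMIN_PASS | Password of user admin (111111) |
| CINDER_DBPASS | Database password for the Block Storage service |
| CINDER_PASS | Password of Block Storage service user cinder |
| DASH_DBPASS | Database password for the Dashboard |
| DEMO_PASS | Password of user demo |
| GLANCE_DBPASS | Database password for the Image service (glance) |
| GLANCE_PASS | Password of Image service user glance (111111) |
| KEYSTONE_DBPASS | Database password of the Identity service (keystone) |
| METADATA_SECRET | Secret for the metadata proxy (111111) |
| NEUTRON_DBPASS | Database password for the Networking service (neutron) |
| NEUTRON_PASS | Password of Networking service user neutron (111111) |
| NOVA_DBPASS | Database password for the Compute service (nova) |
| NOVA_PASS | Password of Compute service user nova (111111) |
| PLACEMENT_PASS | Password of the Placement service user placement (111111) |
| RABBIT_PASS | Password of RabbitMQ user openstack (rabbit) |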
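This lab uses simple passwords such as 111111. The official guide instead suggests generating random ones with `openssl rand -hex 10`, which is also how this article creates the admin token. The following is a minimal sketch that writes one random value for each placeholder in the table to a file. `rand_hex10` and `write_password_file` are hypothetical helper names. The sketch uses /dev/urandom with `od` in place of openssl, so it works even on hosts where openssl is not installed.

```shell
# Minimal sketch: one random 20-hex-digit secret per password placeholder.
# rand_hex10 mimics `openssl rand -hex 10` (10 random bytes -> 20 hex digits)
# using /dev/urandom and od, so it also works where openssl is not installed.
rand_hex10() {
    head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical helper: write NAME=secret lines to a file readable only by its owner.
# usage: write_password_file ./passwords.env
write_password_file() {
    local out="$1" name
    : > "$out"          # create or truncate
    chmod 600 "$out"    # the file holds secrets
    for name in ADMIN_PASS CINDER_DBPASS CINDER_PASS DASH_DBPASS DEMO_PASS \
                GLANCE_DBPASS GLANCE_PASS KEYSTONE_DBPASS METADATA_SECRET \
                NEUTRON_DBPASS NEUTRON_PASS NOVA_DBPASS NOVA_PASS \
                PLACEMENT_PASS RABBIT_PASS; do
        printf '%s=%s\n' "$name" "$(rand_hex10)" >> "$out"
    done
}
```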

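Tip: rpm.tar.gz is an archive of the yum cache directory /var/cache/yum/$basearch/$releasever (here /var/cache/yum/x86_64/7). Extracting it back in place effectively gives you a local yum source. Remember to enable caching in /etc/yum.conf (keepcache=1) so that downloaded packages are kept.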

Enable the OpenStack repository:

yum install centos-release-openstack-ocata -y
yum upgrade -y
yum install python-openstackclient -y

yum install mariadb mariadb-server python2-PyMySQL -y
cat >/etc/my.cnf.d/openstack.cnf<<eof
[mysqld]
bind-address = 192.168.2.19
# default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
eof
systemctl enable mariadb.service
systemctl start mariadb.service
mysql_secure_installation
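The same drop-in file can also be written by a function that takes the bind address as a parameter. This helps if the controller's management IP is not 192.168.2.19. This is only a sketch, and `write_openstack_cnf` is a hypothetical name. The sketch enables `default-storage-engine`, while the listing above has that line commented out. InnoDB is already MariaDB's default engine, so both forms behave the same.

```shell
# Sketch: write the MariaDB drop-in for OpenStack into a directory of your choice.
# write_openstack_cnf is a hypothetical helper.
# usage (as root): write_openstack_cnf /etc/my.cnf.d 192.168.2.19
write_openstack_cnf() {
    local dir="$1" bind_addr="$2"
    # Unquoted EOF: $bind_addr is expanded; nothing else in the body contains $.
    cat > "$dir/openstack.cnf" <<EOF
[mysqld]
bind-address = $bind_addr
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
}
```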

The message queue runs on the controller node.

yum install rabbitmq-server -y
systemctl enable rabbitmq-server.service
systemctl start rabbitmq-server.service
rabbitmqctl add_user openstack rabbit
rabbitmqctl set_permissions openstack ".*" ".*" ".*"

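The Identity service authentication mechanism for services uses Memcached to cache tokens.

The memcached service typically runs on the controller node.

For production deployments, we recommend enabling a combination of firewalling, authentication, and encryption to secure it.

yum install memcached python-memcached -y
sed -i 's#OPTIONS="-l 127.0.0.1,::1"#OPTIONS="-l 127.0.0.1,::1,controller"#g' /etc/sysconfig/memcached
systemctl enable memcached.service
systemctl start memcached.service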

mysql -u root -proot
CREATE DATABASE keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'keystone';
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystone';
exit
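The CREATE DATABASE / GRANT pattern above is repeated later for glance, nova, neutron and cinder. The following is a minimal sketch that prints the statements for a given user, password and list of databases, so you can pipe them into the mysql client. `service_db_sql` is a hypothetical helper. Unlike the article, it uses `CREATE DATABASE IF NOT EXISTS`, so running it a second time does not fail.

```shell
# Sketch: print the CREATE DATABASE / GRANT statements used for each service.
# Arguments: DB user, DB password, then one or more database names.
# service_db_sql is a hypothetical helper; IF NOT EXISTS makes reruns harmless.
# usage: service_db_sql keystone keystone keystone | mysql -u root -proot
#        service_db_sql nova nova nova_api nova nova_cell0 | mysql -u root -proot
service_db_sql() {
    local user="$1" pass="$2" db
    shift 2
    for db in "$@"; do
        printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$pass"
        # %% is printf's escape for a literal %, i.e. MySQL's "any host" wildcard
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$pass"
    done
}
```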

Generate a random value to use as the administration token during initial configuration:

openssl rand -hex 10

66ef83a4b21cebde6996

yum install openstack-keystone httpd mod_wsgi -y
vim /etc/keystone/keystone.conf
su -s /bin/sh -c "keystone-manage db_sync" keystone
keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
keystone-manage bootstrap --bootstrap-password 111111 \
  --bootstrap-admin-url http://controller:35357/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

echo ServerName controller >> /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl enable httpd.service
systemctl restart httpd.service

(VM snapshot 6 taken at this point.)

Configure the administrative account:

export OS_USERNAME=admin
export OS_PASSWORD=111111
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3

The variant below also sets OS_TOKEN. Use it with caution, because it can cause errors. In testing, the set above worked fine on its own.

export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
export OS_TOKEN=66ef83a4b21cebde6996
export OS_USERNAME=admin
export OS_PASSWORD=111111
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
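Before running the openstack commands that follow, it can help to confirm which of the variables above are actually set. This is a sketch under the following assumptions. `check_os_env` is a hypothetical helper. The list of required names is the set exported above. It only warns about OS_TOKEN, because the article notes that setting it can cause errors.

```shell
# Sketch: report which of the OS_* variables exported above are missing.
# check_os_env is a hypothetical helper; it returns 0 when all are set, 1 otherwise.
check_os_env() {
    local var val missing=0
    for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
               OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        eval "val=\${$var:-}"          # portable indirect lookup of $var's value
        if [ -z "$val" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    # The article warns that also setting OS_TOKEN can cause errors.
    if [ -n "${OS_TOKEN:-}" ]; then
        echo "warning: OS_TOKEN is set (unset OS_TOKEN if commands fail)" >&2
    fi
    return "$missing"
}
# usage: check_os_env && openstack token issue
```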

openstack project create --domain default --description "Service Project" service
openstack project create --domain default --description "Demo Project" demo
openstack user create --domain default --password-prompt demo
(enter 111111 twice at the password prompt)
openstack role create user
openstack role add --project demo --user demo user

For security, remove the temporary admin_token_auth middleware from the pipelines in keystone-paste.ini, then unset the temporary variables:

sed -i '/^pipeline/s/ admin_token_auth//' /etc/keystone/keystone-paste.ini
unset OS_AUTH_URL OS_PASSWORD

As the admin user, request an authentication token:

openstack --os-auth-url http://controller:35357/v3 \
  --os-project-domain-name default --os-user-domain-name default \
  --os-project-name admin --os-username admin token issue

As the demo user, request an authentication token:

openstack --os-auth-url http://controller:5000/v3 \
  --os-project-domain-name default --os-user-domain-name default \
  --os-project-name demo --os-username demo token issue

cat >admin-openrc<<eof
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=111111
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
eof

cat > demo-openrc<<eof
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=demo
export OS_USERNAME=demo
export OS_PASSWORD=111111
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
eof
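admin-openrc and demo-openrc differ only in the project/user name, the password and the Keystone URL, so one function can generate both. This is a sketch, and `write_openrc` is a hypothetical name. The password is written unquoted, so keep it to characters that are safe in the shell.

```shell
# Sketch: generate an openrc file; admin-openrc and demo-openrc differ only in
# project/user name, password and Keystone URL.
# write_openrc is a hypothetical helper. The password is written unquoted,
# so keep it to shell-safe characters.
# usage:
#   write_openrc admin-openrc admin 111111 http://controller:35357/v3
#   write_openrc demo-openrc  demo  111111 http://controller:5000/v3
write_openrc() {
    local out="$1" name="$2" pass="$3" url="$4"
    cat > "$out" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$name
export OS_USERNAME=$name
export OS_PASSWORD=$pass
export OS_AUTH_URL=$url
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    chmod 600 "$out"    # the file contains a password
}
```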

. admin-openrc

openstack token issue

mysql -uroot -proot
CREATE DATABASE glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'glance';
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glance';
exit

openstack user create --domain default --password-prompt glance
(enter 111111 twice at the password prompt)
openstack role add --project service --user glance admin
openstack service create --name glance --description "OpenStack Image" image
openstack endpoint create --region RegionOne image public http://controller:9292
openstack endpoint create --region RegionOne image internal http://controller:9292
openstack endpoint create --region RegionOne image admin http://controller:9292
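Every service in this article gets the same three endpoints (public, internal and admin) pointing at a single URL. The following is a minimal sketch that loops over the three interfaces. `create_endpoints` and the `OPENSTACK_CMD` override are hypothetical additions. The override lets you dry-run the loop with `OPENSTACK_CMD=echo` before it touches Keystone.

```shell
# Sketch: create the public, internal and admin endpoints of one service.
# create_endpoints and OPENSTACK_CMD are hypothetical; OPENSTACK_CMD=echo gives a dry run.
# usage (after ". admin-openrc"):
#   create_endpoints image     http://controller:9292
#   create_endpoints compute   http://controller:8774/v2.1
#   create_endpoints placement http://controller:8778
#   create_endpoints network   http://controller:9696
create_endpoints() {
    local service="$1" url="$2" iface
    for iface in public internal admin; do
        # Left unquoted on purpose so OPENSTACK_CMD may hold a command plus arguments
        ${OPENSTACK_CMD:-openstack} endpoint create --region RegionOne \
            "$service" "$iface" "$url" || return 1
    done
}
```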

yum install openstack-glance -y

Configuration files:

/etc/glance/glance-api.conf

[database]
connection = mysql+pymysql://glance:glance@controller/glance

[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = 111111

[paste_deploy]
flavor = keystone

[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/

/etc/glance/glance-registry.conf

[database]
connection = mysql+pymysql://glance:glance@controller/glance

[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = 111111

[paste_deploy]
flavor = keystone
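The [keystone_authtoken] section is the same in glance-api.conf, glance-registry.conf, nova.conf, neutron.conf and cinder.conf, apart from `username` and `password`. The following is a sketch that prints the section for one service user. It assumes the controller hostname and ports used throughout this article. `authtoken_section` is a hypothetical helper, and you still need to paste or merge its output into the right file yourself.

```shell
# Sketch: print the [keystone_authtoken] section for one service user.
# authtoken_section is a hypothetical helper; hostname and ports follow this article.
# usage: authtoken_section glance 111111
authtoken_section() {
    local user="$1" pass="$2"
    cat <<EOF
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = $user
password = $pass
EOF
}
```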

Populate the Image service database:

su -s /bin/sh -c "glance-manage db_sync" glance

Finalize the installation:

systemctl enable openstack-glance-api.service openstack-glance-registry.service
systemctl start openstack-glance-api.service openstack-glance-registry.service

Verify the Image service using CirrOS, a small Linux image that helps you test your OpenStack deployment:

. admin-openrc
yum install wget -y
wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img
openstack image create "cirros" --file cirros-0.3.5-x86_64-disk.img \
  --disk-format qcow2 --container-format bare --public

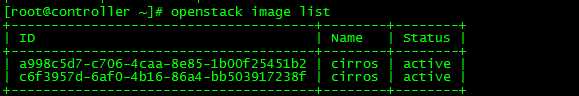

Confirm that the image was uploaded and check its attributes:

openstack image list

mysql -uroot -proot

CREATE DATABASE nova_api;
CREATE DATABASE nova;
CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova';
exit

. admin-openrc
openstack user create --domain default --password-prompt nova
(enter 111111 twice at the password prompt)
openstack role add --project service --user nova admin
openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1
openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1
openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

openstack user create --domain default --password-prompt placement
(enter 111111 twice at the password prompt)
openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement
openstack endpoint create --region RegionOne placement public http://controller:8778
openstack endpoint create --region RegionOne placement internal http://controller:8778
openstack endpoint create --region RegionOne placement admin http://controller:8778

yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api -y

/etc/nova/nova.conf

[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:rabbit@controller
my_ip = 192.168.2.19
use_neutron = True
firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]
connection = mysql+pymysql://nova:nova@controller/nova_api

[database]
connection = mysql+pymysql://nova:nova@controller/nova

[api]
# ...
auth_strategy = keystone

[keystone_authtoken]
# ...
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
password = 111111

[vnc]
enabled = true
# ...
vncserver_listen=$my_ip
vncserver_proxyclient_address=$my_ip

[glance]
# ...
api_servers=http://controller:9292

[oslo_concurrency]
# ...
lock_path=/var/lib/nova/tmp

[placement]
# ...
os_region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:35357/v3
username = placement
password = 111111

Add the following to /etc/httpd/conf.d/00-nova-placement-api.conf:

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
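If you script this step, appending the block blindly on every run would duplicate it. The following is a minimal sketch that appends the block only when `<Directory /usr/bin>` is not already in the file. `add_placement_dir_block` is a hypothetical name. Restart httpd afterwards, as the next step does.

```shell
# Sketch: append the placement <Directory /usr/bin> block only once.
# add_placement_dir_block is a hypothetical helper; re-running it is harmless.
add_placement_dir_block() {
    local file="${1:-/etc/httpd/conf.d/00-nova-placement-api.conf}"
    grep -qF '<Directory /usr/bin>' "$file" && return 0
    # Quoted 'EOF': the block is appended literally, with no shell expansion
    cat >> "$file" <<'EOF'
<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
EOF
}
```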

systemctl restart httpd.service
su -s /bin/sh -c "nova-manage api_db sync" nova
su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
su -s /bin/sh -c "nova-manage db sync" nova

These commands may print warnings like the following. They can be ignored:

/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')
  result = self._query(query)
/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')
  result = self._query(query)

nova-manage cell_v2 list_cells

systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

On the compute node (the compute service logs to /var/log/nova/nova-compute.log):

yum install openstack-nova-compute

/etc/nova/nova.conf
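The official install guide also has you check on the compute node whether the CPU supports hardware acceleration. If /proc/cpuinfo shows no `vmx` (Intel VT-x) or `svm` (AMD-V) flag, set `virt_type = qemu` in the `[libvirt]` section of /etc/nova/nova.conf. This is common inside a VM without nested virtualization. The following is a sketch of that check. `choose_virt_type` is a hypothetical helper that takes the cpuinfo path as an optional parameter.

```shell
# Sketch of the hardware-acceleration check: with no vmx/svm CPU flag, KVM is
# unavailable and nova-compute should use virt_type = qemu.
# choose_virt_type is a hypothetical helper; it prints "kvm" or "qemu".
choose_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c counts matching lines (one flags line per logical CPU)
    if [ "$(grep -Ec '(vmx|svm)' "$cpuinfo")" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
# usage: printf '[libvirt]\nvirt_type = %s\n' "$(choose_virt_type)"
```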

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service &

Important: run the following commands on the controller node.

. admin-openrc
openstack hypervisor list
su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

This prints:

Found 2 cell mappings.
Skipping cell0 since it does not contain hosts.
Getting compute nodes from cell 'cell1': e46118d6-f516-4249-8e11-559f1a2602be
Found 1 computes in cell: e46118d6-f516-4249-8e11-559f1a2602be
Checking host mapping for compute host 'compute1': 9460baa8-d770-4841-9934-2e02df2b1ec9
Creating host mapping for compute host 'compute1': 9460baa8-d770-4841-9934-2e02df2b1ec9

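When you add compute nodes later, either rerun nova-manage cell_v2 discover_hosts on the controller, or have the scheduler discover them periodically (interval in seconds) by setting the following in /etc/nova/nova.conf:

[scheduler]
discover_hosts_in_cells_interval = 300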

Run these commands on the controller node to verify the Compute service:

. admin-openrc

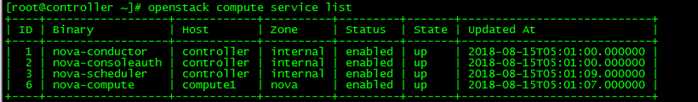

openstack compute service list

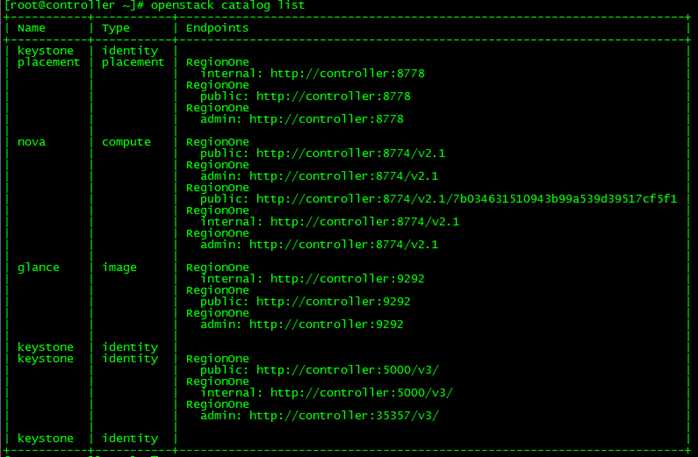

openstack catalog list

openstack image list

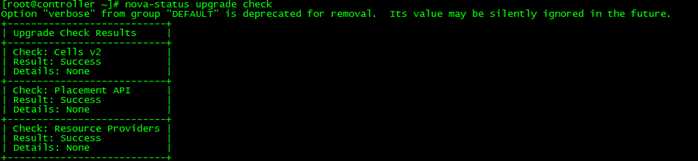

nova-status upgrade check

mysql -uroot -proot
CREATE DATABASE neutron;
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron';
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron';
exit

. admin-openrc
openstack user create --domain default --password-prompt neutron
(enter 111111 twice at the password prompt)
openstack role add --project service --user neutron admin

Create the neutron service entity:

openstack service create --name neutron --description "OpenStack Networking" network

Create the Networking service API endpoints:

openstack endpoint create --region RegionOne network public http://controller:9696
openstack endpoint create --region RegionOne network internal http://controller:9696
openstack endpoint create --region RegionOne network admin http://controller:9696

- Networking option 1: Provider networks
- Networking option 2: Self-service networks (used in this article)

Install the components:

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Configure the server component:

/etc/neutron/neutron.conf

[database]
# ...
connection = mysql+pymysql://neutron:neutron@controller/neutron

[DEFAULT]
# ...
core_plugin = ml2
service_plugins = router
allow_overlapping_ips = true
transport_url = rabbit://openstack:rabbit@controller
auth_strategy = keystone
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true

[keystone_authtoken]
# ...
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = 111111

[nova]
# ...
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = nova
password = 111111

[oslo_concurrency]
# ...
lock_path = /var/lib/neutron/tmp

Configure the Modular Layer 2 (ML2) plug-in:

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]
# ...
type_drivers = flat,vlan,vxlan
tenant_network_types = vxlan
mechanism_drivers = linuxbridge,l2population
extension_drivers = port_security

[ml2_type_flat]
# ...
flat_networks = provider

[ml2_type_vxlan]
# ...
vni_ranges = 1:1000

[securitygroup]
# ...
enable_ipset = true

Configure the Linux bridge agent:

/etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]
physical_interface_mappings = provider:ens33

[vxlan]
enable_vxlan = true
local_ip = 192.168.2.19
l2_population = true

[securitygroup]
# ...
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure the layer-3 agent:

/etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

Configure the DHCP agent:

/etc/neutron/dhcp_agent.ini

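Configure the metadata agent: /etc/neutron/metadata_agent.ini

The metadata agent needs the metadata proxy secret. This is the first place the secret is set, so you can choose any value (111111 in this example). The same value must then be used in /etc/nova/nova.conf.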
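Because the secret has to match in both files, a quick consistency check can save a round of debugging. This is a sketch. It assumes both files contain a plain `metadata_proxy_shared_secret = value` line: in the `[DEFAULT]` section of metadata_agent.ini and in the `[neutron]` section of nova.conf. `get_ini_value` and `metadata_secret_matches` are hypothetical helpers. They read the first matching line and ignore section names.

```shell
# Sketch: check that metadata_proxy_shared_secret has the same value in the
# metadata agent config and in nova.conf.
# get_ini_value / metadata_secret_matches are hypothetical helpers; they read the
# first "key = value" line for the key (commented-out "#key" lines are skipped)
# and ignore which section it is in.
get_ini_value() {
    local file="$1" key="$2"
    sed -n "s/^[[:space:]]*$key[[:space:]]*=[[:space:]]*//p" "$file" | head -n 1
}

# usage (as root): metadata_secret_matches && echo "metadata secrets match"
metadata_secret_matches() {
    local agent="${1:-/etc/neutron/metadata_agent.ini}"
    local nova="${2:-/etc/nova/nova.conf}"
    local a n
    a=$(get_ini_value "$agent" metadata_proxy_shared_secret)
    n=$(get_ini_value "$nova" metadata_proxy_shared_secret)
    # Fail if the secret is missing, or if the two values differ
    [ -n "$a" ] && [ "$a" = "$n" ]
}
```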

/etc/nova/nova.conf

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service:

systemctl restart openstack-nova-api.service

For both networking options (whether you chose option 1 or option 2):

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

For networking option 2, also enable the layer-3 service and configure it to start when the system boots:

systemctl enable neutron-l3-agent.service

systemctl start neutron-l3-agent.service

On the compute node, install the components:

yum install openstack-neutron-linuxbridge ebtables ipset -y

Configure the common Networking components (authentication mechanism, message queue and plug-in):

/etc/neutron/neutron.conf

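Choose the same networking option that you chose on the controller node:

- Networking option 1: Provider networks
- Networking option 2: Self-service networks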

Configure the Linux bridge agent:

/etc/neutron/plugins/ml2/linuxbridge_agent.ini

/etc/nova/nova.conf

Restart the Compute service:

systemctl restart openstack-nova-compute.service

Start the Linux bridge agent and configure it to start when the system boots:

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

Note: run these commands on the controller node.

. admin-openrc

openstack extension list --network

Then follow the verification steps for the networking option you chose. Here that is option 2, self-service networks.

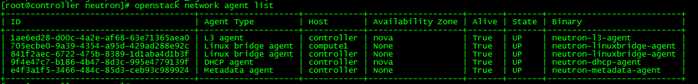

The output should list four agents on the controller node and one agent on each compute node:

openstack network agent list

This section describes how to install and configure the dashboard on the controller node.

yum install openstack-dashboard -y

/etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"
ALLOWED_HOSTS = ['horizon.example.com', 'localhost', '192.168.2.19']

systemctl restart httpd.service memcached.service

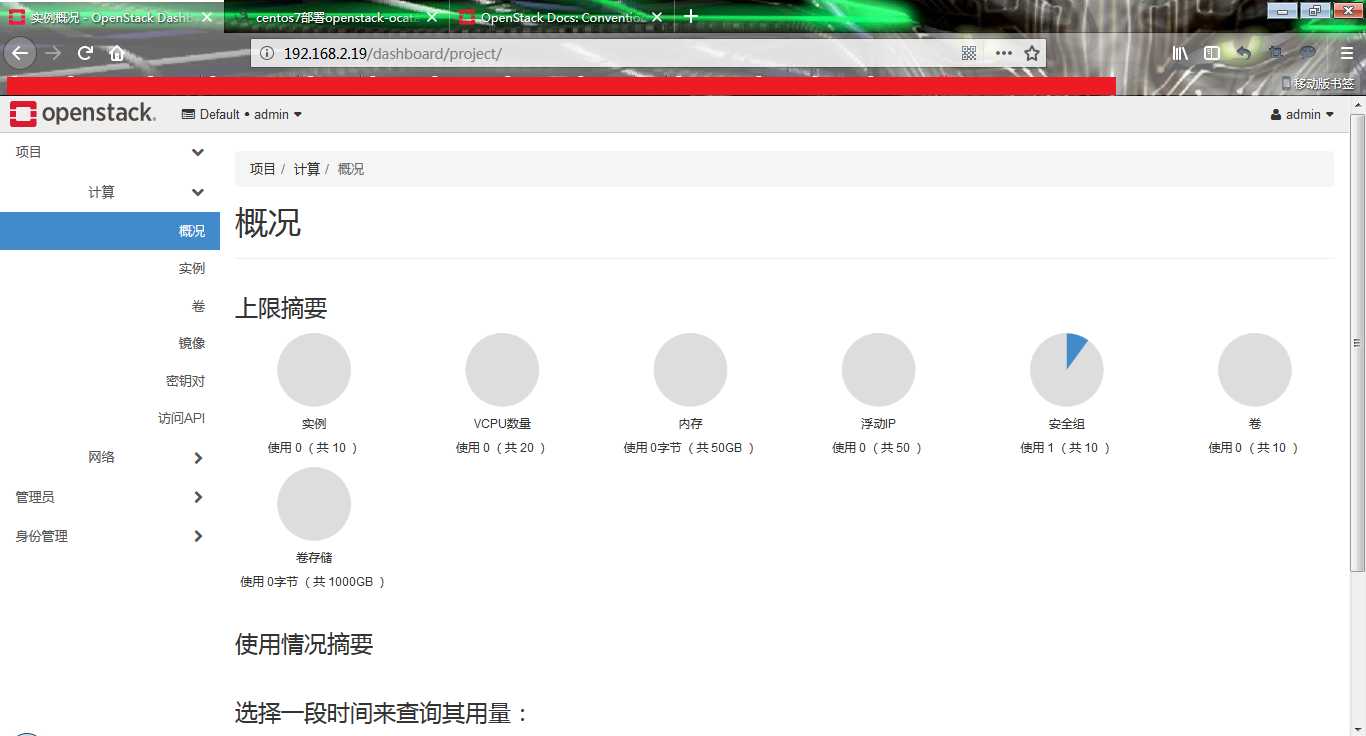

Browse to http://192.168.2.19/dashboard and log in as admin with password 111111.

Troubleshooting: the dashboard page does not open after Dashboard is installed.

[root@controller ~]# cd /var/log/httpd/
[root@controller httpd]# less error_log
[Wed Aug 15 04:55:22.431328 2018] [core:error] [pid 109774] [client 192.168.2.1:9918] Script timed out before returning headers: django.wsgi
[Wed Aug 15 04:56:15.073662 2018] [core:error] [pid 109701] [client 192.168.2.1:9748] End of script output before headers: django.wsgi

Fix: edit /etc/httpd/conf.d/openstack-dashboard.conf and add this line below WSGISocketPrefix run/wsgi:

WSGIApplicationGroup %{GLOBAL}

Save the file, then restart httpd.
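You can apply the httpd fix above with sed so that rerunning it does not add the line twice. This is a sketch. `fix_dashboard_wsgi` is a hypothetical name, and the function assumes GNU sed (the CentOS 7 default) for the one-line `a` command.

```shell
# Sketch of the fix above: insert the WSGIApplicationGroup line after
# "WSGISocketPrefix run/wsgi", only if it is not already present.
# fix_dashboard_wsgi is a hypothetical helper; requires GNU sed (CentOS 7 default).
# usage (as root): fix_dashboard_wsgi && systemctl restart httpd.service
fix_dashboard_wsgi() {
    local file="${1:-/etc/httpd/conf.d/openstack-dashboard.conf}"
    grep -qF 'WSGIApplicationGroup %{GLOBAL}' "$file" && return 0
    sed -i '/^WSGISocketPrefix run\/wsgi/a WSGIApplicationGroup %{GLOBAL}' "$file"
}
```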

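This section describes how to install and configure the Block Storage service, code-named cinder, on the controller node.

This service requires at least one additional storage node that provides volumes to instances.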

mysql -u root -proot
CREATE DATABASE cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'cinder';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder';
exit

openstack user create --domain default --password-prompt cinder
(enter 111111 twice at the password prompt)
openstack role add --project service --user cinder admin

Note: the Block Storage service requires two service entities.

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2
openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

Note: the Block Storage service requires endpoints for each service entity.

openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s
openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s
openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s
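The six commands above differ only in API version and interface, so a nested loop covers them. This is a sketch. `create_cinder_endpoints` and the `OPENSTACK_CMD` dry-run override are hypothetical. When the URL is quoted, the parentheses in `%(project_id)s` need no backslash escaping. Keystone fills in the project ID when it serves the catalog.

```shell
# Sketch: the six Block Storage endpoints (volumev2/volumev3 x three interfaces).
# create_cinder_endpoints and OPENSTACK_CMD are hypothetical; OPENSTACK_CMD=echo gives a dry run.
# Inside double quotes "(" and ")" are literal, so %(project_id)s needs no backslashes.
create_cinder_endpoints() {
    local ver iface
    for ver in 2 3; do
        for iface in public internal admin; do
            ${OPENSTACK_CMD:-openstack} endpoint create --region RegionOne \
                "volumev$ver" "$iface" \
                "http://controller:8776/v$ver/%(project_id)s" || return 1
        done
    done
}
```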

yum install openstack-cinder -y

/etc/cinder/cinder.conf

[database]
# ...
connection = mysql+pymysql://cinder:cinder@controller/cinder

[DEFAULT]
# ...
transport_url = rabbit://openstack:rabbit@controller
auth_strategy = keystone
my_ip = 192.168.2.19

[keystone_authtoken]
# ...
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = cinder
password = 111111

[oslo_concurrency]
# ...
lock_path = /var/lib/cinder/tmp

Populate the Block Storage database:

su -s /bin/sh -c "cinder-manage db sync" cinder

vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

systemctl restart openstack-nova-api.service
systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

Note: perform the following steps on the storage node (compute1 in this lab).

yum install lvm2 -y
systemctl enable lvm2-lvmetad.service
systemctl start lvm2-lvmetad.service

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

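Only instances can access Block Storage volumes. However, the underlying operating system (e.g. CentOS) manages the devices associated with those volumes. By default, the LVM volume scanning tool scans the /dev directory for block storage devices that contain volumes.

If projects use LVM on their volumes, the scanning tool detects these volumes and tries to cache them. This can cause a variety of problems for both the underlying operating system and the project volumes. You must therefore reconfigure LVM to scan only the devices that contain the cinder-volumes volume group. Edit /etc/lvm/lvm.conf as follows:

vim /etc/lvm/lvm.conf

devices {
...
filter = [ "a/sdb/", "r/.*/"]
}

Or, if sda also holds an LVM volume:

filter = [ "a/sda/", "a/sdb/", "r/.*/"]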
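The filter accepts the listed disks and rejects everything else, and its rules are applied in order. The following is a sketch that builds the filter line from a list of device names. `lvm_filter_line` is a hypothetical helper. Each `a/NAME/` entry is a regular expression matched against device paths, so `a/sdb/` also accepts partitions such as /dev/sdb1.

```shell
# Sketch: build the devices/filter line for /etc/lvm/lvm.conf.
# Each listed disk is accepted ("a/NAME/"); everything else is rejected ("r/.*/").
# lvm_filter_line is a hypothetical helper.
# usage: lvm_filter_line sda sdb
lvm_filter_line() {
    local dev line='filter = ['
    for dev in "$@"; do
        line="$line \"a/$dev/\","
    done
    printf '%s "r/.*/" ]\n' "$line"
}
```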

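[PS]

On CentOS 7, pvcreate on this disk fails by default (the cause is still to be confirmed). Workaround:

Symptom:

[root@compute1 ~]# pvcreate /dev/sdb
Device /dev/sdb excluded by a filter.

Fix (this overwrites the first 32 KB of /dev/sdb and destroys any partition table or data at the start of the disk):

[root@compute1 ~]# dd if=/dev/urandom of=/dev/sdb bs=512 count=64
64+0 records in
64+0 records out
32768 bytes (33 kB) copied, 0.00760562 s, 4.3 MB/s
[root@compute1 ~]# pvcreate /dev/sdb
Physical volume "/dev/sdb" successfully created.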

Further reading: http://www.voidcn.com/article/p-uxhrkuzs-bsd.html

yum install openstack-cinder targetcli python-keystone

vim /etc/cinder/cinder.conf

[database]
# ...
connection = mysql+pymysql://cinder:cinder@controller/cinder

[DEFAULT]
# ...
transport_url = rabbit://openstack:rabbit@controller
auth_strategy = keystone
my_ip=192.168.2.21
enabled_backends = lvm
glance_api_servers = http://controller:9292

[keystone_authtoken]
# ...
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = cinder
password = 111111

Create the [lvm] section if it does not exist:

[lvm]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
volume_group = cinder-volumes
iscsi_protocol = iscsi
iscsi_helper = lioadm

[oslo_concurrency]
# ...
lock_path = /var/lib/cinder/tmp

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

Note: run these commands on the controller node.

. admin-openrc

openstack volume service list

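The Bare Metal service is a collection of components that provides support for managing and provisioning physical hardware.

The Container Infrastructure Management service (magnum) is an OpenStack API service that makes container orchestration engines (COE) such as Docker Swarm, Kubernetes and Mesos available as first-class resources in OpenStack.

The Database service (trove) provides cloud provisioning functionality for database engines.

The DNS service (designate) provides cloud provisioning functionality for DNS Zones and Recordsets.

The Key Manager service provides a RESTful API for storing secret data such as passphrases, encryption keys and X.509 certificates.

The Messaging service lets developers share data between distributed application components that perform different tasks, without losing messages or requiring every component to be always available.

The Object Storage service (swift) provides access to object storage and retrieval through a REST API.

The Orchestration service (heat) uses a Heat Orchestration Template (HOT) to create and manage cloud resources.

The Shared File Systems service (manila) provides coordinated access to shared or distributed file systems.

The Alarming service triggers alarms when the collected metering or event data break the predefined rules.

The Telemetry Data Collection service provides the following functions: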

Warning: before creating a private project network, you must create the provider network.

. admin-openrc
neutron net-create --shared --provider:physical_network provider --provider:network_type flat provider

neutron subnet-create --name provider --allocation-pool start=10.2.2.178,end=10.2.2.190 --disable-dhcp --gateway 10.2.2.1 provider 10.2.2.0/24

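On the controller node, source the demo credentials to get access to user-only CLI commands, then create the self-service network:

. demo-openrc
openstack network create selfservice
openstack subnet create --network selfservice --dns-nameserver 8.8.4.4 --gateway 172.16.1.1 --subnet-range 172.16.1.0/24 selfservice

Create the router, attach the self-service subnet to it, and set the provider network as its gateway:

. admin-openrc
. demo-openrc
openstack router create router
neutron router-interface-add router selfservice
neutron router-gateway-set router provider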
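The neutron CLI used above is deprecated in favour of the unified openstack client. The following is a sketch of the same provider and self-service setup using openstack commands and this article's values. `setup_provider_network`, `setup_selfservice_network` and the `OPENSTACK_CMD` dry-run override are hypothetical. There is one deliberate difference: the provider network is created with `--external`, because Neutron only accepts an external network as a router gateway.

```shell
# Sketch: provider and self-service networks with the unified openstack client.
# Function names and OPENSTACK_CMD are hypothetical; OPENSTACK_CMD=echo prints the commands.

# Run with admin credentials (. admin-openrc).
setup_provider_network() {
    local os="${OPENSTACK_CMD:-openstack}"
    # --external lets routers use this flat network as their gateway
    $os network create --share --external \
        --provider-physical-network provider \
        --provider-network-type flat provider || return 1
    # Same pool and gateway as the neutron subnet-create above; DHCP disabled
    $os subnet create --network provider \
        --allocation-pool start=10.2.2.178,end=10.2.2.190 \
        --no-dhcp --gateway 10.2.2.1 \
        --subnet-range 10.2.2.0/24 provider
}

# Run with demo credentials (. demo-openrc).
setup_selfservice_network() {
    local os="${OPENSTACK_CMD:-openstack}"
    $os network create selfservice || return 1
    $os subnet create --network selfservice --dns-nameserver 8.8.4.4 \
        --gateway 172.16.1.1 --subnet-range 172.16.1.0/24 selfservice || return 1
    $os router create router || return 1
    $os router add subnet router selfservice || return 1
    $os router set router --external-gateway provider
}
```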

. admin-openrc

ip netns

neutron router-port-list router

......

For the remaining steps, see the official guide: https://docs.openstack.org/ocata/zh_CN/install-guide-rdo/common/conventions.html


Original article: https://www.cnblogs.com/daynote/p/9747053.html