
Hadoop HelloWorld Program

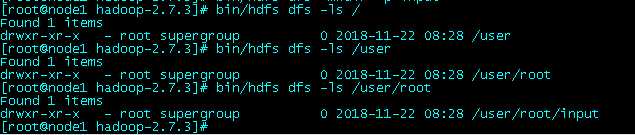

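The hdfs command lives in the bin directory. Use `hdfs dfs -mkdir` to create a directory in HDFS; the `-p` option also creates any missing parent directories.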

[root@node1 hadoop-2.7.3]# bin/hdfs dfs -mkdir -p input

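A relative path passed to `hdfs dfs` is resolved against the current user's HDFS home directory, /user/{username}/, where {username} is the current user name. The input directory is therefore created at /user/root/input.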
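The resolution rule can be sketched in plain shell. This only illustrates the rule described above and does not call Hadoop; the function name `resolve_hdfs_path` is made up for this example.

```shell
#!/bin/sh
# Illustration only: mimics how a path argument to `hdfs dfs` is resolved.
# resolve_hdfs_path is a hypothetical helper, not part of Hadoop.
resolve_hdfs_path() {
    user="$1"
    path="$2"
    case "$path" in
        /*) printf '%s\n' "$path" ;;                  # absolute path: used as-is
        *)  printf '/user/%s/%s\n' "$user" "$path" ;; # relative path: under the home directory
    esac
}

resolve_hdfs_path root input        # prints /user/root/input
resolve_hdfs_path root /tmp/input   # prints /tmp/input
```

On the cluster itself, `bin/hdfs dfs -ls /user/root` should show the new input directory.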

The `hdfs dfs -ls` command lists files and directories in HDFS. Here it lists the root directory:

[root@node1 hadoop-2.7.3]# bin/hdfs dfs -ls /

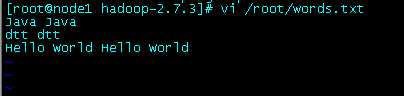

Create a text file on the local file system:

vi /root/words.txt

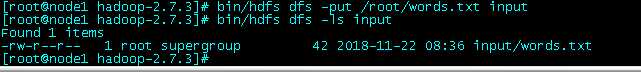
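The file can also be created without an editor. The text below is an assumption, not the file from the screenshot: it is a made-up sample with three lines, eight words, and 42 bytes, which happens to line up with the `Map input records=3`, `Map output records=8`, and `Bytes Read=42` counters in the job output. The default path /tmp/words.txt is likewise a placeholder; the article uses /root/words.txt.

```shell
#!/bin/sh
# Assumption: sample text only; the article's real file is shown in its screenshot.
# The article stores the file at /root/words.txt; set WORDS_FILE to match.
WORDS_FILE="${WORDS_FILE:-/tmp/words.txt}"

# Write the sample with a here-document instead of opening vi.
cat > "$WORDS_FILE" <<'EOF'
Hello World
Hello Java dtt
Java World dtt
EOF

# Quick check: line, word, and byte counts of the new file.
wc "$WORDS_FILE"
```

Running it as `WORDS_FILE=/root/words.txt sh make_words.sh` (script name hypothetical) would place the file where the upload step expects it.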

Upload the local file /root/words.txt to the input directory in HDFS, then list the directory to confirm the upload:

bin/hdfs dfs -put /root/words.txt input

bin/hdfs dfs -ls input

Run the wordcount example that ships with Hadoop, reading from input and writing to output:

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input output
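One practical point when repeating this step: a MapReduce job refuses to start if its output directory already exists, so a second run needs output removed first. Below is a minimal sketch of a re-runnable wrapper. The `run` helper and the `DRY_RUN` switch are assumptions added for illustration; by default the script only prints the commands so it is safe to read and try anywhere.

```shell
#!/bin/sh
# Sketch of a re-runnable wrapper. DRY_RUN=1 (the default here) only prints
# the commands; set DRY_RUN=0 on a real cluster, from the hadoop-2.7.3 directory.
DRY_RUN="${DRY_RUN:-1}"
JAR=share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar

# run: hypothetical helper that either echoes or executes a command.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"          # show the command instead of executing it
    else
        "$@"
    fi
}

# Remove any previous output directory before submitting the job.
# -f keeps -rm from failing when the directory does not exist yet.
run bin/hdfs dfs -rm -r -f output
run bin/hadoop jar "$JAR" wordcount input output
```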

[root@node1 hadoop-2.7.3]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input output

18/11/22 08:37:53 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

18/11/22 08:37:55 INFO input.FileInputFormat: Total input paths to process : 1

18/11/22 08:37:56 INFO mapreduce.JobSubmitter: number of splits:1

18/11/22 08:37:56 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1542892421463_0001

18/11/22 08:37:57 INFO impl.YarnClientImpl: Submitted application application_1542892421463_0001

18/11/22 08:37:57 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1542892421463_0001/

18/11/22 08:37:57 INFO mapreduce.Job: Running job: job_1542892421463_0001

18/11/22 08:39:05 INFO mapreduce.Job: Job job_1542892421463_0001 running in uber mode : false

18/11/22 08:39:05 INFO mapreduce.Job: map 0% reduce 0%

18/11/22 08:39:53 INFO mapreduce.Job: map 100% reduce 0%

18/11/22 08:40:27 INFO mapreduce.Job: map 100% reduce 100%

18/11/22 08:40:30 INFO mapreduce.Job: Job job_1542892421463_0001 completed successfully

18/11/22 08:40:35 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=51

FILE: Number of bytes written=237319

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=150

HDFS: Number of bytes written=29

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=43701

Total time spent by all reduces in occupied slots (ms)=28714

Total time spent by all map tasks (ms)=43701

Total time spent by all reduce tasks (ms)=28714

Total vcore-milliseconds taken by all map tasks=43701

Total vcore-milliseconds taken by all reduce tasks=28714

Total megabyte-milliseconds taken by all map tasks=44749824

Total megabyte-milliseconds taken by all reduce tasks=29403136

Map-Reduce Framework

Map input records=3

Map output records=8

Map output bytes=74

Map output materialized bytes=51

Input split bytes=108

Combine input records=8

Combine output records=4

Reduce input groups=4

Reduce shuffle bytes=51

Reduce input records=4

Reduce output records=4

Spilled Records=8

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=342

CPU time spent (ms)=3420

Physical memory (bytes) snapshot=283492352

Virtual memory (bytes) snapshot=4202385408

Total committed heap usage (bytes)=138788864

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=42

File Output Format Counters

Bytes Written=29
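The counters are easier to read when pulled out by name. For instance, `Combine input records=8` against `Combine output records=4` shows the combiner merging duplicate words before the shuffle. The sketch below extracts values from a saved copy of the log; the excerpt is copied from the output above, while the `counter` helper and the temporary file are assumptions for this example.

```shell
#!/bin/sh
# Excerpt of the job output above, saved to a temporary file for the demo.
LOG="$(mktemp)"
cat > "$LOG" <<'EOF'
Map output records=8
Combine input records=8
Combine output records=4
Reduce input records=4
EOF

# counter NAME: print the value that follows "NAME=" in the log.
# Leading whitespace is stripped because real job logs indent counter lines.
counter() {
    awk -F= -v name="$1" '{
        key = $1
        sub(/^[ \t]+/, "", key)
        if (key == name) print $2
    }' "$LOG"
}

in_records="$(counter 'Combine input records')"
out_records="$(counter 'Combine output records')"
echo "combiner reduced $in_records records to $out_records"

rm -f "$LOG"
```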

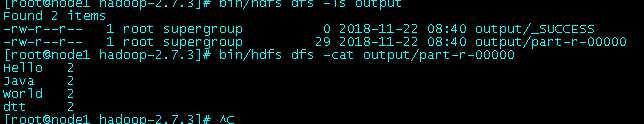

When the job finishes, list the output directory and print the result file:

bin/hdfs dfs -ls output

bin/hdfs dfs -cat output/part-r-00000

[root@node1 hadoop-2.7.3]# bin/hdfs dfs -ls output

Found 2 items

-rw-r--r-- 1 root supergroup 0 2018-11-22 08:40 output/_SUCCESS

-rw-r--r-- 1 root supergroup 29 2018-11-22 08:40 output/part-r-00000

[root@node1 hadoop-2.7.3]# bin/hdfs dfs -cat output/part-r-00000

Hello 2

Java 2

World 2

dtt 2
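The result can be cross-checked locally with standard Unix tools. This is a rough approximation of what the wordcount example computes (split on whitespace, count each word), not the Hadoop implementation. The sample input is an assumption, since the article's file is only shown as a screenshot, and `local_wordcount` is a name invented here.

```shell
#!/bin/sh
# Local approximation of the wordcount example: split on whitespace, count each word.
# local_wordcount is a hypothetical helper; it reads text on standard input.
local_wordcount() {
    tr -s '[:space:]' '\n' |                    # one word per line
        grep -v '^$' |                          # drop an empty line left by leading whitespace
        LC_ALL=C sort |                         # byte order: uppercase sorts before lowercase
        uniq -c |                               # prefix each word with its count
        awk '{ printf "%s\t%s\n", $2, $1 }'     # word<TAB>count, like part-r-00000
}

# Assumed sample input: three lines, eight words.
printf 'Hello World\nHello Java dtt\nJava World dtt\n' | local_wordcount
```

For this sample the function prints Hello, Java, World, and dtt, each with a count of 2, and the output is 29 bytes long, the same size as part-r-00000 in the listing above. On the cluster, piping `bin/hdfs dfs -cat input/words.txt` into `local_wordcount` should give output comparable to the job's result file.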


Original article: https://www.cnblogs.com/mtime2004/p/10004164.html