
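Note: /usr/local/src holds the downloaded installation packages, and /data/ is the directory where data is stored and packages are extracted.

1. Install Elasticsearch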

1.1 Install Java

[root@localhost data]# rpm -qa |grep java

tzdata-java-2015g-1.el7.noarch

javapackages-tools-3.4.1-11.el7.noarch

java-1.8.0-openjdk-headless-1.8.0.65-3.b17.el7.x86_64

java-1.7.0-openjdk-1.7.0.91-2.6.2.3.el7.x86_64

java-1.8.0-openjdk-1.8.0.65-3.b17.el7.x86_64

java-1.7.0-openjdk-headless-1.7.0.91-2.6.2.3.el7.x86_64

python-javapackages-3.4.1-11.el7.noarch

[root@localhost data]# rpm -e --nodeps java-1.7.0-openjdk-1.7.0.91-2.6.2.3.el7.x86_64

[root@localhost data]# rpm -e --nodeps java-1.7.0-openjdk-headless-1.7.0.91-2.6.2.3.el7.x86_64

[root@localhost data]# rpm -e --nodeps java-1.8.0-openjdk-1.8.0.65-3.b17.el7.x86_64

[root@localhost data]# rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.65-3.b17.el7.x86_64

[root@localhost data]# rpm -qa |grep java

tzdata-java-2015g-1.el7.noarch

javapackages-tools-3.4.1-11.el7.noarch

python-javapackages-3.4.1-11.el7.noarch

[root@localhost ~]# mkdir /data

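[root@localhost src]# tar xf jdk-8u191-linux-x64.tar.gz -C /data/

The archive extracts to /data/jdk1.8.0_191. Rename it to /data/jdk so that it matches the paths used below:

[root@localhost src]# mv /data/jdk1.8.0_191 /data/jdk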

[root@localhost jdk]# vim /etc/profile

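Append the following to the end of the file. Then run "source /etc/profile" (or log in again) so the variables take effect: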

export JAVA_HOME=/data/jdk

export JAVA_BIN=/data/jdk/bin

export JAVA_LIB=/data/jdk/lib

export JAVA_JRE=/data/jdk/jre

[root@localhost jdk]# ln -s /data/jdk/bin/java /usr/bin/

[root@localhost jdk]# java -version

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)
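Elasticsearch 6.x needs Java 8 or later. To script the check that "java -version" performs above, you could use the minimal Python sketch below. The function names are hypothetical helpers and are not part of any Elastic tool. The sketch handles both the old "1.8.0_191" version scheme and the newer "11.0.1" scheme.

```python
import re
import subprocess


def java_major_version(version_output: str) -> int:
    """Extract the major Java version from `java -version` output.

    Old scheme: "1.8.0_191" -> 8.  New scheme (Java 9+): "11.0.1" -> 11.
    """
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found in java -version output")
    parts = match.group(1).split(".")
    if parts[0] == "1":
        # Legacy scheme: 1.<major>.0_<update>
        return int(parts[1])
    # Newer scheme: the leading digits are the major version ("11-ea" -> 11)
    return int(re.match(r"\d+", parts[0]).group())


def installed_java_major() -> int:
    """Run the `java` found on PATH and return its major version."""
    # `java -version` prints its banner to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)
```

On the host above, where /usr/bin/java links to the JDK 1.8.0_191 binary, installed_java_major() should return 8.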

[root@localhost config]# mkdir /data/{es-data,es-logs}

Edit the configuration file. Note that every ":" must be followed by a space:

[root@localhost config]# vim elasticsearch.yml

path.data: /data/es-data

path.logs: /data/es-logs

network.host: 0.0.0.0

http.port: 9200
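A missing space after ":" is easy to overlook, and Elasticsearch will refuse to start because of it. A small check can catch the mistake first. Below is a minimal Python sketch. It assumes the file uses simple top-level "key: value" lines like the ones above. find_missing_spaces is a hypothetical helper and is no substitute for a real YAML parser.

```python
import re

# A key at the start of a line whose colon is NOT followed by whitespace,
# e.g. "http.cors.enabled:true". YAML reads such a line as a plain scalar
# instead of a key/value pair, so the file fails to parse.
_MISSING_SPACE = re.compile(r"^\s*([A-Za-z0-9_.\-]+):(?=\S)")


def find_missing_spaces(yaml_text: str) -> list:
    """Return (line_number, line) pairs whose key is not followed by ': '."""
    problems = []
    for number, line in enumerate(yaml_text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        if _MISSING_SPACE.match(line):
            problems.append((number, stripped))
    return problems
```

For example, find_missing_spaces(open("config/elasticsearch.yml").read()) returns an empty list when every key is followed by ": ".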

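Also add the following two lines after the network settings. elasticsearch-head (elasticsearch-head-master), which is installed later, needs them. In my setup Elasticsearch reported an error at startup with these lines present. So comment them out with a leading "#" for now, and remove the "#" after elasticsearch-head is installed. A common cause of that error is writing these lines without a space after ":". Written as below, they should parse.

http.cors.enabled: true

http.cors.allow-origin: "*"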
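network.host is set to 0.0.0.0, a non-loopback address, so Elasticsearch 6.x enforces its bootstrap checks at startup. It refuses to start if some system limits are too low:

- at least 65535 open file descriptors
- at least 4096 threads
- vm.max_map_count of at least 262144

This is why the limits.conf change below is needed. Here is a minimal Python sketch that reads the current values and compares them with those minimums. It is Linux only, and the helper names are hypothetical.

```python
import resource

# Minimums checked by Elasticsearch 6.x bootstrap checks.
MIN_NOFILE = 65535          # max open file descriptors
MIN_NPROC = 4096            # max number of threads (RLIMIT_NPROC)
MIN_MAX_MAP_COUNT = 262144  # vm.max_map_count kernel setting


def _below(value: int, minimum: int) -> bool:
    # RLIM_INFINITY means "unlimited", which always satisfies the check.
    return value != resource.RLIM_INFINITY and value < minimum


def limit_problems(nofile: int, nproc: int, max_map_count: int) -> list:
    """Describe every limit that is below the bootstrap-check minimum."""
    problems = []
    if _below(nofile, MIN_NOFILE):
        problems.append(f"nofile {nofile} < {MIN_NOFILE}")
    if _below(nproc, MIN_NPROC):
        problems.append(f"nproc {nproc} < {MIN_NPROC}")
    if max_map_count < MIN_MAX_MAP_COUNT:
        problems.append(f"vm.max_map_count {max_map_count} < {MIN_MAX_MAP_COUNT}")
    return problems


def current_limits() -> tuple:
    """Soft limits of this process plus vm.max_map_count (Linux only)."""
    nofile, _ = resource.getrlimit(resource.RLIMIT_NOFILE)
    nproc, _ = resource.getrlimit(resource.RLIMIT_NPROC)
    with open("/proc/sys/vm/max_map_count") as f:
        max_map_count = int(f.read())
    return nofile, nproc, max_map_count
```

Run limit_problems(*current_limits()) in a new login session of the user that starts Elasticsearch, because limits.conf changes apply only to new sessions. vm.max_map_count is a kernel setting changed with sysctl; limits.conf does not control it.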

[root@localhost data]# vim /etc/security/limits.conf

Append the following to the end of the file:

grunt-contrib-jasmine@1.0.3 node_modules/grunt-contrib-jasmine

├── sprintf-js@1.0.3

├── lodash@2.4.2

├── chalk@1.1.3 (escape-string-regexp@1.0.5, ansi-styles@2.2.1, supports-color@2.0.0, has-ansi@2.0.0, strip-ansi@3.0.1)

├── es5-shim@4.5.12

├── jasmine-core@2.99.1

├── rimraf@2.6.2 (glob@7.1.3)

└── grunt-lib-phantomjs@1.1.0 (eventemitter2@0.4.14, semver@5.6.0, temporary@0.0.8, phantomjs-prebuilt@2.1.16)

[root@localhost elasticsearch-head]#

Start it as a background process:

[root@localhost elasticsearch-head]# nohup grunt server &

[1] 5254

[root@localhost elasticsearch-head]# nohup: ignoring input and appending output to 'nohup.out'

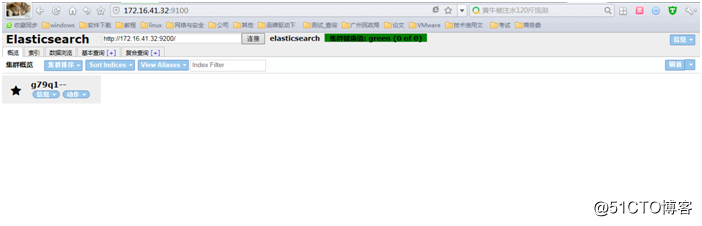

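You can now open elasticsearch-head in a web browser. It listens on port 9100 (http://172.16.41.32:9100 on this host).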

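By default the page shows "集群健康值: 未连接" (cluster health: not connected). This is nothing to worry about. Change "localhost" in the connection address to the host's IP address and click "连接" (Connect), and the cluster status is displayed normally.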

This completes the Elasticsearch configuration.
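The cluster health that elasticsearch-head displays is also available from Elasticsearch's cluster health API (GET /_cluster/health). Below is a minimal sketch that queries it with the Python standard library. It assumes the host address used in this article, and the function names are hypothetical.

```python
import json
import urllib.request


def health_summary(body: str) -> str:
    """Condense a GET /_cluster/health response into one line."""
    health = json.loads(body)
    return "{}: status={}, nodes={}".format(
        health["cluster_name"], health["status"], health["number_of_nodes"])


def fetch_health(base_url: str, timeout: float = 5.0) -> str:
    """Query the cluster health API, e.g. base_url="http://172.16.41.32:9200"."""
    url = base_url.rstrip("/") + "/_cluster/health"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return health_summary(response.read().decode("utf-8"))
```

A "yellow" status is normal on a single-node 6.x cluster. Each primary shard gets one replica by default, and that replica cannot be allocated without a second node.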

2. Install Logstash

2.1 Install Logstash manually

In the logstash/config directory, create a .conf configuration file. The name is up to you; I used default.conf, with the following content:

# Listen on port 5044 for input from Beats

input {

beats {

port => "5044"

}

}

# Filter the data

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

geoip {

source => "clientip"

}

}

# Output to port 9200 on this host, the port the Elasticsearch service listens on

output {

elasticsearch {

hosts => ["<local IP address>:9200"]

}

}
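The grok filter above applies the predefined COMBINEDAPACHELOG pattern. That pattern splits an Apache "combined" access-log line into named fields, and geoip then looks up the extracted clientip field. To illustrate what grok extracts, here is a simplified Python regular expression that approximates the pattern. It is for illustration only. The real grok pattern is more permissive (for example for IPv6 hosts or malformed requests), and parse_combined is a hypothetical helper.

```python
import re

# Simplified approximation of grok's %{COMBINEDAPACHELOG}:
# clientip ident auth [timestamp] "verb request HTTP/x" response bytes "referrer" "agent"
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(message: str):
    """Return the named fields of a matching line, or None if it does not match."""
    match = COMBINED.match(message)
    return match.groupdict() if match else None
```

Lines in other formats, such as ordinary syslog messages, do not match. Logstash tags such events "_grokparsefailure" and they get no clientip field, so geoip has nothing to look up.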

Change into the Filebeat directory:

[root@localhost data]# cd filebeat/

Start it in the background:

[root@localhost filebeat]# ./filebeat &

Check that it is running:

[root@localhost filebeat]# ps -ef |grep filebeat

root 70376 48524 0 16:19 pts/3 00:00:00 ./filebeat

root 70391 48524 0 16:20 pts/3 00:00:00 grep --color=auto filebeat

[root@localhost filebeat]# tail logs/filebeat

2018-11-27T16:19:36+08:00 INFO Loading Prospectors: 1

2018-11-27T16:19:36+08:00 INFO Prospector with previous states loaded: 5

2018-11-27T16:19:36+08:00 INFO Starting Registrar

2018-11-27T16:19:36+08:00 INFO Starting prospector of type: log; id: 17005676086519951868

2018-11-27T16:19:36+08:00 INFO Start sending events to output

2018-11-27T16:19:36+08:00 INFO Loading and starting Prospectors completed. Enabled prospectors: 1

2018-11-27T16:19:36+08:00 INFO Starting spooler: spool_size: 2048; idle_timeout: 5s

2018-11-27T16:20:06+08:00 INFO Non-zero metrics in the last 30s: publish.events=5 registrar.states.current=5 registrar.states.update=5 registrar.writes=1

2018-11-27T16:20:36+08:00 INFO No non-zero metrics in the last 30s

2018-11-27T16:21:06+08:00 INFO No non-zero metrics in the last 30s

Filebeat has started normally.
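You can confirm from the log that events are actually being shipped. Filebeat 5.x reports a publish.events counter in its periodic "Non-zero metrics" lines, as in the log above, and summing it shows how many events went out. Below is a minimal Python sketch. published_events is a hypothetical helper and assumes the log format shown above.

```python
import re

# Matches "name=value" pairs such as "publish.events=5".
_METRIC = re.compile(r"(\S+)=(\d+)")


def published_events(log_lines) -> int:
    """Sum publish.events over Filebeat 5.x "Non-zero metrics" log lines."""
    total = 0
    for line in log_lines:
        if "Non-zero metrics" not in line:
            continue  # e.g. "No non-zero metrics in the last 30s"
        metrics = dict(_METRIC.findall(line))
        total += int(metrics.get("publish.events", 0))
    return total
```

For example, published_events(open("logs/filebeat")) sums the counter over the whole log. For the ten lines shown above the result is 5.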

Open the firewall ports:

[root@localhost logstash]# firewall-cmd --add-port=5044/tcp --permanent

success

[root@localhost logstash]# firewall-cmd --add-port=9600/tcp --permanent

success

[root@localhost logstash]# firewall-cmd --reload
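firewall-cmd only opens the ports. To check from another machine that they are reachable through the firewall, a plain TCP connection test is enough. Below is a minimal Python sketch; port_open and check_ports are hypothetical helpers.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable.
        return False


def check_ports(host: str, ports) -> dict:
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_open(host, port) for port in ports}
```

For example, run check_ports("172.16.41.32", [9100, 9200, 5044, 5601]) from another host. A port reports open only once its service is listening. The netstat output below shows Logstash's port 9600 bound to 127.0.0.1, so other hosts cannot reach it even with the firewall rule in place.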

Set the path.data directory, otherwise Logstash reports an error at startup:

[root@localhost logstash]# bin/logstash -f config/default.conf --path.data=/data/es-logstash-log

Start Logstash in the background:

[root@localhost logstash]# bin/logstash -f config/default.conf &

Check the listening ports:

[root@localhost config]# netstat -ntlup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 172.16.41.32:9100 0.0.0.0:* LISTEN 47943/grunt

tcp 0 0 192.168.122.1:53 0.0.0.0:* LISTEN 2854/dnsmasq

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1566/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 1571/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2070/master

tcp 0 0 172.16.41.32:5601 0.0.0.0:* LISTEN 73103/bin/../node/b

tcp6 0 0 :::9200 :::* LISTEN 48329/java

tcp6 0 0 :::5044 :::* LISTEN 71717/java

tcp6 0 0 :::9300 :::* LISTEN 48329/java

tcp6 0 0 :::22 :::* LISTEN 1566/sshd

tcp6 0 0 ::1:631 :::* LISTEN 1571/cupsd

tcp6 0 0 ::1:25 :::* LISTEN 2070/master

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 71717/java

udp 0 0 192.168.122.1:53 0.0.0.0:* 2854/dnsmasq

udp 0 0 0.0.0.0:67 0.0.0.0:* 2854/dnsmasq

udp 0 0 0.0.0.0:53381 0.0.0.0:* 939/avahi-daemon: r

udp 0 0 0.0.0.0:5353 0.0.0.0:* 939/avahi-daemon: r

udp 0 0 127.0.0.1:323 0.0.0.0:* 960/chronyd

udp6 0 0 ::1:323 :::* 960/chronyd

[root@localhost config]#
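Instead of reading the netstat output by eye, you can extract the listening TCP ports and compare them with the ones this setup expects:

- 9200 and 9300 (Elasticsearch)
- 5044 and 9600 (Logstash)
- 9100 (elasticsearch-head)
- 5601 (Kibana)

Below is a minimal Python sketch that parses the "netstat -ntlup" format shown above. The helper names are hypothetical, and the expected port set is taken from this article.

```python
# Ports this article's setup should be listening on (assumption from the text).
EXPECTED_PORTS = {9200, 9300, 5044, 9600, 9100, 5601}


def listening_ports(netstat_output: str) -> dict:
    """Map listening TCP port -> program name from `netstat -ntlup` text."""
    ports = {}
    for line in netstat_output.splitlines():
        fields = line.split()
        # Listening TCP rows look like: tcp6 0 0 :::9200 :::* LISTEN 48329/java
        if len(fields) < 7 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
            continue
        port = int(fields[3].rsplit(":", 1)[1])
        pid_program = fields[6]
        # "48329/java" -> "java"; keep "-" as-is when netstat hides the PID.
        ports[port] = pid_program.partition("/")[2] or pid_program
    return ports


def missing_ports(netstat_output: str) -> set:
    """Expected ports that are not listening."""
    return EXPECTED_PORTS - set(listening_ports(netstat_output))
```

Pass it the text printed by "netstat -ntlup". An empty set from missing_ports means every component is listening.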

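Test the setup by querying Elasticsearch. A response like the following, with log documents in the hits, shows that Logstash has started normally and log data is arriving.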

[root@localhost elasticsearch]# curl http://172.16.41.32:9200/_search?pretty

{

"took" : 2771,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1116,

"max_score" : 1.0,

"hits" : [

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "IVZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.518Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "Successfully initialized wpa_supplicant",

"offset" : 40,

"source" : "/var/log/wpa_supplicant.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "KFZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.518Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "Successfully initialized wpa_supplicant",

"offset" : 80,

"source" : "/var/log/wpa_supplicant.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "K1ZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.519Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "Nov 15 11:14:47 Updated: systemd-sysv-219-57.el7_5.3.x86_64",

"offset" : 412,

"source" : "/var/log/yum.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "L1ZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.535Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "[\u001B[32m OK \u001B[0m] Reached target Paths.",

"offset" : 95,

"source" : "/var/log/boot.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "MVZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.535Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "X.Org X Server 1.17.2",

"offset" : 36,

"source" : "/var/log/Xorg.1.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "OVZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.567Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "Release Date: 2015-06-16",

"offset" : 61,

"source" : "/var/log/Xorg.1.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "QVZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.568Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "[ 23.508] Current version of pixman: 0.32.6",

"offset" : 658,

"source" : "/var/log/Xorg.0.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "TVZAVGcB6PZDlVvdZ8IE",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.568Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "[ 23.597] (==) No Layout section. Using the first Screen section.",

"offset" : 1266,

"source" : "/var/log/Xorg.0.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "V1ZAVGcB6PZDlVvdbsKB",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.568Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "[\u001B[32m OK \u001B[0m] Reached target Initrd Default Target.",

"offset" : 881,

"source" : "/var/log/boot.log",

"type" : "log"

}

},

{

"_index" : "filebeat-2018.11.27",

"_type" : "log",

"_id" : "XVZAVGcB6PZDlVvdbsKB",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2018-11-27T08:19:13.568Z",

"beat" : {

"hostname" : "localhost.localdomain",

"name" : "localhost.localdomain",

"version" : "5.4.1"

},

"input_type" : "log",

"message" : "[\u001B[32m OK \u001B[0m] Stopped Cleaning Up and Shutting Down Daemons.",

"offset" : 1218,

"source" : "/var/log/boot.log",

"type" : "log"

}

}

]

}

}

Everything has started normally.
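The search response above is long. A small Python sketch can summarize it: how many documents Elasticsearch holds and which log files the returned ones came from. hits.total is a plain integer in Elasticsearch 6.x and counts all matching documents (1116 here). _search returns only 10 hits unless a size parameter is given. The helper name is hypothetical.

```python
import json
from collections import Counter


def summarize_search(body: str):
    """Return (total matching documents, Counter of returned hits per log file)."""
    result = json.loads(body)
    total = result["hits"]["total"]  # a plain integer in Elasticsearch 6.x
    per_source = Counter(
        hit["_source"].get("source", "unknown") for hit in result["hits"]["hits"])
    return total, per_source
```

Applied to the response above, total is 1116. The counter shows the 10 returned hits spread over files such as /var/log/boot.log and /var/log/Xorg.0.log.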

3. Configure Kibana

[root@localhost data]# cd kibana/config

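Edit the configuration file: uncomment the following settings (remove the leading "#") and change their values accordingly.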

[root@localhost config]# vim kibana.yml

[root@localhost config]# egrep -v "^#|^$" kibana.yml

server.port: 5601

server.host: "172.16.41.32"

elasticsearch.url: "http://172.16.41.32:9200"

Open the port:

[root@localhost config]# firewall-cmd --add-port=5601/tcp --permanent

success

[root@localhost config]# firewall-cmd --reload

success

Start Kibana in the background:

[root@localhost kibana]# bin/kibana &

Check the listening ports:

[root@localhost kibana]# netstat -ntulp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 172.16.41.32:9100 0.0.0.0:* LISTEN 47943/grunt

tcp 0 0 192.168.122.1:53 0.0.0.0:* LISTEN 2854/dnsmasq

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1566/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 1571/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2070/master

tcp 0 0 172.16.41.32:5601 0.0.0.0:* LISTEN 73103/bin/../node/b

tcp6 0 0 :::9200 :::* LISTEN 48329/java

tcp6 0 0 :::5044 :::* LISTEN 71717/java

tcp6 0 0 :::9300 :::* LISTEN 48329/java

tcp6 0 0 :::22 :::* LISTEN 1566/sshd

tcp6 0 0 ::1:631 :::* LISTEN 1571/cupsd

tcp6 0 0 ::1:25 :::* LISTEN 2070/master

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 71717/java

udp 0 0 192.168.122.1:53 0.0.0.0:* 2854/dnsmasq

udp 0 0 0.0.0.0:67 0.0.0.0:* 2854/dnsmasq

udp 0 0 0.0.0.0:53381 0.0.0.0:* 939/avahi-daemon: r

udp 0 0 0.0.0.0:5353 0.0.0.0:* 939/avahi-daemon: r

udp 0 0 127.0.0.1:323 0.0.0.0:* 960/chronyd

udp6 0 0 ::1:323 :::* 960/chronyd

If Kibana's console output ends with lines like the following, it has started normally.

log [09:43:22.040] [info][status][plugin:elasticsearch@6.5.1] Status changed from red to green - Ready

log [09:43:29.129] [warning][reporting] Enabling the Chromium sandbox provides an additional layer of protection.

log [09:43:29.287] [info][migrations] Creating index .kibana_1.

log [09:43:31.804] [info][migrations] Pointing alias .kibana to .kibana_1.

log [09:43:33.246] [info][migrations] Finished in 3959ms.

log [09:43:33.251] [info][listening] Server running at http://172.16.41.32:5601

log [09:43:36.724] [info][status][plugin:spaces@6.5.1] Status changed from red to green - Ready
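If Kibana's output is redirected to a file (for example by starting it with nohup, as was done for elasticsearch-head), this startup check can be scripted. The script finds the "Server running at" line and the last reported status of each plugin. Below is a minimal Python sketch that assumes the Kibana 6.5 log format shown above. kibana_startup and all_green are hypothetical helpers.

```python
import re

_STATUS = re.compile(r"\[plugin:([^\]]+)\] Status changed from (\w+) to (\w+)")
_LISTEN = re.compile(r"Server running at (\S+)")


def kibana_startup(log_lines):
    """Return (listen_url, {plugin: latest_status}) from Kibana 6.x log lines."""
    url, statuses = None, {}
    for line in log_lines:
        listen = _LISTEN.search(line)
        if listen:
            url = listen.group(1)
        status = _STATUS.search(line)
        if status:
            # Later lines overwrite earlier ones, so this keeps the latest state.
            statuses[status.group(1)] = status.group(3)
    return url, statuses


def all_green(statuses: dict) -> bool:
    """True when at least one plugin reported and every plugin is green."""
    return bool(statuses) and all(s == "green" for s in statuses.values())
```

For the log lines above, kibana_startup returns the URL http://172.16.41.32:5601, and all_green is True.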

2.2 汉化

默认情况下kibana是英文版,所以我们要进行汉化。

下载汉化包

[root@localhost data]# git clone https://github.com/anbai-inc/Kibana_Hanization.git

[root@localhost data]# cd Kibana_Hanization/

进行翻译

[root@localhost Kibana_Hanization]# python main.py ../kibana/

等待翻译完成。

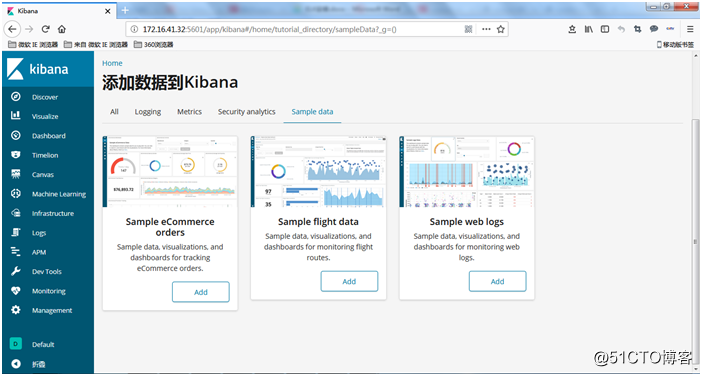

2.3 打开网页进行浏览测试


Original article: http://blog.51cto.com/3001441/2323259