Tags: efault no split code and spec you hdf ble

1. Why make the Spark runtime jars accessible from the YARN side

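Since Spark 2, the single large JAR that used to sit in the lib directory has been split into many smaller JARs, and the old spark-assembly-*.jar no longer exists.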

Each time we submit an application without specifying spark.yarn.archive or spark.yarn.jars, Spark packs all the jars in the jars directory of its installation path into an archive and uploads it to the distributed cache. The official documentation puts it this way: "To make Spark runtime jars accessible from YARN side, you can specify spark.yarn.archive or spark.yarn.jars. For details please refer to Spark Properties. If neither spark.yarn.archive nor spark.yarn.jars is specified, Spark will create a zip file with all jars under $SPARK_HOME/jars and upload it to the distributed cache."
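The documented rule can be restated as a small decision function. This is a hypothetical sketch, not Spark's own code: only the two property names are real Spark settings, and the `Source` types and the `/opt/spark` path are illustrative. The precedence of `spark.yarn.archive` over `spark.yarn.jars` follows the Spark documentation, which says the archive, if set, replaces `spark.yarn.jars`.

```scala
// Hypothetical sketch of how a YARN client picks where the Spark runtime jars come from.
// Only "spark.yarn.archive" and "spark.yarn.jars" are real Spark property names.
object RuntimeJarSource {
  sealed trait Source
  final case class Archive(uri: String) extends Source
  final case class Jars(uris: List[String]) extends Source
  final case class ZipOfLocalJars(dir: String) extends Source

  def resolve(conf: Map[String, String], sparkHome: String): Source =
    conf.get("spark.yarn.archive") match {
      // An archive, if set, takes precedence over spark.yarn.jars
      case Some(archive) => Archive(archive)
      case None =>
        conf.get("spark.yarn.jars") match {
          // Comma-separated list; entries may contain globs such as *.jar
          case Some(jars) => Jars(jars.split(",").map(_.trim).filter(_.nonEmpty).toList)
          // Neither is set: zip everything under $SPARK_HOME/jars and upload it on every submit
          case None => ZipOfLocalJars(s"$sparkHome/jars")
        }
    }

  def main(args: Array[String]): Unit = {
    println(resolve(Map.empty, "/opt/spark"))
    println(resolve(Map("spark.yarn.jars" -> "hdfs:///spark/jars/*.jar"), "/opt/spark"))
  }
}
```

The fallback branch is the expensive one: it runs on every submission, which is what the rest of this article measures.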

As an example, I wrote a simple wordcount.scala, packaged it, and deployed it to run on the Spark cluster:

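```scala
import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    // Expect two arguments: the application name and the input file path
    if (args.length != 2) {
      println("Usage: WordCount <AppName> <FilePath>")
      sys.exit(1)
    }
    val conf = new SparkConf()
      // .setMaster("local[4]")  // uncomment for local testing
      .setAppName(args(0))
    val sc = new SparkContext(conf)
    val lines = sc.textFile(args(1))
    // Count each word and print the results on the driver
    lines.flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _)
      .collect()
      .foreach(println)
    sc.stop()
  }
}
```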
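To check what the job computes without a cluster, the same pipeline can be mirrored on plain Scala collections. This is an illustration only, not Spark code: `WordCountLocal` is a hypothetical name, and `groupBy` followed by a sum stands in for what `reduceByKey(_ + _)` does on an RDD.

```scala
// Illustration only: the WordCount pipeline mirrored on plain Scala collections (no Spark needed).
object WordCountLocal {
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))  // same tokenization as the Spark job
      .map((_, 1))            // pair every word with a count of 1
      .groupBy(_._1)          // gather the pairs by word
      .map { case (word, pairs) => word -> pairs.map(_._2).sum } // add up the 1s per word

  def main(args: Array[String]): Unit =
    countWords(Seq("hello spark", "hello yarn")).foreach(println)
}
```

On a cluster the grouping and summing happen per partition and are then merged across the shuffle, but the per-word totals are the same.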

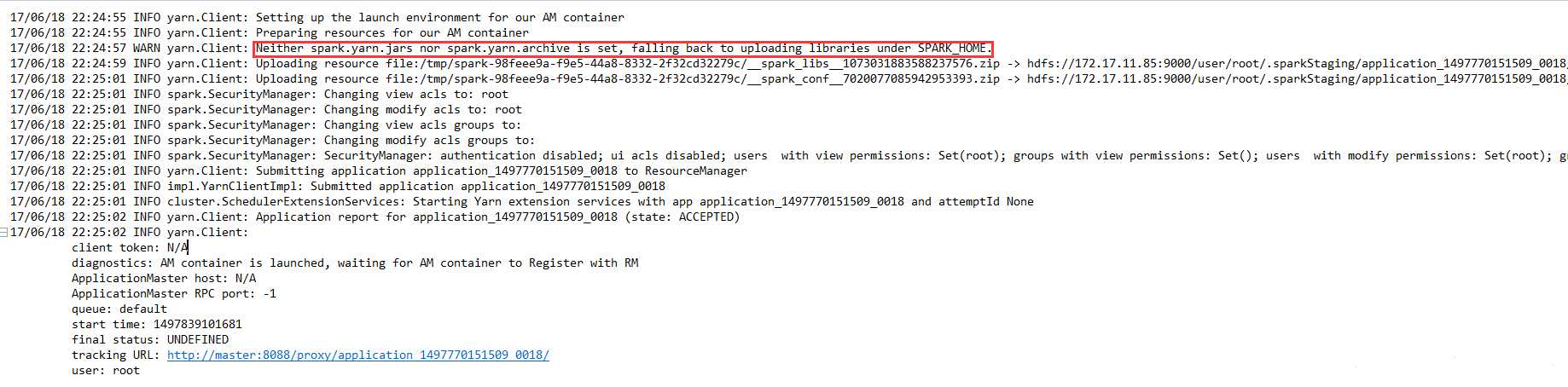

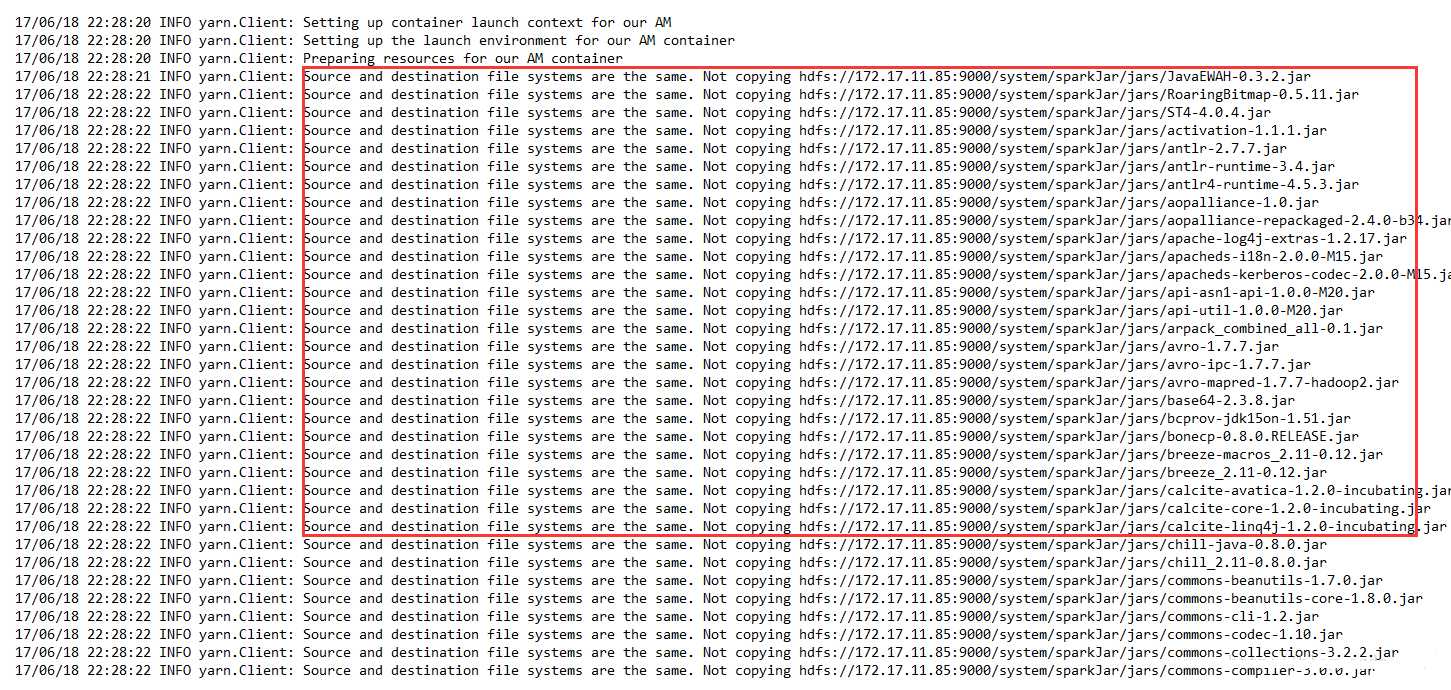

Look at the log:

yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Roughly, this says that neither spark.yarn.jars nor spark.yarn.archive is set, so Spark falls back to uploading the runtime jars from the Spark installation directory.

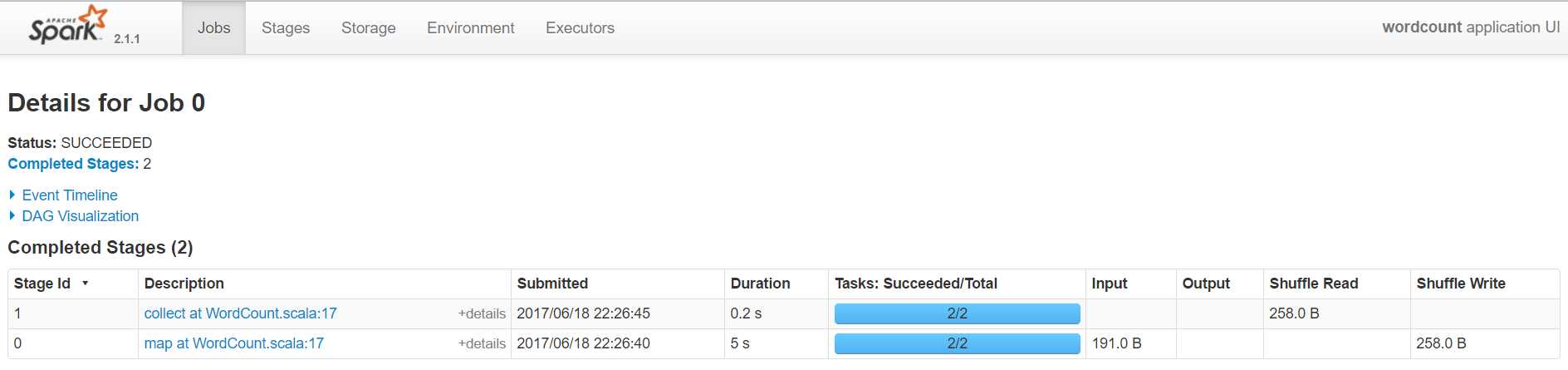

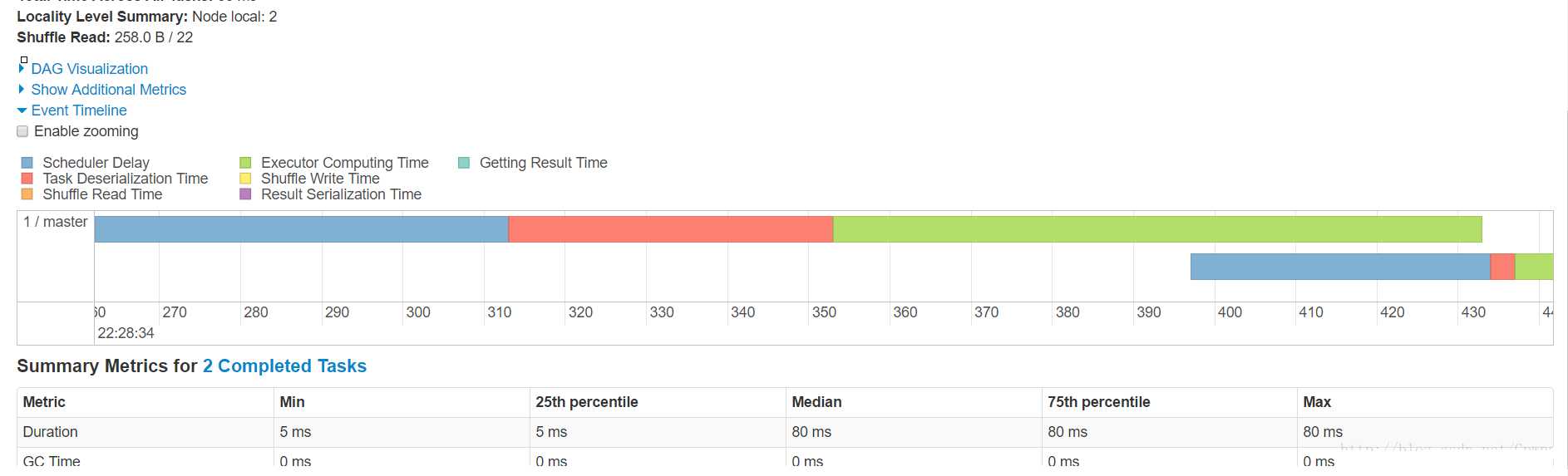

Now look at the Spark UI, taking the collect job as an example.

Click into it and look at its Scheduler Delay.

Here the Scheduler Delay is 557 - 457 = 100 ms.
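Scheduler Delay is not measured directly. As far as I can tell from the Spark UI source, for a finished task it is the task's wall-clock duration minus the time the executor reports for running the task, deserializing it, and serializing the result, and minus the time spent getting the result; whatever is left over is attributed to scheduling and shipping the task. The sketch below restates that under those assumptions; `TaskTimes` and its field names are hypothetical, and the exact inputs may differ between Spark versions.

```scala
// Hedged sketch of how the Spark UI derives a finished task's "Scheduler Delay".
// Modeled on a reading of Spark's UI code; TaskTimes and its fields are hypothetical names.
object SchedulerDelay {
  final case class TaskTimes(
      durationMs: Long,          // finish time minus launch time
      executorRunTimeMs: Long,   // time spent running the task body
      deserializeTimeMs: Long,   // executor deserialize time
      serializeTimeMs: Long,     // result serialization time
      gettingResultTimeMs: Long) // time spent fetching the result

  // The part of the wall-clock duration not covered by executor-side metrics,
  // clamped at zero.
  def schedulerDelay(t: TaskTimes): Long =
    math.max(0L, t.durationMs - t.executorRunTimeMs - t.deserializeTimeMs -
      t.serializeTimeMs - t.gettingResultTimeMs)

  def main(args: Array[String]): Unit =
    println(schedulerDelay(TaskTimes(100, 60, 10, 5, 0)))
}
```

Because it is a remainder, anything that slows getting the task onto the executor shows up here rather than in the executor metrics.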

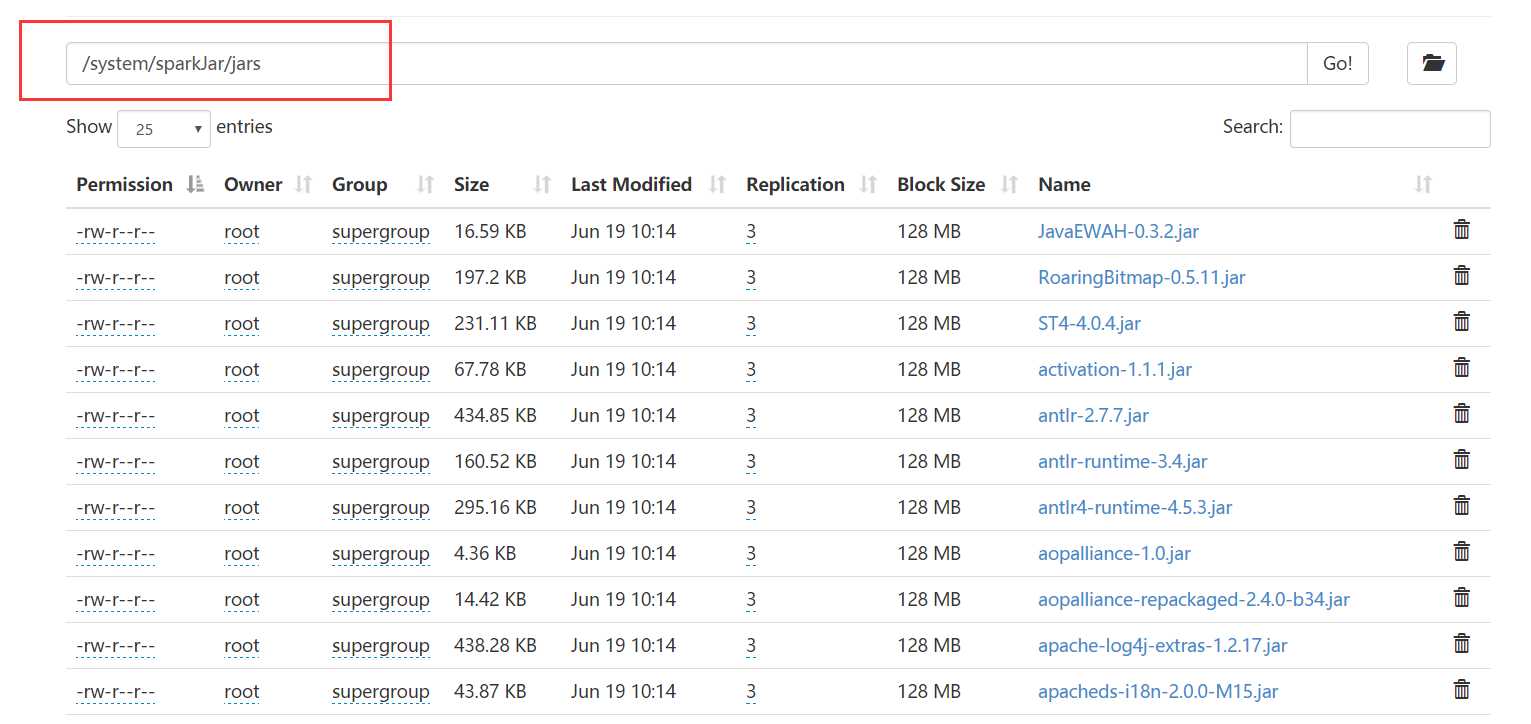

Next, upload the jars under $SPARK_HOME/jars to a directory on HDFS, here /system/sparkJar/jars:

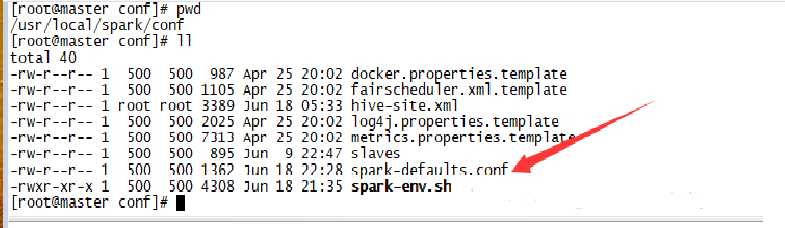

Then append this line to the end of spark-defaults.conf:

spark.yarn.jars hdfs://172.17.11.85:9000/system/sparkJar/jars/*.jar
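As far as I know, Spark loads spark-defaults.conf as a Java properties file, so a key and its value can be separated by whitespace as in the line above. The sketch below reads that line with `java.util.Properties` and splits the value into entries, since `spark.yarn.jars` takes a comma-separated list in which globs such as `*.jar` are allowed. `SparkDefaultsLine` and `yarnJars` are hypothetical names; the HDFS URI is the one from this article.

```scala
import java.io.StringReader
import java.util.Properties

// Sketch: read the spark.yarn.jars entry from spark-defaults.conf-style text.
object SparkDefaultsLine {
  val line = "spark.yarn.jars hdfs://172.17.11.85:9000/system/sparkJar/jars/*.jar"

  def yarnJars(confText: String): List[String] = {
    val props = new Properties()
    props.load(new StringReader(confText)) // whitespace separates key from value
    Option(props.getProperty("spark.yarn.jars")) match {
      // The value is a comma-separated list; each entry may be a glob
      case Some(v) => v.split(",").map(_.trim).filter(_.nonEmpty).toList
      case None    => Nil
    }
  }

  def main(args: Array[String]): Unit = yarnJars(line).foreach(println)
}
```

Using a glob keeps the configuration to one line, so it does not need to change when individual jar versions in the directory change.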

Running the job again, you can see that Spark no longer uploads the runtime jars to the distributed cache.

The same page as before:

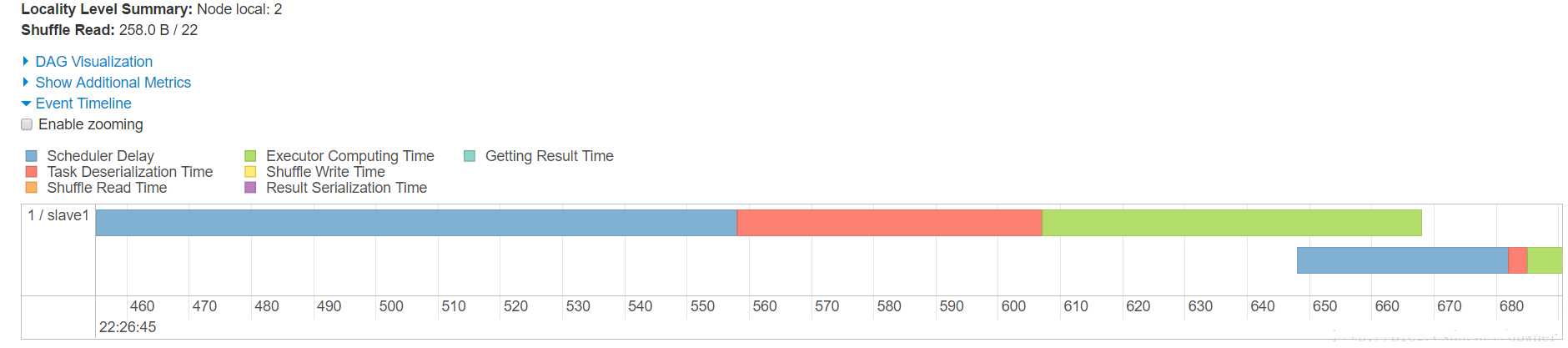

Again, look at the Scheduler Delay.

This time Scheduler Delay = 313 - 263 = 50 ms, half of the 100 ms measured before.


Original article: https://www.cnblogs.com/itboys/p/10041541.html