Tags: put work exce eth hive led object word ems

[hadoop@localhost mapreduce]$ hadoop jar hadoop-mapreduce-examples-2.7.3.jar wordcount /home/hadoop/data/input/sp.txt /home/hadoop/data/output/sp_20181225

18/12/26 01:56:56 INFO client.RMProxy: Connecting to ResourceManager at hp001/192.168.66.81:8032

18/12/26 01:56:58 INFO input.FileInputFormat: Total input paths to process : 1

18/12/26 01:56:58 WARN hdfs.DFSClient: Caught exception

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at java.lang.Thread.join(Thread.java:1252)

at java.lang.Thread.join(Thread.java:1326)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:609)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:370)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:546)

18/12/26 01:56:58 WARN hdfs.DFSClient: Caught exception

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at java.lang.Thread.join(Thread.java:1252)

at java.lang.Thread.join(Thread.java:1326)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:609)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:370)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:546)

18/12/26 01:56:58 INFO mapreduce.JobSubmitter: number of splits:1

18/12/26 01:56:59 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1545759347429_0002

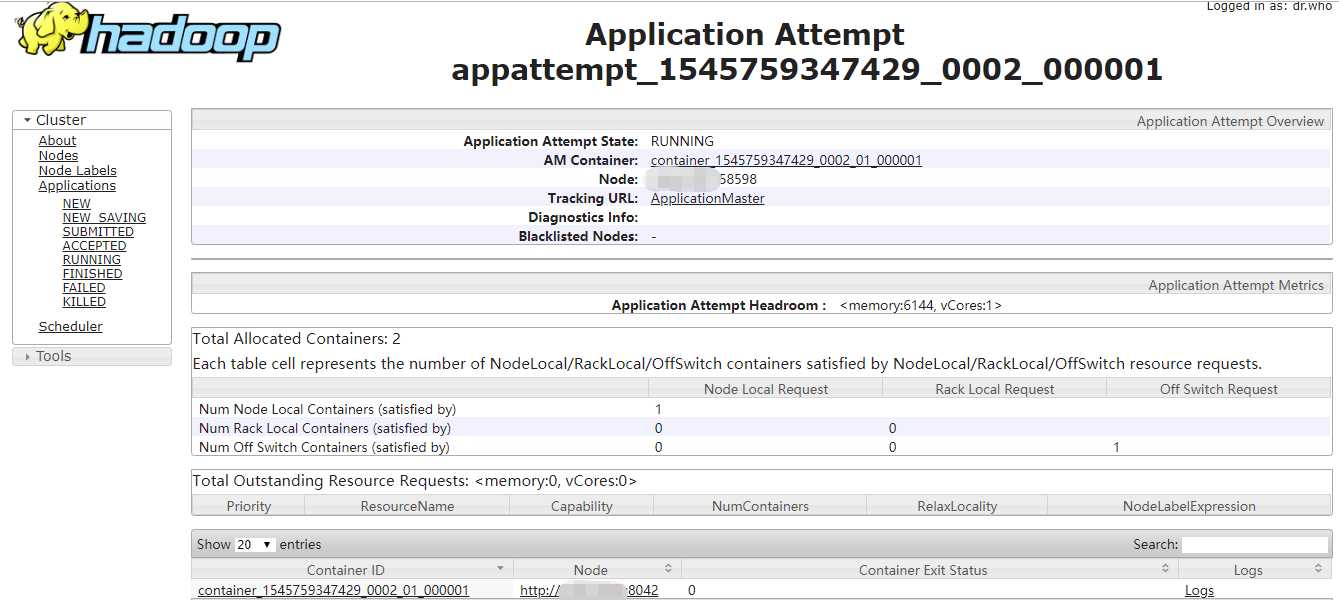

18/12/26 01:57:01 INFO impl.YarnClientImpl: Submitted application application_1545759347429_0002

18/12/26 01:57:01 INFO mapreduce.Job: The url to track the job: http://hp001:8088/proxy/application_1545759347429_0002/

18/12/26 01:57:01 INFO mapreduce.Job: Running job: job_1545759347429_0002

18/12/26 01:57:30 INFO mapreduce.Job: Job job_1545759347429_0002 running in uber mode : false

18/12/26 01:57:30 INFO mapreduce.Job: map 0% reduce 0%

18/12/26 01:57:51 INFO mapreduce.Job: map 100% reduce 0%

18/12/26 01:58:11 INFO mapreduce.Job: map 100% reduce 100%

18/12/26 01:58:13 INFO mapreduce.Job: Job job_1545759347429_0002 completed successfully

18/12/26 01:58:16 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=54

FILE: Number of bytes written=237763

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=141

HDFS: Number of bytes written=32

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=20696

Total time spent by all reduces in occupied slots (ms)=13782

Total time spent by all map tasks (ms)=20696

Total time spent by all reduce tasks (ms)=13782

Total vcore-milliseconds taken by all map tasks=20696

Total vcore-milliseconds taken by all reduce tasks=13782

Total megabyte-milliseconds taken by all map tasks=21192704

Total megabyte-milliseconds taken by all reduce tasks=14112768

Map-Reduce Framework

Map input records=4

Map output records=4

Map output bytes=40

Map output materialized bytes=54

Input split bytes=117

Combine input records=4

Combine output records=4

Reduce input groups=4

Reduce shuffle bytes=54

Reduce input records=4

Reduce output records=4

Spilled Records=8

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=215

CPU time spent (ms)=1570

Physical memory (bytes) snapshot=287817728

Virtual memory (bytes) snapshot=4129759232

Total committed heap usage (bytes)=142573568

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=24

File Output Format Counters

Bytes Written=32
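The job counters above are consistent with each other in a way that shows the container size YARN used. The check below assumes that "megabyte-milliseconds" equals task milliseconds multiplied by the container memory in MB. Under that assumption, both the map and the reduce containers come out at 1024 MB, which matches the usual default for `mapreduce.map.memory.mb` and `mapreduce.reduce.memory.mb`. The class name and helper are hypothetical and exist only for illustration.

```java
// Sketch: recover the per-container memory (MB) implied by the job counters above.
// Assumption: megabyte-milliseconds = task milliseconds * container memory in MB.
class ContainerMemoryCheck {

    // Container memory in MB implied by a (megabyte-ms, ms) counter pair.
    static long impliedMemoryMb(long megabyteMillis, long taskMillis) {
        if (taskMillis <= 0) {
            throw new IllegalArgumentException("taskMillis must be positive");
        }
        if (megabyteMillis % taskMillis != 0) {
            throw new IllegalArgumentException("counters are not an exact multiple");
        }
        return megabyteMillis / taskMillis;
    }

    public static void main(String[] args) {
        // Values copied from the "Job Counters" section of the log.
        long mapMillis = 20696L, mapMbMillis = 21192704L;
        long reduceMillis = 13782L, reduceMbMillis = 14112768L;

        System.out.println("map container MB:    " + impliedMemoryMb(mapMbMillis, mapMillis));
        System.out.println("reduce container MB: " + impliedMemoryMb(reduceMbMillis, reduceMillis));
    }
}
```

Both lines print 1024, because 20696 × 1024 = 21192704 and 13782 × 1024 = 14112768.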

[hadoop@localhost mapreduce]$ cd

[hadoop@localhost ~]$ hadoop fs -ls /home/hadoop/data/output

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2018-12-26 01:58 /home/hadoop/data/output/sp_20181225

drwxr-xr-x - hadoop supergroup 0 2018-12-17 18:36 /home/hadoop/data/output/wordcount

[hadoop@localhost ~]$ hadoop fs -ls /home/hadoop/data/output/sp_20181225

Found 2 items

-rw-r--r-- 1 hadoop supergroup 0 2018-12-26 01:58 /home/hadoop/data/output/sp_20181225/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 32 2018-12-26 01:58 /home/hadoop/data/output/sp_20181225/part-r-00000
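The output directory holds an empty `_SUCCESS` marker plus one `part-r-NNNNN` file per reduce task. Since the job launched a single reduce task, only `part-r-00000` exists. The sketch below mimics the default hash-partitioning arithmetic, `(hashCode & Integer.MAX_VALUE) % numReduceTasks`, together with the five-digit part-file naming. It uses `String.hashCode` as a stand-in for Hadoop's `Text.hashCode`, which is an assumption because the two hash differently. With one reducer that difference does not matter, since any value modulo 1 is 0. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Sketch of how reduce output files are named and which keys land in each.
// Assumption: String.hashCode stands in for Hadoop's Text.hashCode.
class PartFileSketch {

    // Same arithmetic as the default hash partitioner: clear the sign bit, then take the modulo.
    static int partitionFor(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Reduce outputs are named part-r-NNNNN, with a zero-padded five-digit partition number.
    static String partFileName(int partition) {
        return String.format("part-r-%05d", partition);
    }

    // Group keys by the part file they would be written to.
    static Map<String, List<String>> assign(List<String> keys, int numReduceTasks) {
        Map<String, List<String>> files = new TreeMap<>();
        for (String key : keys) {
            String file = partFileName(partitionFor(key, numReduceTasks));
            files.computeIfAbsent(file, f -> new ArrayList<>()).add(key);
        }
        return files;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList("hadoop", "hive", "mllib", "spark");
        // One reduce task, as in this job: every key goes to part-r-00000.
        System.out.println(assign(keys, 1));
    }
}
```

This prints `{part-r-00000=[hadoop, hive, mllib, spark]}`. With more reduce tasks, the same keys would be spread across `part-r-00000`, `part-r-00001`, and so on.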

[hadoop@localhost ~]$ hadoop fs -cat /home/hadoop/data/output/sp_20181225/part-r-00000

hadoop 1

hive 1

mllib 1

spark 1
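The stock WordCount example follows a simple pattern. It splits each input line into whitespace-separated tokens, emits `(word, 1)` for each token, sums the ones in the combiner and reducer, and writes the sorted keys as `word<TAB>count`. The following plain-Java, in-memory sketch reproduces that flow without any Hadoop dependency. The original `sp.txt` is not shown, so the input lines are hypothetical: one word per line. That layout fits the counters above (4 map input records, 4 map output records, 4 reduce groups, Bytes Read=24), but it is an assumption. The class name is also hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// In-memory sketch of the WordCount flow (no Hadoop): tokenize, emit (word, 1),
// sum per word, and produce sorted "word<TAB>count" lines like part-r-00000.
class WordCountSketch {

    static List<String> wordCount(List<String> lines) {
        // TreeMap keeps keys sorted, mirroring the sort that happens before the reduce phase.
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {                      // one map input record per line
            StringTokenizer tokens = new StringTokenizer(line);
            while (tokens.hasMoreTokens()) {             // one map output record per token
                counts.merge(tokens.nextToken(), 1, Integer::sum);
            }
        }
        List<String> output = new ArrayList<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            // TextOutputFormat separates key and value with a tab by default.
            output.add(e.getKey() + "\t" + e.getValue());
        }
        return output;
    }

    public static void main(String[] args) {
        // Hypothetical input: one word per line (the real sp.txt is not shown).
        List<String> lines = Arrays.asList("spark", "hadoop", "hive", "mllib");
        wordCount(lines).forEach(System.out::println);
    }
}
```

For ASCII keys like these, `String` ordering agrees with the byte-wise ordering Hadoop applies to `Text` keys. As a result, the printed lines appear in the same order as the `hadoop fs -cat` output above.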


Original article: https://www.cnblogs.com/RHadoop-Hive/p/10175625.html