Installing Redis and an overview of its data types (string, list, set, sorted set, hash)

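I: Introduction:

The largest Redis deployment in China is Sina Weibo.

memcache: a key-value store

Data types: plain key-value pairs only

Expiration: supported

Persistence: not supported (possible only through third-party programs)

Master-slave replication: not supported

Virtual memory: not supported

Use cases: storing sessions and other simple, single-valued data; it is more lightweight and more efficient for that job

redis: a key-value store

Data types: five (string, list, set, sorted set, hash)

Expiration: supported

Persistence: supported

Master-slave replication: supported

Virtual memory: not supported

Use cases: with five data types it can hold structured data, for example the items in a shopping cart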

II: Compiling and installing Redis 3.0.7

Build and install on the local machine:

tar xvf redis-3.0.7.tar.gz

cd redis-3.0.7

make PREFIX=/usr/local/redis install

Create the init script:

cp utils/redis_init_script /etc/init.d/redisd

chmod a+x /etc/init.d/redisd

[root@node5 redis-3.0.7]# vim /etc/init.d/redisd

#!/bin/sh
#
# Simple Redis init.d script conceived to work on Linux systems
# as it does use of the /proc filesystem.

REDISPORT=6379
EXEC=/usr/local/redis/bin/redis-server
CLIEXEC=/usr/local/redis/bin/redis-cli

PIDFILE=/var/run/redis_${REDISPORT}.pid
CONF="/etc/redis/${REDISPORT}.conf"

case "$1" in
    start)
        if [ -f $PIDFILE ]
        then
            echo "$PIDFILE exists, process is already running or crashed"
        else
            echo "Starting Redis server..."
            $EXEC $CONF
        fi
        ;;
    stop)
        if [ ! -f $PIDFILE ]
        then
            echo "$PIDFILE does not exist, process is not running"
        else
            PID=$(cat $PIDFILE)
            echo "Stopping ..."
            $CLIEXEC -p $REDISPORT shutdown
            while [ -x /proc/${PID} ]
            do
                echo "Waiting for Redis to shutdown ..."
                sleep 1
            done
            echo "Redis stopped"
        fi
        ;;
    *)
        echo "Please use start or stop as first argument"
        ;;
esac

Configuration file:

[root@node5 redis-3.0.7]# mkdir /etc/redis

[root@node5 redis-3.0.7]# cp redis.conf /etc/redis/6379.conf # the init script reads /etc/redis/6379.conf

Start it as a test:

[root@node5 redis-3.0.7]# /etc/init.d/redisd start

Starting Redis server...

65171:M 21 Mar 07:14:15.712 * Increased maximum number of open files to 10032 (it was originally set to 1024).

[Redis ASCII-art startup banner: Redis 3.0.7 (00000000/0) 64 bit, running in standalone mode, Port: 6379, PID: 65171, http://redis.io]

This starts Redis in the foreground. To run it in the background, edit the configuration file:

change daemonize no to daemonize yes

and then restart the service.

Batch installation through salt-master:

1. Edit the redis.sls file under /etc/salt/states/init:

redis-install:
  file.managed:                    # file module, managed function
    - name: /usr/local/src/redis-3.0.7.tar.gz        # destination path on the minion
    - source: salt://init/files/redis-3.0.7.tar.gz   # path on the master
    - user: root
    - group: root
    - mode: 755
  cmd.run:                         # run a remote command with the cmd module's run function
    # build and install
    - name: cd /usr/local/src/ && tar xvf redis-3.0.7.tar.gz && cd redis-3.0.7 && make PREFIX=/usr/local/redis install
    - unless: test -d /usr/local/redis   # skip the build if the directory already exists
    - require:                           # dependencies
      - file: redis-install              # the tarball must be delivered before compiling

redis-config:
  file.managed:
    - name: /etc/redis/6379.conf            # configuration file path on the minion
    - source: salt://init/files/6379.conf   # configuration file path on the master
    - user: root
    - group: root
    - mode: 644

redis-service:
  file.managed:
    - name: /etc/init.d/redis
    - source: salt://init/files/redis
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: chkconfig --add redis && chkconfig redis on   # enable the service at boot
    - unless: chkconfig --list | grep redis               # skip if it is already in the chkconfig list
  service.running:                 # start the service
    - name: redis
    - enable: True
    - watch:                       # file to watch for changes
      - file: redis-config
    - require:                     # starting the service depends on redis-install and redis-service
      - cmd: redis-install
      - cmd: redis-service

2. Prepare the files:

[root@node5 init]# ls files/redis # init script

files/redis

[root@node5 init]# ls files/6379.conf # configuration file

files/6379.conf

[root@node5 init]# ls files/redis-3.0.7.tar.gz # source tarball

files/redis-3.0.7.tar.gz

3. Apply the state:

[root@node5 init]# salt "node6.a.com" state.sls init.redis

4. Verify on the minion:

[root@node6 ~]# /etc/init.d/redis start

Starting Redis server...

[root@node6 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 :::6379 :::*

LISTEN 0 128 :::111 :::*

LISTEN 0 128 *:111 *:*

LISTEN 0 128 :::41586 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 127.0.0.1:631 *:*

LISTEN 0 128 ::1:631 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:46715 *:*

LISTEN 0 128 :::10050 :::*

LISTEN 0 128 *:10050 *:*

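If the salt-master command reports an error, troubleshoot from the red error messages it returns.

III: Redis data types

3.1 Strings

SET creates a key; every value set this way is stored as a string: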

192.168.10.205:6379> set key1 value1

OK

192.168.10.205:6379> set key3 value3

OK

192.168.10.205:6379> set key2 value2

OK

GET returns the value of a key:

192.168.10.205:6379> get key1

"value1"

192.168.10.205:6379> get key3 # fetch the value of the given key

"value3"

GET accepts only one key per call (use MGET to fetch several):

192.168.10.205:6379> get key1 key2

(error) ERR wrong number of arguments for 'get' command

KEYS * lists all keys:

192.168.10.205:6379> KEYS *

1) "ss"

2) "key2"

3) "list1"

4) "key1"

5) "key4"

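EXISTS checks whether a key exists:

192.168.10.205:6379> EXISTS key1

(integer) 1

192.168.10.205:6379> EXISTS key9

(integer) 0

1 means the key exists, 0 means it does not.

DEL deletes the given keys:

192.168.10.205:6379> del key1 key2 key9

(integer) 2

The return value is the number of keys actually deleted; 0 means none of the given keys existed. Keys that do not exist are ignored without an error.

TYPE returns the data type of a key (for a key created with SET it returns string):

[root@node5 init]# redis-cli -h 192.168.10.205 -p 6379

192.168.10.205:6379> set key1 value1 # set the key

OK

192.168.10.205:6379> get key1 # get the value of the key

"value1"

INFO: shows the current status of the server

SELECT: switches database. Redis provides 16 databases by default and starts in database 0; use SELECT to switch to another one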

192.168.10.205:6379> SELECT 1

OK

192.168.10.205:6379[1]> set key1 value1

OK

192.168.10.205:6379[1]> KEYS *

1) "key1"

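FLUSHALL empties every database on the server (FLUSHDB empties only the currently selected one):

192.168.10.205:6379[1]> FLUSHALL

APPEND appends a string to the existing value and returns the new length: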

127.0.0.1:6379> get key1

"value1"

127.0.0.1:6379> APPEND key1 cc

(integer) 8

127.0.0.1:6379> get key1

"value1cc"

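INCR increments an integer value by 1. A missing key is treated as 0, so the first INCR creates it with the value 1:

127.0.0.1:6379> INCR num # the key does not exist, so it is created with the value 1

(integer) 1

127.0.0.1:6379> get num

"1"

127.0.0.1:6379> INCR num # each further call adds 1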

(integer) 2

127.0.0.1:6379> get num

"2"

127.0.0.1:6379> get key1

"value1cc"

127.0.0.1:6379> INCR key1 # fails because the existing value is not an integer

(error) ERR value is not an integer or out of range
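The INCR behavior shown above can be sketched in a few lines of Python. This is a toy in-memory model, not a Redis client; the `StringStore` class and its method names are made up for illustration.

```python
class StringStore:
    """Toy model of Redis string counters (hypothetical, not a Redis client)."""

    def __init__(self):
        self.data = {}

    def set(self, key, value):
        # Every value is kept as a string, as SET does.
        self.data[key] = str(value)

    def incrby(self, key, amount=1):
        # A missing key is treated as "0" before the increment is applied.
        current = self.data.get(key, "0")
        try:
            number = int(current)
        except ValueError:
            raise ValueError("ERR value is not an integer or out of range") from None
        number += amount
        self.data[key] = str(number)
        return number


store = StringStore()
print(store.incrby("num"))       # the key is created by the first call
print(store.incrby("num"))       # later calls keep counting
store.set("key1", "value1cc")
try:
    store.incrby("key1")         # the stored value is not an integer
except ValueError as exc:
    print(exc)
```

DECR, INCRBY and DECRBY fit the same model by passing a negative or larger `amount`.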

DECR decrements the value by 1:

127.0.0.1:6379> get num

"5"

127.0.0.1:6379> DECR num

(integer) 4

127.0.0.1:6379> get num

"4"

127.0.0.1:6379> DECR num

(integer) 3

127.0.0.1:6379> get num

"3"

INCRBY increments the value by the given integer:

127.0.0.1:6379> get num

"13"

127.0.0.1:6379> INCRBY num 7

(integer) 20

127.0.0.1:6379> get num

"20"

127.0.0.1:6379> INCRBY num 10

(integer) 30

127.0.0.1:6379> get num

"30"

DECRBY decrements the value by the given integer:

127.0.0.1:6379> get num

"41"

127.0.0.1:6379> DECRBY num 10

(integer) 31

127.0.0.1:6379> get num

"31"

127.0.0.1:6379> DECRBY num 10

(integer) 21

127.0.0.1:6379> get num

"21"

INCRBYFLOAT increments the value by the given floating-point number:

127.0.0.1:6379> INCRBYFLOAT num1 0.1

"0.1"

127.0.0.1:6379> INCRBYFLOAT num1 0.1

"0.2"

127.0.0.1:6379> INCRBYFLOAT num1 0.1

"0.3"

127.0.0.1:6379> get num1

"0.3"

MSET and MGET set and fetch several keys in one call:

127.0.0.1:6379> MSET k1 v2 k2 v2 k3 v3

OK

127.0.0.1:6379> MGET k1 k2 k3

1) "v2"

2) "v2"

3) "v3"

STRLEN returns the length of the string stored at a key:

127.0.0.1:6379> STRLEN key1

(integer) 8

127.0.0.1:6379> STRLEN k1

(integer) 2

127.0.0.1:6379> get key1

"value1cc"

127.0.0.1:6379> get k1

"v2"

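3.2 Hashes:

HSET assigns a value to a field of a hash. If the hash does not exist, a new one is created before the field is set; if the field already exists, its old value is overwritten.

HGET returns the value of one field: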

127.0.0.1:6379> HSET shouji name iphone

(integer) 1

127.0.0.1:6379> HSET shouji color red

(integer) 1

127.0.0.1:6379> HSET shouji price 4888

(integer) 1

127.0.0.1:6379> HGET shouji name

"iphone"

127.0.0.1:6379> HGET shouji color

"red"

127.0.0.1:6379> HGET shouji price

"4888"

HGETALL returns every field and value of the hash:

127.0.0.1:6379> HGETALL shouji

1) "name"

2) "iphone"

3) "color"

4) "red"

5) "price"

6) "4888"

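HMSET, HMGET and HGETALL set several fields, fetch several fields, and fetch all fields:

127.0.0.1:6379> HMSET shouji name xiaoji color baise storge 16G # set several fields at once

OK

127.0.0.1:6379> HMGET shouji name storge # fetch several fields at once

1) "xiaoji"

2) "16G"

127.0.0.1:6379> HGETALL shouji # fetch all fields and values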

1) "name"

2) "xiaoji"

3) "color"

4) "baise"

5) "price"

6) "4888"

7) "name1"

8) "xiaomio"

9) "storge"

10) "16G"

HDEL deletes the given field:

127.0.0.1:6379> HDEL shouji name # delete the name field

(integer) 1

127.0.0.1:6379> HGETALL shouji # check again

1) "color"

2) "baise"

3) "price"

4) "4888"

5) "name1"

6) "xiaomio"

7) "storge"

8) "16G"
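As a rough mental model, a hash behaves like a dictionary stored under a key. The sketch below uses plain Python dicts; the helper names `hset`, `hget` and `hdel` are illustrative only. It also shows why HSET returned 1 in the session above: the return value counts newly created fields, so overwriting an existing field returns 0.

```python
def hset(store, key, field, value):
    """Set one field; return 1 if the field is new, 0 if it was overwritten."""
    fields = store.setdefault(key, {})   # create the hash on first use
    is_new = field not in fields
    fields[field] = value
    return 1 if is_new else 0


def hget(store, key, field):
    """Return the value of one field, or None if the hash or field is missing."""
    return store.get(key, {}).get(field)


def hdel(store, key, field):
    """Delete one field; return 1 if it existed, otherwise 0."""
    fields = store.get(key, {})
    if field in fields:
        del fields[field]
        return 1
    return 0


store = {}
print(hset(store, "shouji", "name", "iphone"))   # new field
print(hset(store, "shouji", "name", "xiaoji"))   # same field, overwritten
print(hget(store, "shouji", "name"))
print(hdel(store, "shouji", "name"))
print(store["shouji"])
```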

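3.3 Lists: commands are tied to a data type and are not interchangeable; list commands do not work on strings and string commands do not work on lists. The exception is SET, which overwrites a key whatever its type.

LPUSH and RPUSH:

127.0.0.1:6379> LPUSH list1 a # push onto the left end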

(integer) 1

127.0.0.1:6379> LPUSH list1 b

(integer) 2

127.0.0.1:6379> LPUSH list1 c

(integer) 3

127.0.0.1:6379> RPUSH list 1 # push onto the right end (note: this pushes the value 1 onto a key named list, not list1)

(integer) 1

127.0.0.1:6379> TYPE list1 # show the type

list

LLEN returns the length of the list:

127.0.0.1:6379> LLEN list1

(integer) 3

LPOP and RPOP pop a value from the left and right end of the list:

127.0.0.1:6379> LPOP list1

"c"

127.0.0.1:6379> RPOP list1

"a"

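LINDEX returns the element at the given index (-1 is the last element):

LRANGE returns the elements in the given index range (0 -1 means the whole list):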

127.0.0.1:6379> LINDEX list1 -1

"b"

127.0.0.1:6379> LINDEX list1 3

"a"

127.0.0.1:6379> LRANGE list1 0 -1

1) "d"

2) "c"

3) "b"

4) "a"

5) "b"
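The list commands above map closely onto a Python list. The following sketch is only a model of the semantics (inclusive ranges, negative indexes counting from the end); the function names mirror the Redis commands but are otherwise hypothetical.

```python
def lpush(items, value):
    """Insert at the left end and return the new length."""
    items.insert(0, value)
    return len(items)


def rpush(items, value):
    """Append at the right end and return the new length."""
    items.append(value)
    return len(items)


def lindex(items, index):
    """Element at index (negative counts from the end), or None if out of range."""
    try:
        return items[index]
    except IndexError:
        return None


def lrange(items, start, stop):
    """Elements from start to stop, both inclusive, as LRANGE does."""
    n = len(items)
    if start < 0:
        start = max(n + start, 0)
    if stop < 0:
        stop = n + stop
    if stop < 0:
        return []
    return items[start:stop + 1]


list1 = []
for value in ("a", "b", "c"):
    lpush(list1, value)
print(lrange(list1, 0, -1))   # newest LPUSH value comes first
print(lindex(list1, -1))      # last element
```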

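3.4 Sets: a set is unordered, a list is ordered. Ordered here means only that elements keep the positions in which they were added.

SADD creates the set if necessary and adds members to it: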

192.168.10.205:6379> SADD set1 0 99 1

(integer) 3

192.168.10.205:6379> SADD set1 a b c

(integer) 3

SMEMBERS returns all members of the set:

192.168.10.205:6379> SMEMBERS set1

1) "1"

2) "99"

3) "0"

4) "c"

5) "b"

6) "a"

SISMEMBER checks whether a value is a member of the set; it returns 1 if it is and 0 otherwise:

192.168.10.205:6379> SISMEMBER set1 a

(integer) 1

192.168.10.205:6379> SISMEMBER set1 p

(integer) 0

SDIFF returns the difference of two sets:

192.168.10.205:6379> SADD jihe1 1 2 3 a

(integer) 4

192.168.10.205:6379> SADD jihe2 1 2 3 b

(integer) 4

192.168.10.205:6379> SDIFF jihe1 jihe2 # difference: members that are in jihe1 but not in jihe2

1) "a"

192.168.10.205:6379> SDIFF jihe2 jihe1

1) "b"

SINTER returns the intersection, that is, the members present in all of the given sets; any number of sets may be given:

192.168.10.205:6379> SADD jihe3 2 3 c

(integer) 3

192.168.10.205:6379> SINTER jihe1 jihe2 jihe3

1) "2"

2) "3"

SUNION returns the union: every member of any of the given sets, with duplicates counted once:

192.168.10.205:6379> SUNION jihe1 jihe2 jihe3

1) "c"

2) "1"

3) "b"

4) "3"

5) "a"

6) "2"
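The three set commands correspond to the usual set algebra. As a quick cross-check, the same jihe1, jihe2 and jihe3 contents can be fed to Python's built-in set type (the results are sorted here only to make the printout stable, since sets have no order):

```python
jihe1 = {"1", "2", "3", "a"}
jihe2 = {"1", "2", "3", "b"}
jihe3 = {"2", "3", "c"}

# SDIFF jihe1 jihe2: members of the first set that are missing from the second
print(sorted(jihe1 - jihe2))

# SDIFF jihe2 jihe1: the difference is not symmetric
print(sorted(jihe2 - jihe1))

# SINTER jihe1 jihe2 jihe3: members present in every set
print(sorted(jihe1 & jihe2 & jihe3))

# SUNION jihe1 jihe2 jihe3: members present in at least one set
print(sorted(jihe1 | jihe2 | jihe3))
```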

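3.5 Sorted sets: every member carries a score, and members are kept in score order

192.168.10.205:6379> ZADD youxv 1 b 2 a 3 c # create a sorted set by giving each member a score; members are positioned by their scores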

(integer) 3

192.168.10.205:6379> ZSCORE youxv a # get the score of a member

"2"

192.168.10.205:6379> ZSCORE youxv b

"1"

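ZRANGE returns members ordered by score:

192.168.10.205:6379> ZRANGE youxv 0 3 # 0 and 3 are the index range; the member count is available from ZCARD, and a range beyond the end is not an error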

1) "b"

2) "a"

3) "c"
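A sorted set can be pictured as a mapping from member to score that is always read back in score order. The sketch below is a simplified model with hypothetical helper names; ties are broken by member name, and the index range is inclusive and may run past the end.

```python
def zadd(zset, *score_member_pairs):
    """Add (score, member) pairs; return how many members were newly added."""
    added = 0
    for score, member in zip(score_member_pairs[0::2], score_member_pairs[1::2]):
        if member not in zset:
            added += 1
        zset[member] = float(score)
    return added


def zrange(zset, start, stop):
    """Members ordered by (score, member), from index start to stop inclusive."""
    ordered = sorted(zset, key=lambda member: (zset[member], member))
    n = len(ordered)
    if start < 0:
        start = max(n + start, 0)
    if stop < 0:
        stop = n + stop
    if stop < 0:
        return []
    return ordered[start:stop + 1]


youxv = {}
print(zadd(youxv, 1, "b", 2, "a", 3, "c"))
print(zrange(youxv, 0, 3))    # the range may exceed the number of members
print(youxv["a"])             # the score, as ZSCORE would report it
```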

############################################################################################ Redis message queue (publish/subscribe), persistence (RDB, AOF), cluster

I: Subscribing:

192.168.10.205:6379> SUBSCRIBE test

Reading messages... (press Ctrl-C to quit)

1) "subscribe"

2) "test"

3) (integer) 1

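In another terminal, publish a message to the test channel (the return value is the number of subscribers that received it; publishing to a channel nobody subscribes to returns 0):

127.0.0.1:6379> PUBLISH test hello

(integer) 1

The subscriber in the first terminal receives the message: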

1) "message"

2) "test"

3) "hello"
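The delivery rule can be modeled in a few lines: a message reaches only the subscribers of exactly that channel, and PUBLISH reports how many there were. The `Broker` class below is a hypothetical in-memory stand-in, not the Redis implementation.

```python
class Broker:
    """Minimal in-memory publish/subscribe model (illustrative only)."""

    def __init__(self):
        self.channels = {}   # channel name -> list of callbacks

    def subscribe(self, channel, callback):
        self.channels.setdefault(channel, []).append(callback)

    def publish(self, channel, message):
        # Deliver to the subscribers of this exact channel and return their count.
        receivers = self.channels.get(channel, [])
        for callback in receivers:
            callback(channel, message)
        return len(receivers)


received = []
broker = Broker()
broker.subscribe("test", lambda channel, message: received.append((channel, message)))

print(broker.publish("test1", "hello"))   # nobody listens on test1
print(broker.publish("test", "hello"))    # one subscriber on test
print(received)
```

Messages are not stored: a subscriber that connects after the PUBLISH never sees it, which is why this mechanism is only a lightweight message queue.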

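II: Redis persistence:

Redis supports two kinds of persistence. RDB writes a snapshot of the in-memory data to a file at configured intervals. AOF logs every write command the server executes and replays the whole log when the service restarts, which rebuilds the data.

After a power failure RDB loses everything written since the last snapshot; AOF loses far less (at most about one second of writes with the default appendfsync everysec).

1. Persisting Redis data with RDB:

Snapshot settings in the configuration file:

To take a snapshot, Redis forks a child of the current process that does the work in the background without affecting the running process. The child writes a temporary file first and, once finished, replaces the old file with it, so the RDB file is a complete copy at all times.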

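save 900 1 # snapshot if at least 1 key changed within 900 seconds

save 300 10 # snapshot if at least 10 keys changed within 300 seconds

save 60 10000 # snapshot if at least 10000 keys changed within 60 seconds

rdbcompression yes # whether to compress the RDB file: yes compresses, no does not

rdbchecksum yes # whether to write and verify a CRC64 checksum; enabled by default

dbfilename dump.rdb # name of the snapshot file

dir /usr/local/redis # directory where the snapshot file is saved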
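The three save lines combine with a logical OR: a snapshot is due as soon as any one rule is satisfied. A minimal sketch of that decision, assuming the server tracks the seconds elapsed and the number of changes since the last successful save (the function and variable names are made up for illustration):

```python
# (seconds, changes) pairs taken from the save lines above
SAVE_RULES = [(900, 1), (300, 10), (60, 10000)]


def snapshot_due(rules, seconds_since_save, changes_since_save):
    """True if any rule has both its time window and its change count reached."""
    return any(
        seconds_since_save >= seconds and changes_since_save >= changes
        for seconds, changes in rules
    )


print(snapshot_due(SAVE_RULES, 61, 10000))   # busy server: the 60-second rule fires
print(snapshot_due(SAVE_RULES, 61, 9999))    # not enough changes for any rule yet
print(snapshot_due(SAVE_RULES, 900, 1))      # quiet server: the 900-second rule fires
```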

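SAVE and BGSAVE:

SAVE: saves synchronously and blocks the server while it runs

BGSAVE: saves in the background without blocking

kill -9 loses all data written since the last save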

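2. Persisting data with AOF:

appendonly yes # the default is no; change it to yes

appendfilename "appendonly.aof" # file name; the file is stored in the directory set by dir, the same one RDB uses

Restart the service for the change to take effect.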
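The idea behind AOF recovery is that the data set is whatever results from replaying the logged write commands in order. The sketch below models that with a tiny command interpreter; it understands only SET, DEL and INCR, and the log content is invented for illustration (a real AOF file uses the Redis protocol format, not plain text lines like these).

```python
def apply_command(db, command):
    """Apply one logged write command to the in-memory dictionary."""
    name, *args = command.split()
    name = name.upper()
    if name == "SET":
        db[args[0]] = args[1]
    elif name == "DEL":
        for key in args:
            db.pop(key, None)
    elif name == "INCR":
        db[args[0]] = str(int(db.get(args[0], "0")) + 1)
    else:
        raise ValueError("unsupported command in this sketch: " + name)


def replay(log):
    """Rebuild the data set from an empty state by replaying the whole log."""
    db = {}
    for command in log:
        apply_command(db, command)
    return db


aof_log = ["SET key1 value1", "INCR num", "INCR num", "SET key2 value2", "DEL key2"]
print(replay(aof_log))
```

Because every write is replayed, the log keeps growing; this is the reason Redis periodically rewrites the AOF into a shorter equivalent.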

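III: Redis master-slave replication:

A master can have several slaves, and a slave can in turn be the master of other slaves. Replication does not block the master, which forks a child process to produce the snapshot.

When the master receives a SYNC command from a slave it runs BGSAVE in the background and then sends the saved RDB file to the slave, which loads it into memory. Newer versions (2.8 and later) also support partial resynchronization.

Check the default state with the INFO command: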

# Replication

role:master # the default role is master

connected_slaves:0

master_repl_offset:0

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

Run on the slave:

127.0.0.1:6379> SLAVEOF 192.168.10.205 6379 # address and port of the master

OK

Check the INFO output on the slave:

# Replication

role:slave # the role is now slave

master_host:192.168.10.205 # address of the master

master_port:6379 # port of the master

master_link_status:up

master_last_io_seconds_ago:8

master_sync_in_progress:0

slave_repl_offset:29

slave_priority:100

slave_read_only:1

connected_slaves:0

master_repl_offset:0

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

Status on the master:

# Replication

role:master # the role is master

connected_slaves:1

slave0:ip=192.168.10.206,port=6379,state=online,offset=471,lag=0 # address and port of the slave

master_repl_offset:471

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:2

repl_backlog_histlen:470

A slave is read-only, so writes to it fail:

127.0.0.1:6379> set a x

(error) READONLY You can't write against a read only slave.

IV: Redis clustering:

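Four main approaches:

1. Client-side sharding: flexible and without a single point of failure, but adding a node means reconfiguring the clients and synchronizing data by hand

2. Proxy sharding: a proxy fetches the data and the sharding algorithm is configured on the proxy, for example Twemproxy

3. Redis Cluster: supported since version 3.0 and decentralized; under some failure conditions data can be lost

4. Codis: the open-source solution from Wandoujia

Using Redis Cluster:

At least 6 instances are needed (3 masters and 3 slaves). Below, 8 instances are created; the extra 2 are kept as spares.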

Copy the configuration file to /opt, create 8 directories, and generate 8 redis.conf files that differ in port (and in any pid or RDB file name that contains the port). Every sed must replace 6379 in the original file, because 6379.conf never contains 7001, 7002 and so on. Redis Cluster also requires cluster-enabled yes in each redis.conf:

[root@node5 ~]# cd /opt/

[root@node5 opt]# mkdir `seq 7001 7008`

[root@node5 opt]# cp /etc/redis/6379.conf .

[root@node5 opt]# sed 's/6379/7001/g' 6379.conf > 7001/redis.conf

[root@node5 opt]# sed 's/6379/7002/g' 6379.conf > 7002/redis.conf

[root@node5 opt]# sed 's/6379/7003/g' 6379.conf > 7003/redis.conf

[root@node5 opt]# sed 's/6379/7004/g' 6379.conf > 7004/redis.conf

[root@node5 opt]# sed 's/6379/7005/g' 6379.conf > 7005/redis.conf

[root@node5 opt]# sed 's/6379/7006/g' 6379.conf > 7006/redis.conf

[root@node5 opt]# sed 's/6379/7007/g' 6379.conf > 7007/redis.conf

[root@node5 opt]# sed 's/6379/7008/g' 6379.conf > 7008/redis.conf
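The per-port substitution can be sketched in Python to show why every file must be derived from the original template. `BASE_CONFIG` is a made-up three-line excerpt, not the real 6379.conf, and `render_config` is a hypothetical helper doing what `sed 's/6379/PORT/g'` does.

```python
# Hypothetical excerpt standing in for /opt/6379.conf
BASE_CONFIG = (
    "port 6379\n"
    "pidfile /var/run/redis_6379.pid\n"
    "dbfilename dump_6379.rdb\n"
)


def render_config(base, port):
    """Replace every occurrence of 6379 with the target port."""
    return base.replace("6379", str(port))


# Always substitute in the original template, once per port.
configs = {port: render_config(BASE_CONFIG, port) for port in range(7001, 7009)}
print(configs[7002])

# Substituting 7001 -> 7002 in the original changes nothing,
# because the original contains 6379, not 7001.
print(BASE_CONFIG.replace("7001", "7002") == BASE_CONFIG)
```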

Start all the Redis instances:

[root@node5 opt]# for i in `seq 7001 7008`;do cd /opt/$i && /usr/local/redis/bin/redis-server /opt/$i/redis.conf; done

Confirm that every port accepts connections:

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7001

192.168.10.205:7001>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7002

192.168.10.205:7002>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7003

192.168.10.205:7003>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7004

192.168.10.205:7004>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7005

192.168.10.205:7005>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7006

192.168.10.205:7006>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7007

192.168.10.205:7007>

[root@node5 opt]# redis-cli -h 192.168.10.205 -p 7008

192.168.10.205:7008>

Install Ruby and the redis gem for the management script:

[root@node5 opt]# yum install ruby rubygems -y

[root@node5 opt]# gem install redis

Successfully installed redis-3.2.2

1 gem installed

Installing ri documentation for redis-3.2.2...

Installing RDoc documentation for redis-3.2.2..

Copy the Ruby management script:

[root@node5 ~]# cp /root/redis-3.0.7/src/redis-trib.rb /usr/local/bin/redis-trib # a Ruby script that makes cluster management easier

Overview of its functions:

[root@node5 ~]# redis-trib help

Usage: redis-trib <command> <options> <arguments ...>

help (show this help)

del-node host:port node_id # remove a node

reshard host:port # reshard (move hash slots between nodes)

--timeout <arg>

--pipeline <arg>

--slots <arg>

--to <arg>

--yes

--from <arg>

fix host:port

--timeout <arg>

create host1:port1 ... hostN:portN # create a cluster

--replicas <arg>

rebalance host:port

--timeout <arg>

--simulate

--pipeline <arg>

--threshold <arg>

--use-empty-masters

--auto-weights

--weight <arg>

call host:port command arg arg .. arg

add-node new_host:new_port existing_host:existing_port # add a node

--slave

--master-id <arg>

check host:port # check the cluster through this node

import host:port

--replace

--copy

--from <arg>

set-timeout host:port milliseconds

info host:port

Create the cluster:

[root@node5 ~]# redis-trib create --replicas 1 192.168.10.205:7001 192.168.10.205:7002 192.168.10.205:7003 192.168.10.205:7004 192.168.10.205:7005 192.168.10.205:7006

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

192.168.10.205:7001

192.168.10.205:7002

192.168.10.205:7003

Adding replica 192.168.10.205:7004 to 192.168.10.205:7001

Adding replica 192.168.10.205:7005 to 192.168.10.205:7002

Adding replica 192.168.10.205:7006 to 192.168.10.205:7003

M: 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001

slots:0-5460 (5461 slots) master

M: 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002

slots:5461-10922 (5462 slots) master

M: 8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003

slots:10923-16383 (5461 slots) master

S: 32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004

replicates 9db0386330f3d66d0c7cac48dbf2dda1972c96c4

S: 19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005

replicates 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6

S: 840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006

replicates 8f2f43ef9008f299786b3f02446a0ac0d20dde35

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join..

>>> Performing Cluster Check (using node 192.168.10.205:7001)

M: 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001 # node ID, IP and port

slots:0-5460 (5461 slots) master # hash slots served by this node: 0-5460

M: 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002

slots:5461-10922 (5462 slots) master # hash slots served by this node: 5461-10922

M: 8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003

slots:10923-16383 (5461 slots) master # hash slots served by this node: 10923-16383

M: 32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004

slots: (0 slots) master

replicates 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 # ID of its master

M: 19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005

slots: (0 slots) master

replicates 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 # ID of its master

M: 840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006

slots: (0 slots) master

replicates 8f2f43ef9008f299786b3f02446a0ac0d20dde35 # ID of its master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
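The slot ranges in the output above (0-5460, 5461-10922, 10923-16383) come from dividing the 16384 hash slots as evenly as possible among the masters. One simple way to reproduce them is sketched below; it is an illustration that happens to match this output and is not claimed to be the exact code of redis-trib. The function name `split_slots` is hypothetical.

```python
TOTAL_SLOTS = 16384


def split_slots(master_count, total=TOTAL_SLOTS):
    """Return one inclusive (first, last) slot range per master."""
    per_master = total / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        last = round(cursor + per_master - 1)
        # The final master always takes whatever is left.
        if index == master_count - 1 or last > total - 1:
            last = total - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


for first, last in split_slots(3):
    print(f"slots:{first}-{last} ({last - first + 1} slots)")
```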

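Connect to the cluster (-c turns on cluster mode, so redis-cli follows redirections):

[root@node5 ~]# redis-cli -h 192.168.10.205 -c -p 7001

Check the replication status with INFO: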

# Replication

role:master

connected_slaves:1

slave0:ip=192.168.10.205,port=7004,state=online,offset=407,lag=0

master_repl_offset:407

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:2

repl_backlog_histlen:406

Working with the cluster:

192.168.10.205:7001> set key1 value1

-> Redirected to slot [9189] located at 192.168.10.205:7002

OK

192.168.10.205:7002> set key2 value2

-> Redirected to slot [4998] located at 192.168.10.205:7001

OK

192.168.10.205:7001> set key3 value3

OK

192.168.10.205:7001> set key4 value4

-> Redirected to slot [13120] located at 192.168.10.205:7003

OK

192.168.10.205:7003> set key5 value5

-> Redirected to slot [9057] located at 192.168.10.205:7002

OK

192.168.10.205:7002> set key6 value6

-> Redirected to slot [4866] located at 192.168.10.205:7001

OK

192.168.10.205:7001> set key7 value7

OK

192.168.10.205:7001> set key8 value8

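-> Redirected to slot [13004] located at 192.168.10.205:7003 # keys are not written round-robin: each key is hashed to a slot (CRC16 of the key modulo 16384) and stored on the master that owns that slot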

OK
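The slot numbers in the redirections above can be reproduced offline. Redis Cluster maps a key to a slot with CRC16 (the XMODEM variant) of the key, modulo 16384; if the key contains a non-empty `{...}` hash tag, only the tag is hashed. The sketch below implements that rule in plain Python. The `SLOT_OWNERS` table is copied from the cluster output earlier in this walkthrough and is specific to this setup.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot of a key, honoring a non-empty {hash tag} if present."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Slot ranges as assigned when the cluster was created above.
SLOT_OWNERS = {7001: (0, 5460), 7002: (5461, 10922), 7003: (10923, 16383)}


def owner_port(key: str) -> int:
    slot = key_slot(key)
    for port, (first, last) in SLOT_OWNERS.items():
        if first <= slot <= last:
            return port
    raise ValueError("slot not covered")


for name in ("key1", "key2", "key4"):
    print(name, key_slot(name), owner_port(name))
```

Keys that share a hash tag, such as `{user1}:name` and `{user1}:cart`, land in the same slot, which is what makes multi-key commands on them possible in a cluster.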

CLUSTER NODES lists the current masters and slaves:

192.168.10.205:7003> CLUSTER nodes

8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003 myself,master - 0 0 3 connected 10923-16383

5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002 master - 0 1458558870837 2 connected 5461-10922

32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004 slave 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 0 1458558869829 4 connected

840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006 slave 8f2f43ef9008f299786b3f02446a0ac0d20dde35 0 1458558868820 6 connected

19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005 slave 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 0 1458558870334 5 connected

9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001 master - 0 1458558869829 1 connected 0-5460

CLUSTER INFO shows the current state:

192.168.10.205:7003> CLUSTER INFO

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:3

cluster_stats_messages_sent:2911

cluster_stats_messages_received:2911

Add a node to the cluster. The first argument is the node to add (host:port); the second is an existing node of the cluster it should join:

[root@node5 ~]# redis-trib add-node 192.168.10.205:7007 192.168.10.205:7001

>>> Adding node 192.168.10.205:7007 to cluster 192.168.10.205:7001

>>> Performing Cluster Check (using node 192.168.10.205:7001)

M: 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006

slots: (0 slots) slave

replicates 8f2f43ef9008f299786b3f02446a0ac0d20dde35

M: 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: 32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004

slots: (0 slots) slave

replicates 9db0386330f3d66d0c7cac48dbf2dda1972c96c4

S: 19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005

slots: (0 slots) slave

replicates 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.10.205:7007 to make it join the cluster.

[OK] New node added correctly.

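A newly added master owns no hash slots, so reshard after adding it (the command asks how many slots to move, which node receives them, and which nodes give them up):

[root@node5 ~]# redis-trib reshard 192.168.10.205:7007

Connect to the newly added node and check: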

[root@node5 ~]# redis-cli -h 192.168.10.205 -p 7007

192.168.10.205:7007> KEYS *

(empty list or set)

192.168.10.205:7007> CLUSTER NODES

5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002 master - 0 1458560205983 2 connected 5728-10922

8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003 master - 0 1458560204471 3 connected 11189-16383

19a5bb651f4b182a0e3dd49154323d31c1524167 192.168.10.205:7007 myself,master - 0 0 7 connected 0-265 5461-5727 10923-11188 # it now owns hash slots

19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005 slave 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 0 1458560204977 2 connected

840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006 slave 8f2f43ef9008f299786b3f02446a0ac0d20dde35 0 1458560205482 3 connected

9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001 master - 0 1458560204471 1 connected 266-5460

32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004 slave 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 0 1458560204977 1 connected

Add a slave for the newly added master:

[root@node5 ~]# redis-trib add-node 192.168.10.205:7008 192.168.10.205:7001 # add one more node

# log in to that node and configure it as a slave

[root@node5 ~]# redis-cli -h 192.168.10.205 -c -p 7008 # connect to the node that will become the slave

192.168.10.205:7008> CLUSTER replicate 19a5bb651f4b182a0e3dd49154323d31c1524167 # pass the node ID of its master

OK

Check the cluster again:

192.168.10.205:7008> CLUSTER nodes

19806347e07d4b341e3ddff16136241d16e410c4 192.168.10.205:7005 slave 5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 0 1458560717276 2 connected

ab146fb5881064a6375da76f861e205d2d702140 192.168.10.205:7008 myself,slave 19a5bb651f4b182a0e3dd49154323d31c1524167 0 0 0 connected # it is now a slave and connected

32d95f05196786500187137f30040968cc8b6521 192.168.10.205:7004 slave 9db0386330f3d66d0c7cac48dbf2dda1972c96c4 0 1458560716772 1 connected

8f2f43ef9008f299786b3f02446a0ac0d20dde35 192.168.10.205:7003 master - 0 1458560715764 3 connected 11189-16383

19a5bb651f4b182a0e3dd49154323d31c1524167 192.168.10.205:7007 master - 0 1458560717276 7 connected 0-265 5461-5727 10923-11188

9db0386330f3d66d0c7cac48dbf2dda1972c96c4 192.168.10.205:7001 master - 0 1458560717276 1 connected 266-5460

5d1ccd0b651b135bbc5e91976aff91ce3e33b0b6 192.168.10.205:7002 master - 0 1458560716267 2 connected 5728-10922

840e13e7cef0db82172fab8c50180088055ada60 192.168.10.205:7006 slave 8f2f43ef9008f299786b3f02446a0ac0d20dde35 0 1458560717780 3 connected

############################################################################################ Redis management tools

- phpRedisAdmin

- rdbtools

- Managing Redis with SaltStack

- Managing Redis with Codis

I: phpRedisAdmin: a web front end for Redis, much as phpMyAdmin is for MySQL

Install the environment:

[root@node5 ~]# yum install httpd php php-redis -y

Download the package:

[root@node5 html]# git clone https://github.com/erikdubbelboer/phpRedisAdmin.git

Rename it and resolve the dependency:

[root@node5 html]# mv phpRedisAdmin admin

[root@node5 html]# cd admin

[root@node5 admin]# git clone https://github.com/nrk/predis.git vendor # the dependency

Start httpd. First make sure port 80 is free; if it is taken, change the listening port to something other than 80:

[root@node5 html]# /etc/init.d/httpd restart

Stopping httpd: [FAILED]

Starting httpd: [ OK ]

[root@node5 html]# chkconfig httpd on

Open http://ServerIP/admin:

The page fails to load. The Apache error log shows:

[Mon Mar 21 21:24:47 2016] [error] [client 192.168.10.1] PHP Fatal error: require(): Failed opening required '/var/www/html/admin/includes/../vendor/autoload.php' (include_path='.:/usr/share/pear:/usr/share/php') in /var/www/html/admin/includes/common.inc.php on line 2

How it was resolved:

[root@node5 admin]# cd includes/

[root@node5 includes]# ls config.sample.inc.php config.inc.php

[root@node5 includes]# vim config.inc.php

$config = array(
  'servers' => array(
    array(
      'name' => 'local server', // Optional name.
      'host' => '192.168.10.205', // set this to the IP of your own host
      'port' => 6379,
      'filter' => '*',

      // Optional Redis authentication.
      //'auth' => 'redispasswordhere' // Warning: The password is sent in plain-text to the Redis server.
    ),

Save, exit and restart httpd:

[root@node5 includes]# /etc/init.d/httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

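The page still cannot be opened; the error is now:

[Mon Mar 21 21:55:27 2016] [error] [client 192.168.10.1] PHP Fatal error: Call to undefined function mb_internal_encoding() in /var/www/html/admin/includes/common.inc.php on line 59

Workaround (the function belongs to PHP's mbstring extension, so installing that extension is the alternative to editing the file):

[root@node5 admin]# vim /var/www/html/admin/includes/common.inc.php

mb_internal_encoding('utf-8');

Comment this line out, like this:

#mb_internal_encoding('utf-8');

Open it in the browser: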

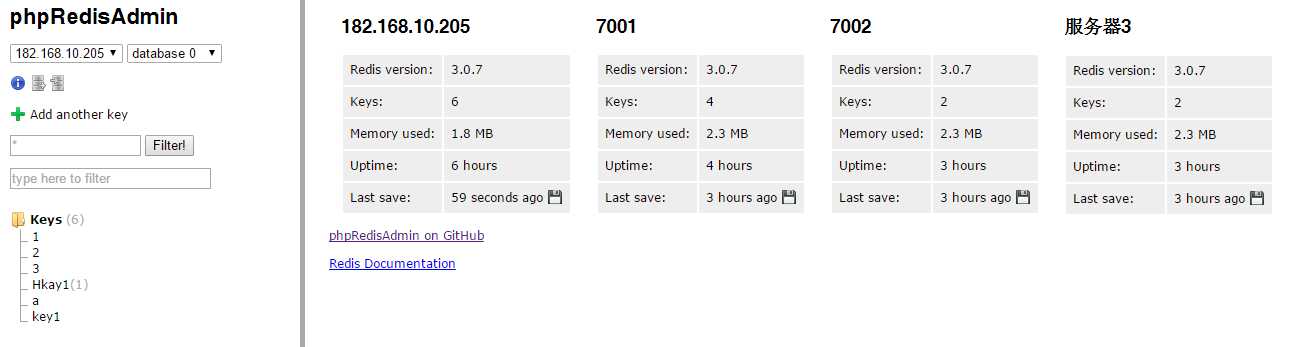

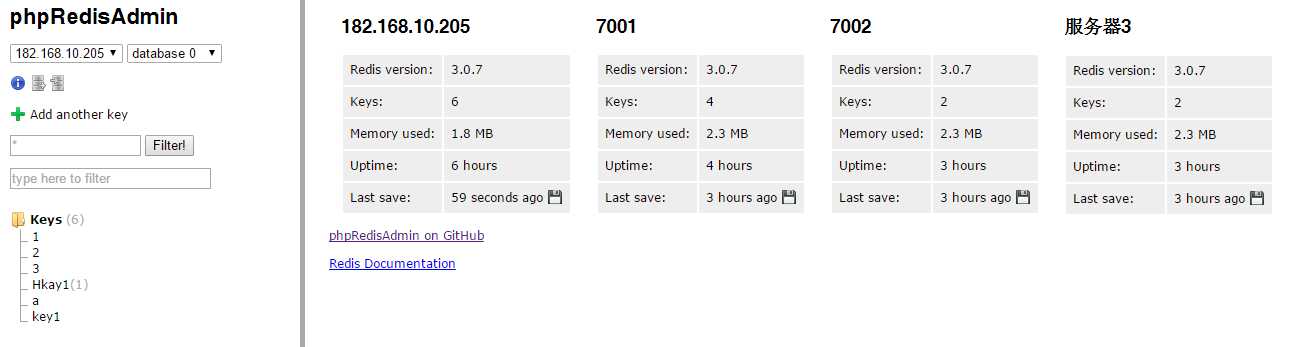

Managing several Redis servers:

[root@node5 includes]# pwd

/var/www/html/admin/includes

[root@node5 includes]# vim config.inc.php

$config = array(
  'servers' => array(
    array(
      'name' => '192.168.10.205', // Optional name.
      'host' => '192.168.10.205',
      'port' => 6379,
      'filter' => '*',

      // Optional Redis authentication.
      //'auth' => 'redispasswordhere' // Warning: The password is sent in plain-text to the Redis server.
    ),
    array( // one entry per server; the name is arbitrary, host and port are those of the Redis instance
      'name' => '7001', // Optional name; non-ASCII names such as Chinese are supported.
      'host' => '192.168.10.205',
      'port' => 7001,
    ),
    array(
      'name' => '7002', // Optional name.
      'host' => '192.168.10.205',
      'port' => 7002,
    ),
    array(
      'name' => '7003', // Optional name.
      'host' => '192.168.10.205',
      'port' => 7003,
    ),

Apache does not need a restart after this change; if the admin page is already open in the browser, just refresh it.

II: rdbtools:

It is installed with pip; if pip is missing, install pip first:

[root@node5 ~]# yum install python-pip

Install rdbtools with pip:

[root@node5 ~]# pip install rdbtools

/usr/lib/python2.6/site-packages/pip/_vendor/requests/packages/urllib3/util/ssl_.py:90: InsecurePlatformWarning: A true SSLContext object is not available. This prevents urllib3 from configuring SSL appropriately and may cause certain SSL connections to fail. For more information, see https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning.

InsecurePlatformWarning

You are using pip version 7.1.0, however version 8.1.1 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

Collecting rdbtools

/usr/lib/python2.6/site-packages/pip/_vendor/requests/packages/urllib3/util/ssl_.py:90: InsecurePlatformWarning: A true SSLContext object is not available. This prevents urllib3 from configuring SSL appropriately and may cause certain SSL connections to fail. For more information, see https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning.

InsecurePlatformWarning

Downloading rdbtools-0.1.6.tar.gz

Installing collected packages: rdbtools

Running setup.py install for rdbtools

Successfully installed rdbtools-0.1.6

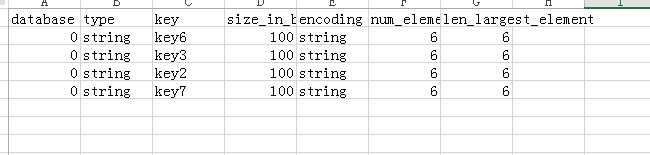

导出rdb中的key信息:

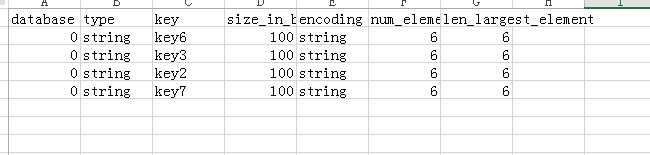

[root@node5 7001]# rdb -c memory dump_7001.rdb > memory.csv #然后将memory.csv文件下载到本地,即可用excel打开分析了,格式如下图:
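Instead of Excel, the report can also be summarized with a short script. The sketch below assumes the CSV header written by the rdbtools memory report is `database,type,key,size_in_bytes,encoding,num_elements,len_largest_element`; check the first line of your own memory.csv, since other versions may differ. The rows in `SAMPLE_CSV` are invented for illustration and are not real measurements.

```python
import csv
import io
from collections import defaultdict

# Invented sample in the assumed report format (not real measurements).
SAMPLE_CSV = """database,type,key,size_in_bytes,encoding,num_elements,len_largest_element
0,string,key1,88,string,8,8
0,hash,shouji,320,ziplist,4,6
0,list,list1,152,ziplist,3,1
0,string,num,72,string,8,8
"""


def bytes_per_type(csv_text):
    """Total size_in_bytes for each Redis data type."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["type"]] += int(row["size_in_bytes"])
    return dict(totals)


def largest_keys(csv_text, count=3):
    """The `count` keys with the largest size_in_bytes, biggest first."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    rows.sort(key=lambda row: int(row["size_in_bytes"]), reverse=True)
    return [(row["key"], int(row["size_in_bytes"])) for row in rows[:count]]


print(bytes_per_type(SAMPLE_CSV))
print(largest_keys(SAMPLE_CSV, 2))
```

To run it on a real report, read the file with `open("memory.csv").read()` and pass the text to the same functions.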

III: Managing Redis with SaltStack:

Module name: redismod

Official documentation: https://docs.saltstack.com/en/latest/ref/modules/all/index.html

IV: Managing Redis with Codis:

1. About Codis:

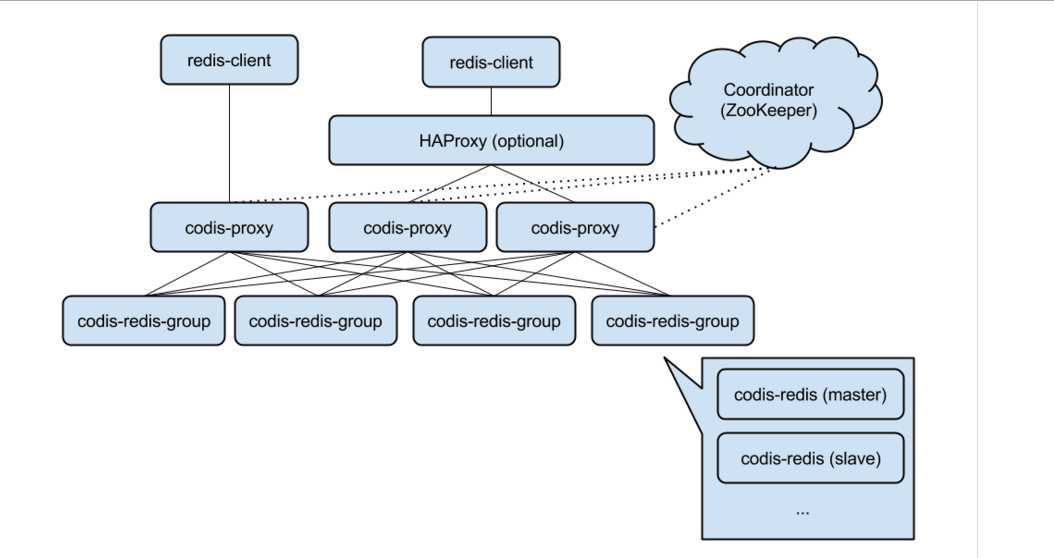

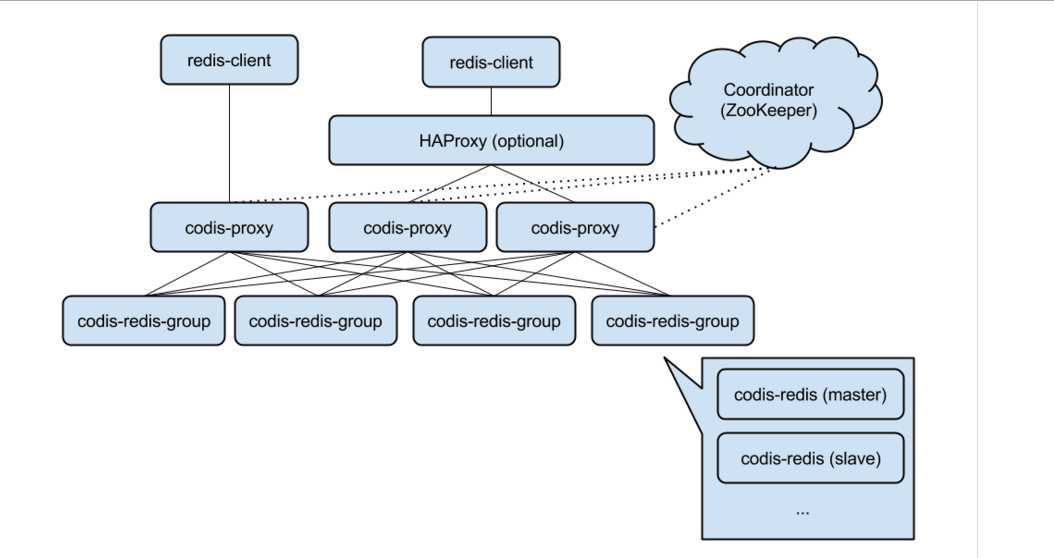

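Codis is a distributed Redis solution. To the application on top, connecting to Codis Proxy is no different in practice from connecting to a native Redis server (apart from a list of unsupported commands), so it can be used like a single Redis instance. Underneath, Codis handles request forwarding, data migration without downtime and similar work. All of that is transparent to the client, which can simply treat the back end as one Redis service with unlimited memory. Its architecture is shown in the figures above.

Codis consists of four parts:

- Codis Proxy (codis-proxy) # proxies the Redis servers and dispatches the requests of Redis clients; haproxy can be placed in front of it for load balancing.

- Codis Dashboard (codis-config) # the Codis management tool: it adds and removes Redis nodes, starts data migrations, and ships with an HTTP server that provides a dashboard for monitoring and configuration in the browser.

- Codis Redis (codis-server) # a fork based on Redis 2.8; Codis only works with this codis-server build

- ZooKeeper/Etcd # Codis relies on ZooKeeper to store the data routing table and the metadata of the codis-proxy nodes; commands issued by codis-config are propagated through ZooKeeper to every live codis-proxy.

codis-proxy is the Redis proxy service that clients connect to. It implements the Redis protocol itself and behaves just like a native Redis (as Twemproxy does). Several codis-proxy instances can be deployed for one application, since codis-proxy is stateless.

codis-config is the Codis management tool. It supports adding and removing Redis nodes, adding and removing proxy nodes, starting data migrations and so on. It also ships with an HTTP server that starts a dashboard, so the state of the Codis cluster can be watched directly in the browser.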

2. Prepare the base environment:

Install Go and set the environment variable:

[root@node5 opt]# yum install golang -y # Codis is written in Go, so a Go environment is required

[root@node5 opt]# mkdir /opt/gopath # directory used as GOPATH

[root@node5 opt]# vim /etc/profile

export GOPATH=/opt/gopath

[root@node5 opt]# source /etc/profile

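Install and configure ZooKeeper, which needs a Java environment:

[root@node5 ~]# wget http://mirrors.cnnic.cn/apache/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz

[root@node5 ~]# tar xf zookeeper-3.4.6.tar.gz # unpack the archive

[root@node5 ~]# mv zookeeper-3.4.6 /usr/local/zookeeper # move it into place

[root@node5 ~]# cd /usr/local/zookeeper/conf/ # enter the configuration directory

[root@node5 conf]# cp zoo_sample.cfg /opt/zoo.cfg # copy the sample configuration

[root@node5 conf]# cd /opt/

[root@node5 opt]# mkdir zk1 zk2 zk3 # one directory per ZooKeeper instance; each instance gets a different server ID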

[root@node5 opt]# echo 1 > zk1/myid

[root@node5 opt]# echo 2 > zk2/myid

[root@node5 opt]# echo 3 > zk3/myid

[root@node5 opt]# vim zoo.cfg # edit the configuration file

dataDir=/opt/zk1 # directory where data is stored

clientPort=2181 # port that clients connect to

server.1 = 192.168.10.205:2887:3887

server.2 = 192.168.10.205:2888:3888

server.3 = 192.168.10.205:2889:3889

[root@node5 opt]# cp zoo.cfg zk1/zk1.cfg

[root@node5 opt]# cp zoo.cfg zk2/zk2.cfg

[root@node5 opt]# cp zoo.cfg zk3/zk3.cfg

[root@node5 opt]# vim zk2/zk2.cfg # each instance uses its own data directory and client port

dataDir=/opt/zk2

clientPort=2182

[root@node5 opt]# vim zk3/zk3.cfg

dataDir=/opt/zk3

clientPort=2183

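Full configuration:

[root@node5 opt]# grep '^[a-z]' zk3/zk3.cfg

tickTime=2000 # heartbeat interval in milliseconds between servers and between client and server

initLimit=10 # a follower may take at most 10 ticks (10 * 2000 ms = 20 s) for its initial connection to the leader

syncLimit=5 # a request and its reply between leader and follower may take at most this many ticks

dataDir=/opt/zk3 # data directory

clientPort=2183 # port that clients connect to

server.1 = 192.168.10.205:2887:3887 # server IDs and cluster ports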

server.2 = 192.168.10.205:2888:3888

server.3 = 192.168.10.205:2889:3889

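Detailed explanation:

tickTime: the heartbeat interval, in milliseconds, between ZooKeeper servers and between client and server; one heartbeat is sent every tickTime.

dataDir: the directory where ZooKeeper stores its data. By default the transaction log files are written to this directory as well.

clientPort: the port clients use to connect to the ZooKeeper server. ZooKeeper listens on it and accepts client requests there.

initLimit: the maximum number of heartbeat intervals ZooKeeper tolerates while a client makes its initial connection. The client here is not a user's client but a follower server in the ensemble connecting to the leader. If the leader has heard nothing back after 10 heartbeat intervals (10 times tickTime), the connection is considered failed. The total time is 10 * 2000 ms = 20 seconds.

syncLimit: the maximum number of tickTime intervals a request and its reply between leader and follower may take. The total time is 5 * 2000 ms = 10 seconds.

server.A=B:C:D: A is a number identifying the server; B is the server's IP address; C is the port this server uses to exchange information with the leader of the ensemble; D is the port the servers use to talk to each other during an election, which is needed when the leader fails and a new one has to be chosen. In a pseudo-cluster all B values are identical, so the ZooKeeper instances cannot share port numbers and each must be given different ones.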
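The two limits are expressed in ticks, so the real timeouts follow from a multiplication with tickTime. A small sketch of that arithmetic for the values used in this setup, plus the majority an ensemble needs in order to elect a leader (the helper names are made up for illustration):

```python
def zk_timeouts(tick_time_ms, init_limit, sync_limit):
    """Convert initLimit and syncLimit from ticks into seconds."""
    return {
        "init_timeout_s": tick_time_ms * init_limit / 1000,
        "sync_timeout_s": tick_time_ms * sync_limit / 1000,
    }


def quorum_size(server_count):
    """Smallest majority of the ensemble."""
    return server_count // 2 + 1


print(zk_timeouts(2000, 10, 5))   # tickTime=2000, initLimit=10, syncLimit=5
print(quorum_size(3))             # three servers are configured above
```

With three instances, the ensemble keeps working as long as two of them are up.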

Start each ZooKeeper instance:

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk1/zk1.cfg

JMX enabled by default

Using config: /opt/zk1/zk1.cfg

Starting zookeeper ... STARTED

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk2/zk2.cfg

JMX enabled by default

Using config: /opt/zk2/zk2.cfg

Starting zookeeper ... STARTED

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk3/zk3.cfg

JMX enabled by default

Using config: /opt/zk3/zk3.cfg

Starting zookeeper ... STARTED

Confirm that the ports are listening:

[root@node5 opt]# ss -tnl | grep 2181

LISTEN 0 50 :::2181 :::*

[root@node5 opt]# ss -tnl | grep 2182

LISTEN 0 50 :::2182 :::*

[root@node5 opt]# ss -tnl | grep 2183

LISTEN 0 50 :::2183 :::*

[root@node5 opt]#
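The same check can be scripted. Below is a minimal sketch that tries a TCP connection to each client port; the helper name `is_listening` is hypothetical, and the host 127.0.0.1 assumes the script runs on the machine that hosts all three instances.

```python
import socket


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Client ports of the three pseudo-cluster instances configured above.
    for port in (2181, 2182, 2183):
        state = "open" if is_listening("127.0.0.1", port) else "closed"
        print(port, state)
```

A successful connection only shows that something accepts connections on the port; it does not prove the ensemble has elected a leader.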

Check the status of each node:

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk1/zk1.cfg

JMX enabled by default

Using config: /opt/zk1/zk1.cfg

Mode: follower

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk2/zk2.cfg

JMX enabled by default

Using config: /opt/zk2/zk2.cfg

Mode: leader # this node is the leader and the other two are followers, which means the ensemble is up

[root@node5 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk3/zk3.cfg

JMX enabled by default

Using config: /opt/zk3/zk3.cfg

Mode: follower
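The role of a node can also be queried over the client port with ZooKeeper's four-letter command `srvr`, whose reply contains a `Mode:` line. The sketch below is an illustration under assumptions: `parse_mode` and `query_mode` are hypothetical helper names, the sample reply is abbreviated and hand-written rather than captured from this cluster, and later ZooKeeper releases may restrict four-letter commands through a whitelist setting.

```python
import socket


def parse_mode(response):
    """Extract the value of the 'Mode:' line from a four-letter-command reply."""
    for line in response.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def query_mode(host, port, timeout=2.0):
    """Send 'srvr' to a ZooKeeper server and return its mode, or None."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return parse_mode(b"".join(chunks).decode("utf-8", "replace"))


# Hand-written, abbreviated reply used only to exercise the parser.
SAMPLE_REPLY = "Zookeeper version: 3.4.6-1569965\nMode: follower\n"
print(parse_mode(SAMPLE_REPLY))
```

Against the cluster above, a call such as `query_mode("192.168.10.205", 2182)` would be expected to report the same role as `zkServer.sh status`.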

Connect to a ZooKeeper node:

[root@node5 opt]# /usr/local/zookeeper/bin/zkCli.sh -server 192.168.10.205:2181

Connecting to 192.168.10.205:2181

2016-03-22 01:27:48,325 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-03-22 01:27:48,331 [myid:] - INFO [main:Environment@100] - Client environment:host.name=node5.a.com

2016-03-22 01:27:48,332 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_45

2016-03-22 01:27:48,334 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2016-03-22 01:27:48,334 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.45.x86_64/jre

2016-03-22 01:27:48,334 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.7.0.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.16.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.6.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:

2016-03-22 01:27:48,334 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-431.el6.x86_64

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2016-03-22 01:27:48,335 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2016-03-22 01:27:48,336 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/opt

2016-03-22 01:27:48,337 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=192.168.10.205:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@6ade299f

Welcome to ZooKeeper!

2016-03-22 01:27:48,393 [myid:] - INFO [main-SendThread(192.168.10.205:2181):ClientCnxn$SendThread@975] - Opening socket connection to server 192.168.10.205/192.168.10.205:2181. Will not attempt to authenticate using SASL (unknown error)

2016-03-22 01:27:48,424 [myid:] - INFO [main-SendThread(192.168.10.205:2181):ClientCnxn$SendThread@852] - Socket connection established to 192.168.10.205/192.168.10.205:2181, initiating session

JLine support is enabled

2016-03-22 01:27:48,621 [myid:] - INFO [main-SendThread(192.168.10.205:2181):ClientCnxn$SendThread@1235] - Session establishment complete on server 192.168.10.205/192.168.10.205:2181, sessionid = 0x1539a349fc40000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

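The log above is the initialization output printed when the client connects.

Change into the Codis source directory and build: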

[root@node5 codis]# pwd

/opt/gopath/src/github.com/CodisLabs/codis

[root@node5 codis]# ls

cmd docker genver.sh MIT-LICENSE.txt test

config.ini Dockerfile Godeps pkg vitess_license

doc extern Makefile README.md wandoujia_licese.txt

[root@node5 codis]# make

GO15VENDOREXPERIMENT=0 GOPATH=`godep path` godep restore

godep: [WARNING]: godep should only be used inside a valid go package directory and

godep: [WARNING]: may not function correctly. You are probably outside of your $GOPATH.

godep: [WARNING]: Current Directory: /opt/gopath/src/github.com/CodisLabs/codis

godep: [WARNING]: $GOPATH: /opt/gopath/src/github.com/CodisLabs/codis/Godeps/_workspace

GOPATH=`godep path`:$GOPATH go build -o bin/codis-proxy ./cmd/proxy

GOPATH=`godep path`:$GOPATH go build -o bin/codis-config ./cmd/cconfig

make -j4 -C extern/redis-2.8.21/

make[1]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21‘

cd src && make all

make[2]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/src‘

rm -rf redis-server redis-sentinel redis-cli redis-benchmark redis-check-dump redis-check-aof *.o *.gcda *.gcno *.gcov redis.info lcov-html

(cd ../deps && make distclean)

make[3]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps‘

(cd hiredis && make clean) > /dev/null || true

(cd linenoise && make clean) > /dev/null || true

(cd lua && make clean) > /dev/null || true

(cd jemalloc && [ -f Makefile ] && make distclean) > /dev/null || true

(rm -f .make-*)

make[3]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps‘

(rm -f .make-*)

echo STD=-std=c99 -pedantic >> .make-settings

echo WARN=-Wall -W >> .make-settings

echo OPT=-O2 >> .make-settings

echo MALLOC=jemalloc >> .make-settings

echo CFLAGS= >> .make-settings

echo LDFLAGS= >> .make-settings

echo REDIS_CFLAGS= >> .make-settings

echo REDIS_LDFLAGS= >> .make-settings

echo PREV_FINAL_CFLAGS=-std=c99 -pedantic -Wall -W -O2 -g -ggdb -I../deps/hiredis -I../deps/linenoise -I../deps/lua/src -DUSE_JEMALLOC -I../deps/jemalloc/include >> .make-settings

echo PREV_FINAL_LDFLAGS= -g -ggdb -rdynamic >> .make-settings

(cd ../deps && make hiredis linenoise lua jemalloc)

make[3]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps‘

(cd hiredis && make clean) > /dev/null || true

(cd linenoise && make clean) > /dev/null || true

(cd lua && make clean) > /dev/null || true

(cd jemalloc && [ -f Makefile ] && make distclean) > /dev/null || true

(rm -f .make-*)

(echo "" > .make-ldflags)

(echo "" > .make-cflags)

MAKE hiredis

cd hiredis && make static

MAKE linenoise

cd linenoise && make

MAKE lua

make[4]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/linenoise‘

cc -Wall -Os -g -c linenoise.c

cd lua/src && make all CFLAGS="-O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL " MYLDFLAGS="" AR="ar rcu"

MAKE jemalloc

cd jemalloc && ./configure --with-jemalloc-prefix=je_ --enable-cc-silence CFLAGS="-std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops " LDFLAGS=""

make[4]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/lua/src‘

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lapi.o lapi.c

make[4]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/hiredis‘

cc -std=c99 -pedantic -c -O3 -fPIC -Wall -W -Wstrict-prototypes -Wwrite-strings -g -ggdb net.c

checking for xsltproc... /usr/bin/xsltproc

checking for gcc... gcc

checking whether the C compiler works... yes

checking for C compiler default output file name... a.out

checking for suffix of executables... cc -std=c99 -pedantic -c -O3 -fPIC -Wall -W -Wstrict-prototypes -Wwrite-strings -g -ggdb hiredis.c

checking whether we are cross compiling... no

checking for suffix of object files... make[4]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/linenoise‘

cc -std=c99 -pedantic -c -O3 -fPIC -Wall -W -Wstrict-prototypes -Wwrite-strings -g -ggdb sds.c

o

checking whether we are using the GNU C compiler... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lcode.o lcode.c

yes

checking whether gcc accepts -g... yes

checking for gcc option to accept ISO C89... none needed

checking how to run the C preprocessor... gcc -E

checking for grep that handles long lines and -e... /bin/grep

checking for egrep... /bin/grep -E

checking for ANSI C header files... yes

checking for sys/types.h... cc -std=c99 -pedantic -c -O3 -fPIC -Wall -W -Wstrict-prototypes -Wwrite-strings -g -ggdb async.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ldebug.o ldebug.c

yes

checking for sys/stat.h... yes

checking for stdlib.h... yes

checking for string.h... yes

checking for memory.h... yes

checking for strings.h... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ldo.o ldo.c

yes

ldo.c: In function ‘f_parser’:

ldo.c:496: warning: unused variable ‘c’

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ldump.o ldump.c

checking for inttypes.h... yes

checking for stdint.h... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lfunc.o lfunc.c

yes

checking for unistd.h... ar rcs libhiredis.a net.o hiredis.o sds.o async.o

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lgc.o lgc.c

yes

checking whether byte ordering is bigendian... make[4]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/hiredis‘

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o llex.o llex.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lmem.o lmem.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lobject.o lobject.c

no

checking size of void *... 8

checking size of int... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lopcodes.o lopcodes.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lparser.o lparser.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lstate.o lstate.c

4

checking size of long... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lstring.o lstring.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ltable.o ltable.c

8

checking size of intmax_t... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ltm.o ltm.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lundump.o lundump.c

8

checking build system type... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lvm.o lvm.c

x86_64-unknown-linux-gnu

checking host system type... x86_64-unknown-linux-gnu

checking whether pause instruction is compilable... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lzio.o lzio.c

yes

checking whether SSE2 intrinsics is compilable... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o strbuf.o strbuf.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o fpconv.o fpconv.c

yes

checking for ar... ar

checking whether __attribute__ syntax is compilable... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lauxlib.o lauxlib.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lbaselib.o lbaselib.c

yes

checking whether compiler supports -fvisibility=hidden... yes

checking whether compiler supports -Werror... yes

checking whether tls_model attribute is compilable... yes

checking for a BSD-compatible install... /usr/bin/install -c

checking for ranlib... ranlib

checking for ld... /usr/bin/ld

checking for autoconf... /usr/bin/autoconf

checking for memalign... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ldblib.o ldblib.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o liolib.o liolib.c

yes

checking for valloc... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lmathlib.o lmathlib.c

yes

checking configured backtracing method... N/A

checking for sbrk... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o loslib.o loslib.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o ltablib.o ltablib.c

yes

checking whether utrace(2) is compilable... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lstrlib.o lstrlib.c

no

checking whether valgrind is compilable... no

checking STATIC_PAGE_SHIFT... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o loadlib.o loadlib.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o linit.o linit.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lua_cjson.o lua_cjson.c

12

checking pthread.h usability... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lua_struct.o lua_struct.c

yes

checking pthread.h presence... yes

checking for pthread.h... yes

checking for pthread_create in -lpthread... yes

checking for _malloc_thread_cleanup... no

checking for _pthread_mutex_init_calloc_cb... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lua_cmsgpack.o lua_cmsgpack.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lua_bit.o lua_bit.c

no

checking for TLS... yes

checking whether a program using ffsl is compilable... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o lua.o lua.c

cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o luac.o luac.c

yes

checking whether atomic(9) is compilable... no

checking whether Darwin OSAtomic*() is compilable... no

checking whether to force 32-bit __sync_{add,sub}_and_fetch()... no

checking whether to force 64-bit __sync_{add,sub}_and_fetch()... cc -O2 -Wall -DLUA_ANSI -DENABLE_CJSON_GLOBAL -c -o print.o print.c

no

checking whether Darwin OSSpin*() is compilable... ar rcu liblua.a lapi.o lcode.o ldebug.o ldo.o ldump.o lfunc.o lgc.o llex.o lmem.o lobject.o lopcodes.o lparser.o lstate.o lstring.o ltable.o ltm.o lundump.o lvm.o lzio.o strbuf.o fpconv.o lauxlib.o lbaselib.o ldblib.o liolib.o lmathlib.o loslib.o ltablib.o lstrlib.o loadlib.o linit.o lua_cjson.o lua_struct.o lua_cmsgpack.o lua_bit.o # DLL needs all object files

ranlib liblua.a

no

checking for stdbool.h that conforms to C99... cc -o lua lua.o liblua.a -lm

yes

checking for _Bool... liblua.a(loslib.o): In function `os_tmpname‘:

loslib.c:(.text+0x35): warning: the use of `tmpnam‘ is dangerous, better use `mkstemp‘

cc -o luac luac.o print.o liblua.a -lm

make[4]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/lua/src‘

yes

configure: creating ./config.status

config.status: creating Makefile

config.status: creating doc/html.xsl

config.status: creating doc/manpages.xsl

config.status: creating doc/jemalloc.xml

config.status: creating include/jemalloc/jemalloc_macros.h

config.status: creating include/jemalloc/jemalloc_protos.h

config.status: creating include/jemalloc/internal/jemalloc_internal.h

config.status: creating test/test.sh

config.status: creating test/include/test/jemalloc_test.h

config.status: creating config.stamp

config.status: creating bin/jemalloc.sh

config.status: creating include/jemalloc/jemalloc_defs.h

config.status: creating include/jemalloc/internal/jemalloc_internal_defs.h

config.status: creating test/include/test/jemalloc_test_defs.h

config.status: executing include/jemalloc/internal/private_namespace.h commands

config.status: executing include/jemalloc/internal/private_unnamespace.h commands

config.status: executing include/jemalloc/internal/public_symbols.txt commands

config.status: executing include/jemalloc/internal/public_namespace.h commands

config.status: executing include/jemalloc/internal/public_unnamespace.h commands

config.status: executing include/jemalloc/internal/size_classes.h commands

config.status: executing include/jemalloc/jemalloc_protos_jet.h commands

config.status: executing include/jemalloc/jemalloc_rename.h commands

config.status: executing include/jemalloc/jemalloc_mangle.h commands

config.status: executing include/jemalloc/jemalloc_mangle_jet.h commands

config.status: executing include/jemalloc/jemalloc.h commands

===============================================================================

jemalloc version : 3.6.0-0-g46c0af68bd248b04df75e4f92d5fb804c3d75340

library revision : 1

CC : gcc

CPPFLAGS : -D_GNU_SOURCE -D_REENTRANT

CFLAGS : -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -fvisibility=hidden

LDFLAGS :

EXTRA_LDFLAGS :

LIBS : -lpthread

RPATH_EXTRA :

XSLTPROC : /usr/bin/xsltproc

XSLROOT : /usr/share/sgml/docbook/xsl-stylesheets

PREFIX : /usr/local

BINDIR : /usr/local/bin

INCLUDEDIR : /usr/local/include

LIBDIR : /usr/local/lib

DATADIR : /usr/local/share

MANDIR : /usr/local/share/man

srcroot :

abs_srcroot : /opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/jemalloc/

objroot :

abs_objroot : /opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/jemalloc/

JEMALLOC_PREFIX : je_

JEMALLOC_PRIVATE_NAMESPACE

: je_

install_suffix :

autogen : 0

experimental : 1

cc-silence : 1

debug : 0

code-coverage : 0

stats : 1

prof : 0

prof-libunwind : 0

prof-libgcc : 0

prof-gcc : 0

tcache : 1

fill : 1

utrace : 0

valgrind : 0

xmalloc : 0

mremap : 0

munmap : 0

dss : 0

lazy_lock : 0

tls : 1

===============================================================================

cd jemalloc && make CFLAGS="-std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops " LDFLAGS="" lib/libjemalloc.a

make[4]: Entering directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/jemalloc‘

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/jemalloc.o src/jemalloc.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/arena.o src/arena.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/atomic.o src/atomic.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/base.o src/base.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/bitmap.o src/bitmap.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/chunk.o src/chunk.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/chunk_dss.o src/chunk_dss.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/chunk_mmap.o src/chunk_mmap.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/ckh.o src/ckh.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/ctl.o src/ctl.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/extent.o src/extent.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/hash.o src/hash.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/huge.o src/huge.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/mb.o src/mb.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/mutex.o src/mutex.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/prof.o src/prof.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/quarantine.o src/quarantine.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/rtree.o src/rtree.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/stats.o src/stats.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/tcache.o src/tcache.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/util.o src/util.c

gcc -std=gnu99 -Wall -pipe -g3 -O3 -funroll-loops -c -D_GNU_SOURCE -D_REENTRANT -Iinclude -Iinclude -o src/tsd.o src/tsd.c

ar crus lib/libjemalloc.a src/jemalloc.o src/arena.o src/atomic.o src/base.o src/bitmap.o src/chunk.o src/chunk_dss.o src/chunk_mmap.o src/ckh.o src/ctl.o src/extent.o src/hash.o src/huge.o src/mb.o src/mutex.o src/prof.o src/quarantine.o src/rtree.o src/stats.o src/tcache.o src/util.o src/tsd.o

make[4]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps/jemalloc‘

make[3]: Leaving directory `/opt/gopath/src/github.com/CodisLabs/codis/extern/redis-2.8.21/deps‘

CC adlist.o

CC ae.o

CC anet.o

CC dict.o

anet.c: In function ‘anetSockName’:

anet.c:565: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:563: note: initialized from here

anet.c:569: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:567: note: initialized from here

anet.c: In function ‘anetPeerToString’:

anet.c:543: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:541: note: initialized from here

anet.c:547: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:545: note: initialized from here

anet.c: In function ‘anetTcpAccept’:

anet.c:511: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:509: note: initialized from here

anet.c:515: warning: dereferencing pointer ‘s’ does break strict-aliasing rules

anet.c:513: note: initialized from here

CC redis.o

CC sds.o

CC zmalloc.o

CC lzf_c.o

CC lzf_d.o

CC pqsort.o

CC zipmap.o

CC sha1.o

CC ziplist.o

CC release.o

CC networking.o

CC util.o

CC object.o

CC db.o

CC replication.o

CC rdb.o

CC t_string.o

db.c: In function ‘scanGenericCommand’:

db.c:454: warning: ‘pat’ may be used uninitialized in this function

db.c:455: warning: ‘patlen’ may be used uninitialized in this function

CC t_list.o

CC t_set.o

CC t_zset.o

CC t_hash.o

CC config.o

CC aof.o

CC pubsub.o

CC multi.o

CC debug.o

CC sort.o

CC intset.o

CC syncio.o

CC migrate.o

CC endianconv.o

CC slowlog.o

CC scripting.o

CC bio.o

CC rio.o

CC rand.o

CC memtest.o

CC crc64.o

CC crc32.o

CC bitops.o

CC sentinel.o

CC notify.o

CC setproctitle.o

CC hyperloglog.o

CC latency.o

CC sparkline.o

CC slots.o

CC redis-cli.o

CC redis-benchmark.o

CC redis-check-dump.o

CC redis-check-aof.o

LINK redis-benchmark

LINK redis-check-dump

LINK redis-check-aof

LINK redis-server

INSTALL redis-sentinel

LINK redis-cli

Hint: It's a good idea to run 'make test' ;)

The output of make is shown above.

[root@node5 codis]# make gotest

GOPATH=`godep path`:$GOPATH go test ./pkg/... ./cmd/...

ok github.com/CodisLabs/codis/pkg/models 17.324s

ok github.com/CodisLabs/codis/pkg/proxy 6.296s

ok github.com/CodisLabs/codis/pkg/proxy/redis 1.338s

ok github.com/CodisLabs/codis/pkg/proxy/router 0.654s

? github.com/CodisLabs/codis/pkg/utils [no test files]

? github.com/CodisLabs/codis/pkg/utils/assert [no test files]

? github.com/CodisLabs/codis/pkg/utils/atomic2 [no test files]

ok github.com/CodisLabs/codis/pkg/utils/bytesize 0.002s

? github.com/CodisLabs/codis/pkg/utils/errors [no test files]

? github.com/CodisLabs/codis/pkg/utils/log [no test files]

? github.com/CodisLabs/codis/pkg/utils/trace [no test files]

? github.com/CodisLabs/codis/cmd/cconfig [no test files]

? github.com/CodisLabs/codis/cmd/proxy [no test files]

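The output of make gotest is shown above.

After all the commands have run, three executables are generated in the bin directory: codis-config, codis-proxy and codis-server. In addition, the bin/assets directory holds the front-end resources needed by the dashboard HTTP service of codis-config, and it must sit in the same directory as codis-config.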

[root@node5 codis]# cd bin/

[root@node5 bin]# ls

assets codis-config codis-proxy codis-server

How to use the executables:

[root@node5 bin]# ./codis-config -h

usage: codis-config [-c <config_file>] [-L <log_file>] [--log-level=<loglevel>]

<command> [<args>...]

options:

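-c # path to the configuration file

-L # path to the log output file

--log-level=<loglevel> # log level (debug < info (default) < warn < error < fatal)

commands:

server # Redis server group management

slot # slot management

dashboard # start the dashboard service

action # event management (currently only deletes historical event logs)

proxy # proxy management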

[root@node5 bin]# ./codis-proxy -h

usage: codis-proxy [-c <config_file>] [-L <log_file>] [--log-level=<loglevel>] [--cpu=<cpu_num>] [--addr=<proxy_listen_addr>] [--http-addr=<debug_http_server_addr>]

options:

-c # path to the configuration file

-L # path to the log output file

--log-level=<loglevel> # log level (debug < info (default) < warn < error < fatal)

--cpu=<cpu_num> # number of CPU cores the proxy uses; default 1; ideally about one half to two thirds of the machine's physical cores

--addr=<proxy_listen_addr> # listen address of the proxy's Redis-protocol server, in the form <ip or hostname>:<port>, e.g. localhost:9000 or :9001

--http-addr=<debug_http_server_addr> # HTTP server that exposes the proxy's debug information; browse http://debug_http_server_addr/debug/vars

[root@node5 bin]# ./codis-server -h

Usage: ./redis-server [/path/to/redis.conf] [options] # start with the given Redis configuration file

./redis-server - (read config from stdin)

./redis-server -v or --version # show the version

./redis-server -h or --help # show help

./redis-server --test-memory <megabytes> # memory test; the size is given in megabytes

Examples:

./redis-server (run the server with default conf)

./redis-server /etc/redis/6379.conf

./redis-server --port 7777

./redis-server --port 7777 --slaveof 127.0.0.1 8888

./redis-server /etc/myredis.conf --loglevel verbose

Start the deployment:

[root@node5 bin]# cd ..

[root@node5 codis]# vim config.ini

zk=192.168.10.205:2181

dashboard_addr=192.168.10.205:18087

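Configure and start the dashboard:

[root@node5 codis]# cp config.ini bin/ # copy the edited file into bin, because the commands there read config.ini from the current directory by default; alternatively pass its path with -c

[root@node5 codis]# cd bin

[root@node5 bin]# ./codis-config dashboard & # this command starts the dashboard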

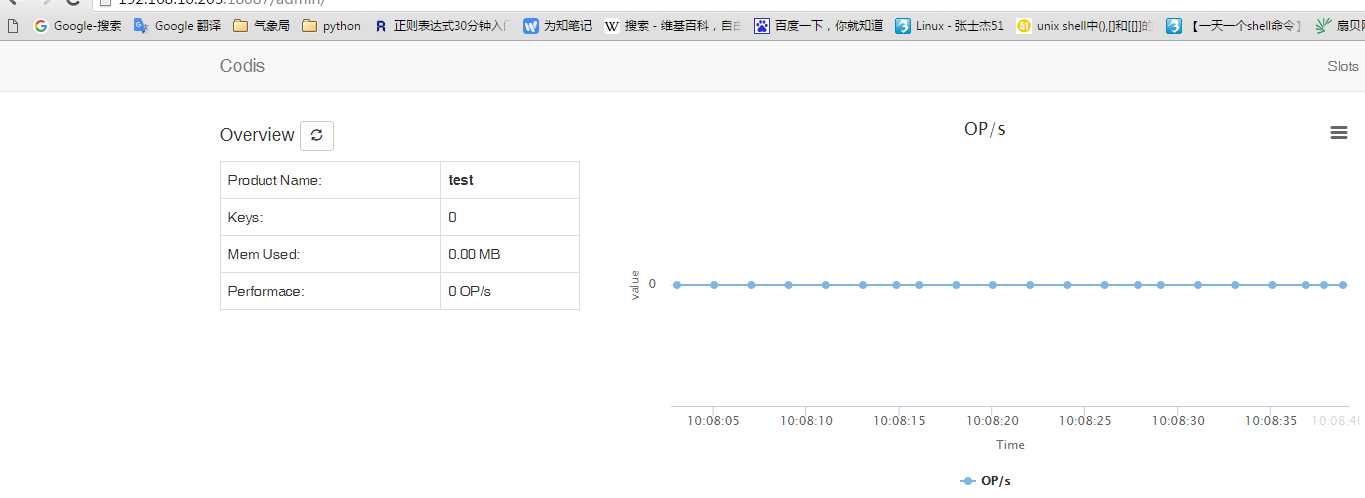

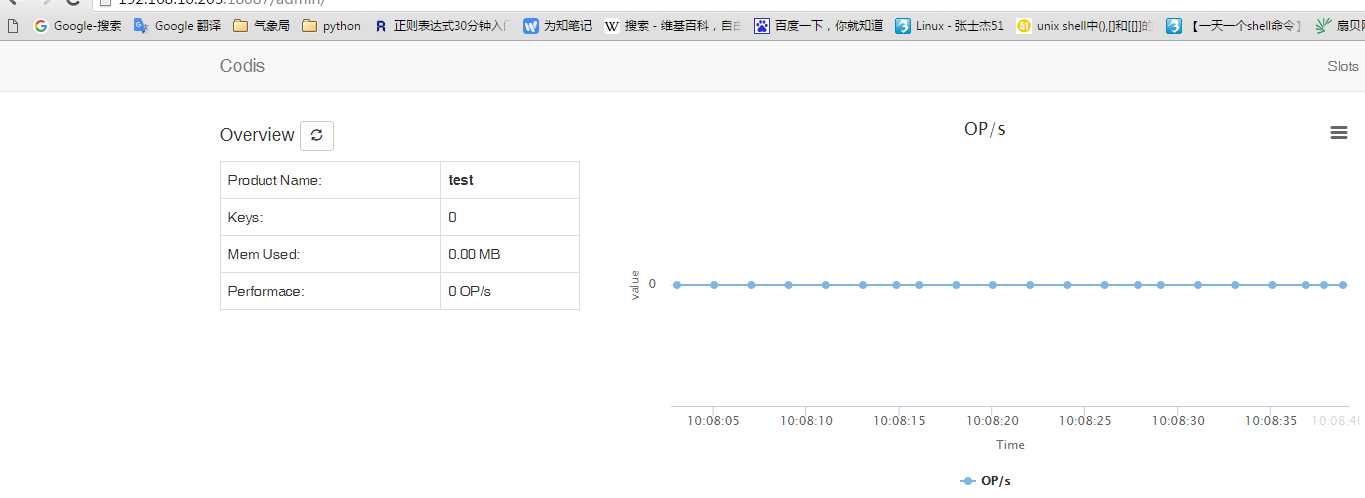

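Once it has started, the dashboard can be reached at http://ServerIP:18087.

Initialize the slots:

Run ./codis-config slot init. This command creates the slot-related data in ZooKeeper; if it reports that the slots are already initialized, add -f to force the initialization.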

[root@node5 bin]# ./codis-config slot init -f

{

"msg": "OK",

"ret": 0

}

Start the Codis Redis instance:

Skip this step if Redis is already running; otherwise run:

[root@node5 bin]# /etc/init.d/redisd start

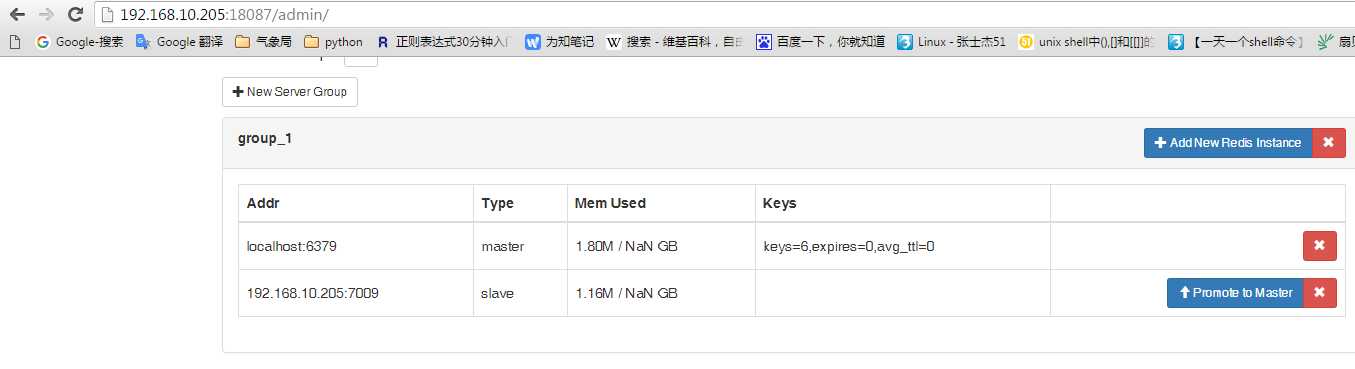

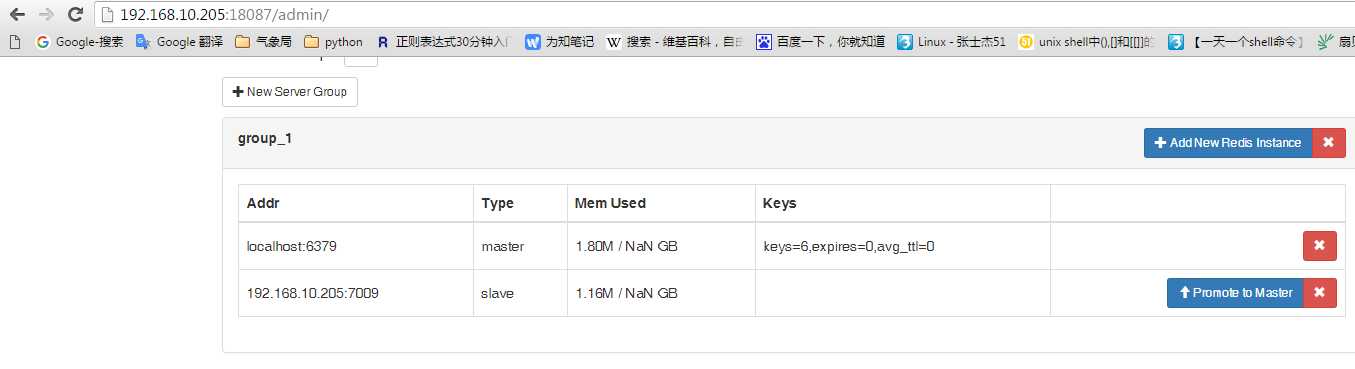

Add a Redis server group:

Each server group acts as one set of Redis servers. A group may have only one master and any number of slaves; group ids must be integers greater than or equal to 1.

Add a master:

[root@node5 bin]# ./codis-config server add 1 localhost:6379 master

{

"msg": "OK",

"ret": 0

}

Add a slave to the master (in this run the call fails, because the target instance on port 7009 is running in cluster mode, which rejects SLAVEOF):

[root@node5 bin]# ./codis-config server add 1 192.168.10.205:7009 slave

2016/03/22 03:37:27 main.go:154: [PANIC] run sub-command failed

[error]: http status code 500, ERR SLAVEOF not allowed in cluster mode.

4 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/utils.go:66

main.callApi

3 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/server_group.go:94

main.runAddServerToGroup

2 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/server_group.go:54

main.cmdServer

1 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/main.go:87

main.runCommand

0 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/main.go:152

main.main

... ...

[stack]:

0 /opt/gopath/src/github.com/CodisLabs/codis/cmd/cconfig/main.go:154

main.main

... ...

List the server groups:

[root@node5 bin]# ./codis-config server list

[

{

"id": 1,

"product_name": "test",

"servers": [

{

"addr": "localhost:6379",

"group_id": 1,

"type": "master"

},

{

"addr": "192.168.10.205:7009",

"group_id": 1,

"type": "slave"

}

]

}

]
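The rules stated for server groups (at most one master per group, group ids of 1 or greater) can be checked mechanically against this JSON. The following is a minimal sketch; `check_groups` is a hypothetical helper, and the JSON text is the listing above pasted into a string rather than fetched from codis-config.

```python
import json

# The output of "codis-config server list" shown above, pasted as a string.
SERVER_LIST_JSON = """
[
  {
    "id": 1,
    "product_name": "test",
    "servers": [
      {"addr": "localhost:6379", "group_id": 1, "type": "master"},
      {"addr": "192.168.10.205:7009", "group_id": 1, "type": "slave"}
    ]
  }
]
"""


def check_groups(groups):
    """Return a list of problems; an empty list means no rule is violated."""
    problems = []
    for group in groups:
        gid = group["id"]
        if gid < 1:
            problems.append("group id %d must be >= 1" % gid)
        masters = [s["addr"] for s in group["servers"] if s["type"] == "master"]
        if len(masters) > 1:
            problems.append("group %d has %d masters" % (gid, len(masters)))
    return problems


groups = json.loads(SERVER_LIST_JSON)
print(check_groups(groups))
```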

Verify in the browser:

Set the slot range served by each server group:

Codis uses pre-sharding to partition data. By default there are 1024 slots (0-1023), and the slot of each key is determined by the formula SlotId = crc32(key) % 1024. Every slot must be assigned exactly one server group id, which identifies the server group that serves that slot's data.

For example:

Slots [0, 511] are served by server group 1 and slots [512, 1023] by server group 2:

[root@node5 bin]# ./codis-config slot range-set 0 511 1 online

{

"msg": "OK",

"ret": 0

}

[root@node5 bin]# ./codis-config slot range-set 512 1023 2 online

{

"msg": "OK",

"ret": 0

}
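To make the slot formula concrete, here is a minimal sketch of the key-to-slot-to-group mapping. It assumes that the crc32 in the formula is the standard CRC-32 computed by Python's `zlib.crc32`, and it ignores any special key handling Codis may apply; `slot_for_key`, `group_for_slot` and the example keys are hypothetical.

```python
import zlib

NUM_SLOTS = 1024

# Slot ranges as assigned by the two range-set commands above:
# (first_slot, last_slot, group_id)
SLOT_RANGES = [(0, 511, 1), (512, 1023, 2)]


def slot_for_key(key):
    """Map a key to a slot id using crc32(key) % 1024."""
    if isinstance(key, str):
        key = key.encode("utf-8")
    # Mask to an unsigned 32-bit value so the result is the same on all Pythons.
    return (zlib.crc32(key) & 0xFFFFFFFF) % NUM_SLOTS


def group_for_slot(slot):
    """Look up which server group serves the given slot."""
    for first, last, group_id in SLOT_RANGES:
        if first <= slot <= last:
            return group_id
    raise ValueError("slot %d is not assigned to any group" % slot)


for key in ("foo", "user:1000", "cart:42"):
    slot = slot_for_key(key)
    print(key, slot, group_for_slot(slot))
```

Because the slot depends only on the key, moving a slot to another group changes where its keys live without changing how clients compute the slot.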

Start codis-proxy:

[root@node5 bin]# ./codis-proxy -c config.ini -L ./log/proxy.log --cpu=8 --addr=0.0.0.0:19000 --http-addr=0.0.0.0:11000

_____ ____ ____/ / (_) _____

/ ___/ / __ \ / __ / / / / ___/

/ /__ / /_/ / / /_/ / / / (__ )

\___/ \____/ \__,_/ /_/ /____/

A freshly started codis-proxy is offline by default. Set the proxy to online next; only a proxy in the online state serves clients.

[root@node5 bin]# ./codis-config -c config.ini proxy online proxy_1

{

"msg": "OK",

"ret": 0

}

Note: the command format is ./codis-config -c config.ini proxy online <proxy_name>, where <proxy_name> is the proxy id, such as proxy_1, which can be seen in the browser.

########################################################################################## Operating Redis from Python

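Redis compared with other databases:

Redis and memcache are key-value, non-relational databases, whereas MySQL is a relational database with strict requirements on table structure and stored content, which makes it a poor fit for temporary or irregular data. Redis supports more data types than memcache, which stores only plain key-value data.

Redis is a key-value store. It is similar to Memcached but supports more value types: string, list, set, zset (sorted set) and hash. These types support push/pop, add/remove, intersection, union, difference and richer operations, and each of these operations is atomic (it either completes entirely or does not happen at all). On top of this, Redis supports sorting in several ways. Like memcached, it keeps data in memory for efficiency. The difference is that Redis periodically writes updated data to disk, or appends each modifying operation to a log file, and on that basis implements master-slave replication and cluster mode.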

Download and install:

[root@localhost ~]# wget http://download.redis.io/releases/redis-3.0.7.tar.gz

[root@localhost ~]# tar xvf redis-3.0.7.tar.gz

[root@localhost ~]# mv redis-3.0.7 /usr/local/redis

[root@localhost ~]# cd /usr/local/redis

[root@localhost redis]# make

[root@localhost redis]# vim redis.conf # edit the configuration

[root@localhost redis]# grep -v "#" redis.conf | grep -v "^$" # a simple configuration looks like this:

daemonize yes

pidfile /var/run/redis.pid

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 0

loglevel notice

logfile ""

databases 16

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename 10.16.59.103.rdb

dir ./

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-entries 512

list-max-ziplist-value 64

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

redis.conf

Init script:

[root@localhost redis]# cp redis.conf /etc/redis.conf # copy the configuration file

[root@localhost redis]# cp utils/redis_init_script /etc/init.d/redis

[root@localhost redis]# vim /etc/init.d/redis

#!/bin/sh

#

# Simple Redis init.d script conceived to work on Linux systems

# as it does use of the /proc filesystem.

# chkconfig: - 30 69

# description: Redis start script

REDISPORT=6379

EXEC=/usr/local/redis/src/redis-server

CLIEXEC=/usr/local/redis/src/redis-cli

PIDFILE=/var/run/redis.pid # must match the pidfile setting in /etc/redis.conf, otherwise stop cannot find the process

CONF="/etc/redis.conf"

case "$1" in

start)

if [ -f $PIDFILE ]

then

echo "$PIDFILE exists, process is already running or crashed"

else

echo "Starting Redis server..."

$EXEC $CONF

fi

;;

stop)

if [ ! -f $PIDFILE ]

then

echo "$PIDFILE does not exist, process is not running"

else

PID=$(cat $PIDFILE)

echo "Stopping ..."

$CLIEXEC -p $REDISPORT shutdown

while [ -x /proc/${PID} ]

do

echo "Waiting for Redis to shutdown ..."

sleep 1

done

echo "Redis stopped"

fi

;;

*)

echo "Please use start or stop as first argument"

;;

esac

Start the service with the init script:

[root@localhost redis]# /etc/init.d/redis start

Confirm that the server started successfully:

[root@localhost redis]# ps -ef | grep redis

root 40500 1 0 16:07 ? 00:00:00 /usr/local/redis/src/redis-server *:6379

root 40663 37362 0 16:21 pts/1 00:00:00 grep redis

[root@localhost redis]# ss -tnlp | grep redis

LISTEN 0 128 *:6379 *:* users:(("redis-server",40500,5))

LISTEN 0 128 :::6379 :::* users:(("redis-server",40500,4))

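Install the Python client:

Using the API

The redis-py API can be grouped as follows:

- Connection methods

- Connection pool

- Operations

- String operations

- Hash operations

- List operations

- Set operations

- Sorted set operations

- Pipelines

- Publish/subscribe

1. Operation mode

redis-py provides two classes, Redis and StrictRedis, that implement the Redis commands. StrictRedis implements most of the official commands using the official syntax and naming; Redis is a subclass of StrictRedis kept for backward compatibility with older versions of redis-py. (This describes redis-py 2.x; from redis-py 3.0 onward StrictRedis is an alias of Redis.)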

#!/usr/bin/env python

# -*- coding:utf-8 -*-

import redis

r = redis.Redis(host='10.16.59.103', port=6379)

r.set('foo', 'Bar')

print(r.get('foo'))

Output:

b'Bar'
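Under Python 3 the value is printed with a `b` prefix because the reply comes back as bytes rather than str. A minimal sketch of converting it follows; it uses a literal in place of the real `r.get('foo')` call so that it runs without a Redis server, and `to_text` is a hypothetical helper name.

```python
# Stand-in for the value returned by r.get('foo'); no Redis server is needed here.
raw = b"Bar"


def to_text(value, encoding="utf-8"):
    """Decode a bytes reply to str; pass None (missing key) and str through."""
    if isinstance(value, bytes):
        return value.decode(encoding)
    return value


print(to_text(raw))   # the decoded string
print(to_text(None))  # a missing key stays None
```

As an alternative, redis-py accepts `decode_responses=True` when the client is created, in which case replies should already arrive as str.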