
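On the data-source side, Logstash collects syslog on UDP 514 and ships it to Kafka for this test. (Filebeat is an alternative: it is lightweight, puts little load on the CPU and uses few resources. rsyslog can also be used; its Kafka support is available from v8.7.0 onward.) The pipeline is: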

logstash(udp 514) => kafka(zookeeper) cluster => logstash(grok) => elasticsearch cluster => kibana

Because Logstash (grok) runs a regex match on every log line and is therefore resource-hungry, a Kafka (ZooKeeper) cluster sits in the middle as a message queue.
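Kafka also lets several grok Logstash instances share the work: consumers that use the same group_id split the topic's partitions between them, so each message is processed by only one of them. The Python sketch below is a simplification for illustration (plain round-robin, not Kafka's actual partition assignor); the partition count and consumer names are hypothetical.

```python
from typing import Dict, List


def assign_round_robin(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Simplified sketch, NOT Kafka's real assignor: every partition of one topic
    goes to exactly one consumer of the group, cycling through the consumers."""
    if not consumers:
        raise ValueError("a consumer group needs at least one consumer")
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


if __name__ == "__main__":
    # Hypothetical: SyslogTopic has 6 partitions and two grok Logstash instances
    # in group "logstash_kafka" each run consumer_threads => 3 (6 consumers).
    consumers = [f"logstash0{n}-thread{t}" for n in range(2) for t in range(3)]
    for consumer, parts in assign_round_robin(list(range(6)), consumers).items():
        print(consumer, parts)
```

Since a partition is never shared inside one group, consumers beyond the partition count receive nothing; the total consumer_threads across all grok instances is best kept at or below the topic's partition count.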

1) Install the JDK

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

2) Install Logstash with yum

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

cat >> /etc/yum.repos.d/logstash-6.x.repo << 'EOF'

[logstash-6.x]

name=Elastic repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

yum install -y logstash

3) Configure

Adjust the JVM heap size

vim /etc/logstash/jvm.options

-Xms2g

-Xmx2g

cp /etc/logstash/logstash-sample.conf /etc/logstash/conf.d/logstash514.conf

vim /etc/logstash/conf.d/logstash514.conf

#############################

input {

syslog {

type => "syslog"

port => "514"

}

}

output {

kafka {

codec => "json" # required; otherwise the output lacks host and other fields

bootstrap_servers => "192.168.89.11:9092,192.168.89.12:9092,192.168.89.13:9092"

topic_id => "SyslogTopic"

}

}

#############################
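Why codec => "json" matters: it serializes the whole event, so fields such as host survive the trip through Kafka and can be restored by the kafka input (also with codec => "json") on the grok side. A minimal Python sketch of that round trip; the event fields and values are hypothetical, and encode_message_only is a simplified stand-in for sending only the log text, not a model of Logstash's plain codec.

```python
import json
from typing import Any, Dict


def encode_json(event: Dict[str, Any]) -> bytes:
    """Roughly what codec => "json" sends to Kafka: the whole event as one JSON document."""
    return json.dumps(event).encode("utf-8")


def encode_message_only(event: Dict[str, Any]) -> bytes:
    """Hypothetical text-only encoding for comparison: just the log line, no other fields."""
    return str(event["message"]).encode("utf-8")


def decode_json(payload: bytes) -> Dict[str, Any]:
    """Roughly what the kafka input with codec => "json" rebuilds: the original fields."""
    return json.loads(payload.decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical event; real syslog events carry more fields.
    event = {
        "@timestamp": "2019-01-31T08:00:00.000Z",
        "host": "192.168.89.100",
        "type": "syslog",
        "message": "test message",
    }
    print(decode_json(encode_json(event))["host"])  # host survives the round trip
    print(encode_message_only(event))               # only the text is left
```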

4) Test the configuration

/usr/share/logstash/bin/logstash --path.settings /etc/logstash/ -t

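-t: test the configuration and exit

--path.settings: when running Logstash by hand like this, you must point it at the settings directory, otherwise it cannot find its configuration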

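5) Start Logstash and enable it at boot (do this only after ZooKeeper and Kafka are set up)

Logstash fails to start when it runs as the logstash user and listens on a port below 1024, so it must run as root:

vim /etc/systemd/system/logstash.service # change the following two settings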

##################

User=root

Group=root

##################

Start the service

systemctl daemon-reload

systemctl start logstash

systemctl enable logstash

6) Generate test logs with logger

Example: logger -n <server IP> "log message"
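If logger is not at hand, a syslog line can also be sent from Python. A minimal sketch, assuming the RFC 3164 text format with facility user and severity notice (logger's default priority, which gives the prefix <13>); the function names and the tag "test" are hypothetical.

```python
import socket
import time

USER = 1    # syslog facility "user"
NOTICE = 5  # syslog severity "notice"


def build_syslog_message(text: str, hostname: str, tag: str = "test",
                         facility: int = USER, severity: int = NOTICE) -> bytes:
    """Build an RFC 3164-style line: <PRI>TIMESTAMP HOSTNAME TAG: MSG."""
    pri = facility * 8 + severity  # user.notice -> 13
    now = time.localtime()
    # RFC 3164 timestamp "Mmm dd hh:mm:ss"; a one-digit day is padded with a space
    timestamp = f"{time.strftime('%b', now)} {now.tm_mday:2d} {time.strftime('%H:%M:%S', now)}"
    return f"<{pri}>{timestamp} {hostname} {tag}: {text}".encode("utf-8")


def send_syslog_udp(server: str, text: str, port: int = 514) -> None:
    """Send one syslog datagram over UDP to server:port."""
    message = build_syslog_message(text, socket.gethostname())
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (server, port))
```

For example, send_syslog_udp("<server IP>", "log message") plays the same role as the logger command above.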

See https://www.cnblogs.com/longBlogs/p/10340251.html

To write to a MySQL database, install the logstash-output-jdbc plugin (see https://www.cnblogs.com/longBlogs/p/10340252.html).

For the installation steps, follow "Data-source configuration" > "Install Logstash (udp 514)".

1) Set up the pattern directory and custom patterns

mkdir -p /data/logstash/patterns # create a directory for extra patterns

Define a custom pattern

vim /data/logstash/patterns/logstash_grok

============================================

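#num formats

# NUM: a number that starts with 1 and has at least 10 digits
# (keep comments on their own line; text after the regex becomes part of the pattern)

NUM [1][0-9]{9,}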

=================================================
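Before wiring the pattern into grok, its regex can be checked in Python. A rough sketch, assuming Python's re behaves like grok's regex engine for this simple expression; extract_num is a hypothetical helper that mimics the grok match ".*?%{NUM:num}.*?" used in the filter section, with a named group standing in for the num field.

```python
import re
from typing import Optional

# Regex of the custom pattern: a "1" followed by at least 9 more digits.
NUM_REGEX = r"[1][0-9]{9,}"

# Rough Python stand-in for the grok match ".*?%{NUM:num}.*?":
# the named group plays the role of grok's ":num" field name.
GROK_LIKE = re.compile(r".*?(?P<num>" + NUM_REGEX + r").*?")


def extract_num(message: str) -> Optional[str]:
    """Return the first match, or None (where grok would add _grokparsefailure)."""
    match = GROK_LIKE.search(message)
    return match.group("num") if match else None


if __name__ == "__main__":
    print(extract_num("user login, phone 13800138000"))
```

Because the match is not anchored, a longer digit run such as 91234567890 yields 1234567890; if only whole numbers are wanted, wrapping the pattern in \b is one option.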

2) Configure Logstash (grok):

Adjust the JVM heap size

vim /etc/logstash/jvm.options

-Xms2g

-Xmx2g

Create the pipeline configuration file

cp /etc/logstash/logstash-sample.conf /etc/logstash/conf.d/logstash_syslog.conf

vim /etc/logstash/conf.d/logstash_syslog.conf

#############################

input {

kafka {

bootstrap_servers => "192.168.89.11:9092,192.168.89.12:9092,192.168.89.13:9092"

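topics => ["SyslogTopic"]

codec => "json" # required; otherwise the events lack host and other fields

group_id => "logstash_kafka" # all consuming Logstash instances must share the same group_id, otherwise messages are consumed more than once

client_id => "logstash00" # client_id must be unique

consumer_threads => 3 # number of consumer threads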

}

}

filter {

grok {

patterns_dir => ["/data/logstash/patterns/"]

match => {

"message" => ".*?%{NUM:num}.*?"

}

}

}

# output to Elasticsearch

# MySQL output is not used here, so the jdbc output is commented out

output {

elasticsearch {

hosts => ["192.168.89.20:9200","192.168.89.21:9200"] # for a cluster, list several nodes

index => "log-%{+YYYY.MM.dd}" # one index per day; index names must be lowercase

}

#jdbc {

#driver_jar_path => "/etc/logstash/jdbc/mysql-connector-java-5.1.47/mysql-connector-java-5.1.47-bin.jar"

#driver_class => "com.mysql.jdbc.Driver"

#connection_string => "jdbc:mysql://<mysql-server-ip>:<port>/<database>?user=<db-user>&password=<db-password>"

#statement => [ "insert into <table> (TIME, IP, MESSAGES) values (?, ?, ?)", "%{@timestamp}", "%{host}", "%{message}" ]

#}

}

################################################
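The index setting log-%{+YYYY.MM.dd} creates one index per day. Logstash formats this date from the event's @timestamp, which is in UTC, so on servers running at UTC+8 events logged before 08:00 local time land in the previous day's index. A small Python sketch of that naming rule; daily_index is a hypothetical helper, and its lowercase check mirrors Elasticsearch's requirement that index names be lowercase.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, ts: datetime) -> str:
    """Sketch of index => "log-%{+YYYY.MM.dd}": the date comes from the event
    timestamp converted to UTC (ts must be timezone-aware)."""
    if prefix != prefix.lower():
        raise ValueError("Elasticsearch index names must be lowercase")
    if ts.tzinfo is None:
        raise ValueError("ts must be timezone-aware")
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


if __name__ == "__main__":
    utc8 = timezone(timedelta(hours=8))  # assumed server time zone (UTC+8)
    print(daily_index("log", datetime(2019, 2, 1, 7, 0, tzinfo=utc8)))  # log-2019.01.31
```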

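3) Start Logstash and enable it at boot (do this only after Elasticsearch is set up)

If this Logstash listens on a port below 1024, starting it as the logstash user fails, so it must run as root:

vim /etc/systemd/system/logstash.service # change the following two settings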

##################

User=root

Group=root

##################

Start the service

systemctl daemon-reload

systemctl start logstash

systemctl enable logstash

1) Install the Java environment

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

2) Set up the yum repository

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

cat >> /etc/yum.repos.d/elasticsearch-6.x.repo << 'EOF'

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

3) Install

yum install -y elasticsearch

4) Configure

vim /etc/elasticsearch/elasticsearch.yml

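cluster.name: syslog_elasticsearch # cluster name; must be the same on every node of the cluster

node.name: es_89.20 # node name; must be different on each host in the cluster

path.data: /data/elasticsearch # data directory

path.logs: /data/elasticsearch/log # log directory

network.host: 0.0.0.0 # bind address

discovery.zen.ping.unicast.hosts: ["192.168.89.20", "192.168.89.21"] # cluster members; if node.master is left at its default, every node is master-eligible and the master is elected among them

#discovery.zen.minimum_master_nodes: 1 # defaults to 1. It sets the minimum number of master-eligible nodes a node must see before it can operate in the cluster. The official recommendation is (N/2)+1, where N is the number of master-eligible nodes (with 3 such nodes, set it to 2). With only 2 nodes, a value of 2 is a problem: once one node goes down, the survivor can no longer see 2 master-eligible nodes.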
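The (N/2)+1 rule in the comment above uses integer division. A small Python sketch of the arithmetic; the function names are hypothetical. It shows the two-node trade-off: the quorum is 2, so losing either node leaves the survivor below quorum, while a value of 1 lets each node carry on alone if the two lose contact, which is the split-brain case this setting exists to prevent.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Recommended discovery.zen.minimum_master_nodes: (N / 2) + 1 with integer division."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def can_operate(visible_master_eligible: int, master_eligible: int) -> bool:
    """A node only operates in the cluster if it sees at least the quorum of master-eligible nodes."""
    return visible_master_eligible >= minimum_master_nodes(master_eligible)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(n, "master-eligible nodes ->", minimum_master_nodes(n))
    # Two-node cluster with the quorum of 2: after one node fails, the survivor sees only itself.
    print(can_operate(1, 2))  # False
```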

If you changed the data, log or other directories in elasticsearch.yml, update them here as well:

vim /usr/lib/systemd/system/elasticsearch.service

LimitMEMLOCK=infinity

systemctl daemon-reload

5) Create the directories and make a non-root account their owner and group, otherwise startup fails with the error below

mkdir -p /data/elasticsearch/log

Error message:

main ERROR Unable to create file /data/log/elasticsearch/syslog_elasticsearch.log java.io.IOException: Permission denied

Change the owner of the Elasticsearch data directory and all its subdirectories to a non-root account:

chown -R elasticsearch:elasticsearch /data/elasticsearch/

6) Start

systemctl restart elasticsearch

systemctl enable elasticsearch

7) Test

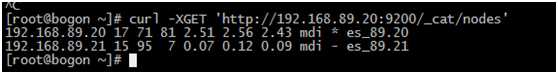

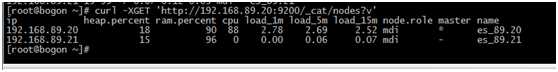

curl -XGET 'http://192.168.89.20:9200/_cat/nodes'

Append ?v for verbose output (with column headers):

curl -XGET 'http://192.168.89.20:9200/_cat/nodes?v'

curl -XGET 'http://192.168.89.20:9200/_cluster/state/nodes?pretty'

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

cat >> /etc/yum.repos.d/kibana-6.x.repo << 'EOF'

[kibana-6.x]

name=Kibana repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

yum install -y kibana

Configuration file

cat /etc/kibana/kibana.yml |egrep -v "^#|^$"

###############################################

server.port: 5601 # keep port 5601; nginx forwards port 80 to it

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.89.20:9200"

###############################################

systemctl start kibana

systemctl enable kibana

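Access it in a browser

Chinese localization

Stop the service first

systemctl stop kibana

Apply the localization

A Chinese localization project is available on GitHub: https://github.com/anbai-inc/Kibana_Hanization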

yum install unzip

Unzip the package in the Kibana installation directory:

unzip Kibana_Hanization-master.zip

cd Kibana_Hanization-master

python main.py <kibana-install-dir> (python main.py / also works)

Start the service

systemctl start kibana

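nginx is used mainly to add a login password, because Kibana has no login of its own unless password authentication is enabled in Elasticsearch.

1) Install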

rpm -ivh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

yum install -y nginx

Install the Apache password generation tool (htpasswd):

yum install httpd-tools

2) Configure

Generate the password file:

mkdir -p /etc/nginx/passwd

htpasswd -c -b /etc/nginx/passwd/kibana.passwd admin admin

cp /etc/nginx/conf.d/default.conf /etc/nginx/conf.d/default.conf.backup

vim /etc/nginx/conf.d/default.conf

####################################

server {

listen 192.168.89.15:80;

server_name localhost;

auth_basic "Kibana Auth";

auth_basic_user_file /etc/nginx/passwd/kibana.passwd;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

proxy_pass http://127.0.0.1:5601;

proxy_redirect off;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

##################################
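With auth_basic, the client sends the user name and password in an Authorization header, base64-encoded rather than encrypted. A small Python sketch of that header for the admin/admin account created with htpasswd above; basic_auth_header is a hypothetical helper. The same login can be tested from the shell with curl -u admin:admin http://192.168.89.15/.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Authorization header value a client sends to an auth_basic location."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    print(basic_auth_header("admin", "admin"))  # Basic YWRtaW46YWRtaW4=
```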

Edit the Kibana configuration file so that Kibana listens only on localhost and is reached through nginx:

vim /etc/kibana/kibana.yml

server.host: "localhost"

Restart the services

systemctl restart kibana

systemctl restart nginx

systemctl enable nginx

Access

http://192.168.89.15:80


Original article: https://www.cnblogs.com/longBlogs/p/10340233.html