
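Continuing from the previous post, this time I fit a multiple regression: a target that depends linearly on five input variables, modeled with a stacked LSTM network in Keras.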

Here is the code.

```python
import numpy as np
np.random.seed(1337)  # fix the random seed for reproducibility
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt
import keras
from keras.models import Sequential
from keras.layers import Activation
from keras.layers import LSTM
from keras.layers import Dropout
from keras.layers import Dense
import pandas as pd

datan = 1000  # number of samples
# true parameters
ori_weights = [5, -4, 3, -2, 1]
colsn = len(ori_weights)  # number of features
bias = -1
ori = np.zeros((1, colsn))
ori[0] = np.asarray(ori_weights)

# column names: A, B, C, D, E
cols_name = [chr(65 + i) for i in range(colsn)]

# each feature is drawn from a normal distribution with mean 1 and std 0.1
X = np.zeros((colsn, datan))
for i in range(colsn):
    X[i] = np.random.normal(1, 0.1, datan)

# true Y: linear combination + bias + Gaussian noise (mean -0.1, std 0.1)
Y = np.matmul(ori, X) + bias + np.random.normal(-0.1, 0.1, (datan, ))
```

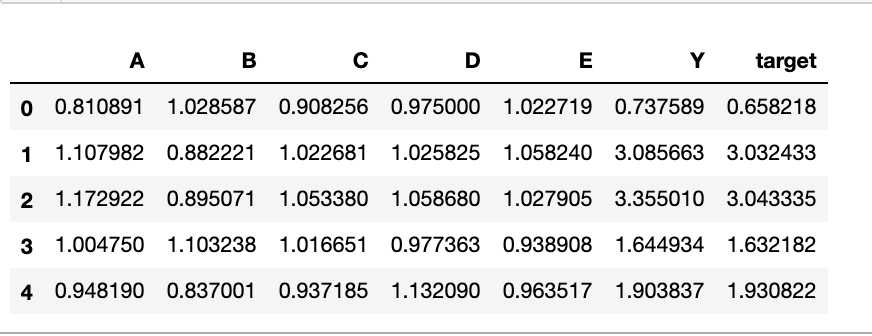
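Written out, the data-generating model above is the following. This restates the code: `np.random.normal(loc, scale, size)` takes the standard deviation, not the variance, and the features and noise are drawn independently.

$$
\begin{aligned}
y &= \sum_{i=1}^{5} w_i x_i + b + \varepsilon, \qquad w = (5, -4, 3, -2, 1),\; b = -1,\\
x_i &\sim \mathcal{N}(1,\, 0.1^2), \qquad \varepsilon \sim \mathcal{N}(-0.1,\, 0.1^2),\\
\mathbb{E}[y] &= \textstyle\sum_i w_i \cdot 1 + b - 0.1 = 3 - 1 - 0.1 = 1.9,\\
\operatorname{Var}(y) &= \textstyle\sum_i w_i^2 \cdot 0.1^2 + 0.1^2 = 55 \cdot 0.01 + 0.01 = 0.56.
\end{aligned}
$$

A model that always predicts the mean therefore has an MSE of about 0.56. A perfect model still has an MSE of about 0.01, which is the noise variance. These two numbers bracket the `train cost` and `test cost` values printed below.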

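```python
# data preview
df = pd.DataFrame(X.T, columns=cols_name)
# noise-free value of the linear function for each row (df.columns is still A..E here)
df['Y'] = df.apply(lambda row: np.matmul(ori, [row[k] for k in df.columns])[0] + bias, axis=1)
# noisy target that is actually used for training
df['target'] = Y[0]
df.head()
```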
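The row-wise `df.apply` call computes $\sum_i w_i x_i + b$ one row at a time. Because the samples are stored column-wise in `X` (shape `(colsn, datan)`), a single matrix product gives the same noise-free values for all samples. Here is a small self-contained check on its own random data rather than the post's. It only uses NumPy, so nothing here is assumed about pandas internals.

```python
import numpy as np

rng = np.random.default_rng(0)
ori = np.array([[5.0, -4.0, 3.0, -2.0, 1.0]])   # shape (1, colsn), as in the post
bias = -1.0
X = rng.normal(1, 0.1, size=(5, 8))              # shape (colsn, datan) with datan = 8

# Row by row, mirroring the lambda passed to df.apply
row_wise = np.array([np.matmul(ori, X[:, j])[0] + bias for j in range(X.shape[1])])

# Vectorized: one matrix product for all samples at once
vectorized = (ori @ X + bias)[0]

print(np.allclose(row_wise, vectorized))  # True
```

In the post this would become `df['Y'] = (np.matmul(ori, X) + bias)[0]`.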

```python
# X.T: (datan, colsn) samples x features; Y.T: (datan, 1)
X_train, X_test, Y_train, Y_test = train_test_split(X.T, Y.T, test_size=0.33, random_state=42)
```
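Keras LSTM layers expect 3-D input of shape `(samples, timesteps, features)`. `train_test_split` receives `X.T` with shape `(1000, 5)`. With `test_size=0.33` it keeps 670 rows for training and 330 for testing. `X_train[:, np.newaxis]` then inserts a length-1 time axis. The sketch below traces those shapes with NumPy only; zeros stand in for the real data, and the slice is not shuffled the way `train_test_split` shuffles.

```python
import numpy as np

datan, colsn = 1000, 5
X_T = np.zeros((datan, colsn))         # what train_test_split receives: (samples, features)

n_test = int(np.ceil(0.33 * datan))    # a float test_size is rounded up to whole samples
n_train = datan - n_test
X_train = X_T[:n_train]

X_lstm = X_train[:, np.newaxis]        # (samples, timesteps=1, features)
print(n_train, n_test)                 # 670 330
print(X_lstm.shape)                    # (670, 1, 5)
```

Each sample is therefore a sequence of length one, which matches `input_shape=(1, colsn)` in the first LSTM layer.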

```python
neurons = 128
activation_function = 'tanh'
loss = 'mse'
optimizer = "adam"
dropout = 0.01
batch_size = 12
epochs = 200

model = Sequential()
# input shape (timesteps=1, features=colsn): each sample is a length-1 sequence
model.add(LSTM(neurons, return_sequences=True, input_shape=(1, colsn), activation=activation_function))
model.add(Dropout(dropout))
model.add(LSTM(neurons, return_sequences=True, activation=activation_function))
model.add(Dropout(dropout))
model.add(LSTM(neurons, activation=activation_function))
model.add(Dropout(dropout))
# one linear output unit; `output_dim` was the Keras 1 name for what Keras 2 calls `units`,
# and `input_dim` is not needed after the first layer
model.add(Dense(1))

model.compile(loss=loss, optimizer=optimizer)
```
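For a sense of scale, here is the parameter count. A Keras `LSTM` layer with $u$ units and $d$ input features has four gates. Each gate has an input kernel, a recurrent kernel and a bias, giving $4\,(u\,(d + u) + u)$ parameters. The calculation below assumes the default `use_bias=True`; the totals should agree with what `model.summary()` reports. Dropout layers add no parameters.

```python
def lstm_params(units, input_dim):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    # kernel + bias
    return units * input_dim + units

neurons, colsn = 128, 5
layers = [
    lstm_params(neurons, colsn),    # first LSTM sees the 5 features
    lstm_params(neurons, neurons),  # second LSTM
    lstm_params(neurons, neurons),  # third LSTM
    dense_params(1, neurons),       # Dense(1) output
]
print(layers)       # [68608, 131584, 131584, 129]
print(sum(layers))  # 331905
```

That is roughly 330,000 trainable weights used to fit a relationship with only six parameters: five weights and a bias.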

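```python
epochs = 2001  # overrides epochs = 200 above; number of training steps in this loop

for step in range(epochs):
    # one gradient update using the whole training set
    cost = model.train_on_batch(X_train[:, np.newaxis], Y_train)
    if step % 30 == 0:
        print(f'{step} train cost: ', cost)
```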
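The loop passes the entire training set to `train_on_batch`, so each of the 2001 steps is a single full-batch update. The `batch_size = 12` and `epochs = 200` defined earlier are never used. Passing them to `model.fit(..., batch_size=batch_size, epochs=epochs)` would instead split each epoch into shuffled mini-batches of 12 samples. The NumPy sketch below shows that batching. `iterate_minibatches` is a hypothetical helper written for illustration, not a Keras function.

```python
import numpy as np

def iterate_minibatches(n_samples, batch_size, rng):
    """Yield shuffled index arrays that cover every sample exactly once per epoch.

    Hypothetical helper for illustration; the last batch may be smaller.
    """
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(0)
batches = list(iterate_minibatches(670, 12, rng))
print(len(batches))        # 56 updates per epoch
print(len(batches[-1]))    # 10 samples in the last batch
print(len(batches) * 200)  # 11200 updates over 200 epochs
```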

```python
# test
print('Testing ------------')
cost = model.evaluate(X_test[:, np.newaxis], Y_test, batch_size=40)
print('test cost:', cost)
```
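Because the data are exactly linear, ordinary least squares is a useful yardstick for the test cost. It is a comparison baseline, not part of the approach in this post. Its test MSE should land near the noise variance of about 0.01. Always predicting the training mean should give roughly the variance of `y`. The sketch makes two simplifications, so its numbers will not match the run above exactly:
- It regenerates the data with NumPy's `default_rng` instead of the legacy global seed.
- It uses a simple unshuffled 670/330 split.

```python
import numpy as np

# Regenerate data like the post, but with NumPy's Generator API
rng = np.random.default_rng(1337)
true_w = np.array([5.0, -4.0, 3.0, -2.0, 1.0])
bias = -1.0
n = 1000
X = rng.normal(1, 0.1, size=(n, true_w.size))          # (samples, features)
y = X @ true_w + bias + rng.normal(-0.1, 0.1, size=n)

# Simple unshuffled 670/330 split (the post uses train_test_split instead)
n_train = 670
X_tr, X_te, y_tr, y_te = X[:n_train], X[n_train:], y[:n_train], y[n_train:]

# Ordinary least squares with an explicit intercept column
A_tr = np.column_stack([X_tr, np.ones(len(X_tr))])
A_te = np.column_stack([X_te, np.ones(len(X_te))])
coef, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
w_hat, b_hat = coef[:-1], coef[-1]

ols_mse = np.mean((A_te @ coef - y_te) ** 2)        # same metric as loss='mse'
mean_mse = np.mean((y_tr.mean() - y_te) ** 2)       # predict-the-mean baseline

print("estimated weights:", np.round(w_hat, 2))
print("estimated intercept:", np.round(b_hat, 2))   # absorbs bias + noise mean (about -1.1)
print("OLS test MSE:", ols_mse)
print("mean-predictor test MSE:", mean_mse)
```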

Plotting the predictions on the test set:

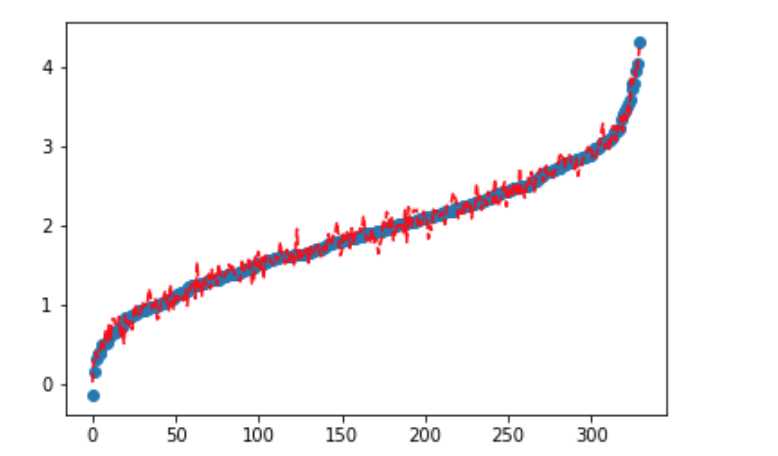

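```python
Y_pred = model.predict(X_test[:, np.newaxis])

# put true and predicted values side by side, sorted by the true value
sdf = pd.DataFrame({'test': list(Y_test.T[0]), 'pred': list(Y_pred.T[0])})
sdf.sort_values(by='test', inplace=True)

# dots: true test targets (sorted); red dashed line: the matching predictions
plt.scatter(range(len(Y_test)), list(sdf.test))
plt.plot(range(len(Y_test)), list(sdf.pred), 'r--')
plt.show()
```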
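The sorted plot gives a visual check. A single-number summary is the coefficient of determination, $R^2 = 1 - \sum (y - \hat y)^2 / \sum (y - \bar y)^2$, which `sklearn.metrics.r2_score` also computes. The NumPy sketch below also flattens the `(n, 1)` arrays that Keras returns. The function name `r2` is our own.

```python
import numpy as np

def r2(y_true, y_pred):
    # Flatten (n, 1) column arrays such as Y_test / Y_pred to shape (n,)
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

y_true = np.array([[1.0], [2.0], [3.0], [4.0]])
print(r2(y_true, y_true))                                # 1.0 (perfect fit)
print(r2(y_true, np.full_like(y_true, y_true.mean())))   # 0.0 (mean predictor)
```

In the post this would be `r2(Y_test, Y_pred)`.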


Original post: https://www.cnblogs.com/fadedlemon/p/10530244.html