Tags: 3.5 guid eth class -- dfs emc status sgd

The previous post covered automated Ceph deployment. This one walks through deploying a Ceph node by hand.

Deploy a single-node Ceph cluster on one virtual machine:

IP: 172.25.250.14

OS: Red Hat Enterprise Linux Server release 7.4 (Maipo)

Disks: /dev/vdb, /dev/vdc, /dev/vdd

[root@ceph5 ~]# yum install -y ceph-common ceph-mon ceph-mgr ceph-mds ceph-osd ceph-radosgw

Log in to ceph5 and check that the /etc/ceph directory was created:

[root@ceph5 ceph]# ll
-rw-r--r-- 1 root root 92 Nov 23 2017 rbdmap
[root@ceph6 ~]# ll /etc/ceph/
-rw-r--r-- 1 root root 92 Nov 23 2017 rbdmap

Run uuidgen to get a unique identifier to use as the cluster ID (fsid):

[root@ceph5 ceph]# uuidgen
82bf91ae-6d5e-4c09-9257-3d9e5992e6ef

Create the Ceph configuration file:

[root@ceph5 ceph]# vim /etc/ceph/backup.conf

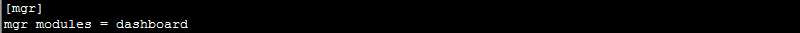

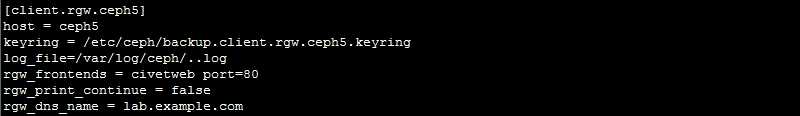

[global]
fsid = 51dda18c-7545-4edb-8ba9-27330ead81a7
mon_initial_members = ceph5
mon_host = 172.25.250.14
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public_network = 172.25.250.0/24
cluster_network = 172.25.250.0/24

[mgr]
mgr modules = dashboard

[client.rgw.ceph5]
host = ceph5
keyring = /etc/ceph/backup.client.rgw.ceph5.keyring
log_file = /var/log/ceph/$cluster.$name.log
rgw_frontends = civetweb port=80
rgw_print_continue = false
rgw_dns_name = lab.example.com
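The configuration above is an INI file. Below is a minimal sketch of writing it non-interactively from bash. `CONF_PATH` and `FSID` are hypothetical variable names introduced here, and `CONF_PATH` defaults to a scratch file so nothing under /etc is touched. The detail that matters is escaping `$cluster` and `$name`. They are Ceph metavariables, and inside an unescaped double-quoted string or heredoc the shell replaces them with empty strings.

```shell
#!/usr/bin/env bash
# Sketch: generate the backup cluster's config file with real line breaks.
# CONF_PATH and FSID are hypothetical variables; point CONF_PATH at
# /etc/ceph/backup.conf on the real node.
set -euo pipefail

FSID="${FSID:-51dda18c-7545-4edb-8ba9-27330ead81a7}"   # fsid used in this article
CONF_PATH="${CONF_PATH:-$(mktemp)}"

# Unquoted heredoc: ${FSID} is expanded by the shell, while \$cluster and
# \$name are escaped so they reach the file literally for Ceph to expand.
cat > "$CONF_PATH" <<EOF
[global]
fsid = ${FSID}
mon_initial_members = ceph5
mon_host = 172.25.250.14
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public_network = 172.25.250.0/24
cluster_network = 172.25.250.0/24

[mgr]
mgr modules = dashboard

[client.rgw.ceph5]
host = ceph5
keyring = /etc/ceph/backup.client.rgw.ceph5.keyring
log_file = /var/log/ceph/\$cluster.\$name.log
rgw_frontends = civetweb port=80
rgw_print_continue = false
rgw_dns_name = lab.example.com
EOF

echo "wrote ${CONF_PATH}"
```

On the node this would be run as `CONF_PATH=/etc/ceph/backup.conf FSID=$(uuidgen) ./gen-conf.sh`, where the script name is also just an example.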

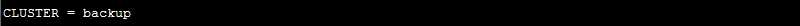

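Set the cluster name to backup by adding the line `CLUSTER = backup` to /etc/sysconfig/ceph. The ceph systemd units read this file and pass the value as `--cluster`: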

[root@ceph5 ceph]# vim /etc/sysconfig/ceph
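If this step is scripted, appending with `>>` adds a duplicate line on every re-run. Below is a small idempotent helper, offered as a sketch. `set_cluster_name` is a hypothetical function name. It assumes GNU sed (`sed -i` without a backup suffix, as on RHEL 7) and writes the plain `CLUSTER=backup` form.

```shell
#!/usr/bin/env bash
# Sketch: set CLUSTER=<name> in a sysconfig-style file exactly once.
# set_cluster_name is a hypothetical helper; assumes GNU sed.
set -euo pipefail

set_cluster_name() {
  local file="$1" name="$2"
  if grep -q '^[[:space:]]*CLUSTER[[:space:]]*=' "$file" 2>/dev/null; then
    # An existing CLUSTER line is rewritten in place.
    sed -i "s/^[[:space:]]*CLUSTER[[:space:]]*=.*/CLUSTER=${name}/" "$file"
  else
    # No CLUSTER line yet (or no file): append one.
    echo "CLUSTER=${name}" >> "$file"
  fi
}

# On the node this would be: set_cluster_name /etc/sysconfig/ceph backup
demo_file="$(mktemp)"
set_cluster_name "$demo_file" backup
set_cluster_name "$demo_file" backup   # second call must not add a duplicate
cat "$demo_file"
```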

Create the key used by the monitor:

[root@ceph5 ceph]# ceph-authtool --create-keyring /tmp/backup.mon.keyring --gen-key -n mon. --cap mon 'allow *'

Create a key for the Ceph admin user (client.admin) to manage the cluster, and grant it access:

[root@ceph5 ceph]# ceph-authtool --create-keyring /etc/ceph/backup.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mgr 'allow *' --cap mds 'allow *'

Add the client.admin key to /tmp/backup.mon.keyring:

[root@ceph5 ceph]# ceph-authtool /tmp/backup.mon.keyring --import-keyring /etc/ceph/backup.client.admin.keyring

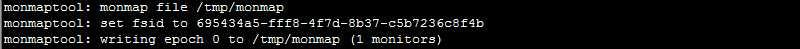

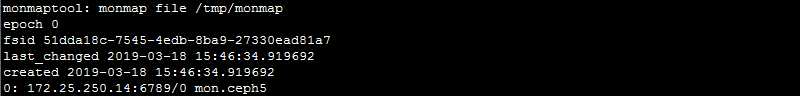

[root@ceph5 ~]# monmaptool --create --add ceph5 172.25.250.14 --fsid 51dda18c-7545-4edb-8ba9-27330ead81a7 /tmp/monmap

[root@ceph5 ceph]# file /tmp/monmap

[root@ceph5 ceph]# monmaptool --print /tmp/monmap
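`monmaptool --fsid` must receive the same value as `fsid` in backup.conf. A mismatch is easy to introduce when the UUID is pasted by hand, and in this walkthrough the `uuidgen` output shown earlier is not the fsid that ended up in the config. Below is a sketch that reads the fsid from the config and only prints the monmaptool command. `conf_fsid` is a hypothetical helper name, and the sample config is a scratch file.

```shell
#!/usr/bin/env bash
# Sketch: take the fsid for monmaptool from the config instead of retyping it.
# conf_fsid is a hypothetical helper; the monmaptool command is only printed.
set -euo pipefail

# Print the value of the first "fsid = ..." line of an INI-style Ceph config.
conf_fsid() {
  awk -F'=' '
    { key = $1; gsub(/[ \t]/, "", key) }
    key == "fsid" { val = $2; gsub(/[ \t]/, "", val); print val; exit }
  ' "$1"
}

demo_conf="$(mktemp)"
printf '[global]\nfsid = 51dda18c-7545-4edb-8ba9-27330ead81a7\nmon_host = 172.25.250.14\n' > "$demo_conf"

fsid="$(conf_fsid "$demo_conf")"
echo "monmaptool --create --add ceph5 172.25.250.14 --fsid ${fsid} /tmp/monmap"
```

On the node, `conf_fsid /etc/ceph/backup.conf` would be used instead of the scratch file.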

Create the monitor's data directory:

[root@ceph5 ~]# mkdir -p /var/lib/ceph/mon/backup-ceph5

Set ownership on the related files:

[root@ceph5 ~]# chown ceph.ceph -R /var/lib/ceph /etc/ceph /tmp/backup.mon.keyring /tmp/monmap

[root@ceph5 ~]# sudo -u ceph ceph-mon --cluster backup --mkfs -i ceph5 --monmap /tmp/monmap --keyring /tmp/backup.mon.keyring

[root@ceph5 ~]# systemctl start ceph-mon@ceph5

[root@ceph5 ~]# systemctl enable ceph-mon@ceph5

Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph5.service to /usr/lib/systemd/system/ceph-mon@.service.

[root@ceph5 ~]# systemctl status ceph-mon@ceph5

ceph-mon@ceph5.service - Ceph cluster monitor daemon
   Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; enabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-03-18 15:46:45 CST; 2min 21s ago
 Main PID: 1527 (ceph-mon)
   CGroup: /system.slice/system-ceph\x2dmon.slice/ceph-mon@ceph5.service
           └─1527 /usr/bin/ceph-mon -f --cluster backup --id ceph5 --setuser ceph --setgroup ceph

Mar 18 15:46:45 ceph5 systemd[1]: Started Ceph cluster monitor daemon.
Mar 18 15:46:45 ceph5 systemd[1]: Starting Ceph cluster monitor daemon...

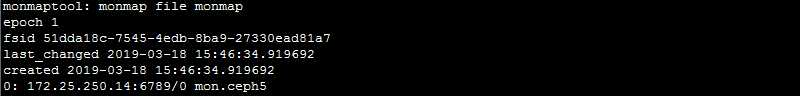

[root@ceph5 ~]# ceph mon getmap -o monmap --cluster backup

[root@ceph5 ~]# monmaptool --print monmap

[root@ceph5 ~]# ceph auth get mon. --cluster backup

exported keyring for mon.
[mon.]
        key = AQDLTI9cCRjlLRAASL2nSyFnRX9uHSxbGXTycQ==
        caps mon = "allow *"

[root@ceph5 ~]# mkdir /var/lib/ceph/mgr/backup-ceph5

[root@ceph5 ~]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 ~]# ceph-authtool --create-keyring /etc/ceph/backup.mgr.ceph5.keyring --gen-key -n mgr.ceph5 --cap mon 'allow profile mgr' --cap osd 'allow *' --cap mds 'allow *'

[root@ceph5 ~]# ceph auth import -i /etc/ceph/backup.mgr.ceph5.keyring --cluster backup

[root@ceph5 ~]# ceph auth get-or-create mgr.ceph5 -o /var/lib/ceph/mgr/backup-ceph5/keyring --cluster backup

[root@ceph5 ~]# systemctl start ceph-mgr@ceph5

[root@ceph5 ~]# systemctl enable ceph-mgr@ceph5

Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph5.service to /usr/lib/systemd/system/ceph-mgr@.service.

[root@ceph5 ~]# ceph -s --cluster backup

  cluster:
    id:     51dda18c-7545-4edb-8ba9-27330ead81a7
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph5
    mgr: ceph5(active, starting)
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 bytes
    usage:   0 kB used, 0 kB / 0 kB avail
    pgs:

[root@ceph5 ~]# ceph mgr dump --conf /etc/ceph/backup.conf

{
    "epoch": 2,
    "active_gid": 4100,
    "active_name": "ceph5",
    "active_addr": "-",
    "available": false,
    "standbys": [],
    "modules": [
        "restful",
        "status"
    ],
    "available_modules": [
        "dashboard",
        "prometheus",
        "restful",
        "status",
        "zabbix"
    ]
}

[root@ceph5 ~]# ceph mgr module enable dashboard --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph mgr module ls --conf /etc/ceph/backup.conf

[ "dashboard", "restful", "status" ]

[root@ceph5 ~]# ceph config-key put mgr/dashboard/server_addr 172.25.250.14 --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph config-key put mgr/dashboard/server_port 7000 --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph config-key dump --conf /etc/ceph/backup.conf

{ "mgr/dashboard/server_addr": "172.25.250.14", "mgr/dashboard/server_port": "7000" }

Create aliases:

[root@ceph5 ~]# alias ceph='ceph --cluster backup'

[root@ceph5 ~]# alias rbd='rbd --cluster backup'
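These aliases work in an interactive shell. Bash does not expand aliases in a script unless `shopt -s expand_aliases` is set. If these steps are turned into a script, shell functions are a safer way to pin the cluster name. `cephb` and `rbdb` are hypothetical names, chosen so the real `ceph` and `rbd` commands stay reachable under their own names.

```shell
#!/usr/bin/env bash
# Sketch: script-safe replacements for the two aliases above.
# cephb and rbdb are hypothetical names; they forward all arguments.
cephb() { ceph --cluster backup "$@"; }
rbdb()  { rbd --cluster backup "$@"; }

# Usage on the node, for example:
#   cephb -s
#   cephb osd tree
#   rbdb ls
```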

[root@ceph5 ~]# ceph osd create

[root@ceph5 ~]# uuidgen

Create the journal partition:

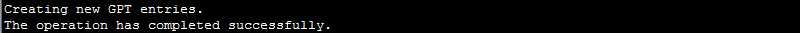

[root@ceph5 ~]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:aa25b6e9-384a-4012-b597-f4af96da3d5e --typecode=1:aa25b6e9-384a-4012-b597-f4af96da3d5e --mbrtogpt -- /dev/vdb

[root@ceph5 ~]# uuidgen

Create the data partition:

[root@ceph5 ~]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:5aaec435-b7fd-4a50-859f-3d26ed09f185 --typecode=2:5aaec435-b7fd-4a50-859f-3d26ed09f185 --mbrtogpt -- /dev/vdb

Format both partitions:

[root@ceph5 ~]# mkfs.xfs -f -i size=2048 /dev/vdb1

[root@ceph5 ~]# mkfs.xfs -f -i size=2048 /dev/vdb2

Mount the data partition:

[root@ceph5 ~]# mkdir /var/lib/ceph/osd/backup-{0,1,2}

[root@ceph5 ~]# mount -o noatime,largeio,inode64,swalloc /dev/vdb2 /var/lib/ceph/osd/backup-0
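The same partition, format and mount sequence is later repeated for /dev/vdc (osd.1) and /dev/vdd (osd.2). Below is a dry-run sketch that only prints the commands, so the GUIDs and device names can be reviewed before anything is written to disk. `plan_osd_disk` and `new_guid` are hypothetical helpers. Like the article, the sketch reuses each partition GUID as the type code.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print (do not run) the per-disk commands used in this article.
# plan_osd_disk and new_guid are hypothetical helpers.
set -euo pipefail

# Random UUID from the Linux kernel, falling back to uuidgen.
new_guid() {
  if [ -r /proc/sys/kernel/random/uuid ]; then
    cat /proc/sys/kernel/random/uuid
  else
    uuidgen
  fi
}

# $1 = OSD id, $2 = whole disk, e.g. 0 /dev/vdb
plan_osd_disk() {
  local id="$1" disk="$2" jguid dguid
  jguid="$(new_guid)"
  dguid="$(new_guid)"
  echo "sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:${jguid} --typecode=1:${jguid} --mbrtogpt -- ${disk}"
  echo "sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:${dguid} --typecode=2:${dguid} --mbrtogpt -- ${disk}"
  echo "mkfs.xfs -f -i size=2048 ${disk}1"
  echo "mkfs.xfs -f -i size=2048 ${disk}2"
  echo "mount -o noatime,largeio,inode64,swalloc ${disk}2 /var/lib/ceph/osd/backup-${id}"
}

id=0
for disk in /dev/vdb /dev/vdc /dev/vdd; do
  plan_osd_disk "$id" "$disk"
  id=$((id + 1))
done
```

After reviewing the output, it could be piped to `bash` to execute it. That step is destructive and is left to the reader.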

[root@ceph5 ~]# uuid_blk=`blkid /dev/vdb2|awk '{print $2}'`

[root@ceph5 ~]# echo $uuid_blk

[root@ceph5 ~]# echo "$uuid_blk /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab

[root@ceph5 ~]# cat /etc/fstab

#
# /etc/fstab
# Created by anaconda on Tue Jul 11 05:34:11 2017
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
UUID=716e713d-4e91-4186-81fd-c6cfa1b0974d / xfs defaults 0 0
UUID="2fc06d6c-39b1-47f8-b9ab-cf034599cbd1" /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0
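Both `awk` one-liners in this walkthrough depend on the column order of `blkid` output. Below is a sketch that runs them on the `blkid /dev/vdc2` line captured later in this article. `awk '{print $2}'` returns the whole `UUID="..."` token, which is why the fstab entry above carries quotes. `awk -F '"' '{print $8}'` returns the PARTUUID, and the journal partitions have the same field layout. On the node, `blkid -s UUID -o value` and `blkid -s PARTUUID -o value` return the bare values without relying on field positions.

```shell
#!/usr/bin/env bash
# Sketch: what the article's awk one-liners extract from a blkid line.
# The sample is the blkid /dev/vdc2 output shown in this article.
set -euo pipefail

sample='/dev/vdc2: UUID="e0a2ccd2-549d-474f-8d36-fd1e3f20a4f8" TYPE="xfs" PARTLABEL="ceph data" PARTUUID="3f1d1456-2ebe-4702-96dc-e8ba975a772c"'

# Whitespace-separated field 2: the whole UUID="..." token, quotes included.
uuid_token="$(echo "$sample" | awk '{print $2}')"

# Split on double quotes: field 8 is the value after PARTUUID=.
partuuid="$(echo "$sample" | awk -F '"' '{print $8}')"

# The fstab line as the article builds it.
fstab_line="${uuid_token} /var/lib/ceph/osd/backup-1 xfs defaults,noatime,largeio,inode64,swalloc 0 0"

echo "$uuid_token"
echo "$partuuid"
echo "$fstab_line"

# Position-independent alternatives on the real node:
#   blkid -s UUID -o value /dev/vdc2
#   blkid -s PARTUUID -o value /dev/vdc1
```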

Create the Ceph OSD key:

[root@ceph5 ~]# ceph-authtool --create-keyring /etc/ceph/backup.osd.0.keyring --gen-key -n osd.0 --cap mon 'allow profile osd' --cap mgr 'allow profile osd' --cap osd 'allow *'

[root@ceph5 ~]# ceph auth import -i /etc/ceph/backup.osd.0.keyring --cluster backup

[root@ceph5 ~]# ceph auth get-or-create osd.0 -o /var/lib/ceph/osd/backup-0/keyring --cluster backup

Initialize the OSD data directory:

[root@ceph5 ~]# ceph-osd -i 0 --mkfs --cluster backup

2019-03-18 16:35:46.788193 7f030624ad00 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway
2019-03-18 16:35:46.886027 7f030624ad00 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway
2019-03-18 16:35:46.886514 7f030624ad00 -1 read_settings error reading settings: (2) No such file or directory
2019-03-18 16:35:46.992516 7f030624ad00 -1 created object store /var/lib/ceph/osd/backup-0 for osd.0 fsid 51dda18c-7545-4edb-8ba9-27330ead81a7

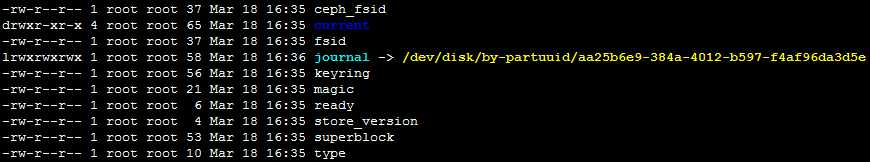

Attach the journal partition (replace the journal file with a symlink to the partition):

[root@ceph5 ~]# cd /var/lib/ceph/osd/backup-0

[root@ceph5 backup-0]# rm -f journal

[root@ceph5 backup-0]# partuuid_0=`blkid /dev/vdb1|awk -F "[\"\"]" '{print $8}'`

[root@ceph5 backup-0]# echo $partuuid_0

[root@ceph5 backup-0]# ln -s /dev/disk/by-partuuid/$partuuid_0 ./journal

[root@ceph5 backup-0]# ll

[root@ceph5 backup-0]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 backup-0]# ceph-osd --mkjournal -i 0 --cluster backup

[root@ceph5 backup-0]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_0

Add the OSD host to the CRUSH map:

[root@ceph5 backup-0]# ceph osd crush add-bucket ceph5 host --cluster backup

Move the host bucket under the default root (root=default):

[root@ceph5 backup-0]# ceph osd crush move ceph5 root=default --cluster backup

Add osd.0 under host ceph5:

[root@ceph5 backup-0]# ceph osd crush add osd.0 0.01500 root=default host=ceph5 --cluster backup
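The weight 0.01500 follows the usual Ceph convention that a CRUSH weight equals the device capacity in TiB. Each data partition in this lab is about 15 GiB (the later `ceph -s` reports 46046 MB for three OSDs), which works out to roughly 0.0146 TiB, close to the value used here. Below is a sketch of that conversion. `crush_weight` is a hypothetical helper, and on the node the byte count could come from `blockdev --getsize64 /dev/vdb2`.

```shell
#!/usr/bin/env bash
# Sketch: CRUSH weight as capacity in TiB (a convention, not a requirement).
# crush_weight is a hypothetical helper; the input is a size in bytes.
set -euo pipefail

crush_weight() {
  awk -v b="$1" 'BEGIN { printf "%.5f\n", b / 1099511627776 }'   # 1 TiB = 2^40 bytes
}

# A 15 GiB data partition, about the size of each OSD in this lab:
crush_weight $((15 * 1024 * 1024 * 1024))   # -> 0.01465
```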

Start the OSD:

[root@ceph5 backup-0]# systemctl start ceph-osd@0

[root@ceph5 backup-0]# systemctl enable ceph-osd@0

Created symlink from /etc/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.

Repeat the same steps for osd.1 on /dev/vdc:

[root@ceph5 backup-0]# ceph osd create
[root@ceph5 backup-0]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:3af9982e-8324-4666-bf3a-771f0e981f00 --typecode=1:3af9982e-8324-4666-bf3a-771f0e981f00 --mbrtogpt -- /dev/vdc
[root@ceph5 backup-0]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --typecode=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --mbrtogpt -- /dev/vdc
[root@ceph5 backup-0]# mkfs.xfs -f -i size=2048 /dev/vdc1
[root@ceph5 backup-0]# mkfs.xfs -f -i size=2048 /dev/vdc2
[root@ceph5 backup-0]# mount -o noatime,largeio,inode64,swalloc /dev/vdc2 /var/lib/ceph/osd/backup-1
[root@ceph5 backup-0]# blkid /dev/vdc2
/dev/vdc2: UUID="e0a2ccd2-549d-474f-8d36-fd1e3f20a4f8" TYPE="xfs" PARTLABEL="ceph data" PARTUUID="3f1d1456-2ebe-4702-96dc-e8ba975a772c"
[root@ceph5 backup-0]# uuid_blk_1=`blkid /dev/vdc2|awk '{print $2}'`
[root@ceph5 backup-0]# echo "$uuid_blk_1 /var/lib/ceph/osd/backup-1 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab
[root@ceph5 backup-0]# ceph-osd -i 1 --mkfs --mkkey --cluster backup
2019-03-18 17:01:46.680469 7f4156f0ed00 -1 asok(0x5609ed1701c0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/backup-osd.1.asok': (17) File exists
[root@ceph5 backup-0]# cd /var/lib/ceph/osd/backup-1
[root@ceph5 backup-1]# rm -f journal
[root@ceph5 backup-1]# partuuid_1=`blkid /dev/vdc1|awk -F "[\"\"]" '{print $8}'`
[root@ceph5 backup-1]# ln -s /dev/disk/by-partuuid/$partuuid_1 ./journal
[root@ceph5 backup-1]# chown ceph.ceph -R /var/lib/ceph
[root@ceph5 backup-1]# ceph-osd --mkjournal -i 1 --cluster backup
[root@ceph5 backup-1]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_1
[root@ceph5 backup-1]# ceph auth add osd.1 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-1/keyring --cluster backup
[root@ceph5 backup-1]# ceph osd crush add osd.1 0.01500 root=default host=ceph5 --cluster backup
[root@ceph5 backup-1]# systemctl start ceph-osd@1
[root@ceph5 backup-1]# systemctl enable ceph-osd@1

Repeat for osd.2 on /dev/vdd:

[root@ceph5 ~]# ceph osd create
[root@ceph5 backup-1]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:4e7b40e7-f303-4362-8b57-e024d4987892 --typecode=1:4e7b40e7-f303-4362-8b57-e024d4987892 --mbrtogpt -- /dev/vdd
Creating new GPT entries.
The operation has completed successfully.
[root@ceph5 backup-1]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --typecode=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --mbrtogpt -- /dev/vdd
The operation has completed successfully.
[root@ceph5 backup-1]# mkfs.xfs -f -i size=2048 /dev/vdd1
[root@ceph5 backup-1]# mkfs.xfs -f -i size=2048 /dev/vdd2
[root@ceph5 backup-1]# mount -o noatime,largeio,inode64,swalloc /dev/vdd2 /var/lib/ceph/osd/backup-2
[root@ceph5 backup-1]# blkid /dev/vdd2
/dev/vdd2: UUID="8458389b-c453-4c1f-b918-5ebde3a3b7b9" TYPE="xfs" PARTLABEL="ceph data" PARTUUID="c82ce8a4-a762-4ba9-b987-21ef51df2eb2"
[root@ceph5 backup-1]# uuid_blk_2=`blkid /dev/vdd2|awk '{print $2}'`
[root@ceph5 backup-1]# echo "$uuid_blk_2 /var/lib/ceph/osd/backup-2 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab
[root@ceph5 backup-1]# ceph-osd -i 2 --mkfs --mkkey --cluster backup
[root@ceph5 backup-1]# cd /var/lib/ceph/osd/backup-2
[root@ceph5 backup-2]# rm -f journal
[root@ceph5 backup-2]# partuuid_2=`blkid /dev/vdd1|awk -F "[\"\"]" '{print $8}'`
[root@ceph5 backup-2]# ln -s /dev/disk/by-partuuid/$partuuid_2 ./journal
[root@ceph5 backup-2]# chown ceph.ceph -R /var/lib/ceph
[root@ceph5 backup-2]# ceph-osd --mkjournal -i 2 --cluster backup
2019-03-18 17:06:43.123486 7efe77573d00 -1 journal read_header error decoding journal header
[root@ceph5 backup-2]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_2
[root@ceph5 backup-2]# ceph auth add osd.2 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-2/keyring --cluster backup
added key for osd.2
[root@ceph5 backup-2]# ceph osd crush add osd.2 0.01500 root=default host=ceph5 --cluster backup
add item id 2 name 'osd.2' weight 0.015 at location {host=ceph5,root=default} to crush map
[root@ceph5 backup-2]# systemctl start ceph-osd@2
[root@ceph5 backup-2]# systemctl enable ceph-osd@2

[root@ceph5 backup-2]# ceph -s

  cluster:
    id:     51dda18c-7545-4edb-8ba9-27330ead81a7
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph5
    mgr: ceph5(active)
    osd: 3 osds: 3 up, 3 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 bytes
    usage:   5441 MB used, 40605 MB / 46046 MB avail
    pgs:

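Ceph's default CRUSH rule uses host as the failure domain. With three replicas but only one host, creating a pool turns the cluster status to HEALTH_WARN, and the PGs never finish rebalancing. The symptoms are:

* Writes hang.

* If radosgw is installed, the civetweb port never starts listening and the radosgw process restarts repeatedly.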

[root@ceph5 backup-2]# ceph osd pool create rbd 64 64 --cluster backup
pool 'rbd' created
[root@ceph5 backup-2]# ceph -s
  cluster:
    id:     51dda18c-7545-4edb-8ba9-27330ead81a7
    health: HEALTH_WARN
            too few PGs per OSD (21 < min 30)

  services:
    mon: 1 daemons, quorum ceph5
    mgr: ceph5(active)
    osd: 3 osds: 3 up, 3 in

  data:
    pools:   1 pools, 64 pgs
    objects: 0 objects, 0 bytes
    usage:   5442 MB used, 40604 MB / 46046 MB avail
    pgs:     100.000% pgs not active
             64 creating+peering
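The 21 in `too few PGs per OSD (21 < min 30)` can be reproduced with simple arithmetic. This assumes the check divides the total number of PG copies in the PGs' up sets by the number of in OSDs. With a host failure domain and a single host, CRUSH places each of the 64 PGs on one OSD instead of three, which gives 64 × 1 / 3 ≈ 21. Once the rule chooses OSDs instead of hosts, each PG gets three copies and the figure becomes 64 × 3 / 3 = 64, above the minimum of 30. `pgs_per_osd` is a hypothetical helper that models this arithmetic. It is not Ceph code.

```shell
#!/usr/bin/env bash
# Worked arithmetic (a hedged model of the warning, not Ceph's code):
# PGs per OSD = pg_num * copies placed per PG / in OSDs, integer division.
set -euo pipefail

pgs_per_osd() {
  local pg_num="$1" copies="$2" in_osds="$3"
  echo $(( pg_num * copies / in_osds ))
}

pgs_per_osd 64 1 3   # host failure domain, one host -> 21
pgs_per_osd 64 3 3   # osd failure domain, three replicas -> 64
```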

Change the failure domain in the CRUSH rule from host to osd:

[root@ceph5 ~]# cd /etc/ceph/
[root@ceph5 ceph]# ceph osd getcrushmap -o /etc/ceph/crushmap
9
[root@ceph5 ceph]# crushtool -d /etc/ceph/crushmap -o /etc/ceph/crushmap.txt
[root@ceph5 ceph]# sed -i 's/step chooseleaf firstn 0 type host/step chooseleaf firstn 0 type osd/' /etc/ceph/crushmap.txt
[root@ceph5 ceph]# grep 'step chooseleaf' /etc/ceph/crushmap.txt
        step chooseleaf firstn 0 type osd
[root@ceph5 ceph]# crushtool -c /etc/ceph/crushmap.txt -o /etc/ceph/crushmap-new
[root@ceph5 ceph]# ceph osd setcrushmap -i /etc/ceph/crushmap-new
10
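The `sed` edit changes only the `chooseleaf` step of the replicated rule. Below is a sketch that applies the same substitution to a sample decompiled rule in a scratch file, so the effect can be checked before running `crushtool -c` and `ceph osd setcrushmap`. The rule text is illustrative, modeled on the Luminous default replicated rule rather than copied from this cluster. With the failure domain at osd, all three replicas can land on the same host, which is acceptable only for a single-node lab like this one.

```shell
#!/usr/bin/env bash
# Sketch: preview the chooseleaf edit on a sample decompiled CRUSH rule.
# The rule below is illustrative (Luminous default replicated rule layout).
set -euo pipefail

rule_file="$(mktemp)"
cat > "$rule_file" <<'EOF'
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
EOF

# Same substitution as in the article: failure domain host -> osd (GNU sed -i).
sed -i 's/step chooseleaf firstn 0 type host/step chooseleaf firstn 0 type osd/' "$rule_file"

grep 'step chooseleaf' "$rule_file"
```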

[root@ceph5 ceph]# ceph -s
  cluster:
    id:     51dda18c-7545-4edb-8ba9-27330ead81a7
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph5
    mgr: ceph5(active)
    osd: 3 osds: 3 up, 3 in

  data:
    pools:   1 pools, 64 pgs
    objects: 0 objects, 0 bytes
    usage:   5443 MB used, 40603 MB / 46046 MB avail
    pgs:     64 active+clean

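Create the RADOS Gateway key. The output path must match the `keyring` entry in the `[client.rgw.ceph5]` section of backup.conf:

[root@ceph5 backup-2]# ceph auth get-or-create client.rgw.ceph5 mon 'allow rwx' osd 'allow rwx' -o /etc/ceph/backup.client.rgw.ceph5.keyring --cluster backup --conf /etc/ceph/backup.conf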

Add the RGW configuration (the `[client.rgw.ceph5]` section in /etc/ceph/backup.conf).

Start the service:

[root@ceph5 ceph]# systemctl start ceph-radosgw@rgw.ceph5

[root@ceph5 ceph]# systemctl enable ceph-radosgw@rgw.ceph5

All commands

# These commands can be saved to a bash file and run as-is, or adapted into a one-step install script
yum install -y ceph-common ceph-mon ceph-mgr ceph-mds ceph-osd ceph-radosgw
echo 'CLUSTER = backup'>>/etc/sysconfig/ceph
uuid=`uuidgen`
echo "[global]
fsid = $uuid
mon_initial_members = ceph5
mon_host = 172.25.250.14
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public_network = 172.25.250.0/24
cluster_network = 172.25.250.0/24
[mgr]
mgr modules = dashboard
[client.rgw.ceph5]
host = ceph5
keyring = /etc/ceph/backup.client.rgw.ceph5.keyring
log_file=/var/log/ceph/\$cluster.\$name.log
rgw_frontends = civetweb port=80
rgw_print_continue = false
rgw_dns_name = lab.example.com
" >>/etc/ceph/backup.conf
ceph-authtool --create-keyring /tmp/backup.mon.keyring --gen-key -n mon. --cap mon 'allow *'
ceph-authtool --create-keyring /etc/ceph/backup.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mgr 'allow *' --cap mds 'allow *'
ceph-authtool /tmp/backup.mon.keyring --import-keyring /etc/ceph/backup.client.admin.keyring
monmaptool --create --add ceph5 172.25.250.14 --fsid $uuid /tmp/monmap
monmaptool --print /tmp/monmap
mkdir -p /var/lib/ceph/mon/backup-ceph5
chown ceph.ceph -R /var/lib/ceph /etc/ceph /tmp/backup.mon.keyring /tmp/monmap
sudo -u ceph ceph-mon --cluster backup --mkfs -i ceph5 --monmap /tmp/monmap --keyring /tmp/backup.mon.keyring
systemctl start ceph-mon@ceph5
systemctl enable ceph-mon@ceph5
ceph mon getmap -o monmap --cluster backup
monmaptool --print monmap
ceph auth get mon. --cluster backup
mkdir /var/lib/ceph/mgr/backup-ceph5
chown ceph.ceph -R /var/lib/ceph
ceph-authtool --create-keyring /etc/ceph/backup.mgr.ceph5.keyring --gen-key -n mgr.ceph5 --cap mon 'allow profile mgr' --cap osd 'allow *' --cap mds 'allow *'
ceph auth import -i /etc/ceph/backup.mgr.ceph5.keyring --cluster backup
ceph auth get-or-create mgr.ceph5 -o /var/lib/ceph/mgr/backup-ceph5/keyring --cluster backup
systemctl start ceph-mgr@ceph5
systemctl enable ceph-mgr@ceph5
ceph -s --cluster backup
ceph mgr dump --conf /etc/ceph/backup.conf
ceph mgr module enable dashboard --conf /etc/ceph/backup.conf
ceph mgr module ls --conf /etc/ceph/backup.conf
ceph config-key put mgr/dashboard/server_addr 172.25.250.14 --conf /etc/ceph/backup.conf
ceph config-key put mgr/dashboard/server_port 7000 --conf /etc/ceph/backup.conf
ceph config-key dump --conf /etc/ceph/backup.conf
# bash ignores aliases in a non-interactive script unless expand_aliases is set
shopt -s expand_aliases
alias ceph='ceph --cluster backup'
alias rbd='rbd --cluster backup'
ceph osd create
sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:baf21303-1f6a-4345-965e-4b75a12ec3c6 --typecode=1:baf21303-1f6a-4345-965e-4b75a12ec3c6 --mbrtogpt -- /dev/vdb
sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:04aad6e0-0232-4578-8b52-a289f3777f07 --typecode=2:04aad6e0-0232-4578-8b52-a289f3777f07 --mbrtogpt -- /dev/vdb
mkfs.xfs -f -i size=2048 /dev/vdb1
mkfs.xfs -f -i size=2048 /dev/vdb2
mkdir /var/lib/ceph/osd/backup-{0,1,2}
mount -o noatime,largeio,inode64,swalloc /dev/vdb2 /var/lib/ceph/osd/backup-0
uuid_blk=`blkid /dev/vdb2|awk '{print $2}'`
echo "$uuid_blk /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab
ceph-authtool --create-keyring /etc/ceph/backup.osd.0.keyring --gen-key -n osd.0 --cap mon 'allow profile osd' --cap mgr 'allow profile osd' --cap osd 'allow *'
ceph auth import -i /etc/ceph/backup.osd.0.keyring --cluster backup
ceph auth get-or-create osd.0 -o /var/lib/ceph/osd/backup-0/keyring --cluster backup
ceph-osd -i 0 --mkfs --cluster backup
cd /var/lib/ceph/osd/backup-0
rm -f journal
partuuid_0=`blkid /dev/vdb1|awk -F "[\"\"]" '{print $8}'`
ln -s /dev/disk/by-partuuid/$partuuid_0 ./journal
chown ceph.ceph -R /var/lib/ceph
ceph-osd --mkjournal -i 0 --cluster backup
chown ceph.ceph /dev/disk/by-partuuid/$partuuid_0
ceph osd crush add-bucket ceph5 host --cluster backup
ceph osd crush move ceph5 root=default --cluster backup
ceph osd crush add osd.0 0.01500 root=default host=ceph5 --cluster backup
systemctl start ceph-osd@0
systemctl enable ceph-osd@0
ceph osd create
sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:3af9982e-8324-4666-bf3a-771f0e981f00 --typecode=1:3af9982e-8324-4666-bf3a-771f0e981f00 --mbrtogpt -- /dev/vdc
sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --typecode=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --mbrtogpt -- /dev/vdc
mkfs.xfs -f -i size=2048 /dev/vdc1
mkfs.xfs -f -i size=2048 /dev/vdc2
mount -o noatime,largeio,inode64,swalloc /dev/vdc2 /var/lib/ceph/osd/backup-1
blkid /dev/vdc2
uuid_blk_1=`blkid /dev/vdc2|awk '{print $2}'`
echo "$uuid_blk_1 /var/lib/ceph/osd/backup-1 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab
ceph-osd -i 1 --mkfs --mkkey --cluster backup
cd /var/lib/ceph/osd/backup-1
rm -f journal
partuuid_1=`blkid /dev/vdc1|awk -F "[\"\"]" '{print $8}'`
ln -s /dev/disk/by-partuuid/$partuuid_1 ./journal
chown ceph.ceph -R /var/lib/ceph
ceph-osd --mkjournal -i 1 --cluster backup
chown ceph.ceph /dev/disk/by-partuuid/$partuuid_1
ceph auth add osd.1 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-1/keyring --cluster backup
ceph osd crush add osd.1 0.01500 root=default host=ceph5 --cluster backup
systemctl start ceph-osd@1
systemctl enable ceph-osd@1
ceph osd create
sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:4e7b40e7-f303-4362-8b57-e024d4987892 --typecode=1:4e7b40e7-f303-4362-8b57-e024d4987892 --mbrtogpt -- /dev/vdd
sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --typecode=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --mbrtogpt -- /dev/vdd
mkfs.xfs -f -i size=2048 /dev/vdd1
mkfs.xfs -f -i size=2048 /dev/vdd2
mount -o noatime,largeio,inode64,swalloc /dev/vdd2 /var/lib/ceph/osd/backup-2
blkid /dev/vdd2
uuid_blk_2=`blkid /dev/vdd2|awk '{print $2}'`
echo "$uuid_blk_2 /var/lib/ceph/osd/backup-2 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab
ceph-osd -i 2 --mkfs --mkkey --cluster backup
cd /var/lib/ceph/osd/backup-2
rm -f journal
partuuid_2=`blkid /dev/vdd1|awk -F "[\"\"]" '{print $8}'`
ln -s /dev/disk/by-partuuid/$partuuid_2 ./journal
chown ceph.ceph -R /var/lib/ceph
ceph-osd --mkjournal -i 2 --cluster backup
chown ceph.ceph /dev/disk/by-partuuid/$partuuid_2
ceph auth add osd.2 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-2/keyring --cluster backup
ceph osd crush add osd.2 0.01500 root=default host=ceph5 --cluster backup
systemctl start ceph-osd@2
systemctl enable ceph-osd@2
ceph osd getcrushmap -o /etc/ceph/crushmap
crushtool -d /etc/ceph/crushmap -o /etc/ceph/crushmap.txt
sed -i 's/step chooseleaf firstn 0 type host/step chooseleaf firstn 0 type osd/' /etc/ceph/crushmap.txt
grep 'step chooseleaf' /etc/ceph/crushmap.txt
crushtool -c /etc/ceph/crushmap.txt -o /etc/ceph/crushmap-new
ceph osd setcrushmap -i /etc/ceph/crushmap-new

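Author's note: the content of this article comes mainly from teacher Yan Wei of Yutian Education (誉天教育), and I verified the steps through my own lab work. To repost it, please contact Yutian Education (http://www.yutianedu.com/) for official permission, or obtain permission from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!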


Original article: https://www.cnblogs.com/zyxnhr/p/10553717.html