
```scala
// Written against the Spark 2.x API (ml.linalg.Vector, Vectors.fromML, DataFrame.rdd).
// On Spark 1.x the ml feature transformers emit org.apache.spark.mllib.linalg.Vector instead.
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.ml.feature.{HashingTF, IDF, Tokenizer}
import org.apache.spark.ml.linalg.{Vector => MLVector}
import org.apache.spark.mllib.classification.NaiveBayes
import org.apache.spark.mllib.linalg.Vectors
import org.apache.spark.mllib.regression.LabeledPoint
import org.apache.spark.sql.{Row, SQLContext}

object TestNaiveBayes {

  // One input line is "category,text". category must be a numeric label such as "0" or "1";
  // text must already be word-segmented with spaces, because Tokenizer only splits on whitespace.
  case class RawDataRecord(category: String, text: String)

  def main(args: Array[String]): Unit = {
    // For YARN: new SparkConf().setMaster("yarn-client")
    val conf = new SparkConf().setMaster("local").setAppName("reduce")
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._

    // Load the categorized training corpus; split on the first comma only
    val srcRDD = sc.textFile("C:/Users/dell/Desktop/大数据/分类细胞词库").map { x =>
      val data = x.split(",", 2)
      RawDataRecord(data(0), data(1))
    }
    val trainingDF = srcRDD.toDF()

    // Split each text into an array of words
    val tokenizer = new Tokenizer().setInputCol("text").setOutputCol("words")
    val wordsData = tokenizer.transform(trainingDF)
    println("output1:")
    wordsData.select($"category", $"text", $"words").take(1).foreach(println)

    // Term frequency of each word in its document, hashed into 50000 buckets
    val hashingTF = new HashingTF().setNumFeatures(50000).setInputCol("words").setOutputCol("rawFeatures")
    val featurizedData = hashingTF.transform(wordsData)
    println("output2:")
    featurizedData.select($"category", $"words", $"rawFeatures").take(1).foreach(println)

    // TF-IDF weight of each word
    val idf = new IDF().setInputCol("rawFeatures").setOutputCol("features")
    val idfModel = idf.fit(featurizedData)
    val rescaledData = idfModel.transform(featurizedData)
    println("output3:")
    rescaledData.select($"category", $"features").take(1).foreach(println)

    // Convert to the RDD[LabeledPoint] input that MLlib's NaiveBayes expects
    val trainDataRdd = rescaledData.select($"category", $"features").rdd.map {
      case Row(label: String, features: MLVector) => LabeledPoint(label.toDouble, Vectors.fromML(features))
    }
    println("output4:")
    trainDataRdd.take(1).foreach(println)

    // Train the model on the categorized data
    val model = NaiveBayes.train(trainDataRdd, lambda = 1.0, modelType = "multinomial")

    // Load the hot-word data to classify (same "category,text" format)
    val srcRDD1 = sc.textFile("C:/Users/dell/Desktop/大数据/热词细胞词库/热词数据1.txt").map { x =>
      val data = x.split(",", 2)
      RawDataRecord(data(0), data(1))
    }
    val testDF = srcRDD1.toDF()

    // Apply the same feature pipeline (tokenizer, hashingTF, fitted idfModel) to the hot-word data
    val testwordsData = tokenizer.transform(testDF)
    val testfeaturizedData = hashingTF.transform(testwordsData)
    val testrescaledData = idfModel.transform(testfeaturizedData)
    val testDataRdd = testrescaledData.select($"category", $"features").rdd.map {
      case Row(label: String, features: MLVector) => LabeledPoint(label.toDouble, Vectors.fromML(features))
    }

    // Classify the hot-word data with the model trained on the prepared categorized cell lexicons
    val testpredictionAndLabel = testDataRdd.map(p => (model.predict(p.features), p.label))
    println("output5:")
    testpredictionAndLabel.collect().foreach(println)

    sc.stop()
  }
}
```
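To make explicit what the HashingTF → IDF → `NaiveBayes.train(..., lambda = 1.0, modelType = "multinomial")` chain computes, here is a Spark-free sketch in plain Scala. All names in it (`TfIdfNaiveBayesSketch`, `hashingTF`, `fitIdf`, `trainNB`, `train`) are hypothetical. As far as I know it follows Spark ML's IDF formula log((m + 1) / (df + 1)) and MLlib's multinomial Naive Bayes with additive smoothing. It does, however, hash tokens with `String.##` instead of Spark's MurmurHash3, so bucket indices will not match Spark's. Treat it as a reading aid, not a replacement.

```scala
import scala.math.log

// Spark-free sketch: whitespace tokens -> hashed term frequencies -> IDF -> multinomial Naive Bayes.
// Assumption: tokens are hashed with String.## modulo numFeatures (Spark's HashingTF uses MurmurHash3).
object TfIdfNaiveBayesSketch {
  type Vec = Map[Int, Double] // sparse vector: bucket index -> value

  // Count how often each hashed bucket occurs in one document (the "hashing trick").
  def hashingTF(words: Seq[String], numFeatures: Int): Vec =
    words.groupBy(w => Math.floorMod(w.##, numFeatures)).map { case (i, ws) => i -> ws.size.toDouble }

  // IDF with Spark ML's smoothing: log((m + 1) / (df + 1)), m = number of training documents.
  final case class IdfModel(m: Double, df: Map[Int, Double]) {
    def weight(i: Int): Double = log((m + 1) / (df.getOrElse(i, 0.0) + 1))
    def transform(tf: Vec): Vec = tf.map { case (i, v) => i -> v * weight(i) }
  }

  // Document frequency = number of documents in which each bucket is non-zero.
  def fitIdf(docs: Seq[Vec]): IdfModel =
    IdfModel(docs.size.toDouble,
      docs.flatMap(_.keys).groupBy(identity).map { case (i, occ) => i -> occ.size.toDouble })

  // Log priors and per-class log feature weights; predict = argmax(logPrior + x . logTheta).
  final case class NBModel(labels: Vector[Double], logPrior: Vector[Double],
                           logTheta: Vector[Map[Int, Double]], logDefault: Vector[Double]) {
    def predict(x: Vec): Double = {
      val scores = labels.indices.map { c =>
        logPrior(c) + x.toSeq.map { case (i, v) => v * logTheta(c).getOrElse(i, logDefault(c)) }.sum
      }
      labels(scores.indexOf(scores.max))
    }
  }

  // Multinomial Naive Bayes with additive (Laplace) smoothing `lambda`.
  def trainNB(data: Seq[(Double, Vec)], numFeatures: Int, lambda: Double): NBModel = {
    val byLabel = data.groupBy(_._1).toVector.sortBy(_._1)
    val n = data.size.toDouble
    val k = byLabel.size.toDouble
    val logPrior = byLabel.map { case (_, rows) => log(rows.size + lambda) - log(n + k * lambda) }
    // Per-class sum of feature values for every bucket seen in that class.
    val sums = byLabel.map { case (_, rows) =>
      rows.flatMap(_._2.toSeq).groupBy(_._1).map { case (i, kvs) => i -> kvs.map(_._2).sum }
    }
    val logDenom = sums.map(s => log(s.values.sum + numFeatures * lambda))
    val logTheta = sums.zip(logDenom).map { case (s, d) => s.map { case (i, v) => i -> (log(v + lambda) - d) } }
    // Buckets never seen in a class still get the smoothed weight log(lambda) - denominator.
    val logDefault = logDenom.map(d => log(lambda) - d)
    NBModel(byLabel.map(_._1), logPrior, logTheta, logDefault)
  }

  // End to end: train on (label, space-separated text) pairs and return a text classifier.
  // The tokenizer lowercases and splits on whitespace, roughly like Spark's Tokenizer.
  def train(docs: Seq[(Double, String)], numFeatures: Int = 1 << 18, lambda: Double = 1.0): String => Double = {
    val tokenize = (s: String) => s.toLowerCase.split("\\s+").filter(_.nonEmpty).toSeq
    val tfs = docs.map { case (label, text) => label -> hashingTF(tokenize(text), numFeatures) }
    val idf = fitIdf(tfs.map(_._2))
    val model = trainNB(tfs.map { case (l, tf) => l -> idf.transform(tf) }, numFeatures, lambda)
    // Prediction reuses the same tokenizer, hash width and fitted IDF model.
    text => model.predict(idf.transform(hashingTF(tokenize(text), numFeatures)))
  }

  def main(args: Array[String]): Unit = {
    val classify = train(Seq(
      0.0 -> "spark hadoop cluster", 0.0 -> "spark executor cluster",
      1.0 -> "football goal match", 1.0 -> "football team match"))
    println(classify("spark cluster"))  // expected 0.0
    println(classify("football match")) // expected 1.0
  }
}
```

Because test documents are weighted by the IDF fitted on the training data, the same tokenizer, hash width and IDF model have to be reused at prediction time. This is why the Spark code applies `tokenizer`, `hashingTF` and `idfModel` to the hot-word data instead of fitting new ones. Note also that a word appearing in every training document gets an IDF of log(1) = 0 and therefore contributes nothing to the decision.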

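I found this code online several days ago and could not find the original source again. If it infringes on anyone's rights, let me know and I will take it down.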

Update: found it. https://blog.csdn.net/yumingzhu1/article/details/85064047

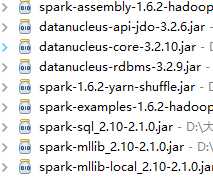

5. JAR dependencies

You may not need all of these; decide for yourself which ones you actually need.
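If you build with sbt instead of adding JARs by hand, a minimal `build.sbt` along these lines should pull in the three Spark modules the code imports (`spark-core`, `spark-sql`, `spark-mllib`). The project name and the Scala/Spark versions below are assumptions; pick the versions that match your environment. The dependencies are not marked `"provided"` so that `sbt run` works with the local master. When submitting to a cluster with spark-submit you would normally mark them `"provided"`.

```scala
// build.sbt: a sketch, assuming Spark 2.4.x on Scala 2.12; adjust versions to your cluster.
name := "naive-bayes-text-classification" // hypothetical project name

scalaVersion := "2.12.10"

val sparkVersion = "2.4.8"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core"  % sparkVersion,
  "org.apache.spark" %% "spark-sql"   % sparkVersion,
  "org.apache.spark" %% "spark-mllib" % sparkVersion
)
```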

If I left anything out or something is unclear, leave a comment. It's late, so I'll stop here.

Chinese text auto-classification with Naive Bayes (NaiveBayes) in Spark MLlib


Original post: https://www.cnblogs.com/zpsblog/p/10591136.html