标签:任务 sub shuffle state sys ado byte port pie

案例分析:

需要将hdfs上的数据导出到mysql里的一张表里。

虚拟机集群的为:centos1-centos5

问题1:

在centos1上将hdfs上的数据导出到centos1上的mysql里:

sqoop export --connect jdbc:mysql://centos1:3306/test \ --username root --password root --table order_uid --export-dir /user/hive/warehouse/test.db/order_uid/ --fields-terminated-by ‘,‘

报错:

Error executing statement: java.sql.SQLException: Access denied for user ‘root‘@‘centos1‘ (using password: YES)

改成:

sqoop export

--connect jdbc:mysql://localhost:3306/test \

--username root \

--password root \

--table order_uid \

--export-dir /user/hive/warehouse/test.db/order_uid/ \

--fields-terminated-by ‘,‘

报错:

Error: java.io.IOException: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table ‘test.order_uid‘ doesn‘t exist at org.apache.sqoop.mapreduce.AsyncSqlRecordWriter.close(AsyncSqlRecordWriter.java:205) at

org.apache.hadoop.mapred.MapTask$NewDirectOutputCollector.close(MapTask.java:670) at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:793) at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341) at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164) at java.security.AccessController.doPrivileged(Native Method) at

javax.security.auth.Subject.doAs(Subject.java:422) at ...

问题2:

在centos3上将hdfs上的数据导出到centos1上的mysql里:

sqoop export --connect jdbc:mysql://centos1:3306/test \ --username root --password root --table order_uid --export-dir /user/hive/warehouse/test.db/order_uid/ --fields-terminated-by ‘,‘

报错:

19/03/27 17:47:41 ERROR mapreduce.ExportJobBase: Export job failed! 19/03/27 17:47:41 ERROR tool.ExportTool: Error during export: Export job failed! at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445) at org.apache.sqoop.manager.SqlManager.exportTable(SqlManager.java:931) at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:80) at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

在网上找到两种解决方案:

1.在网上找到有人说在hdfs的路径写到具体文件,而不是写到目录,改成:

sqoop export --connect jdbc:mysql://centos1:3306/test \ --username root --password root --table order_uid --export-dir /user/hive/warehouse/test.db/order_uid/t1.dat --fields-terminated-by ‘,‘

还是报相同错误!

2. 更改mysql里表的编码

将cengos1里mysql的表order_uid字符集编码改成:utf-8,重新执行,centos1的mysql表里导入了部分数据, 仍然报错:

Job failed as tasks failed. failedMaps:1 failedReduces:0 19/03/27 17:54:08 INFO mapreduce.Job: Counters: 33 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=290362 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=1130 HDFS: Number of bytes written=0 HDFS: Number of read operations=8 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Failed map tasks=1 Killed map tasks=1 Launched map tasks=4 Data-local map tasks=1 Rack-local map tasks=3 Total time spent by all maps in occupied slots (ms)=300866 Total time spent by all reduces in occupied slots (ms)=0 Total time spent by all map tasks (ms)=300866 Total vcore-milliseconds taken by all map tasks=300866 Total megabyte-milliseconds taken by all map tasks=308086784 Map-Reduce Framework Map input records=5 Map output records=5 Input split bytes=282 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=664 CPU time spent (ms)=2460 Physical memory (bytes) snapshot=315748352 Virtual memory (bytes) snapshot=4170031104 Total committed heap usage (bytes)=146800640 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 19/03/27 17:54:08 INFO mapreduce.ExportJobBase: Transferred 1.1035 KB in 223.4219 seconds (5.0577 bytes/sec) 19/03/27 17:54:08 INFO mapreduce.ExportJobBase: Exported 5 records. 19/03/27 17:54:08 ERROR mapreduce.ExportJobBase: Export job failed! 19/03/27 17:54:08 ERROR tool.ExportTool: Error during export: Export job failed! at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445) at org.apache.sqoop.manager.SqlManager.exportTable(SqlManager.java:931) at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:80) at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

当指定map为1时:

sqoop export --connect jdbc:mysql://centos1:3306/test \ --username root --password root --table order_uid --export-dir /user/hive/warehouse/test.db/order_uid --fields-terminated-by ‘,‘ --m 1

运行成功了!!!

sqoop默认情况下的map数量为4,也就是说这种情况下1个map能运行成功,而多个map会失败。于是将map改为2又试了一遍:

sqoop export --connect jdbc:mysql://centos1:3306/test \ --username root --password root --table order_uid --export-dir /user/hive/warehouse/test.db/order_uid --fields-terminated-by ‘,‘ --m 2

执行结果为:

19/03/27 19:17:22 INFO mapreduce.Job: Counters: 32 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=145181 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=705 HDFS: Number of bytes written=0 HDFS: Number of read operations=4 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Failed map tasks=1 Launched map tasks=2 Data-local map tasks=1 Rack-local map tasks=1 Total time spent by all maps in occupied slots (ms)=88960 Total time spent by all reduces in occupied slots (ms)=0 Total time spent by all map tasks (ms)=88960 Total vcore-milliseconds taken by all map tasks=88960 Total megabyte-milliseconds taken by all map tasks=91095040 Map-Reduce Framework Map input records=5 Map output records=5 Input split bytes=141 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=278 CPU time spent (ms)=1200 Physical memory (bytes) snapshot=159162368 Virtual memory (bytes) snapshot=2087399424 Total committed heap usage (bytes)=77070336 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 19/03/27 19:17:22 INFO mapreduce.ExportJobBase: Transferred 705 bytes in 99.4048 seconds (7.0922 bytes/sec) 19/03/27 19:17:22 INFO mapreduce.ExportJobBase: Exported 5 records. 19/03/27 19:17:22 ERROR mapreduce.ExportJobBase: Export job failed! 19/03/27 19:17:22 ERROR tool.ExportTool: Error during export: Export job failed! at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445) at org.apache.sqoop.manager.SqlManager.exportTable(SqlManager.java:931) at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:80) at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

可以看到它成功的导入了5条记录!!!

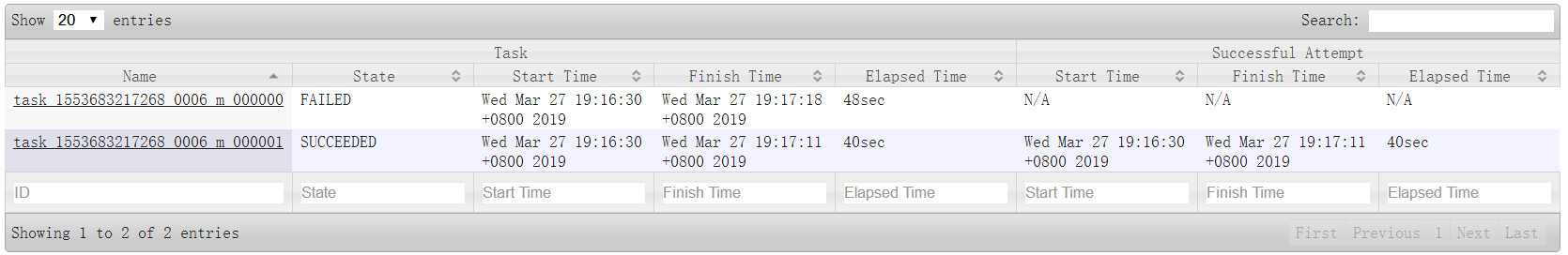

通过jobhistory窗口可以看到两个map一个成功,一个执行失败

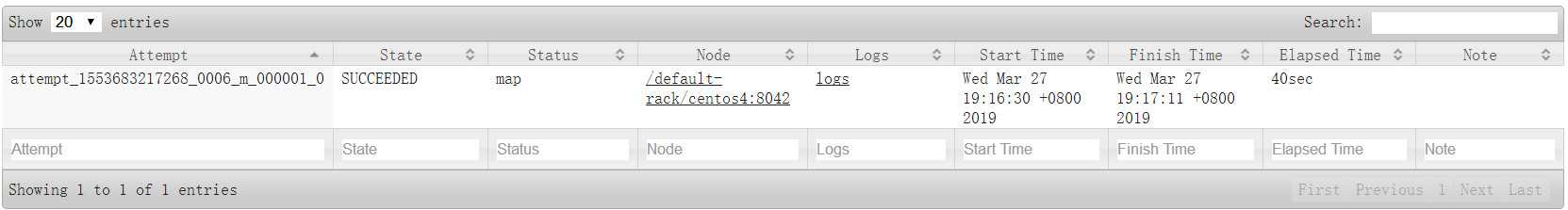

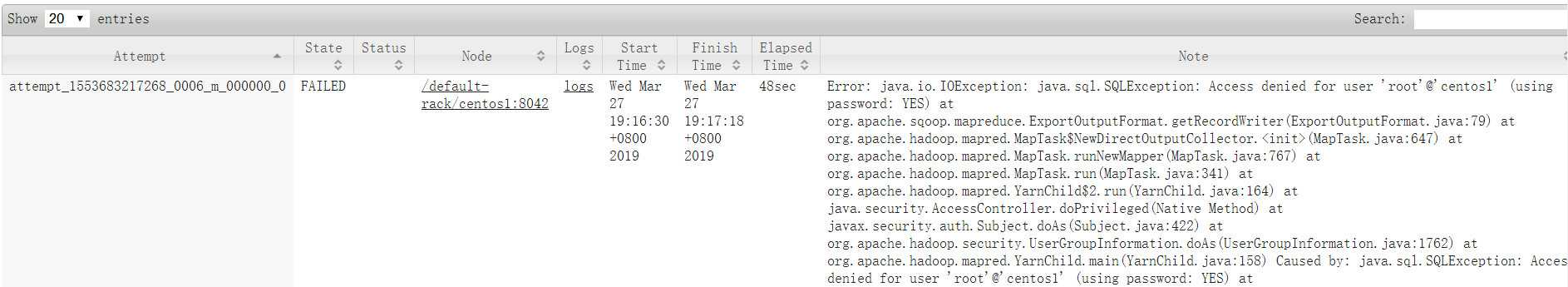

点击task的name:

成功的任务由centos4节点运行的,失败的task由centos1运行,又回到了问题1,就是centos1不能访问centos1的mysql数据!

最终一个朋友告诉我再centos1上单独添加对centos1的远程访问权限:

grant all privileges on *.* to ‘root‘@‘centos1‘ identified by ‘root‘ with grant option; flush privileges;

然后重新运行一下,问题1和问题2都被愉快的解决了!!!

当时在centos1上的mysql里执行了:

GRANT ALL PRIVILEGES ON *.* TO ‘root‘@‘%‘ IDENTIFIED BY ‘root‘ WITH GRANT OPTION;

flush privileges;

对其他节点添加了远程访问,但没有对自己添加远程访问权限。

标签:任务 sub shuffle state sys ado byte port pie

原文地址:https://www.cnblogs.com/wang-bing/p/10610053.html