Tags: address .class gate transform opp tee sea rop representation

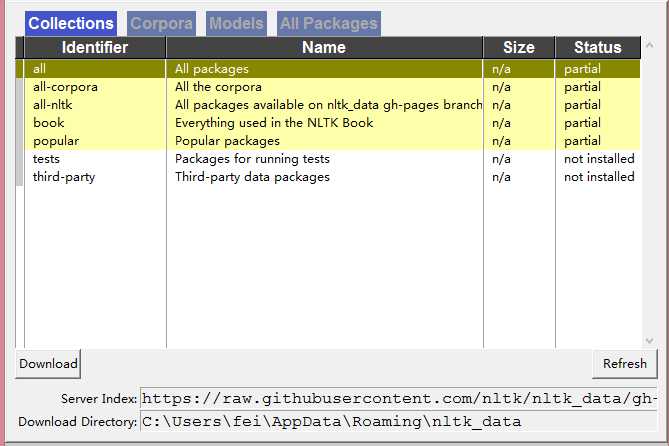

Method 1: open the interactive downloader.

    import nltk
    nltk.download()  # opens the NLTK Downloader

Output:

    showing info https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml

Official download page: http://www.nltk.org/nltk_data/

GitHub repository: https://github.com/nltk/nltk_data

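If you download the data manually from one of these sites, add its directory to NLTK's data search path:

    from nltk import data
    data.path.append('D:/python3.6/nltk_data')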

Downloading a corpus

    import nltk
    nltk.download('brown')  # download the Brown corpus

Output:

    [nltk_data] Downloading package brown to
    [nltk_data] C:\Users\fei\AppData\Roaming\nltk_data...
    [nltk_data] Unzipping corpora\brown.zip.

Corpora that ship with NLTK (here, the Brown corpus)

    from nltk.corpus import brown

    print(brown.categories())
    brown.readme()  # returns a description of the corpus

Output:

    ['adventure', 'belles_lettres', 'editorial', 'fiction', 'government', 'hobbies', 'humor', 'learned', 'lore', 'mystery', 'news', 'religion', 'reviews', 'romance', 'science_fiction']

Words:

    print(brown.words()[:10])
    print(len(brown.words()))

Output:

    ['The', 'Fulton', 'County', 'Grand', 'Jury', 'said', 'Friday', 'an', 'investigation', 'of']
    1161192

Sentences:

    print(brown.sents()[:10])
    print(len(brown.sents()))

Output:

    [['The', 'Fulton', 'County', 'Grand', 'Jury', 'said', 'Friday', 'an', 'investigation', 'of', "Atlanta's", 'recent', 'primary', 'election', 'produced', '``', 'no', 'evidence', "''", 'that', 'any', 'irregularities', 'took', 'place', '.'], ['The', 'jury', 'further', 'said', 'in', 'term-end', 'presentments', 'that', 'the', 'City', 'Executive', 'Committee', ',', 'which', 'had', 'over-all', 'charge', 'of', 'the', 'election', ',', '``', 'deserves', 'the', 'praise', 'and', 'thanks', 'of', 'the', 'City', 'of', 'Atlanta', "''", 'for', 'the', 'manner', 'in', 'which', 'the', 'election', 'was', 'conducted', '.'], ['The', 'September-October', 'term', 'jury', 'had', 'been', 'charged', 'by', 'Fulton', 'Superior', 'Court', 'Judge', 'Durwood', 'Pye', 'to', 'investigate', 'reports', 'of', 'possible', '``', 'irregularities', "''", 'in', 'the', 'hard-fought', 'primary', 'which', 'was', 'won', 'by', 'Mayor-nominate', 'Ivan', 'Allen', 'Jr.', '.'], ['``', 'Only', 'a', 'relative', 'handful', 'of', 'such', 'reports', 'was', 'received', "''", ',', 'the', 'jury', 'said', ',', '``', 'considering', 'the', 'widespread', 'interest', 'in', 'the', 'election', ',', 'the', 'number', 'of', 'voters', 'and', 'the', 'size', 'of', 'this', 'city', "''", '.'], ['The', 'jury', 'said', 'it', 'did', 'find', 'that', 'many', 'of', "Georgia's", 'registration', 'and', 'election', 'laws', '``', 'are', 'outmoded', 'or', 'inadequate', 'and', 'often', 'ambiguous', "''", '.'], ['It', 'recommended', 'that', 'Fulton', 'legislators', 'act', '``', 'to', 'have', 'these', 'laws', 'studied', 'and', 'revised', 'to', 'the', 'end', 'of', 'modernizing', 'and', 'improving', 'them', "''", '.'], ['The', 'grand', 'jury', 'commented', 'on', 'a', 'number', 'of', 'other', 'topics', ',', 'among', 'them', 'the', 'Atlanta', 'and', 'Fulton', 'County', 'purchasing', 'departments', 'which', 'it', 'said', '``', 'are', 'well', 'operated', 'and', 'follow', 'generally', 'accepted', 'practices', 'which', 'inure', 'to', 'the', 'best', 'interest', 'of', 'both', 'governments', "''", '.'], ['Merger', 'proposed'], ['However', ',', 'the', 'jury', 'said', 'it', 'believes', '``', 'these', 'two', 'offices', 'should', 'be', 'combined', 'to', 'achieve', 'greater', 'efficiency', 'and', 'reduce', 'the', 'cost', 'of', 'administration', "''", '.'], ['The', 'City', 'Purchasing', 'Department', ',', 'the', 'jury', 'said', ',', '``', 'is', 'lacking', 'in', 'experienced', 'clerical', 'personnel', 'as', 'a', 'result', 'of', 'city', 'personnel', 'policies', "''", '.']]
    57340

Part-of-speech tagged words:

    print(brown.tagged_words()[:10])
    print(len(brown.tagged_words()))

Output:

    [('The', 'AT'), ('Fulton', 'NP-TL'), ('County', 'NN-TL'), ('Grand', 'JJ-TL'), ('Jury', 'NN-TL'), ('said', 'VBD'), ('Friday', 'NR'), ('an', 'AT'), ('investigation', 'NN'), ('of', 'IN')]
    1161192

A typical text-processing workflow:

1. preprocess
2. tokenize
3. stopwords
4. ...
5. make features
6. machine learning
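
A minimal, dependency-free sketch of steps 1, 2, 3 and 5 chained together (step 4 is elided in the list above, so it is skipped). Everything here is an illustrative assumption: the regular-expression tokenizer is a crude stand-in for `nltk.word_tokenize`, and `TOY_STOPWORDS` is a hypothetical stand-in for NLTK's stopword list.

```python
import re

# Hypothetical mini stopword list; the post later uses nltk.corpus.stopwords instead.
TOY_STOPWORDS = {"the", "a", "of", "is", "this"}


def preprocess(text):
    """Step 1: normalize case and trim surrounding whitespace."""
    return text.lower().strip()


def tokenize(text):
    """Step 2: split into runs of letters, digits and apostrophes (crude stand-in for word_tokenize)."""
    return re.findall(r"[a-z0-9']+", text)


def remove_stopwords(tokens):
    """Step 3: drop very common, low-information words."""
    return [t for t in tokens if t not in TOY_STOPWORDS]


def make_features(tokens):
    """Step 5: word-presence features, the dict format NLTK classifiers accept."""
    return {t: True for t in tokens}


def pipeline(text):
    return make_features(remove_stopwords(tokenize(preprocess(text))))


print(pipeline("Never underestimate the heart of a champion "))
# {'never': True, 'underestimate': True, 'heart': True, 'champion': True}
```

Step 6 (machine learning) would consume dicts of this shape, as the Naive Bayes example at the end of this post does.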

Tokenizing an English sentence with `nltk.word_tokenize`:

    import nltk

    sentence = 'Never underestimate the heart of a champion '
    tokens = nltk.word_tokenize(sentence)
    print(tokens)

Output:

    ['Never', 'underestimate', 'the', 'heart', 'of', 'a', 'champion']

Chinese word segmentation with jieba

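    import jieba

    # Full mode: list every word the dictionary can find in the sentence (fast, but does not resolve ambiguity)
    seg_list = jieba.cut("我来到北京清华大学", cut_all=True)
    print("Full mode:", "/ ".join(seg_list))

    # Precise mode: the single most likely segmentation
    seg_list = jieba.cut("我来到北京清华大学", cut_all=False)
    print("Precise mode:", "/ ".join(seg_list))

    # Precise mode is the default
    seg_list = jieba.cut("他来到了网易杭研大厦")
    print("New-word recognition:", ", ".join(seg_list))

    # Search-engine mode: precise mode, then long words are split again into shorter ones
    seg_list = jieba.cut_for_search("小明硕士毕业于中国科学院计算所,后在日本京都大学深造")
    print("Search-engine mode:", ",".join(seg_list))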

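Output (these segmentations match the examples in jieba's README; exact results can vary with the jieba version and dictionary):

    Full mode: 我/ 来到/ 北京/ 清华/ 清华大学/ 华大/ 大学
    Precise mode: 我/ 来到/ 北京/ 清华大学
    New-word recognition: 他, 来到, 了, 网易, 杭研, 大厦
    Search-engine mode: 小明,硕士,毕业,于,中国,科学,学院,科学院,中国科学院,计算,计算所,,,后,在,日本,京都,大学,日本京都大学,深造

"杭研" is not in jieba's dictionary, but it is still recognized as a word by jieba's HMM-based new-word discovery.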
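
According to its README, jieba builds a DAG of all dictionary words in the sentence, picks the maximum-probability path with dynamic programming, and falls back to an HMM (Viterbi) for unknown words such as 杭研. A much simpler dictionary-based technique, forward maximum matching, is enough to show the basic idea of dictionary lookup. The sketch below is that simpler technique, not jieba's algorithm, and `TOY_DICT` is a hypothetical dictionary.

```python
# Hypothetical toy dictionary; jieba ships a far larger one with word frequencies.
TOY_DICT = {"来到", "北京", "清华", "清华大学", "大学", "华大"}


def forward_max_match(text, dictionary, max_len=4):
    """Greedy left-to-right segmentation: at each position take the longest dictionary word."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking down to a single character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in dictionary:
                words.append(candidate)
                i += size
                break
    return words


print("/ ".join(forward_max_match("我来到北京清华大学", TOY_DICT)))
# 我/ 来到/ 北京/ 清华大学
```

Greedy matching can commit to a wrong split when candidate words overlap; scoring whole paths through all candidate words, as jieba does, avoids that.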

Example: tokenizing social-media text

    from nltk.tokenize import word_tokenize

    tweet = 'RT @angelababy: love you baby! :D http://ah.love #168cm'
    print(word_tokenize(tweet))

Output:

    ['RT', '@', 'angelababy', ':', 'love', 'you', 'baby', '!', ':', 'D', 'http', ':', '//ah.love', '#', '168cm']

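`word_tokenize` splits the @-mention, the `:D` emoticon, the URL and the hashtag into pieces. Solution: tokenize with regular expressions that match these patterns first.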

    import re

    emoticons_str = r"""
        (?:
            [:=;]                  # eyes
            [oO\-]?                # nose (optional)
            [D\)\]\(\]/\\OpP]      # mouth
        )"""

    regex_str = [
        emoticons_str,
        r'<[^>]+>',                        # HTML tags
        r'(?:@[\w_]+)',                    # @-mentions
        r"(?:\#+[\w_]+[\w\'_\-]*[\w_]+)",  # hashtags
        r'http[s]?://(?:[a-z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-f][0-9a-f]))+',  # URLs
        r'(?:(?:\d+,?)+(?:\.?\d+)?)',      # numbers
        r"(?:[a-z][a-z'\-_]+[a-z])",       # words containing - and '
        r'(?:[\w_]+)',                     # other words
        r'(?:\S)',                         # anything else
    ]

    # Alternatives are tried left to right, so the specific patterns come first.
    tokens_re = re.compile(r'(' + '|'.join(regex_str) + ')', re.VERBOSE | re.IGNORECASE)
    emoticon_re = re.compile(r'^' + emoticons_str + '$', re.VERBOSE | re.IGNORECASE)

    def tokenize(s):
        return tokens_re.findall(s)

    def preprocess(s, lowercase=False):
        tokens = tokenize(s)
        if lowercase:
            # lowercase every token except emoticons (":D" must stay ":D")
            tokens = [token if emoticon_re.search(token) else token.lower() for token in tokens]
        return tokens

    tweet = 'RT @angelababy: love you baby! :D http://ah.love #168cm'
    print(preprocess(tweet))

Output:

    ['RT', '@angelababy', ':', 'love', 'you', 'baby', '!', ':D', 'http://ah.love', '#168cm']

Stemming with the Porter stemmer:

    from nltk.stem.porter import PorterStemmer

    porter_stemmer = PorterStemmer()
    print(porter_stemmer.stem('maximum'))
    print(porter_stemmer.stem('presumably'))
    print(porter_stemmer.stem('multiply'))
    print(porter_stemmer.stem('provision'))

Output:

    maximum
    presum
    multipli
    provis
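
Porter stemming is a sequence of rule-based suffix-rewriting steps. As an illustration only, here is step 1a of Porter's original algorithm, which handles plurals. NLTK's `PorterStemmer` runs this step and several more (and in its default mode adds some NLTK-specific extensions), which is where stems such as `presum` and `provis` come from. The function name is hypothetical.

```python
def porter_step_1a(word):
    """Step 1a of the Porter (1980) stemmer: rewrite plural suffixes.

    Rules are checked in order and only the first matching one fires:
      sses -> ss, ies -> i, ss -> ss, s -> ''
    """
    for suffix, replacement in (("sses", "ss"), ("ies", "i"), ("ss", "ss"), ("s", "")):
        if word.endswith(suffix):
            return word[: len(word) - len(suffix)] + replacement
    return word


for w in ["caresses", "ponies", "caress", "cats"]:
    print(w, "->", porter_step_1a(w))
```

The `ss -> ss` rule looks redundant, but because it is checked before `s -> ''` it stops `caress` from losing its final `s`.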

Lemmatization with WordNet:

    from nltk.stem import WordNetLemmatizer  # requires the WordNet data: nltk.download('wordnet')

    wordnet_lemmatizer = WordNetLemmatizer()
    print(wordnet_lemmatizer.lemmatize('dogs'))
    print(wordnet_lemmatizer.lemmatize('churches'))
    print(wordnet_lemmatizer.lemmatize('aardwolves'))
    print(wordnet_lemmatizer.lemmatize('abaci'))
    print(wordnet_lemmatizer.lemmatize('hardrock'))

Output:

    dog
    church
    aardwolf
    abacus
    hardrock

Without a POS tag, `lemmatize` treats every word as a noun (NN), so verb forms are left unchanged:

    # No POS tag: the default is noun
    print(wordnet_lemmatizer.lemmatize('are'))
    print(wordnet_lemmatizer.lemmatize('is'))

Output:

    are
    is

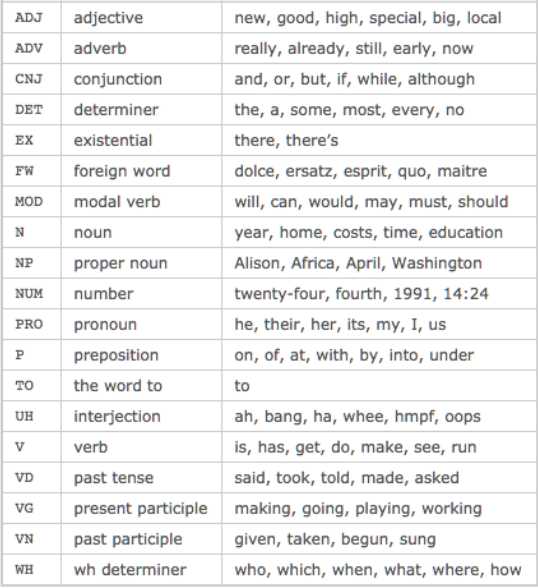

Part-of-speech tagging

Method 1: pass the POS tag manually

    # With a POS tag ('v' = verb)
    print(wordnet_lemmatizer.lemmatize('is', pos='v'))
    print(wordnet_lemmatizer.lemmatize('are', pos='v'))

Output:

    be
    be

Method 2: tag automatically with `nltk.pos_tag`

    import nltk

    text = nltk.word_tokenize('what does the fox say')
    print(text)
    print(nltk.pos_tag(text))

Output:

    ['what', 'does', 'the', 'fox', 'say']
    [('what', 'WDT'), ('does', 'VBZ'), ('the', 'DT'), ('fox', 'NNS'), ('say', 'VBP')]

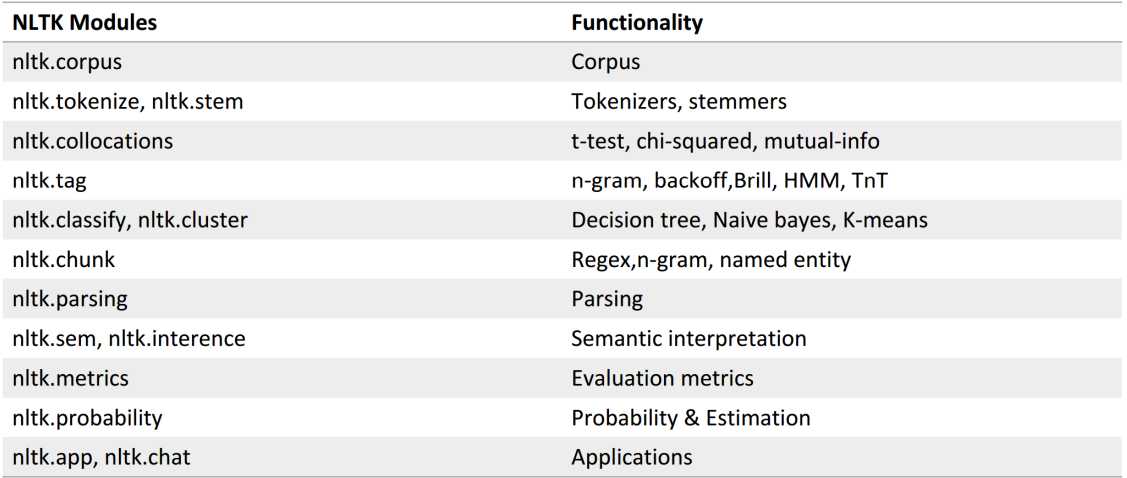

POS tag reference table
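
`nltk.pos_tag` returns Penn Treebank tags such as `VBZ` or `NNS`, while `WordNetLemmatizer.lemmatize` expects a WordNet POS: `'n'`, `'v'`, `'a'` (adjective) or `'r'` (adverb). A common heuristic is to map on the first letter of the Penn tag. This is a sketch of that heuristic (the function name `penn_to_wordnet` is hypothetical), written without NLTK so it runs on its own; falling back to `'n'` mirrors `lemmatize`'s default.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet POS letter (heuristic on the tag's first letter)."""
    if tag.startswith("J"):
        return "a"  # adjectives: JJ, JJR, JJS
    if tag.startswith("V"):
        return "v"  # verbs: VB, VBD, VBG, VBN, VBP, VBZ
    if tag.startswith("R"):
        return "r"  # adverbs: RB, RBR, RBS (RP, a particle, also lands here)
    return "n"      # everything else falls back to noun, lemmatize()'s default


tagged = [("what", "WDT"), ("does", "VBZ"), ("the", "DT"), ("fox", "NNS"), ("say", "VBP")]
print([(word, penn_to_wordnet(tag)) for word, tag in tagged])
# [('what', 'n'), ('does', 'v'), ('the', 'n'), ('fox', 'n'), ('say', 'v')]
```

With NLTK installed, each pair can then be passed on as `wordnet_lemmatizer.lemmatize(word, pos=penn_to_wordnet(tag))`, the same `pos='v'` mechanism that turns `is` into `be` in Method 1.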

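A thousand occurrences of HE can refer to a thousand different people, and a thousand occurrences of THE can point to a thousand different things.

For applications that focus on understanding the "meaning" of a text, such words are far too ambiguous to carry information, so they are usually filtered out as stopwords.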

Complete stopword lists: http://www.ranks.nl/stopwords

First, download the stopword corpus, either from the interactive downloader in the console or with `nltk.download('stopwords')`.

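    from nltk.corpus import stopwords

    # First tokenize the text to get a word_list
    # ...
    # Then filter out the stopwords (a set makes each membership test fast)
    english_stopwords = set(stopwords.words('english'))
    filtered_words = [word for word in word_list if word not in english_stopwords]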
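
NLTK's English stopword list is all lowercase, so a filter like the one above keeps a capitalized `The`. A minimal sketch of a case-insensitive filter that also drops tokens made only of punctuation; `STOPWORDS` is a small hypothetical subset standing in for `set(stopwords.words('english'))`, and `filter_tokens` is a hypothetical name.

```python
import string

# Hypothetical subset; in practice use set(stopwords.words('english')).
STOPWORDS = {"the", "is", "a", "of", "this", "my"}


def filter_tokens(word_list, stop=STOPWORDS):
    """Drop stopwords (compared in lowercase) and tokens consisting only of punctuation."""
    return [
        w for w in word_list
        if w.lower() not in stop and not all(ch in string.punctuation for ch in w)
    ]


print(filter_tokens(["The", "fox", "is", "the", "heart", "of", "this", ",", "story", "."]))
# ['fox', 'heart', 'story']
```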

Counting word frequencies. First build a small corpus and tokenize it:

    import nltk
    from nltk import FreqDist

    # Build a small corpus first
    corpus = ('this is my sentence '
              'this is my life '
              'this is the day')

    # Tokenize it. As mentioned above, any preprocessing can be done here
    # as needed: stopwords, lemma, stemming, etc.
    tokens = nltk.word_tokenize(corpus)
    print(tokens)

Output:

    ['this', 'is', 'my', 'sentence', 'this', 'is', 'my', 'life', 'this', 'is', 'the', 'day']

Then count how often each word occurs with NLTK's `FreqDist`:

    fdist = FreqDist(tokens)

    # FreqDist behaves like a dict: look up a word to get
    # the number of times it occurs in the whole text
    print(fdist.most_common(50))

    for k, v in fdist.items():
        print(k, v)

Output:

    [('this', 3), ('is', 3), ('my', 2), ('sentence', 1), ('life', 1), ('the', 1), ('day', 1)]
    this 3
    is 3
    my 2
    sentence 1
    life 1
    the 1
    day 1

Now take the 50 most common words (this toy corpus has only 7 distinct words, so all of them are returned):

    standard_freq_vector = fdist.most_common(50)
    size = len(standard_freq_vector)
    print(standard_freq_vector)

Output:

    [('this', 3), ('is', 3), ('my', 2), ('sentence', 1), ('life', 1), ('the', 1), ('day', 1)]

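Func: record the position of each word, ordered by frequency:

    def position_lookup(v):
        # v is a list of (word, count) pairs sorted by frequency;
        # map each word to its index in that list
        res = {}
        counter = 0
        for word in v:
            res[word[0]] = counter
            counter += 1
        return res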

Record the standard position of each word:

    standard_position_dict = position_lookup(standard_freq_vector)
    print(standard_position_dict)

This gives a word-to-position lookup table:

    {'this': 0, 'is': 1, 'my': 2, 'sentence': 3, 'life': 4, 'the': 5, 'day': 6}
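
In NLTK 3, `FreqDist` is a subclass of Python's `collections.Counter`, so the frequency count and the position table above can also be sketched with the standard library alone. `build_vocabulary` is a hypothetical helper name; `enumerate` replaces the manual counter in `position_lookup`.

```python
from collections import Counter


def build_vocabulary(tokens, top_n=50):
    """Map each of the top_n most frequent tokens to its position (0 = most frequent)."""
    most_common = Counter(tokens).most_common(top_n)
    return {word: position for position, (word, _count) in enumerate(most_common)}


tokens = "this is my sentence this is my life this is the day".split()
print(build_vocabulary(tokens))
# {'this': 0, 'is': 1, 'my': 2, 'sentence': 3, 'life': 4, 'the': 5, 'day': 6}
```

`Counter.most_common` lists words with equal counts in first-seen order, which is why `this` comes before `is`.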

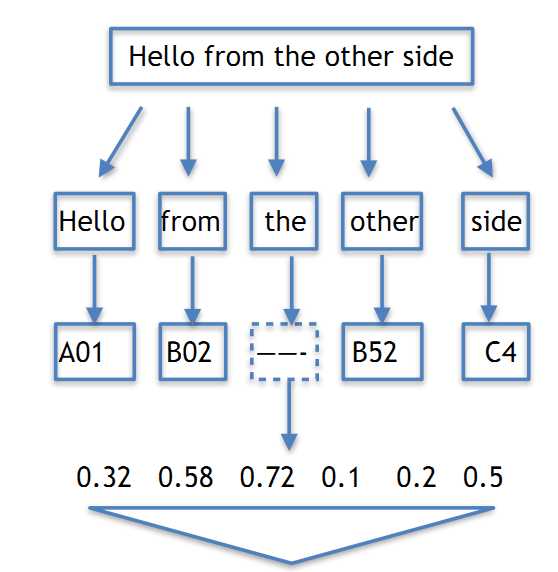

Now suppose we have a new sentence:

    sentence = 'this is cool'

    # Create a vector of the same size as our standard vector
    freq_vector = [0] * size

    # Simple preprocessing
    tokens = nltk.word_tokenize(sentence)

    # For each word in the new sentence
    for word in tokens:
        try:
            # if it occurs in our vocabulary,
            # add 1 at its "standard position"
            freq_vector[standard_position_dict[word]] += 1
        except KeyError:
            # if it is a new word, skip it
            continue

    print(freq_vector)

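The first position stands for 'this', which occurs once; the second stands for 'is', which also occurs once; 'cool' is not in the vocabulary, so every other position stays 0:

    [1, 1, 0, 0, 0, 0, 0]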
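
The loop above can be wrapped in a reusable function. This sketch (hypothetical name `to_count_vector`) uses `dict.get` instead of `try`/`except KeyError` to skip out-of-vocabulary words, and takes the position table printed earlier as input.

```python
def to_count_vector(tokens, position_dict):
    """Bag-of-words count vector; words missing from position_dict are ignored."""
    vector = [0] * len(position_dict)
    for word in tokens:
        position = position_dict.get(word)
        if position is not None:  # position 0 is falsy, so test for None explicitly
            vector[position] += 1
    return vector


# The position table printed earlier in the post.
standard_position_dict = {'this': 0, 'is': 1, 'my': 2, 'sentence': 3, 'life': 4, 'the': 5, 'day': 6}
print(to_count_vector("this is cool".split(), standard_position_dict))
# [1, 1, 0, 0, 0, 0, 0]
```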

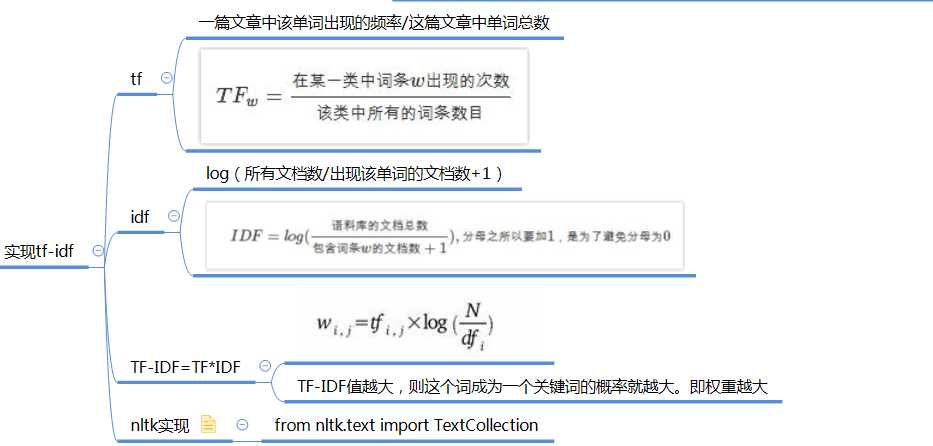

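TF-IDF with NLTK's `TextCollection`:

    import nltk
    from nltk.text import TextCollection

    sents = ['this is sentence one', 'this is sentence two', 'this is sentence three']
    sents = [nltk.word_tokenize(sent) for sent in sents]
    corpus = TextCollection(sents)

    # tf-idf can be computed directly
    # (term: a term in a sentence; text: that tokenized sentence)
    print(corpus.idf('three'))
    print(corpus.tf('four', nltk.word_tokenize('this is a sentence four')))
    print(corpus.tf_idf('four', nltk.word_tokenize('this is a sentence four')))

Output:

    1.0986122886681098
    0.2
    0.0

Here idf('three') = ln(3/1) because 'three' occurs in one of the three texts, and tf('four') = 1/5. 'four' occurs in none of the texts, for which NLTK returns an idf of 0.0, so its tf-idf is 0.2 × 0.0 = 0.0.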
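
The numbers above follow from the definitions `TextCollection` uses: tf is the term's count divided by the text's length, idf is the natural log of (number of texts / number of texts containing the term), with 0.0 when no text contains it, and tf-idf is their product. A plain-Python sketch of those same definitions; the function names mirror NLTK's methods but are reimplemented here.

```python
import math


def tf(term, text):
    """Term frequency: occurrences of term divided by the number of tokens in text."""
    return text.count(term) / len(text)


def idf(term, texts):
    """Inverse document frequency: ln(N / df), or 0.0 if the term occurs in no text."""
    df = sum(1 for text in texts if term in text)
    return math.log(len(texts) / df) if df else 0.0


def tf_idf(term, text, texts):
    return tf(term, text) * idf(term, texts)


texts = [s.split() for s in ["this is sentence one", "this is sentence two", "this is sentence three"]]
query = "this is a sentence four".split()
print(idf("three", texts))           # ln(3 / 1)
print(tf("four", query))             # 1 / 5
print(tf_idf("four", query, texts))  # 'four' is in no text, so idf is 0.0
```

Note that a word occurring in every text, such as `this`, also gets an idf of ln(3/3) = 0, which is how tf-idf discounts ubiquitous words.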

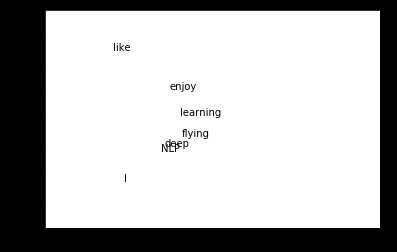

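Word vectors from the SVD of a word co-occurrence matrix:

    # Jupyter/IPython only; remove this line when running as a plain script
    %matplotlib inline

    import numpy as np
    import matplotlib.pyplot as plt

    la = np.linalg

    # 8 tokens, one per row/column of X
    words = ['I', 'like', 'enjoy', 'deep', 'learning', 'NLP', 'flying', '.']
    # Symmetric co-occurrence counts: X[i][j] = how often words[i] appears next to words[j]
    X = np.array([[0, 2, 1, 0, 0, 0, 0, 0],
                  [2, 0, 0, 1, 0, 1, 0, 0],
                  [1, 0, 0, 0, 0, 0, 1, 0],
                  [0, 1, 0, 0, 1, 0, 0, 0],
                  [0, 0, 0, 1, 0, 0, 0, 1],
                  [0, 1, 0, 0, 0, 0, 0, 1],
                  [0, 0, 1, 0, 0, 0, 0, 1],
                  [0, 0, 0, 0, 1, 1, 1, 0]])

    U, s, Vh = la.svd(X, full_matrices=False)
    # print(U, s, Vh)

    # Plot each word at the coordinates given by the first two columns of U
    for i in range(len(words)):
        plt.text(U[i, 0], U[i, 1], words[i])
    plt.xlim(-1, 1)
    plt.ylim(-1, 1)
    plt.show()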
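
Where does `X` come from? One toy corpus that reproduces it exactly is the three sentences "I like deep learning. I like NLP. I enjoy flying.", with `.` as a token and neighbours counted within a window of one word inside each sentence. That corpus is an assumption inferred from the matrix; the sketch below (hypothetical function name) builds the counts in plain Python so they can be checked row by row against `X`.

```python
def cooccurrence_matrix(sentences, vocab, window=1):
    """Symmetric word-word co-occurrence counts within `window` tokens, per sentence."""
    index = {word: i for i, word in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in vocab]
    for tokens in sentences:
        for i, word in enumerate(tokens):
            # Look only to the right; updating both cells keeps the matrix symmetric.
            for j in range(i + 1, min(i + 1 + window, len(tokens))):
                a, b = index[word], index[tokens[j]]
                matrix[a][b] += 1
                matrix[b][a] += 1
    return matrix


vocab = ['I', 'like', 'enjoy', 'deep', 'learning', 'NLP', 'flying', '.']
# Assumed toy corpus, already tokenized; each sentence ends with '.'.
sentences = [
    ['I', 'like', 'deep', 'learning', '.'],
    ['I', 'like', 'NLP', '.'],
    ['I', 'enjoy', 'flying', '.'],
]
for word, row in zip(vocab, cooccurrence_matrix(sentences, vocab)):
    print(f"{word:>8} {row}")
```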

Simple sentiment analysis

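    import nltk

    words = nltk.word_tokenize('I am very happy,i love you')

    # Load the AFINN-111 word list: one word and its integer score per line, tab-separated
    sentiment_dictionary = {}
    with open('data/AFINN/AFINN-111.txt') as f:
        for line in f:
            word, score = line.split('\t')
            sentiment_dictionary[word] = int(score)

    # With the scores stored in a dict, walk through the sentence and add up
    # the value of each word: words in the dict contribute their score,
    # all other words contribute 0
    total_score = sum(sentiment_dictionary.get(word, 0) for word in words)

    # This gives a sentiment score for the sentence
    print(total_score)

Output:

    6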
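
The code above assumes one lexicon entry per line, a term and an integer score separated by a tab. This sketch parses that format from an in-memory string so it runs without the AFINN file; the three entries and their scores are illustrative only, not copied from AFINN-111, and the function names are hypothetical. It also lowercases tokens before lookup, assuming the lexicon's terms are lowercase, since the sentence above contains a capital `I`.

```python
import io


def load_lexicon(lines):
    """Parse 'term<TAB>score' lines into a dict; blank lines are skipped."""
    lexicon = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            continue
        # rsplit keeps multi-word terms (which contain spaces, not tabs) intact
        term, score = line.rsplit("\t", 1)
        lexicon[term] = int(score)
    return lexicon


def sentiment_score(tokens, lexicon):
    """Sum of lexicon scores; unknown tokens count as 0; lookup is case-insensitive."""
    return sum(lexicon.get(token.lower(), 0) for token in tokens)


# Illustrative entries in the same tab-separated format (scores chosen for this sketch).
sample = io.StringIO("happy\t3\nlove\t3\nhate\t-3\n")
lexicon = load_lexicon(sample)
tokens = ['I', 'am', 'very', 'happy', ',', 'i', 'love', 'you']
print(sentiment_score(tokens, lexicon))  # 3 + 3 = 6
```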

Sentiment analysis with machine learning

    from nltk.classify import NaiveBayesClassifier

    # Make up a tiny training set
    s1 = 'this is a good book'
    s2 = 'this is a awesome book'
    s3 = 'this is a bad book'
    s4 = 'this is a terrible book'

    def preprocess(s):
        # Func: process a sentence.
        # Here we simply use split() to separate the words;
        # many more processing methods could obviously be used.
        #
        # The return value looks like this:
        # {'this': True, 'is': True, 'a': True, 'good': True, 'book': True}
        # Each key is an fname, one for every word that occurs in the text;
        # each value is an fval, the value attached to that word.
        # Here the simplest value, True, just means "this word occurs in the
        # current sentence". Later this can be upgraded to richer fvals,
        # such as word2vec.
        return {word: True for word in s.lower().split()}

    # Put the training set into the standard (featureset, label) format
    training_data = [(preprocess(s1), 'pos'),
                     (preprocess(s2), 'pos'),
                     (preprocess(s3), 'neg'),
                     (preprocess(s4), 'neg')]

    # Feed it to the model
    model = NaiveBayesClassifier.train(training_data)

    # Print the result
    print(model.classify(preprocess('this is a good book')))

Output:

    pos
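
To see roughly what a Naive Bayes classifier does with these word-presence features, here is a minimal from-scratch version under simplifying assumptions: it scores only the words present in the sentence, estimates P(word present | label) per training sentence with add-one (Laplace) smoothing, and picks the label with the highest log-probability. NLTK's `NaiveBayesClassifier` differs in details (for example, it smooths with an expected-likelihood estimate by default), so treat this as an illustration, not a reimplementation. The function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(training_data):
    """training_data: list of (featureset, label), where featureset maps word -> True."""
    label_counts = Counter(label for _, label in training_data)
    # label -> number of training sentences of that label containing each word
    word_counts = defaultdict(Counter)
    for features, label in training_data:
        for word in features:
            word_counts[label][word] += 1
    return label_counts, word_counts


def classify(model, features):
    label_counts, word_counts = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # log prior P(label)
        for word in features:
            # add-one smoothing over the two outcomes (present / absent)
            score += math.log((word_counts[label][word] + 1) / (n + 2))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def preprocess(s):
    return {word: True for word in s.lower().split()}


training_data = [(preprocess('this is a good book'), 'pos'),
                 (preprocess('this is a awesome book'), 'pos'),
                 (preprocess('this is a bad book'), 'neg'),
                 (preprocess('this is a terrible book'), 'neg')]
model = train_naive_bayes(training_data)
print(classify(model, preprocess('this is a good book')))  # pos
```

Words shared by every sentence (`this`, `is`, `a`, `book`) get the same probability under both labels, so only `good` tips the decision here.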


Original post: https://www.cnblogs.com/zhangyafei/p/10618585.html