Tags: dfs, complete, schema, group, man, home, test, exe, ror

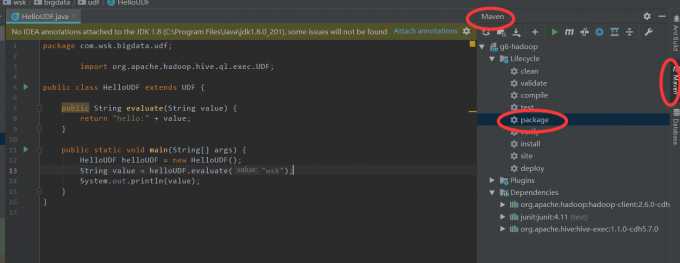

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>com.wsk.bigdata</groupId>
      <artifactId>g6-hadoop</artifactId>
      <version>1.0</version>
      <name>g6-hadoop</name>

      <properties>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <hadoop.version>2.6.0-cdh5.7.0</hadoop.version>
        <hive.version>1.1.0-cdh5.7.0</hive.version>
      </properties>

      <!-- Add the CDH repository -->
      <repositories>
        <repository>
          <id>nexus-aliyun</id>
          <url>http://maven.aliyun.com/nexus/content/groups/public</url>
        </repository>
        <repository>
          <id>cloudera</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
        </repository>
      </repositories>

      <dependencies>
        <!-- Add the Hadoop dependency -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>${hadoop.version}</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.11</version>
          <scope>test</scope>
        </dependency>
        <!-- Add the Hive dependency -->
        <dependency>
          <groupId>org.apache.hive</groupId>
          <artifactId>hive-exec</artifactId>
          <version>${hive.version}</version>
        </dependency>
      </dependencies>

      <build>
        <plugins>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.4</version>
            <configuration>
              <source>1.7</source>
              <target>1.7</target>
              <encoding>UTF-8</encoding>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </project>

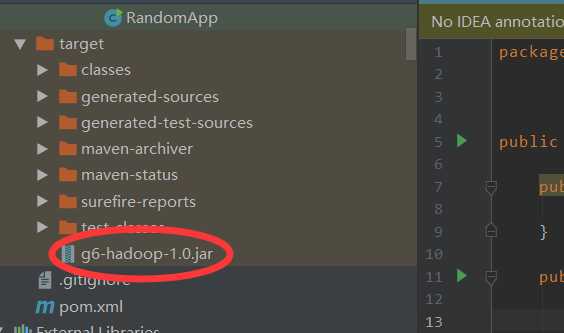

    [hadoop@hadoop001 lib]$ rz
    [hadoop@hadoop001 lib]$ ll g6-hadoop-1.0.jar
    -rw-r--r--. 1 hadoop hadoop 11447 Apr 19 2019 g6-hadoop-1.0.jar

hive> add jar /home/hadoop/data/hive/g6-hadoop-1.0.jar;

CREATE TEMPORARY FUNCTION function_name AS class_name;

function_name: the function name

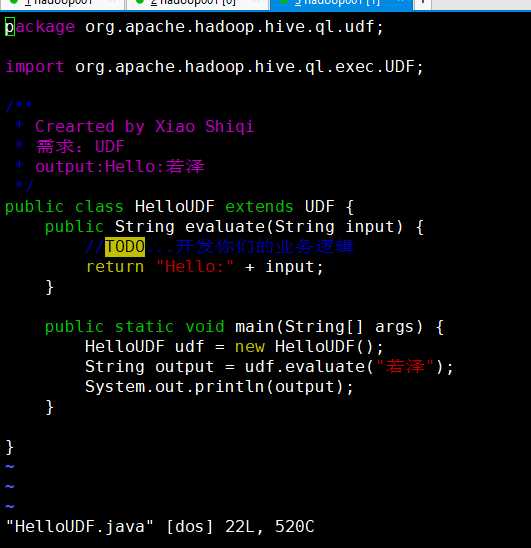

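class_name: the fully qualified class name (package name + class name). This is whatever follows the package keyword on the first line of your UDF source file, followed by a dot and the class name.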
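To make the package-plus-class rule concrete, here is a minimal sketch of what a class like HelloUDF might look like. The post does not show the UDF source as text, so the body is an assumption, chosen to reproduce the Hello:17 output seen in the session that follows. In the real project the class would declare the package and extend org.apache.hadoop.hive.ql.exec.UDF from hive-exec. Both are commented out here so the sketch compiles without Hive on the classpath.

```java
// In the real project the file would start with the package declaration.
// Its value is the first half of class_name:
//   package org.apache.hadoop.hive.ql.udf;
// The class would also extend org.apache.hadoop.hive.ql.exec.UDF (hive-exec).
// Both are omitted so this sketch runs standalone (assumption, not the author's code).

public class HelloUDF /* extends UDF */ {

    // Hive's simple UDF mechanism finds a method named evaluate by reflection.
    // The "Hello:" prefix is assumed from the output shown in this post.
    public String evaluate(String input) {
        return "Hello:" + input;
    }

    // Local smoke test, no Hive required.
    public static void main(String[] args) {
        System.out.println(new HelloUDF().evaluate("17"));
    }
}
```

With the package line restored, class_name for this class is org.apache.hadoop.hive.ql.udf.HelloUDF: the package, a dot, then the class name.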

    hive> CREATE TEMPORARY FUNCTION HelloUDF AS 'org.apache.hadoop.hive.ql.udf.HelloUDF';
    OK
    Time taken: 0.485 seconds
    hive> show functions;

HelloUDF now appears in the output of show functions.

    hive> select HelloUDF('17');
    OK
    Hello:17

Check the metadata in MySQL. Because this is a temporary function, the metastore holds no record of it:

    mysql> select * from funcs;
    Empty set (0.11 sec)

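    hive> DROP TEMPORARY FUNCTION IF EXISTS HelloUDF;
    OK
    Time taken: 0.003 seconds
    hive> select HelloUDF('17');
    FAILED: SemanticException [Error 10011]: Line 1:7 Invalid function 'HelloUDF'

Dropping the function is optional. A temporary function only lives in the current session, so opening a new session gets rid of it as well.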

CREATE FUNCTION function_name AS class_name USING JAR path;

function_name: the function name

class_name: the fully qualified class name (package name + class name)

path: the HDFS path of the jar
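As an illustration of how the three placeholders fit together, the sketch below assembles a permanent-function statement from the values used in this post. The class CreateFunctionDdl and its createFunction helper are hypothetical names introduced only for this example. They are not part of Hive.

```java
public class CreateFunctionDdl {

    // Hypothetical helper: fills function_name, class_name and path into the
    // CREATE FUNCTION ... USING JAR template. It does no escaping or validation.
    static String createFunction(String functionName, String className, String jarUri) {
        return String.format("CREATE FUNCTION %s AS '%s' USING JAR '%s';",
                functionName, className, jarUri);
    }

    public static void main(String[] args) {
        // Values taken from this post: function name, package + class, HDFS jar path.
        System.out.println(createFunction(
                "HelloUDF",
                "org.apache.hadoop.hive.ql.udf.HelloUDF",
                "hdfs://hadoop001:9000/lib/g6-hadoop-1.0.jar"));
    }
}
```

Note the straight single quotes around the class name and the jar URI. Curly quotes pasted from a web page will not parse in Hive.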

    [hadoop@hadoop hive-1.1.0-cdh5.7.0]$ hadoop fs -mkdir /lib
    [hadoop@hadoop hive-1.1.0-cdh5.7.0]$ hadoop fs -put /home/hadoop/data/hive/hive_UDF.jar /lib/

    [hadoop@hadoop001 ~]$ hadoop fs -mkdir /lib
    [hadoop@hadoop001 ~]$ hadoop fs -ls /lib
    # Upload the local jar to the /lib/ directory on HDFS
    [hadoop@hadoop001 ~]$ hadoop fs -put ~/lib/g6-hadoop-1.0.jar /lib/
    [hadoop@hadoop001 ~]$ hadoop fs -ls /lib

    CREATE FUNCTION HelloUDF AS 'org.apache.hadoop.hive.ql.udf.HelloUDF' USING JAR 'hdfs://hadoop001:9000/lib/g6-hadoop-1.0.jar';

    # Test
    hive> select HelloUDF("17");
    OK
    hello:17

    mysql> select * from funcs;
    +---------+----------------------------------------+-------------+-------+-----------+-----------+------------+------------+
    | FUNC_ID | CLASS_NAME                             | CREATE_TIME | DB_ID | FUNC_NAME | FUNC_TYPE | OWNER_NAME | OWNER_TYPE |
    +---------+----------------------------------------+-------------+-------+-----------+-----------+------------+------------+
    |       1 | org.apache.hadoop.hive.ql.udf.HelloUDF |  1555263915 |     6 | HelloUDF  |         1 | NULL       | USER       |
    +---------+----------------------------------------+-------------+-------+-----------+-----------+------------+------------+

Official reference: LanguageManual UDF


Original article: https://www.cnblogs.com/xuziyu/p/10754592.html