
1. Configure the jar dependencies in Maven

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.11</artifactId>
        <version>0.11.0.2</version>
    </dependency>

    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming-kafka-0-8_2.11</artifactId>
        <version>2.2.0</version>
    </dependency>

2. Write the producer program in IDEA

    import java.util

    import org.apache.kafka.clients.producer.{KafkaProducer, ProducerConfig, ProducerRecord}

    import scala.util.Random

    object KafkaProducer {

      def main(args: Array[String]): Unit = {
        // Console equivalent:
        // kafka-console-producer.sh --broker-list master:9092,master:9093 --topic mykafka2
        val brokers = "master:9092,master:9093"
        val topic = "mykafka2"

        // Producer settings: broker list plus String serializers for key and value.
        val props = new util.HashMap[String, Object]()
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, brokers)
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer")
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer")

        val msgPerSec = 2   // messages sent per second
        val wordsPerMsg = 3 // random integers per message

        val producer = new KafkaProducer[String, String](props)

        // Runs until the process is stopped.
        while (true) {
          for (_ <- 1 to msgPerSec) {
            // Join the random integers with spaces. Calling toString before mkString
            // would space out every character of "Vector(...)" instead.
            val str = (1 to wordsPerMsg).map(_ => Random.nextInt(100)).mkString(" ")
            println(str)
            // Null key: the record carries only a value.
            val msg = new ProducerRecord[String, String](topic, null, str)
            producer.send(msg)
          }
          Thread.sleep(1000)
        }
      }
    }

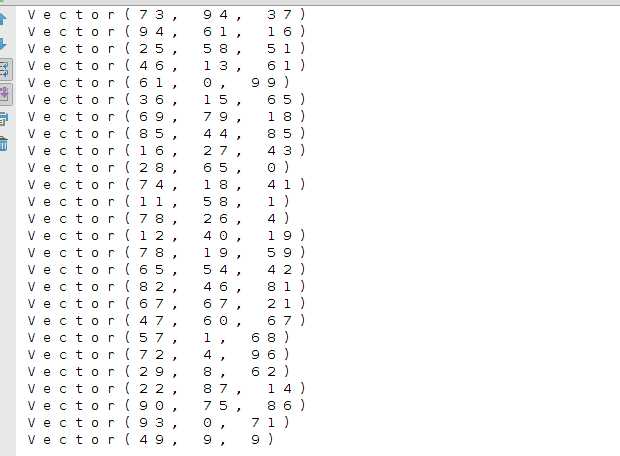
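The message payload is meant to be a few random integers joined by spaces. A minimal sketch of that step on its own, without Kafka, may make it easier to check. The object name `MessageBuilderSketch`, the helper `buildMessage`, and the fixed seed are assumptions made for this illustration and are not part of the original program.

```scala
import scala.util.Random

object MessageBuilderSketch {

  // Join `wordsPerMsg` random integers in [0, bound) with single spaces.
  // `buildMessage` is a hypothetical helper introduced for this sketch.
  def buildMessage(wordsPerMsg: Int, bound: Int = 100, rng: Random = new Random()): String =
    (1 to wordsPerMsg).map(_ => rng.nextInt(bound)).mkString(" ")

  def main(args: Array[String]): Unit = {
    // A fixed seed (an arbitrary choice) makes the output repeatable between runs.
    val rng = new Random(42L)
    (1 to 2).foreach(_ => println(buildMessage(3, rng = rng)))

    // Pitfall: toString first turns the collection into one String, and
    // mkString on a String then puts the separator between every character.
    println((1 to 3).map(identity).toString.mkString(" "))
  }
}
```

Calling `mkString(" ")` directly on the collection of numbers gives one space between numbers, which is the shape the console consumer should display.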

3. View the output in IDEA

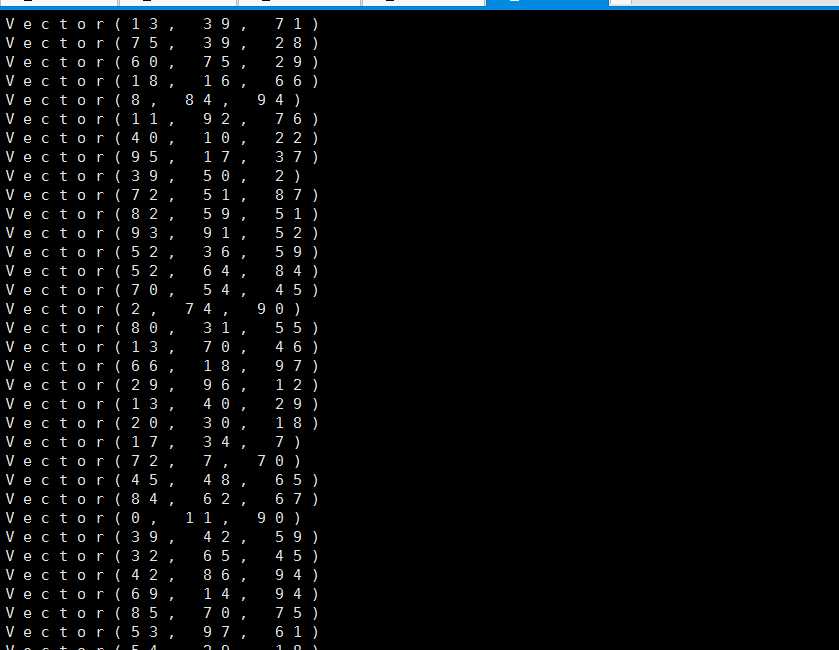

4. Receive the data with a Kafka consumer

    kafka-console-consumer.sh --zookeeper master:12181/kafka0.11 --topic mykafka2
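The same check can probably be done from Scala instead of the console tool. The sketch below uses the `KafkaConsumer` client, which connects to the brokers rather than to ZooKeeper. It assumes the broker list from the producer program (`master:9092,master:9093`); the object name `KafkaConsumerSketch`, the helper `consumerProps`, and the group id `mykafka2-demo-group` are hypothetical names chosen for this illustration.

```scala
import java.util.{Collections, Properties}

import org.apache.kafka.clients.consumer.{ConsumerConfig, KafkaConsumer}

import scala.collection.JavaConverters._

object KafkaConsumerSketch {

  // Build the consumer settings: brokers, consumer group, String deserializers.
  // `consumerProps` is a hypothetical helper introduced for this sketch.
  def consumerProps(brokers: String, groupId: String): Properties = {
    val props = new Properties()
    props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, brokers)
    props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId)
    props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,
      "org.apache.kafka.common.serialization.StringDeserializer")
    props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,
      "org.apache.kafka.common.serialization.StringDeserializer")
    props
  }

  def main(args: Array[String]): Unit = {
    // Broker addresses are taken from the producer program; the group id is made up.
    val consumer = new KafkaConsumer[String, String](
      consumerProps("master:9092,master:9093", "mykafka2-demo-group"))
    consumer.subscribe(Collections.singletonList("mykafka2"))
    try {
      while (true) {
        // poll(long) is the signature offered by the 0.11 client.
        val records = consumer.poll(1000L)
        for (record <- records.asScala) println(record.value())
      }
    } finally {
      consumer.close()
    }
  }
}
```

With the producer running, each printed line should be one message of space-separated integers.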


Original article: https://www.cnblogs.com/BrentBoys/p/10802225.html