
// This script is used to run style transfer models from

// https://github.com/jcjohnson/fast-neural-style using OpenCV

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <iostream>

using namespace cv;

using namespace cv::dnn;

using namespace std;

int main(int argc, char **argv)

{

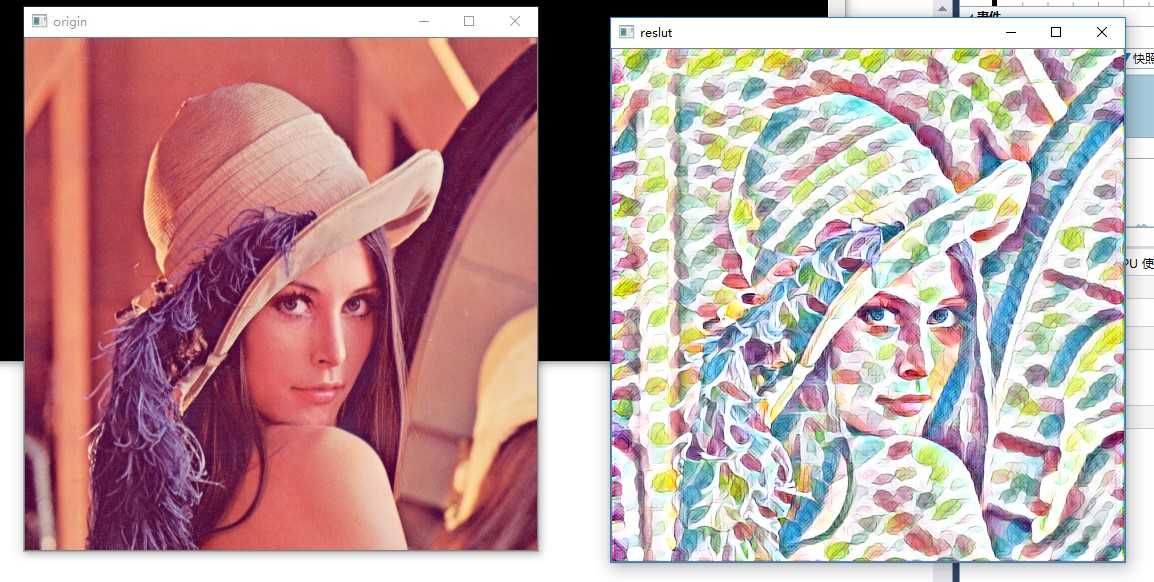

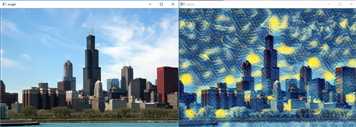

string modelBin = "../../data/testdata/dnn/fast_neural_style_instance_norm_feathers.t7";

string imageFile = "../../data/image/chicago.jpg";

float scale = 1.0;

cv::Scalar mean { 103.939, 116.779, 123.68 };

bool swapRB = false;

bool crop = false;

bool useOpenCL = false;

Mat img = imread(imageFile);

if (img.empty()) {

cout << "Can't read image from file: " << imageFile << endl;

return 2;

}

// Load model

Net net = dnn::readNetFromTorch(modelBin);

if (useOpenCL)

net.setPreferableTarget(DNN_TARGET_OPENCL);

// Create a 4D blob from a frame.

Mat inputBlob = blobFromImage(img, scale, img.size(), mean, swapRB, crop);

// forward pass through the network

net.setInput(inputBlob);

Mat output = net.forward();

// process output: add the per-channel mean back to each output plane

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 0)) += 103.939;

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 1)) += 116.779;

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 2)) += 123.68;

std::vector<cv::Mat> ress;

imagesFromBlob(output, ress);

// show res

Mat res;

ress[0].convertTo(res, CV_8UC3);

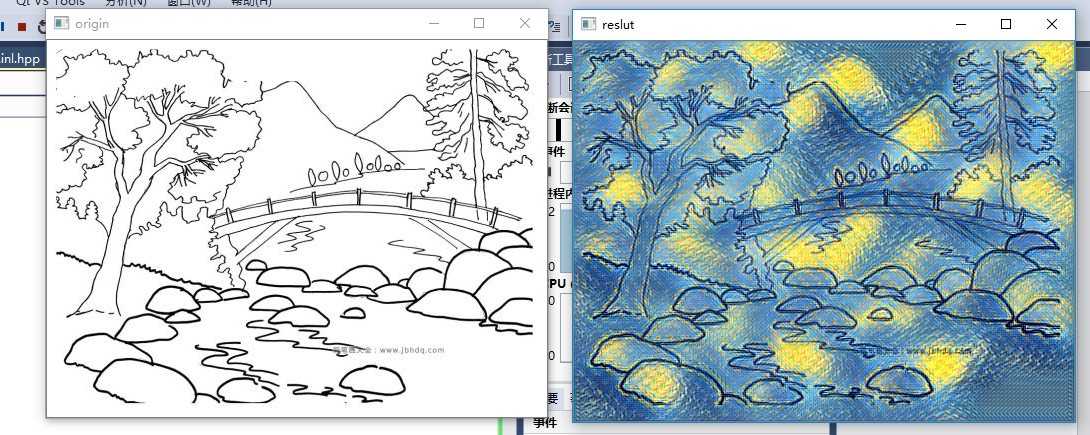

imshow("result", res);

imshow("origin", img);

waitKey();

return 0;

}
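The pre- and post-processing steps in the listing above can be easier to follow when written out by hand. The following is a minimal sketch in plain C++ (no OpenCV), assuming a 3-channel 8-bit image stored as interleaved pixels and a blob stored as three consecutive float planes. The names `Mean`, `toPlanarBlob` and `fromPlanarBlob` are hypothetical helpers made up for this illustration; they are not OpenCV functions, and the rounding of exact halves here may differ from what `convertTo` does.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical type for illustration: one mean value per channel.
using Mean = std::array<float, 3>;

// Interleaved 8-bit pixels (HWC) -> planar float blob (CHW).
// Each value becomes (pixel - mean[c]) * scale, the same arithmetic the
// listing asks blobFromImage to perform with swapRB = false, crop = false.
std::vector<float> toPlanarBlob(const std::vector<std::uint8_t>& hwc,
                                std::size_t height, std::size_t width,
                                const Mean& mean, float scale)
{
    const std::size_t plane = height * width;
    assert(hwc.size() == 3 * plane);
    std::vector<float> chw(3 * plane);
    for (std::size_t i = 0; i < plane; ++i)
        for (std::size_t c = 0; c < 3; ++c)
            chw[c * plane + i] =
                (static_cast<float>(hwc[i * 3 + c]) - mean[c]) * scale;
    return chw;
}

// Planar float blob (CHW) -> interleaved 8-bit pixels (HWC).
// Adds the mean back, rounds to the nearest integer, and clamps to [0, 255],
// mirroring the "+= mean" lines and the conversion to CV_8UC3 in the listing.
std::vector<std::uint8_t> fromPlanarBlob(const std::vector<float>& chw,
                                         std::size_t height, std::size_t width,
                                         const Mean& mean)
{
    const std::size_t plane = height * width;
    assert(chw.size() == 3 * plane);
    std::vector<std::uint8_t> hwc(3 * plane);
    for (std::size_t i = 0; i < plane; ++i)
        for (std::size_t c = 0; c < 3; ++c) {
            const float v = std::round(chw[c * plane + i] + mean[c]);
            hwc[i * 3 + c] = static_cast<std::uint8_t>(
                std::min(255.0f, std::max(0.0f, v)));
        }
    return hwc;
}
```

With a scale of 1.0, feeding the result of `toPlanarBlob` straight into `fromPlanarBlob` with the same mean should give back the original pixels, which is a quick way to check that the mean used after the forward pass matches the one used before it.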

To train new style transfer models, first use the script scripts/make_style_dataset.py to create an HDF5 file from folders of images. You will then use the script train.lua to actually train models.

You first need to install the header files for Python 2.7 and HDF5. On Ubuntu you should be able to do the following:

You can then install Python dependencies into a virtual environment:

With the virtual environment activated, you can use the script scripts/make_style_dataset.py to create an HDF5 file from a directory of training images and a directory of validation images:

All models in this repository were trained using the images from the COCO dataset.

The preprocessing script has the following flags:

- --train_dir: Path to a directory of training images.
- --val_dir: Path to a directory of validation images.
- --output_file: HDF5 file where output will be written.
- --height, --width: All images will be resized to this size.
- --max_images: The maximum number of images to use for training and validation; -1 means use all images in the directories.
- --num_workers: The number of threads to use.

After creating an HDF5 dataset file, you can use the script train.lua to train feedforward style transfer models. First you need to download a Torch version of the VGG-16 model by running the script

This will download the file vgg16.t7 (528 MB) to the models directory.

You will also need to install deepmind/torch-hdf5, which gives HDF5 bindings for Torch:

luarocks install https://raw.githubusercontent.com/deepmind/torch-hdf5/master/hdf5-0-0.rockspec

You can then train a model with the script train.lua. For basic usage the command will look something like this:

The full set of options for this script is described here.

OpenCV dnn module extension study (1): style transfer


Original article: https://www.cnblogs.com/jsxyhelu/p/10804243.html