标签:tar ogr like ref col dom pipeline strong git

1 D:\USERDATA\python>scrapy startproject duanping 2 New Scrapy project ‘duanping‘, using template directory ‘c:\users\www1707\appdata\local\programs\python\python37\lib\site-packages\scrapy\templates\project‘, created in: 3 D:\USERDATA\python\duanping 4 5 You can start your first spider with: 6 cd duanping 7 scrapy genspider example example.com 8 9 D:\USERDATA\python>

1 import scrapy 2 import bs4 3 import re 4 import requests 5 import math 6 from ..items import DuanpingItem 7 8 class DuanpingItemSpider(scrapy.Spider): 9 name = ‘duanping‘ 10 allowed_domains = [‘book.douban.com‘] 11 start_urls = [‘https://book.douban.com/top250?start=0‘,‘https://book.douban.com/top250?start=0‘] 12 13 def parse(self,response): 14 bs = bs4.BeautifulSoup(response.text,‘html.parser‘) 15 datas = bs.find_all(‘a‘,class_=‘nbg‘) 16 for data in datas: 17 book_url = data[‘href‘] 18 yield scrapy.Request(book_url,callback=self.parse_book) 19 20 def parse_book(self,response): 21 book_url = str(response).split(‘ ‘)[1].replace(‘>‘,‘‘) 22 print(book_url) 23 bs = bs4.BeautifulSoup(response.text,‘html.parser‘) 24 comments = int(bs.find(‘a‘,href=re.compile(‘^https://book.douban.com/subject/.*/comments/‘)).text.split(‘ ‘)[1]) 25 pages = math.ceil(comments / 20) + 1 26 #for i in range(1,pages): 27 for i in range(1,3): 28 comments_url = ‘{}comments/hot?p={}‘.format(book_url,i) 29 print(comments_url) 30 yield scrapy.Request(comments_url,callback=self.parse_comment) 31 32 def parse_comment(self,response): 33 bs = bs4.BeautifulSoup(response.text,‘html.parser‘) 34 book_name = bs.find(‘a‘,href=re.compile(‘^https://book.douban.com/subject/‘)).text 35 datas = bs.find_all(‘li‘,class_=‘comment-item‘) 36 for data in datas: 37 item = DuanpingItem() 38 item[‘book_name‘] = book_name 39 item[‘user_id‘]= data.find_all(‘a‘,href=re.compile(‘^https://www.douban.com/people/‘))[1].text 40 item[‘comment‘] = data.find(‘span‘,class_=‘short‘).text 41 yield item

1 import scrapy 2 3 class DuanpingItem(scrapy.Item): 4 book_name = scrapy.Field() 5 user_id = scrapy.Field() 6 comment = scrapy.Field()

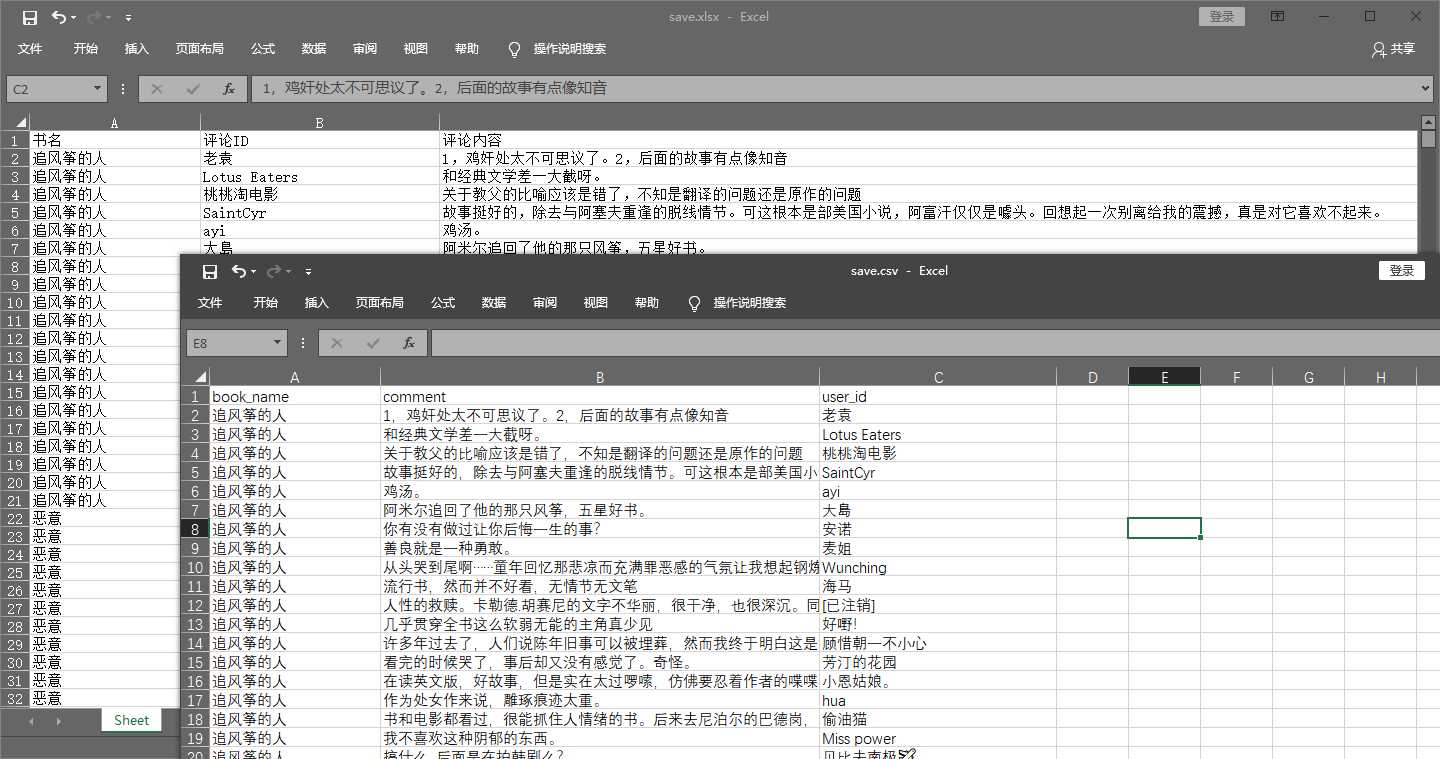

1 import openpyxl 2 3 class DuanpingPipeline(object): 4 def __init__(self): 5 self.wb = openpyxl.Workbook() 6 self.ws = self.wb.active 7 self.ws.append([‘书名‘,‘评论ID‘,‘评论内容‘]) 8 9 def process_item(self, item, spider): 10 line = [item[‘book_name‘],item[‘user_id‘],item[‘comment‘]] 11 self.ws.append(line) 12 return item 13 def close_spider(self,spider): 14 self.wb.save(‘./save.xlsx‘) 15 self.wb.close()

1 BOT_NAME = ‘duanping‘ 2 3 SPIDER_MODULES = [‘duanping.spiders‘] 4 NEWSPIDER_MODULE = ‘duanping.spiders‘ 5 6 USER_AGENT = ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36‘ 7 8 ROBOTSTXT_OBEY = False 9 10 DOWNLOAD_DELAY = 1 11 12 ITEM_PIPELINES = { 13 ‘duanping.pipelines.DuanpingPipeline‘: 300, 14 } 15 16 FEED_URI = ‘./save.csv‘ 17 FEED_FORMAT=‘CSV‘ 18 FEED_EXPORT_ENCODING=‘utf-8-sig‘

以下是草稿部分

1 <li class="comment-item" data-cid="693413905"> 2 <div class="avatar"> 3 <a title="福根儿" href="https://www.douban.com/people/fugen/"> 4 <img src="https://img3.doubanio.com/icon/u3825598-141.jpg"> 5 </a> 6 </div> 7 <div class="comment"> 8 <h3> 9 <span class="comment-vote"> 10 <span id="c-693413905" class="vote-count">4756</span> 11 <a href="javascript:;" id="btn-693413905" class="j a_show_login" data-cid="693413905">有用</a> 12 </span> 13 <span class="comment-info"> 14 <a href="https://www.douban.com/people/fugen/">福根儿</a> 15 <span class="user-stars allstar50 rating" title="力荐"></span> 16 <span>2013-09-18</span> 17 </span> 18 </h3> 19 <p class="comment-content"> 20 21 <span class="short">为你,千千万万遍。</span> 22 </p> 23 </div> 24 </li>

标签:tar ogr like ref col dom pipeline strong git

原文地址:https://www.cnblogs.com/www1707/p/10850700.html