标签:定义 全连接 cells xtend style help ascii tensor 对话

Tensorflow版本:

GPU: 1.12.0

理论部分:

参考:https://www.bilibili.com/video/av19080685,讲解的超级详细。

代码部分:

1、语料库预处理

2、搭建模型计算图

3、启动session会话,进行模型训练。

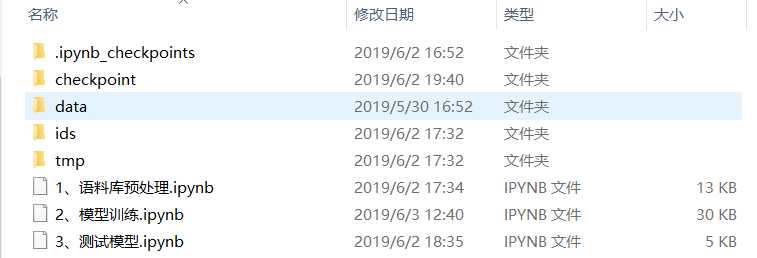

文件夹图示如下:其中data文件夹存储对话语料,ids文件夹存储词语和id之间的映射关系,tmp文件夹存储了整个的字典以及word2vec模型,checkpoint文件存储了tensorflow训练的模型。

进入代码实战部分:

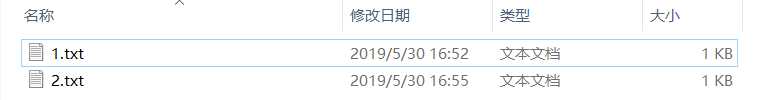

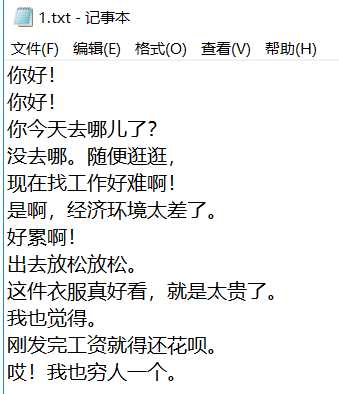

首先得准备一些聊天机器人的语料库,这个可以自己搜索。这里自己手写了两个txt文件的对话,便于演示如何使用tensorflow搭建聊天机器人的流程。

1.1 读取语料库

1 import os 2 import jieba 3 import json 4 from gensim.models import Word2Vec 5 corpus_path = ‘./data/‘ 6 corpus_files = os.listdir(corpus_path) 7 corpus = [] 8 for corpus_file in corpus_files: 9 with open(os.path.join(corpus_path, corpus_file), ‘r‘, encoding=‘utf-8‘) as f: 10 lines = f.readlines() 11 corpus.extend(lines) 12 corpus = [sentence.replace(‘\n‘, ‘‘) for sentence in corpus] 13 corpus = [sentence.replace(‘\ufeff‘, ‘‘) for sentence in corpus] 14 print(‘语料库读取完成‘.center(30, ‘=‘))

1.2 分词,构建词典

1 corpus_cut = [jieba.lcut(sentence) for sentence in corpus] 2 print(‘分词完成‘.center(30, ‘=‘)) 3 from tkinter import _flatten 4 tem = _flatten(corpus_cut) 5 _PAD, _BOS, _EOS, _UNK = ‘_PAD‘, ‘_BOS‘, ‘_EOS‘, ‘_UNK‘ 6 all_dict = [_PAD, _BOS, _EOS, _UNK] + list(set(tem)) 7 print(‘词典构建完成‘.center(30, ‘=‘))

1.3 构建映射关系

1 id2word = {i: j for i, j in enumerate(all_dict)} 2 word2id = {j: i for i, j in enumerate(all_dict)} 3 # dict(zip(id2word.values(), id2word.keys())) 4 print(‘映射关系构建完成‘.center(30, ‘=‘))

1.4 语料转为id向量

1 ids = [[word2id.get(word, word2id[_UNK]) for word in sentence] for sentence in corpus_cut]

1.5 将语料拆分成source、target(问、答数据集)

1 # 拆分成问答数据集 2 fromids = ids[::2] 3 toids = ids[1::2] 4 len(fromids) == len(toids)

1.6 训练词向量

1 emb_size = 50 2 tmp = [list(map(str, id)) for id in ids] 3 if not os.path.exists(‘./tmp/word2vec.model‘): 4 model = Word2Vec(tmp, size=emb_size, window=10, min_count=1, workers=-1) 5 model.save(‘./tmp/word2vec.model‘) 6 else: 7 print(‘词向量模型已构建,可直接调取‘.center(50, ‘=‘))

1.7 保存文件

1 # 用记事本存储 2 with open(‘./tmp/fromids.txt‘, ‘w‘, encoding=‘utf-8‘) as f: 3 f.writelines([‘ ‘.join(map(str, fromid)) for fromid in fromids]) 4 # 用json存储 5 with open(‘./ids/ids.json‘, ‘w‘) as f: 6 json.dump({‘fromids‘:fromids, ‘toids‘:toids}, fp=f, ensure_ascii=False)

2、搭建模型计算图

2.1 读取文件

1 with open(‘./ids/ids.json‘, ‘r‘) as f: 2 tmp = json.load(f) 3 fromids = tmp[‘fromids‘] 4 toids = tmp[‘toids‘] 5 with open(‘./tmp/dic.txt‘, ‘r‘, encoding=‘utf-8‘) as f: 6 all_dict = f.read().split(‘\n‘) 7 word2id = {j: i for i, j in enumerate(all_dict)} 8 id2word = {i: j for i, j in enumerate(all_dict)} 9 model = Word2Vec.load(‘./tmp/word2vec.model‘) 10 emb_size = model.layer1_size

2.2 构建词向量矩阵

1 vocab_size = len(all_dict) # 词典大小 2 corpus_size = len(fromids) # 对话长度 3 4 embedding_matrix = np.zeros((vocab_size, emb_size), dtype=np.float32) 5 tmp = np.diag([1] * emb_size) # 对于词典中不存在的词语 6 7 k = 0 8 for i in range(vocab_size): 9 try: 10 embedding_matrix[i] = model.wv[str(i)] 11 except: 12 embedding_matrix[i] = tmp[k] 13 k += 1

2.3 统一长度

1 from_length = [len(i) for i in fromids] 2 max_from_length = max(from_length) 3 source = [i + [word2id[‘_PAD‘]] * (max_from_length - len(i)) for i in fromids] 4 to_length = [len(i) for i in toids] 5 max_to_length = max(to_length) 6 target = [i + [word2id[‘_PAD‘]] * (max_to_length - len(i)) for i in toids]

2.4 定义Tensor

1 num_layers = 2 # 神经元层数 2 hidden_size = 100 # 隐藏神经元个数 3 learning_rate = 0.001 # 学习率,0.0001-0.001 4 max_inference_sequence_length = 35 5 with tf.variable_scope(‘tensor‘, reuse=tf.AUTO_REUSE): 6 # 输入 7 input_data = tf.placeholder(tf.int32, [corpus_size, None], name=‘source‘) 8 # 输出 9 output_data = tf.placeholder(tf.int32, [corpus_size, None], name=‘target‘) 10 # 输入句子的长度 11 input_sequence_length = tf.placeholder(tf.int32, [corpus_size,], name=‘source_sequence_length‘) 12 # 输出句子的长度 13 output_sequence_length = tf.placeholder(tf.int32, [corpus_size,], name=‘target_sequence_length‘) 14 # 输出句子的最大长度 15 max_output_sequence_length = tf.reduce_max(output_sequence_length) 16 # 词向量矩阵 17 emb_matrix = tf.constant(embedding_matrix, name=‘embedding_matrix‘, dtype=tf.float32)

2.5 Encoder

1 def get_lstm_cell(hidden_size): 2 lstm_cell = tf.contrib.rnn.LSTMCell( 3 num_units=hidden_size, 4 initializer=tf.random_uniform_initializer(minval=-0.1, maxval=0.1, seed=2019) 5 ) 6 return lstm_cell 7 def encoder(hidden_size, num_layers, emb_matrix, input_data): 8 encoder_embedding_input = tf.nn.embedding_lookup(params=emb_matrix, ids=input_data) 9 encoder_cells = tf.contrib.rnn.MultiRNNCell( 10 [get_lstm_cell(hidden_size) for i in range(num_layers)] 11 ) 12 encoder_output, encoder_state= tf.nn.dynamic_rnn(cell=encoder_cells, 13 inputs=encoder_embedding_input, 14 sequence_length=input_sequence_length, 15 dtype=tf.float32 16 ) 17 return encoder_output, encoder_state

2.6.1 普通Decoder

1 def decoder(output_data, corpus_size, word2id, emb_matrix, hidden_size, num_layers, 2 vocab_size, output_sequence_length, max_output_sequence_length, max_inference_sequence_length, encoder_state): 3 # numpy数据切片 output_data[0:corpus_size:1,0:-1:1],删除output_data最后一列数据 4 ending = tf.strided_slice(output_data, begin=[0, 0], end=[corpus_size, -1], strides=[1, 1]) 5 begin_sigmal = tf.fill(dims=[corpus_size, 1], value=word2id[‘_BOS‘]) 6 decoder_input_data = tf.concat([begin_sigmal, ending], axis=1, name=‘decoder_input_data‘) 7 decoder_embedding_input = tf.nn.embedding_lookup(params=emb_matrix, ids=decoder_input_data) 8 decoder_cells = tf.contrib.rnn.MultiRNNCell([get_lstm_cell(hidden_size) for i in range(num_layers)]) 9 project_layer = tf.layers.Dense( 10 units=vocab_size, # 全连接层神经元个数 11 kernel_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.1) # 权重矩阵初始化 12 ) 13 with tf.variable_scope(‘Decoder‘): 14 # Helper对象 15 training_helper = tf.contrib.seq2seq.TrainingHelper( 16 inputs=decoder_embedding_input, 17 sequence_length=output_sequence_length) 18 # Basic Decoder 19 training_decoder = tf.contrib.seq2seq.BasicDecoder( 20 cell=decoder_cells, 21 helper=training_helper, 22 output_layer=project_layer, 23 initial_state=encoder_state 24 ) 25 # Dynamic RNN 26 training_final_output, training_final_state, training_sequence_length = tf.contrib.seq2seq.dynamic_decode( 27 decoder=training_decoder, 28 maximum_iterations=max_output_sequence_length, 29 impute_finished=True) 30 with tf.variable_scope(‘Decoder‘, reuse=True): 31 # Helper对象 32 start_tokens = tf.tile(input=tf.constant(value=[word2id[‘_BOS‘]], dtype=tf.int32), 33 multiples=[corpus_size], name=‘start_tokens‘) 34 inference_helper = tf.contrib.seq2seq.GreedyEmbeddingHelper( 35 embedding=emb_matrix, 36 start_tokens=start_tokens, 37 end_token=word2id[‘_EOS‘]) 38 # Basic Decoder 39 inference_decoder = tf.contrib.seq2seq.BasicDecoder( 40 cell=decoder_cells, 41 helper=inference_helper, 42 output_layer=project_layer, 43 initial_state=encoder_state 44 ) 45 # Dynamic RNN 46 inference_final_output, inference_final_state, inference_sequence_length = tf.contrib.seq2seq.dynamic_decode( 47 decoder=inference_decoder, 48 maximum_iterations=max_inference_sequence_length, 49 impute_finished=True) 50 return training_final_output, training_final_state, inference_final_output, inference_final_state

2.6.2 Attention-Decoder

1 def attention_decoder(output_data, corpus_size, word2id, emb_matrix, hidden_size, num_layers, 2 vocab_size, output_sequence_length, max_output_sequence_length, max_inference_sequence_length, encoder_output): 3 # numpy数据切片 output_data[0:corpus_size:1,0:-1:1],删除output_data最后一列数据 4 ending = tf.strided_slice(output_data, begin=[0, 0], end=[corpus_size, -1], strides=[1, 1]) 5 begin_sigmal = tf.fill(dims=[corpus_size, 1], value=word2id[‘_BOS‘]) 6 decoder_input_data = tf.concat([begin_sigmal, ending], axis=1, name=‘decoder_input_data‘) 7 decoder_embedding_input = tf.nn.embedding_lookup(params=emb_matrix, ids=decoder_input_data) 8 decoder_cells = tf.contrib.rnn.MultiRNNCell([get_lstm_cell(hidden_size) for i in range(num_layers)]) 9 # Attention机制 10 attention_mechanism = tf.contrib.seq2seq.LuongAttention( 11 num_units=hidden_size, 12 memory=encoder_output, 13 memory_sequence_length=input_sequence_length 14 ) 15 decoder_cells = tf.contrib.seq2seq.AttentionWrapper( 16 cell=decoder_cells, 17 attention_mechanism=attention_mechanism, 18 attention_layer_size=hidden_size 19 ) 20 project_layer = tf.layers.Dense( 21 units=vocab_size, # 全连接层神经元个数 22 kernel_initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.1) # 权重矩阵初始化 23 ) 24 with tf.variable_scope(‘Decoder‘): 25 # Helper对象 26 training_helper = tf.contrib.seq2seq.TrainingHelper( 27 inputs=decoder_embedding_input, 28 sequence_length=output_sequence_length) 29 # Basic Decoder 30 training_decoder = tf.contrib.seq2seq.BasicDecoder( 31 cell=decoder_cells, 32 helper=training_helper, 33 output_layer=project_layer, 34 initial_state=decoder_cells.zero_state(batch_size=corpus_size, dtype=tf.float32) 35 ) 36 # Dynamic RNN 37 training_final_output, training_final_state, training_sequence_length = tf.contrib.seq2seq.dynamic_decode( 38 decoder=training_decoder, 39 maximum_iterations=max_output_sequence_length, 40 impute_finished=True) 41 with tf.variable_scope(‘Decoder‘, reuse=True): 42 # Helper对象 43 start_tokens = tf.tile(input=tf.constant(value=[word2id[‘_BOS‘]], dtype=tf.int32), 44 multiples=[corpus_size], name=‘start_tokens‘) 45 inference_helper = tf.contrib.seq2seq.GreedyEmbeddingHelper( 46 embedding=emb_matrix, 47 start_tokens=start_tokens, 48 end_token=word2id[‘_EOS‘]) 49 # Basic Decoder 50 inference_decoder = tf.contrib.seq2seq.BasicDecoder( 51 cell=decoder_cells, 52 helper=inference_helper, 53 output_layer=project_layer, 54 initial_state=decoder_cells.zero_state(batch_size=corpus_size, dtype=tf.float32) 55 ) 56 # Dynamic RNN 57 inference_final_output, inference_final_state, inference_sequence_length = tf.contrib.seq2seq.dynamic_decode( 58 decoder=inference_decoder, 59 maximum_iterations=max_inference_sequence_length, 60 impute_finished=True) 61 return training_final_output, training_final_state, inference_final_output, inference_final_state

2.7 Encoder-Decoder Model

1 encoder_output, encoder_state = encoder(hidden_size, num_layers, emb_matrix, input_data) 2 # training_final_output, training_final_state, inference_final_output, inference_final_state = decoder( 3 # output_data, corpus_size, word2id, emb_matrix, hidden_size, num_layers, vocab_size, 4 # output_sequence_length, max_output_sequence_length, max_inference_sequence_length, encoder_state) 5 training_final_output, training_final_state, inference_final_output, inference_final_state = attention_decoder( 6 output_data, corpus_size, word2id, emb_matrix, hidden_size, num_layers, vocab_size, 7 output_sequence_length, max_output_sequence_length, max_inference_sequence_length, encoder_output)

2.7.1 Loss Fuction

1 # tf.identity 相当与 copy 2 training_logits = tf.identity(input=training_final_output.rnn_output, name=‘training_logits‘) 3 inference_logits = tf.identity(input=inference_final_output.sample_id, name=‘inference_logits‘) 4 # [2,5] -> [[1,1,0,0,0],[1,1,1,1,1]] 5 mask = tf.sequence_mask(lengths=output_sequence_length, maxlen=max_output_sequence_length, name=‘mask‘, dtype=tf.float32)

2.7.2 Optimize

1 optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)

2.7.3 梯度剪枝

1 gradients = optimizer.compute_gradients(cost) # 计算损失函数的梯度 2 clip_gradients = [(tf.clip_by_value(t=grad, clip_value_max=5, clip_value_min=-5),var) 3 for grad, var in gradients if grad is not None] 4 train_op = optimizer.apply_gradients(clip_gradients)

3 Train

1 with tf.Session() as sess: 2 sess.run(tf.global_variables_initializer()) 3 ckpt_dir = ‘./checkpoint/‘ 4 saver = tf.train.Saver() 5 ckpt = tf.train.latest_checkpoint(checkpoint_dir=ckpt_dir) 6 if ckpt: 7 saver.restore(sess, ckpt) 8 print(‘加载模型完成‘) 9 else: 10 print(‘没有找到训练过的模型‘) 11 for i in range(500): 12 _, training_pre, loss = sess.run([train_op, training_final_output.sample_id, cost], 13 feed_dict={ 14 input_data:source, 15 output_data:target, 16 input_sequence_length:from_length, 17 output_sequence_length:to_length 18 }) 19 if i % 100 == 0: 20 print(f‘第{i}次训练‘.center(50, ‘=‘)) 21 print(f‘损失值为{loss}‘.center(50, ‘=‘)) 22 print(‘输入:‘,‘ ‘.join([id2word[i] for i in source[0] if i != word2id[‘_PAD‘]])) 23 print(‘输出:‘,‘ ‘.join([id2word[i] for i in target[0] if i != word2id[‘_PAD‘]])) 24 print(‘Train预测:‘,‘ ‘.join([id2word[i] for i in training_pre[0] if i != word2id[‘_PAD‘]])) 25 saver.save(sess, ckpt_dir + ‘trained_model.ckpt‘) 26 inference_pre = sess.run( 27 inference_final_output.sample_id, 28 feed_dict={ 29 input_data:source, 30 input_sequence_length:from_length 31 }) 32 print(‘Inference预测:‘,‘ ‘.join([id2word[i] for i in inference_pre[0] if i != word2id[‘_PAD‘]])) 33 print(‘模型已保存‘.center(50, ‘=‘))

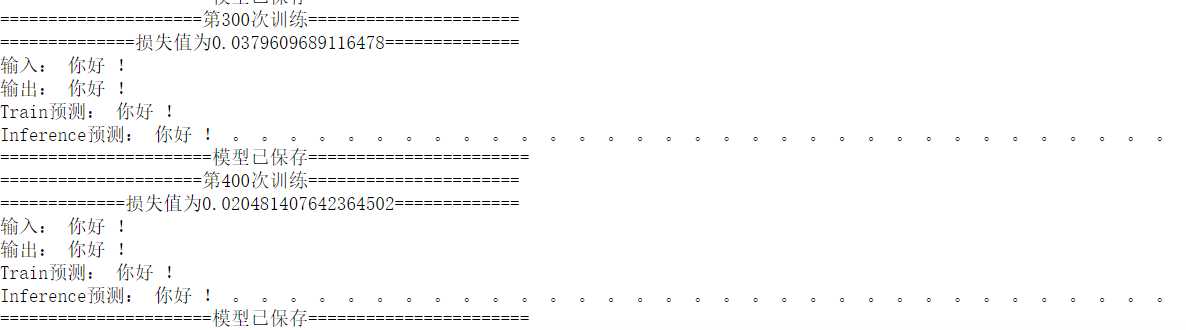

训练结果展示

相比较seq2seq网络,带有Attention机制的seq2seq效果会好很多。

代码部分参考在网上找到的最新的Tensorflow API视频讲解,特手敲一遍供大家学习。由于Tensorflow Seq2Seq API经常大改,如运行出错,请参考官网对应版本API。

用tensorflow框架搭建基于seq2seq-attention的聊天机器人

标签:定义 全连接 cells xtend style help ascii tensor 对话

原文地址:https://www.cnblogs.com/wf-ml/p/10967042.html