
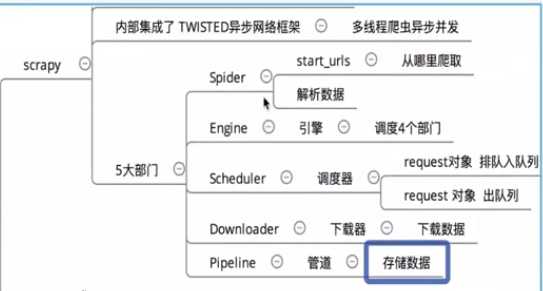

The Scrapy framework consists of six main components: the Scheduler, the Downloader, Spiders, Middleware, the Item Pipeline, and the Scrapy Engine.

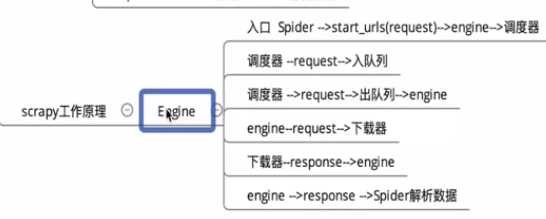

1. The Engine gets the initial Requests to crawl from the Spider.

2. The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

3. The Scheduler returns the next Requests to the Engine.

4. The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see process_request()).

5. Once the page finishes downloading, the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see process_response()).

6. The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see process_spider_input()).

7. The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see process_spider_output()).

8. The Engine sends processed items to Item Pipelines, then sends processed Requests to the Scheduler and asks for possible next Requests to crawl.

9. The process repeats (from step 1) until there are no more requests from the Scheduler.

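Component overview:

1. Scrapy Engine: The engine controls the flow of data between all components of the system and triggers events when certain actions occur.

2. Scheduler: The scheduler receives requests from the engine and enqueues them, so that it can hand them back when the engine asks for them later.

3. Downloader: The downloader fetches page data and hands it to the engine, which then passes it on to the spider.

4. Spider: Spiders are classes written by Scrapy users to parse responses and extract items (the scraped data) or additional URLs to follow. Each spider handles one specific site (or a few).

5. Item Pipeline: The item pipeline processes the items extracted by the spider. Typical steps are cleansing, validation, and persistence (for example, storing the item in a database).

6. Downloader Middlewares: Downloader middlewares are specific hooks that sit between the engine and the downloader. They process requests on their way from the engine to the downloader and responses on their way back from the downloader to the engine. They provide a convenient mechanism for extending Scrapy by plugging in custom code.

7. Spider Middlewares: Spider middlewares are specific hooks that sit between the engine and the spider. They process the spider's input (responses) and output (items and requests). They provide a convenient mechanism for extending Scrapy by plugging in custom code.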

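The flow as a conversation:

1. Engine: Hey, Spider, let's get going!

2. Spider: Sure, let's start. How about crawling the xxx site today?

3. Engine: No problem, send me the entry URL!

4. Spider: Here, the entry URL is https://www.xxx.com.

5. Engine: Scheduler, I have a request here; please sort it and put it in the queue.

6. Scheduler: Engine, here is the request I have processed.

7. Engine: Downloader, please download this request according to the downloader middleware settings.

8. Downloader: Done, here is what I downloaded. (On failure: "Sorry, this request failed to download." The engine then tells the scheduler: "This request failed; make a note of it and we will download it again later.")

9. Engine: Spider, here is the downloaded content. It has already been processed by the downloader middleware, so go ahead and handle it yourself.

10. Spider: Engine, I have finished processing the data. There are two results: these are the URLs I need to follow, and this is the Item data I extracted.

11. Engine: Pipeline, I have an item here; please process it for me!

12. Engine: Scheduler, here are the URLs to follow; please handle them. (The cycle then repeats from step 5 until all the required information has been collected.)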
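The conversation above can be sketched as a toy loop in plain Python. This is only an illustration of the data flow, not Scrapy's actual engine: `FAKE_SITE`, `fake_download`, `parse`, and `run_engine` are hypothetical stand-ins, and the scheduler is just an in-memory queue.

```python
from collections import deque

# Hypothetical in-memory "website": URL -> (page title, links found on the page).
FAKE_SITE = {
    "https://www.xxx.com": ("home", ["https://www.xxx.com/a", "https://www.xxx.com/b"]),
    "https://www.xxx.com/a": ("page a", ["https://www.xxx.com/b"]),
    "https://www.xxx.com/b": ("page b", []),
}


def fake_download(url):
    """Stand-in for the Downloader: return a 'response' dict for a URL."""
    title, links = FAKE_SITE[url]
    return {"url": url, "title": title, "links": links}


def parse(response):
    """Stand-in for a Spider callback: yield one item, then the URLs to follow."""
    yield {"item": response["title"]}
    for link in response["links"]:
        yield link


def run_engine(start_url):
    """Stand-in for the Engine: move data between the other components."""
    scheduler = deque([start_url])  # Scheduler: a FIFO queue of pending requests
    seen = {start_url}              # simple URL de-duplication
    pipeline_output = []            # Item Pipeline: here it only collects items
    while scheduler:
        url = scheduler.popleft()         # Engine asks the Scheduler for a request
        response = fake_download(url)     # Engine hands it to the Downloader
        for result in parse(response):    # Engine passes the response to the Spider
            if isinstance(result, dict):  # an item goes to the pipeline
                pipeline_output.append(result["item"])
            elif result not in seen:      # a new URL goes back to the Scheduler
                seen.add(result)
                scheduler.append(result)
    return pipeline_output


items = run_engine("https://www.xxx.com")
print(items)
```

The loop ends when the scheduler queue is empty, which mirrors step 9 of the official data flow description.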

----------------------------------------------------------------------------------------------------------------------------------------

II. Creating a project:

1. Basic commands:

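```text
# 1. Getting help
scrapy -h
scrapy <command> -h

# 2. There are two kinds of commands: project-only commands must be run from inside
#    a project directory, while global commands can be run anywhere.
Global commands:
    startproject  # create a project
    genspider     # create a spider
    settings      # show settings; inside a project directory, that project's settings are shown
    runspider     # run a spider from a standalone Python file, without creating a project
    shell         # scrapy shell <url>: interactive debugging, e.g. to check whether selector rules are correct
    fetch         # fetch a single page independently of any project; can print the HTTP headers
    view          # download a page and open it in the browser, which helps identify data loaded via AJAX
    version       # scrapy version shows the Scrapy version; scrapy version -v also shows the versions of its dependencies
Project-only commands:
    crawl         # run a spider; requires a project (the author also sets ROBOTSTXT_OBEY = False in the settings file)
    check         # run contract checks on the project's spiders
    list          # list the names of the spiders in the project
    edit          # open a spider in an editor; rarely used
    parse         # scrapy parse <url> --callback <callback>: verify that a callback behaves as expected
    bench         # scrapy bench: run a quick benchmark

# 3. Official documentation
https://docs.scrapy.org/en/latest/topics/commands.html
```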

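```shell
# 1. Global commands: run these outside any project directory so that no project's settings affect them
scrapy startproject MyProject
cd MyProject
scrapy genspider baidu www.baidu.com

scrapy settings --get XXX   # inside a project directory, this shows that project's value
scrapy runspider baidu.py

scrapy shell https://www.baidu.com
# then, at the interactive prompt:
#     response
#     response.status
#     response.body
#     view(response)

scrapy view https://www.taobao.com   # if the page looks incomplete, the missing parts are loaded via AJAX, which helps locate the problem quickly
scrapy fetch --nolog --headers https://www.taobao.com

scrapy version      # the Scrapy version
scrapy version -v   # the versions of its dependencies

# 2. Project commands: switch to the project directory first
scrapy crawl baidu
scrapy check
scrapy list
scrapy parse http://quotes.toscrape.com/ --callback parse
scrapy bench
```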

For example, to create an Amazon project:

scrapy startproject Amazon --> cd Amazon --> scrapy genspider amazon www.amazon.cn

Two ways to run the spider:

scrapy crawl amazon / scrapy runspider amazon.py (an absolute path also works)

2. Project directory layout

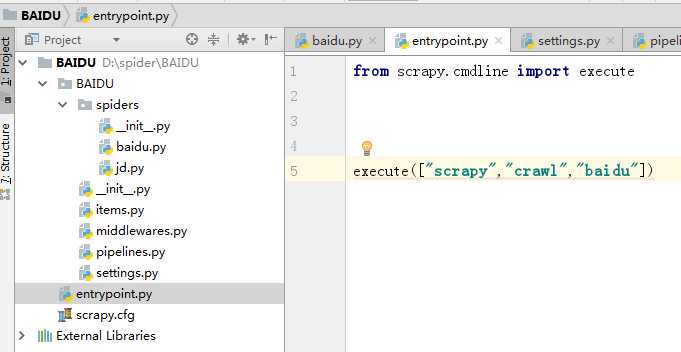

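Create a new baidu project and add an entry point to it:

File descriptions:

Note: spider files are usually named after the site's domain.

First: add a User-Agent to the settings.py configuration file: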

```python
DEFAULT_REQUEST_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 SE 2.X MetaSr 1.0'
}
```

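Note: settings can also be supplemented in the spider's .py file, for example through the spider's custom_settings attribute.

Second: in spiders you can issue requests for new URLs and parse the response data: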

```python
# -*- coding: utf-8 -*-
from urllib.parse import urlencode

import scrapy


class AmazonSpider(scrapy.Spider):
    name = 'amazon'
    allowed_domains = ['www.amazon.cn']
    start_urls = ['http://www.amazon.cn/']

    # Per-spider overrides of settings.py can go here:
    # custom_settings = {
    #     'BOT_NAME': 'SL',
    #     'DEFAULT_REQUEST_HEADERS': {
    #         'referer': '....',
    #     },
    # }

    def __init__(self, keyword, *args, **kwargs):
        super(AmazonSpider, self).__init__(*args, **kwargs)
        self.keyword = keyword  # supplied on the command line with -a keyword=...

    # start_requests() must return an iterable of Requests (here, a generator).
    def start_requests(self):
        url = 'https://www.amazon.cn/s/ref=nb_sb_noss_1?' + urlencode({'field-keywords': self.keyword})
        # url2 = 'https://www.amazon.cn/s?' + urlencode({'field-keywords': self.keyword})
        # url3 = 'https://www.amazon.cn/s?' + urlencode({'field-keywords': self.keyword})
        yield scrapy.Request(
            url,
            meta={'name': 'scrapy'},
            callback=self.index_page,  # callback that handles the response
            dont_filter=False,         # False (the default) keeps duplicate filtering on
        )
        # yield scrapy.Request(url2, dont_filter=False)
        # yield scrapy.Request(url3, dont_filter=False)

    # def parse(self, response):
    #     print('>>>>>', response.url)
    #     print('>>>>>', len(response.body))
    #     print('------------', response.meta)

    def index_page(self, response):
        print(response.url)
        # print(detail_urls)
        # print(response.body)
```

Entry point for running the spider:

```python
from scrapy.cmdline import execute

execute(['scrapy', 'crawl', 'amazon', '-a', 'keyword=macbook', '--nolog'])
```
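The `-a keyword=macbook` argument ends up as the `keyword` parameter of the spider's `__init__`, and `start_requests()` then turns it into a query string. A minimal sketch of just that URL-building step, using only the standard library (`build_search_url` is a hypothetical helper name, not part of Scrapy):

```python
from urllib.parse import urlencode


def build_search_url(keyword):
    """Build the search URL the same way the spider's start_requests() does."""
    base = "https://www.amazon.cn/s/ref=nb_sb_noss_1?"
    return base + urlencode({"field-keywords": keyword})


url_plain = build_search_url("macbook")
url_spaced = build_search_url("macbook pro")
print(url_plain)
print(url_spaced)
```

`urlencode` takes care of escaping, so a keyword containing spaces or non-ASCII characters still produces a valid URL.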

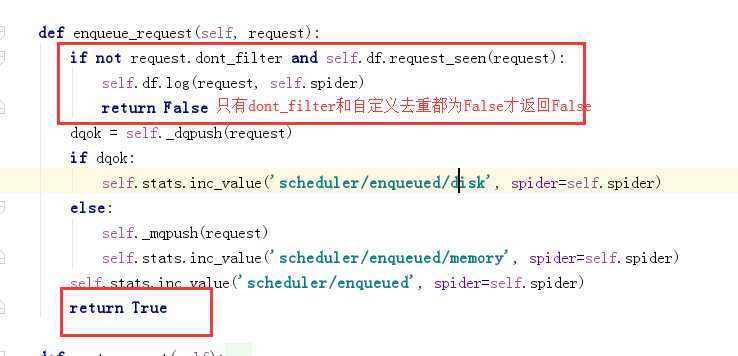

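Third: deduplication

Duplicate filtering is on by default: dont_filter=False

It can also be customized. Create a new file, MyDupeFilter.py.

settings.py:

```python
DUPEFILTER_CLASS = 'Amazon.MyDupeFilter.DupeFilter'
```

Custom deduplication code: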

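```python
# Imported only for reference: the method names below mirror the interface of
# RFPDupeFilter, re-implemented here so the filter is decoupled from it.
from scrapy.dupefilters import RFPDupeFilter
from scrapy.core.scheduler import Scheduler


class DupeFilter(object):
    def __init__(self):
        self.set = set()  # URLs that have already been seen

    @classmethod
    def from_settings(cls, settings):
        return cls()

    # Custom deduplication rule
    def request_seen(self, request):
        # Returning True tells the Scheduler the request is a duplicate, so it is dropped.
        if request.url in self.set:
            return True
        # First time this URL is seen: remember it and return False so that
        # the Scheduler enqueues the request.
        self.set.add(request.url)
        return False

    def open(self):  # can return a deferred
        pass

    def close(self, reason):  # can return a deferred
        pass

    def log(self, request, spider):  # log that a request has been filtered
        pass
```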

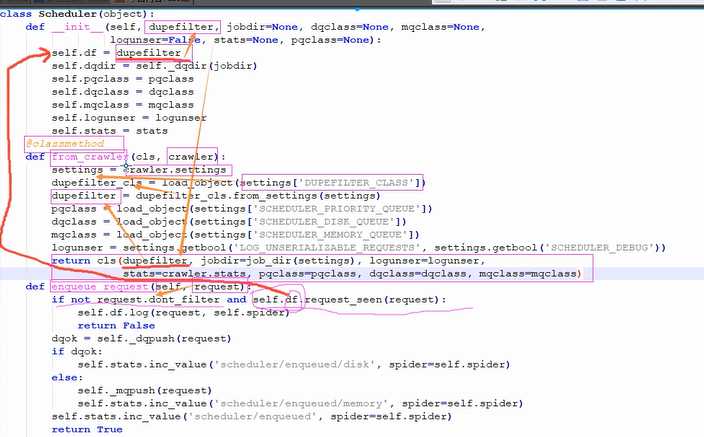

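Scheduler source code:

Where the custom dupefilter (df) is used: when a request is enqueued, the Scheduler calls the dupefilter's request_seen(), and only requests that pass the filter are queued: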
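The interaction between the scheduler and the dupefilter can be sketched in plain Python. This is a simplified paraphrase for illustration, not Scrapy's source: requests are modelled as dicts, and `UrlDupeFilter` and `enqueue_request` are hypothetical names that only mimic the real behaviour.

```python
class UrlDupeFilter:
    """Same idea as the custom filter above: remember every URL already seen."""

    def __init__(self):
        self.seen = set()

    def request_seen(self, request):
        if request["url"] in self.seen:
            return True   # duplicate: tell the caller to drop it
        self.seen.add(request["url"])
        return False      # first sighting: let it through


def enqueue_request(request, dupefilter, queue):
    """Consult the filter unless the request opted out with dont_filter=True."""
    if not request.get("dont_filter", False) and dupefilter.request_seen(request):
        return False      # filtered out, never reaches the queue
    queue.append(request)
    return True


df, queue = UrlDupeFilter(), []
results = [
    enqueue_request({"url": "https://www.amazon.cn/"}, df, queue),
    enqueue_request({"url": "https://www.amazon.cn/"}, df, queue),
    enqueue_request({"url": "https://www.amazon.cn/", "dont_filter": True}, df, queue),
]
print(results, len(queue))
```

The third call shows why `dont_filter=True` lets a repeated URL through: the filter is never consulted for that request.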

Fourth: Pipeline

https://docs.scrapy.org/en/latest/topics/item-pipeline.html

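```python
# 1. You can write several Pipeline classes.
#    (1) Whatever the process_item of a higher-priority pipeline (lower number) returns,
#        an item or even None, is passed on to the process_item of the next pipeline.
#    (2) To stop after the first pipeline, have its process_item raise DropItem().
#    (3) Use spider.name == '<spider name>' to control which spiders use which pipelines.

# 2. Example
from scrapy.exceptions import DropItem


class CustomPipeline(object):
    def __init__(self, v):
        self.value = v

    @classmethod
    def from_crawler(cls, crawler):
        """
        Scrapy first checks with getattr whether from_crawler is defined;
        if it is, Scrapy calls it to create the instance.
        """
        val = crawler.settings.getint('MMMM')
        return cls(val)

    def open_spider(self, spider):
        """Runs once when the spider starts."""
        print('000000')

    def close_spider(self, spider):
        """Runs once when the spider closes."""
        print('111111')

    def process_item(self, item, spider):
        # Process and persist the item here.

        # Returning the item lets later pipelines keep processing it.
        return item

        # Raising DropItem discards the item; later pipelines never see it.
        # raise DropItem()
```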
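The chaining rules above can be tried out without Scrapy. The sketch below is an assumption-laden toy: `DropItem` is a local stand-in for `scrapy.exceptions.DropItem`, `PricePipeline`, `UpperCasePipeline`, and `run_pipelines` are hypothetical, and the dict maps pipeline instances (rather than import paths) to priorities.

```python
class DropItem(Exception):
    """Local stand-in for scrapy.exceptions.DropItem."""


class PricePipeline:
    def process_item(self, item, spider):
        if item.get("price") is None:
            raise DropItem("missing price")  # later pipelines never see this item
        return item


class UpperCasePipeline:
    def process_item(self, item, spider):
        return {**item, "title": item["title"].upper()}


def run_pipelines(item, item_pipelines, spider=None):
    """Run pipelines in ascending priority order; stop when one drops the item."""
    for pipeline, _priority in sorted(item_pipelines.items(), key=lambda kv: kv[1]):
        try:
            item = pipeline.process_item(item, spider)
        except DropItem:
            return None
    return item


ITEM_PIPELINES = {UpperCasePipeline(): 300, PricePipeline(): 200}
kept = run_pipelines({"title": "macbook", "price": 9999}, ITEM_PIPELINES)
dropped = run_pipelines({"title": "no price", "price": None}, ITEM_PIPELINES)
print(kept, dropped)
```

`PricePipeline` runs first because 200 is lower than 300, so the item without a price is dropped before `UpperCasePipeline` is ever called.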

```python
# 1. settings.py
HOST = "127.0.0.1"
PORT = 27017
USER = "root"
PWD = "123"
DB = "amazon"
TABLE = "goods"

ITEM_PIPELINES = {
    'Amazon.pipelines.CustomPipeline': 200,
}
```

```python
# 2. pipelines.py
from pymongo import MongoClient


class CustomPipeline(object):
    def __init__(self, host, port, user, pwd, db, table):
        self.host = host
        self.port = port
        self.user = user
        self.pwd = pwd
        self.db = db
        self.table = table

    @classmethod
    def from_crawler(cls, crawler):
        """
        Scrapy first checks with getattr whether from_crawler is defined;
        if it is, Scrapy calls it to create the instance.
        """
        HOST = crawler.settings.get('HOST')
        PORT = crawler.settings.get('PORT')
        USER = crawler.settings.get('USER')
        PWD = crawler.settings.get('PWD')
        DB = crawler.settings.get('DB')
        TABLE = crawler.settings.get('TABLE')
        return cls(HOST, PORT, USER, PWD, DB, TABLE)

    def open_spider(self, spider):
        """Runs once when the spider starts."""
        self.client = MongoClient('mongodb://%s:%s@%s:%s' % (self.user, self.pwd, self.host, self.port))

    def close_spider(self, spider):
        """Runs once when the spider closes."""
        self.client.close()

    def process_item(self, item, spider):
        # Persist the item. insert_one() is used because Collection.save()
        # was removed in PyMongo 4.
        self.client[self.db][self.table].insert_one(dict(item))
        return item  # hand the item on to any later pipeline
```
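One detail worth noting about the connection string built in open_spider: a user name or password that contains characters such as `@` or `:` has to be percent-escaped before it is placed in a MongoDB URI. A small standard-library sketch (`build_mongo_uri` is a hypothetical helper, and the second password is made up for illustration):

```python
from urllib.parse import quote_plus


def build_mongo_uri(user, pwd, host, port):
    """Percent-escape the credentials so '@' and ':' stay unambiguous in the URI."""
    return "mongodb://%s:%s@%s:%s" % (quote_plus(user), quote_plus(pwd), host, port)


uri_simple = build_mongo_uri("root", "123", "127.0.0.1", 27017)
uri_special = build_mongo_uri("root", "p@ss:word", "127.0.0.1", 27017)
print(uri_simple)
print(uri_special)
```

With the settings shown above (USER="root", PWD="123") escaping changes nothing, so the original format string works as it stands; the helper only matters for less friendly credentials.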

Last: middleware

1. Downloader Middleware

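Uses of downloader middleware:

1. Inside process_request, perform the download yourself instead of using Scrapy's downloader.

2. Post-process the request, for example: set request headers, set cookies, or add a proxy.

Scrapy's built-in proxy component:

```python
from scrapy.downloadermiddlewares.httpproxy import HttpProxyMiddleware
from urllib.request import getproxies
```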

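```python
class DownMiddleware1(object):
    def process_request(self, request, spider):
        """
        Called for every request that passes through the downloader
        middlewares on its way to being downloaded.
        :param request:
        :param spider:
        :return:
            None: continue with the remaining middlewares and download the request;
            Response object: stop calling process_request and start calling process_response;
            Request object: stop the middleware chain and send the Request back to the scheduler;
            raise IgnoreRequest: stop calling process_request and start calling process_exception
        """
        pass

    def process_response(self, request, response, spider):
        """
        Called with the response once the download has finished, on its way back to the engine.
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the process_response of the other middlewares;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        """
        print('response1')
        return response

    def process_exception(self, request, exception, spider):
        """
        Called when a download handler or a process_request() of a
        downloader middleware raises an exception.
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop calling further process_exception methods;
            Request object: stop the middleware chain; the request is rescheduled for download
        """
        return None
```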
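The return-value rules in these docstrings are easier to follow with a toy chain. The sketch below is not Scrapy code: `CacheMiddleware`, `HeaderMiddleware`, and `download` are hypothetical, requests and responses are plain strings, and only two cases are modelled (process_request returning None or a response). Rescheduled Requests and exceptions are left out.

```python
class HeaderMiddleware:
    """Hypothetical middleware that would tweak headers; here it only logs."""

    def process_request(self, request, log):
        log.append("header.process_request")
        return None  # None means: carry on with the next middleware

    def process_response(self, request, response, log):
        log.append("header.process_response")
        return response


class CacheMiddleware:
    """Hypothetical middleware that answers from a cache, so no download is needed."""

    def __init__(self, cache):
        self.cache = cache

    def process_request(self, request, log):
        log.append("cache.process_request")
        return self.cache.get(request)  # a response short-circuits the chain

    def process_response(self, request, response, log):
        log.append("cache.process_response")
        return response


def download(request, middlewares, fetch, log):
    response = None
    for mw in middlewares:            # requests pass through in ascending order
        response = mw.process_request(request, log)
        if response is not None:
            break                     # skip the remaining middlewares and the download
    if response is None:
        log.append("downloader.fetch")
        response = fetch(request)
    for mw in reversed(middlewares):  # responses travel back in reverse order
        response = mw.process_response(request, response, log)
    return response


chain = [HeaderMiddleware(), CacheMiddleware({"/cached": "cached body"})]
log_miss, log_hit = [], []
body_miss = download("/fresh", chain, lambda req: "fresh body", log_miss)
body_hit = download("/cached", chain, lambda req: "never used", log_hit)
print(log_miss)
print(log_hit)
```

In the cache-hit case the log contains no `downloader.fetch` entry, which is the "Response object: stop calling process_request" branch described above.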

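```python
# 1. Create proxy_handle.py in the same directory as middlewares.py
import requests


def get_proxy():
    return requests.get("http://127.0.0.1:5010/get/").text


def delete_proxy(proxy):
    requests.get("http://127.0.0.1:5010/delete/?proxy={}".format(proxy))
```

```python
# 2. middlewares.py
import time

from Amazon.proxy_handle import get_proxy, delete_proxy


class DownMiddleware1(object):
    def process_request(self, request, spider):
        """
        Called for every request that passes through the downloader
        middlewares on its way to being downloaded.
        :param request:
        :param spider:
        :return:
            None: continue with the remaining middlewares and download the request;
            Response object: stop calling process_request and start calling process_response;
            Request object: stop the middleware chain and send the Request back to the scheduler;
            raise IgnoreRequest: stop calling process_request and start calling process_exception
        """
        proxy = "http://" + get_proxy()
        request.meta['download_timeout'] = 20
        request.meta["proxy"] = proxy
        print('Added proxy %s for %s ' % (proxy, request.url), end='')
        print('meta is', request.meta)

    def process_response(self, request, response, spider):
        """
        Called with the response once the download has finished, on its way back to the engine.
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the process_response of the other middlewares;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        """
        print('Response status code', response.status)
        return response

    def process_exception(self, request, exception, spider):
        """
        Called when a download handler or a process_request() of a
        downloader middleware raises an exception.
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop calling further process_exception methods;
            Request object: stop the middleware chain; the request is rescheduled for download
        """
        print('Proxy %s failed while fetching %s: %s' % (request.meta['proxy'], request.url, exception))
        time.sleep(5)  # note: time.sleep blocks Scrapy's event loop while it waits
        # Remove the failed proxy from the pool, then retry with a fresh one.
        delete_proxy(request.meta['proxy'].split("//")[-1])
        request.meta['proxy'] = 'http://' + get_proxy()
        return request
```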

2. Spider Middleware

Overview of the spider middleware methods

```python
from scrapy import signals


class SpiderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        # spider_opened is triggered when the current spider starts running
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def spider_opened(self, spider):
        # spider.logger.info('I am spider 1 sent by egon: %s' % spider.name)
        print('I am spider 1 sent by egon: %s' % spider.name)

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn't have a response associated.

        # Must return only requests (not items).
        print('start_requests1')
        for r in start_requests:
            yield r

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        # 1. None: continue with the process_spider_input of the other middlewares.
        # 2. Raising an exception:
        #    - the process_spider_input of the remaining middlewares is skipped
        #    - the errback bound to the request is triggered
        #    - the errback's return value is passed back through the middlewares'
        #      process_spider_output in reverse order
        #    - if no errback is found, the middlewares' process_spider_exception
        #      methods run in reverse order
        print("input1")
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, dict or Item objects.
        print('output1')

        # Yielding the results one by one is equivalent to returning them in one go.
        # Keep in mind that calling a function containing yield only creates a
        # generator and does not run the body right away; if that is unclear, the
        # generator form can easily mislead you about the middleware execution order.
        # for i in result:
        #     yield i
        return result

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Request, dict
        # or Item objects.
        print('exception1')
```

When the spider starts and the initial requests are generated:

```python
# Step 1: uncomment the following in settings.py:
# SPIDER_MIDDLEWARES = {
#     'Baidu.middlewares.SpiderMiddleware1': 200,
#     'Baidu.middlewares.SpiderMiddleware2': 300,
#     'Baidu.middlewares.SpiderMiddleware3': 400,
# }

# Step 2: middlewares.py
from scrapy import signals


class SpiderMiddleware1(object):
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        # spider_opened is triggered when the current spider starts running
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def spider_opened(self, spider):
        print('I am spider 1 sent by egon: %s' % spider.name)

    def process_start_requests(self, start_requests, spider):
        # Must return only requests (not items).
        print('start_requests1')
        for r in start_requests:
            yield r


class SpiderMiddleware2(object):
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        # spider_opened is triggered when the current spider starts running
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def spider_opened(self, spider):
        print('I am spider 2 sent by egon: %s' % spider.name)

    def process_start_requests(self, start_requests, spider):
        print('start_requests2')
        for r in start_requests:
            yield r


class SpiderMiddleware3(object):
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        # spider_opened is triggered when the current spider starts running
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def spider_opened(self, spider):
        print('I am spider 3 sent by egon: %s' % spider.name)

    def process_start_requests(self, start_requests, spider):
        print('start_requests3')
        for r in start_requests:
            yield r


# Step 3: analysis of the output
# 1. Printed immediately when the spider starts:
#    I am spider 1 sent by egon: baidu
#    I am spider 2 sent by egon: baidu
#    I am spider 3 sent by egon: baidu
#
# 2. An initial request is then generated and passes through spider middlewares 1, 2, 3 in turn:
#    start_requests1
#    start_requests2
#    start_requests3
```

When process_spider_input returns None:

```python
# Step 1: uncomment the following in settings.py:
# SPIDER_MIDDLEWARES = {
#     'Baidu.middlewares.SpiderMiddleware1': 200,
#     'Baidu.middlewares.SpiderMiddleware2': 300,
#     'Baidu.middlewares.SpiderMiddleware3': 400,
# }

# Step 2: middlewares.py
from scrapy import signals


class SpiderMiddleware1(object):
    def process_spider_input(self, response, spider):
        print("input1")

    def process_spider_output(self, response, result, spider):
        print('output1')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception1')


class SpiderMiddleware2(object):
    def process_spider_input(self, response, spider):
        print("input2")
        return None

    def process_spider_output(self, response, result, spider):
        print('output2')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception2')


class SpiderMiddleware3(object):
    def process_spider_input(self, response, spider):
        print("input3")
        return None

    def process_spider_output(self, response, result, spider):
        print('output3')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception3')


# Step 3: analysis of the output
# 1. When the response comes back, it passes through spider middlewares 1, 2, 3 in turn:
#    input1
#    input2
#    input3
#
# 2. After the spider has processed it, the result passes through spider middlewares 3, 2, 1:
#    output3
#    output2
#    output1
```
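The ordering observed above (inputs 1, 2, 3, then outputs 3, 2, 1) can be reproduced with a small stand-alone model. This is a simplified sketch rather than Scrapy's middleware manager: `LoggingSpiderMiddleware` and `run_spider_middlewares` are hypothetical, the `spider` argument is omitted, and the response is a plain string.

```python
class LoggingSpiderMiddleware:
    """Records the order in which its hooks are called."""

    def __init__(self, number, log):
        self.number = number
        self.log = log

    def process_spider_input(self, response):
        self.log.append("input%d" % self.number)

    def process_spider_output(self, response, result):
        self.log.append("output%d" % self.number)
        return result


def run_spider_middlewares(response, callback, middlewares):
    for mw in middlewares:            # the response enters in ascending order
        mw.process_spider_input(response)
    result = callback(response)       # the spider callback runs in the middle
    for mw in reversed(middlewares):  # the results leave in reverse order
        result = mw.process_spider_output(response, result)
    return list(result)


log = []
chain = [LoggingSpiderMiddleware(n, log) for n in (1, 2, 3)]
items = run_spider_middlewares("page", lambda response: [response.upper()], chain)
print(log, items)
```

The middleware closest to the spider (number 3 here, the highest priority value) sees the response last and the results first.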

When process_spider_input raises an exception:

```python
# Step 1: uncomment the following in settings.py:
# SPIDER_MIDDLEWARES = {
#     'Baidu.middlewares.SpiderMiddleware1': 200,
#     'Baidu.middlewares.SpiderMiddleware2': 300,
#     'Baidu.middlewares.SpiderMiddleware3': 400,
# }

# Step 2: middlewares.py
from scrapy import signals


class SpiderMiddleware1(object):
    def process_spider_input(self, response, spider):
        print("input1")

    def process_spider_output(self, response, result, spider):
        print('output1')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception1')


class SpiderMiddleware2(object):
    def process_spider_input(self, response, spider):
        print("input2")
        raise TypeError('input2 raised an exception')

    def process_spider_output(self, response, result, spider):
        print('output2')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception2')


class SpiderMiddleware3(object):
    def process_spider_input(self, response, spider):
        print("input3")
        return None

    def process_spider_output(self, response, result, spider):
        print('output3')
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception3')


# Output:
#    input1
#    input2
#    exception3
#    exception2
#    exception1

# Analysis:
# 1. The process_spider_input of middleware 1 returns None, so the response is
#    passed on to the process_spider_input of middleware 2.
# 2. The process_spider_input of middleware 2 raises an exception, so the remaining
#    process_spider_input methods are skipped and the exception is handed to the
#    errback of that request in the spider.
# 3. No errback is found, so the response is handled neither by the spider's normal
#    callback nor by an errback; the spider does nothing at all, and the
#    process_spider_exception methods start running in reverse order.
# 4. A process_spider_exception that returns None declines to handle the exception
#    and passes it to the next process_spider_exception; if all of them return
#    None, the exception is finally raised by the Engine.
```

Specifying an errback:

```python
# Step 1: spider.py
import scrapy


class BaiduSpider(scrapy.Spider):
    name = 'baidu'
    allowed_domains = ['www.baidu.com']
    start_urls = ['http://www.baidu.com/']

    def start_requests(self):
        yield scrapy.Request(
            url='http://www.baidu.com/',
            callback=self.parse,
            errback=self.parse_err,
        )

    def parse(self, response):
        pass

    def parse_err(self, res):
        # res carries the exception information. The exception has been handled by
        # this function, so it is not propagated any further, and processing
        # continues with process_spider_output.
        # Useful data extracted from the exception is returned as an iterable and
        # passed along for process_spider_output to consume.
        return [1, 2, 3, 4, 5]


# Step 2: uncomment the following in settings.py:
# SPIDER_MIDDLEWARES = {
#     'Baidu.middlewares.SpiderMiddleware1': 200,
#     'Baidu.middlewares.SpiderMiddleware2': 300,
#     'Baidu.middlewares.SpiderMiddleware3': 400,
# }

# Step 3: middlewares.py
from scrapy import signals


class SpiderMiddleware1(object):
    def process_spider_input(self, response, spider):
        print("input1")

    def process_spider_output(self, response, result, spider):
        print('output1', list(result))
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception1')


class SpiderMiddleware2(object):
    def process_spider_input(self, response, spider):
        print("input2")
        raise TypeError('input2 raised an exception')

    def process_spider_output(self, response, result, spider):
        print('output2', list(result))
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception2')


class SpiderMiddleware3(object):
    def process_spider_input(self, response, spider):
        print("input3")
        return None

    def process_spider_output(self, response, result, spider):
        print('output3', list(result))
        return result

    def process_spider_exception(self, response, exception, spider):
        print('exception3')


# Step 4: analysis of the output
#    input1
#    input2
#    output3 [1, 2, 3, 4, 5]   # the return value of parse_err can only be consumed once;
#                              # inside output3 a new request could be built from the exception information
#    output2 []
#    output1 []
```
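The empty lists printed by output2 and output1 come from ordinary iterator behaviour: once `list(result)` has drained an iterator, nothing is left for the next consumer. A plain-Python sketch of the difference between draining and passing values through lazily (all function names here are hypothetical, and the model assumes the results arrive as a one-shot iterator):

```python
def parse_err_results():
    """Stand-in for the one-shot iterable that flows through process_spider_output."""
    yield from [1, 2, 3, 4, 5]


def greedy_output(result, seen):
    seen.append(list(result))  # list() drains the iterator...
    return result              # ...so what is passed on is already empty


def lazy_output(result, seen):
    for value in result:       # look at each value, then pass it along
        seen.append(value)
        yield value


greedy_seen = []
stream = parse_err_results()
for _ in range(3):             # three middlewares in a row
    stream = greedy_output(stream, greedy_seen)

lazy_seen = []
stream = parse_err_results()
for _ in range(3):
    stream = lazy_output(stream, lazy_seen)
final = list(stream)

print(greedy_seen)
print(final, len(lazy_seen))
```

With the lazy version every middleware sees every value and the final consumer still receives all five, which is why yielding from `result` is the safer pattern when a middleware needs to inspect the results.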


Original article: https://www.cnblogs.com/sima-3/p/11104859.html