
hadoop:3.1.2

hive:3.1.1

mysql:5.6.40

On every node, add the IP-to-hostname mappings by appending the following lines to /etc/hosts:

172.31.58.154 HadoopMaster
172.31.51.195 HadoopSlave01
172.31.1.11 HadoopSlave02
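Re-running a guide like this tends to append the same lines twice. The sketch below appends each mapping only if that exact line is not already present. The function name `add_cluster_hosts` is hypothetical. The file path defaults to /etc/hosts, which needs root, and can be overridden to try it on a scratch file.

```bash
#!/usr/bin/env bash
# Append the cluster's IP/hostname mappings, skipping lines that already exist,
# so the script can be run repeatedly on every node.
# Hypothetical helper; the first argument overrides the default /etc/hosts.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in \
    "172.31.58.154 HadoopMaster" \
    "172.31.51.195 HadoopSlave01" \
    "172.31.1.11 HadoopSlave02"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Usage, as root on each node: `add_cluster_hosts` (or `add_cluster_hosts /tmp/hosts.test` for a dry run against a copy).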

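Next, allow the Master to log in to every Slave node over SSH without a password. If you have changed a hostname, you need to generate a new public key.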

1. Create the hadoop user (on every node)

useradd -m hadoop -s /bin/bash

passwd hadoop

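2. On the Master, run the following commands:

su - hadoop
mkdir -p ~/.ssh && chmod 700 ~/.ssh
cd ~/.ssh
rm -f ./id_rsa*      # remove any old key pair (e.g. one generated before a hostname change)
ssh-keygen -t rsa    # press Enter at each prompt to accept the defaults and an empty passphrase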

3. Then copy the Master's id_rsa.pub to all nodes, including the Master itself:

ssh-copy-id hadoop@HadoopMaster
ssh-copy-id hadoop@HadoopSlave01
ssh-copy-id hadoop@HadoopSlave02
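Steps 2 and 3 can be wrapped in a loop that also confirms the result. The sketch assumes it runs as the hadoop user on HadoopMaster after `ssh-keygen`. The function name `distribute_ssh_key` and the `DRY_RUN` switch are hypothetical. `DRY_RUN` defaults to 1, so the commands are only printed until you set `DRY_RUN=0`. The final `ssh -o BatchMode=yes` fails instead of prompting, which makes a leftover password prompt visible as an error.

```bash
#!/usr/bin/env bash
# Copy the hadoop user's public key to each host, then verify key-based login.
# DRY_RUN=1 (the default here) only prints the commands.
distribute_ssh_key() {
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh-copy-id hadoop@${host}"
      echo "ssh -o BatchMode=yes hadoop@${host} hostname"
    else
      ssh-copy-id "hadoop@${host}"
      # BatchMode=yes: fail rather than ask for a password
      ssh -o BatchMode=yes "hadoop@${host}" hostname
    fi
  done
}
```

Usage: `DRY_RUN=0 distribute_ssh_key HadoopMaster HadoopSlave01 HadoopSlave02`.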

All remaining steps can be performed as root.

1. Install Java (OpenJDK 8)

yum install java-1.8.0-openjdk*

2. Add the environment variables

vim /etc/profile

# append the following lines
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64/
export JRE_HOME=$JAVA_HOME/jre
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

source /etc/profile
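The versioned OpenJDK directory above changes whenever yum upgrades the package. One alternative derives JAVA_HOME from the resolved `java` binary. This sketch assumes GNU `readlink -f` is available and that `java` comes from the JDK installed above rather than a standalone JRE. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Turn the resolved path of the java binary into a JAVA_HOME directory.
# Handles both <jdk>/bin/java and the JDK 8 layout <jdk>/jre/bin/java.
java_home_from_bin() {
  local bin="$1"
  bin="${bin%/bin/java}"   # strip the trailing /bin/java
  bin="${bin%/jre}"        # <jdk>/jre -> <jdk>
  echo "$bin"
}
```

Usage: `export JAVA_HOME="$(java_home_from_bin "$(readlink -f "$(command -v java)")")"`.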

cd /opt
wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz
tar -zxvf hadoop-3.1.2.tar.gz
cd hadoop-3.1.2/etc/hadoop/

Six files need to be modified: hadoop-env.sh, core-site.xml, hdfs-site.xml, yarn-site.xml, mapred-site.xml and workers.

vim hadoop-env.sh

# must point to the JDK installed above (a second, conflicting JAVA_HOME line would override it)
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64
# run the daemons as the hadoop user; Hadoop 3 start scripts abort when run as root without these
export HDFS_NAMENODE_USER="hadoop"
export HDFS_DATANODE_USER="hadoop"
export HDFS_SECONDARYNAMENODE_USER="hadoop"
export YARN_RESOURCEMANAGER_USER="hadoop"
export YARN_NODEMANAGER_USER="hadoop"

vim core-site.xml

<configuration>
  <!-- URI (scheme) of the default file system used by Hadoop -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://HadoopMaster:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>

vim hdfs-site.xml

<configuration>
  <!-- Configurations for NameNode: -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/opt/hadoop-3.1.2/hdfs/name/</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>268435456</value>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>100</value>
  </property>
  <!-- Configurations for DataNode: -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/opt/hadoop-3.1.2/hdfs/data/</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>
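`dfs.blocksize` is given in bytes, so 268435456 is 256 MiB (256 * 1024 * 1024), twice the 128 MiB (134217728) default. Hadoop also accepts suffixed values such as `256m` for this property. The conversion as a one-line shell helper (the function name is illustrative only):

```bash
#!/usr/bin/env bash
# Convert mebibytes to the byte count expected by dfs.blocksize.
mib_to_bytes() { echo $(( $1 * 1024 * 1024 )); }
```

`mib_to_bytes 256` prints 268435456.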

vim yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>HadoopMaster</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

vim mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>HadoopMaster:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>HadoopMaster:19888</value>
  </property>
</configuration>

vim workers

HadoopSlave01

HadoopSlave02
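hdfs-site.xml above sets `dfs.replication` to 3, but the workers file lists only two DataNodes. HDFS does not place two replicas of the same block on one DataNode, so with this layout every block would remain under-replicated. Either lower the value to 2 or add a third DataNode. A small consistency check follows. It is a sketch: the function name is hypothetical, and the replication value is passed in by hand rather than read from the XML.

```bash
#!/usr/bin/env bash
# Warn when dfs.replication exceeds the number of DataNodes in the workers file.
# Usage: check_replication <workers-file> <replication>
check_replication() {
  local workers_file="$1" replication="$2" count
  # count lines that are neither blank nor comments
  count=$(grep -cvE '^[[:space:]]*(#|$)' "$workers_file")
  if [ "$replication" -gt "$count" ]; then
    echo "WARN: dfs.replication=$replication > $count DataNodes"
    return 1
  fi
  echo "OK: dfs.replication=$replication <= $count DataNodes"
}
```

Usage: `check_replication /opt/hadoop-3.1.2/etc/hadoop/workers 3`.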

Set this on every node:

vim /etc/profile

# append the following line
export PATH=$PATH:/opt/hadoop-3.1.2/bin:/opt/hadoop-3.1.2/sbin

source /etc/profile

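Run this on the Master only. HDFS must be formatted before its first start. Do this only on the initial deployment, because formatting again discards the existing NameNode metadata.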

hdfs namenode -format wmqhadoop
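Formatting an already-formatted NameNode generates a new cluster ID, after which the existing DataNodes refuse to register. A simple guard checks for the `current/VERSION` file that a successful format leaves in the name directory. This sketch assumes the directory matches `dfs.namenode.name.dir` from hdfs-site.xml. The function name and `DRY_RUN` switch are hypothetical, and `DRY_RUN` defaults to 1.

```bash
#!/usr/bin/env bash
# Format the NameNode only if its name directory holds no metadata yet.
format_namenode_once() {
  local name_dir="${1:-/opt/hadoop-3.1.2/hdfs/name}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir/current/VERSION exists; skipping"
    return 1
  fi
  echo "formatting $name_dir"
  # with DRY_RUN=1 (default) nothing is executed
  [ "${DRY_RUN:-1}" = "1" ] || hdfs namenode -format
}
```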

Output:

2019-07-03 03:30:52,013 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = HadoopMaster/172.31.58.154
STARTUP_MSG: args = [-format, wmqhadoop]
STARTUP_MSG: version = 3.1.2
STARTUP_MSG: classpath = /opt/hadoop-3.1.2/etc/hadoop:/opt/hadoop-3.1.2/share/hadoop/common/lib/jackson-annotations-2.7.8.jar:/opt/hadoop-3.1.2/share/hadoop/common/lib/re2j-1.1.jar:[... remaining jars under /opt/hadoop-3.1.2/share/hadoop/{common,hdfs,mapreduce,yarn} omitted ...]
STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a; compiled by 'sunilg' on 2019-01-29T01:39Z
STARTUP_MSG: java = 1.8.0_201
************************************************************/
2019-07-03 03:30:52,027 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
2019-07-03 03:30:52,166 INFO namenode.NameNode: createNameNode [-format, wmqhadoop]
2019-07-03 03:30:52,883 INFO common.Util: Assuming 'file' scheme for path /opt/hadoop-3.1.2/hdfs/name/ in configuration.
2019-07-03 03:30:52,886 INFO common.Util: Assuming 'file' scheme for path /opt/hadoop-3.1.2/hdfs/name/ in configuration.
Formatting using clusterid: CID-13c5e685-4d94-4dc5-984c-28e8001e9c1c
2019-07-03 03:30:52,940 INFO namenode.FSEditLog: Edit logging is async:true
2019-07-03 03:30:52,963 INFO namenode.FSNamesystem: KeyProvider: null
2019-07-03 03:30:52,965 INFO namenode.FSNamesystem: fsLock is fair: true
2019-07-03 03:30:52,967 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
2019-07-03 03:30:52,976 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
2019-07-03 03:30:52,976 INFO namenode.FSNamesystem: supergroup = supergroup
2019-07-03 03:30:52,976 INFO namenode.FSNamesystem: isPermissionEnabled = true
2019-07-03 03:30:52,976 INFO namenode.FSNamesystem: HA Enabled: false
2019-07-03 03:30:53,045 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
2019-07-03 03:30:53,067 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000
2019-07-03 03:30:53,067 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
2019-07-03 03:30:53,074 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
2019-07-03 03:30:53,074 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Jul 03 03:30:53
2019-07-03 03:30:53,077 INFO util.GSet: Computing capacity for map BlocksMap
2019-07-03 03:30:53,077 INFO util.GSet: VM type = 64-bit
2019-07-03 03:30:53,079 INFO util.GSet: 2.0% max memory 3.5 GB = 71.3 MB
2019-07-03 03:30:53,079 INFO util.GSet: capacity = 2^23 = 8388608 entries
2019-07-03 03:30:53,095 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false
2019-07-03 03:30:53,105 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS
2019-07-03 03:30:53,105 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
2019-07-03 03:30:53,105 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0
2019-07-03 03:30:53,105 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000
2019-07-03 03:30:53,105 INFO blockmanagement.BlockManager: defaultReplication = 3
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: maxReplication = 512
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: minReplication = 1
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: encryptDataTransfer = false
2019-07-03 03:30:53,106 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
2019-07-03 03:30:53,229 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215
2019-07-03 03:30:53,254 INFO util.GSet: Computing capacity for map INodeMap
2019-07-03 03:30:53,254 INFO util.GSet: VM type = 64-bit
2019-07-03 03:30:53,255 INFO util.GSet: 1.0% max memory 3.5 GB = 35.7 MB
2019-07-03 03:30:53,255 INFO util.GSet: capacity = 2^22 = 4194304 entries
2019-07-03 03:30:53,258 INFO namenode.FSDirectory: ACLs enabled? false
2019-07-03 03:30:53,258 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true
2019-07-03 03:30:53,258 INFO namenode.FSDirectory: XAttrs enabled? true
2019-07-03 03:30:53,258 INFO namenode.NameNode: Caching file names occurring more than 10 times
2019-07-03 03:30:53,267 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536
2019-07-03 03:30:53,270 INFO snapshot.SnapshotManager: SkipList is disabled
2019-07-03 03:30:53,276 INFO util.GSet: Computing capacity for map cachedBlocks
2019-07-03 03:30:53,276 INFO util.GSet: VM type = 64-bit
2019-07-03 03:30:53,277 INFO util.GSet: 0.25% max memory 3.5 GB = 8.9 MB
2019-07-03 03:30:53,277 INFO util.GSet: capacity = 2^20 = 1048576 entries
2019-07-03 03:30:53,289 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
2019-07-03 03:30:53,289 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
2019-07-03 03:30:53,289 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
2019-07-03 03:30:53,294 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
2019-07-03 03:30:53,295 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
2019-07-03 03:30:53,297 INFO util.GSet: Computing capacity for map NameNodeRetryCache
2019-07-03 03:30:53,297 INFO util.GSet: VM type = 64-bit
2019-07-03 03:30:53,298 INFO util.GSet: 0.029999999329447746% max memory 3.5 GB = 1.1 MB
2019-07-03 03:30:53,298 INFO util.GSet: capacity = 2^17 = 131072 entries
2019-07-03 03:30:53,334 INFO namenode.FSImage: Allocated new BlockPoolId: BP-768253091-172.31.58.154-1562124653324
2019-07-03 03:30:53,364 INFO common.Storage: Storage directory /opt/hadoop-3.1.2/hdfs/name has been successfully formatted.
2019-07-03 03:30:53,384 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/hadoop-3.1.2/hdfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
2019-07-03 03:30:53,532 INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop-3.1.2/hdfs/name/current/fsimage.ckpt_0000000000000000000 of size 388 bytes saved in 0 seconds .
2019-07-03 03:30:53,551 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2019-07-03 03:30:53,568 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at HadoopMaster/172.31.58.154
************************************************************/

cd /opt
chown -R hadoop:hadoop hadoop-3.1.2

start-dfs.sh

Output:

Starting namenodes on [HadoopMaster]
Last login: Wed Jul 3 04:53:01 UTC 2019 on pts/1
Starting datanodes
Last login: Wed Jul 3 04:57:30 UTC 2019 on pts/1
HadoopSlave02: WARNING: /opt/hadoop-3.1.2/logs does not exist. Creating.
HadoopSlave01: WARNING: /opt/hadoop-3.1.2/logs does not exist. Creating.
Starting secondary namenodes [ip-172-31-58-154]
Last login: Wed Jul 3 04:57:33 UTC 2019 on pts/1

start-yarn.sh

Output:

Starting resourcemanager
Last login: Wed Jul 3 04:57:37 UTC 2019 on pts/1
Starting nodemanagers
Last login: Wed Jul 3 05:01:36 UTC 2019 on pts/1

# On HadoopMaster:

jps

26817 NameNode
29159 Jps
27259 SecondaryNameNode
28702 ResourceManager

# On HadoopSlave01:

jps

22560 Jps
21106 DataNode
22406 NodeManager

# On HadoopSlave02:

jps

12772 NodeManager
8374 DataNode
13080 Jps
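Instead of reading `jps` output by eye on each node, the expected daemons can be checked with a small filter. This sketch assumes the role layout shown above: NameNode, SecondaryNameNode and ResourceManager on the master, and DataNode and NodeManager on the workers. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Read jps output from stdin and report any expected daemon that is missing.
# Returns 1 if at least one daemon is missing.
check_daemons() {
  local out missing=0 d
  out=$(cat)
  for d in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the class name as a whole field
    if ! echo "$out" | awk '{print $2}' | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: on the master, `jps | check_daemons NameNode SecondaryNameNode ResourceManager`. On each worker, `jps | check_daemons DataNode NodeManager`.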

stop-yarn.sh

stop-dfs.sh

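If something goes wrong, run the following commands to start over:

stop-dfs.sh                 # stop HDFS
cd /opt/hadoop-3.1.2/hdfs/
rm -r name                  # delete all existing HDFS metadata; on the other nodes, delete the data directory instead
hdfs namenode -format       # reformat the NameNode
start-dfs.sh                # start HDFS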
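The reset above also has to clear each DataNode's data directory. Otherwise the DataNodes keep the old cluster ID and refuse to join the re-formatted NameNode. The sketch below strings the steps together. It assumes passwordless SSH as the hadoop user and the directory layout from hdfs-site.xml. The function names are hypothetical. It destroys all HDFS data, so it only prints the commands unless `DRY_RUN=0`.

```bash
#!/usr/bin/env bash
# Print a command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run_or_echo() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Full HDFS reset: DESTROYS ALL HDFS DATA. Arguments are the worker hostnames.
reset_hdfs() {
  local base=/opt/hadoop-3.1.2/hdfs host
  run_or_echo stop-dfs.sh
  run_or_echo rm -rf "$base/name"
  for host in "$@"; do
    # DataNodes store the cluster ID under data/current; remove it with the blocks
    run_or_echo ssh "hadoop@$host" rm -rf "$base/data"
  done
  run_or_echo hdfs namenode -format
  run_or_echo start-dfs.sh
}
```

Usage: review the output of `reset_hdfs HadoopSlave01 HadoopSlave02`, then repeat with `DRY_RUN=0`.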

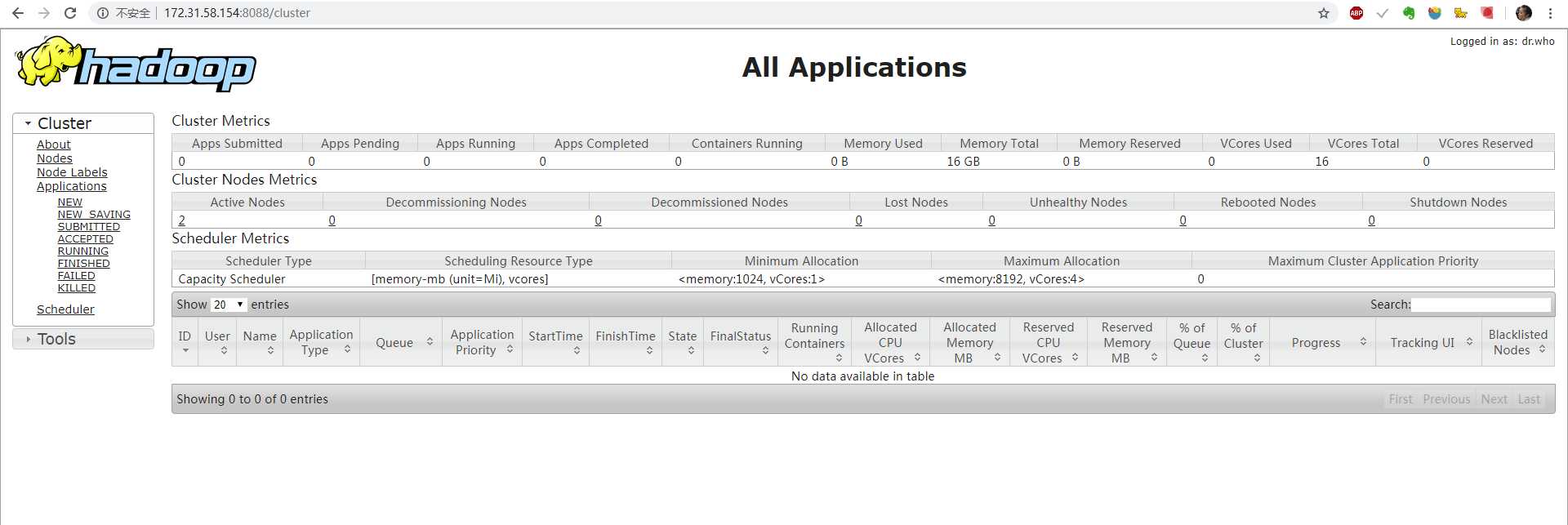

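1. View YARN in a browser: http://172.31.58.154:8088

2. Check the status of the Hadoop cluster

This command quickly shows which nodes are down, how much HDFS capacity there is and how much of it is used, and the disk usage of each node.

su - hadoop

hdfs dfsadmin -report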

Output:

Configured Capacity: 633928880128 (590.39 GB)
Present Capacity: 336200302592 (313.11 GB)
DFS Remaining: 336200245248 (313.11 GB)
DFS Used: 57344 (56 KB)
DFS Used%: 0.00%
Replicated Blocks:
  Under replicated blocks: 0
  Blocks with corrupt replicas: 0
  Missing blocks: 0
  Missing blocks (with replication factor 1): 0
  Low redundancy blocks with highest priority to recover: 0
  Pending deletion blocks: 0
Erasure Coded Block Groups:
  Low redundancy block groups: 0
  Block groups with corrupt internal blocks: 0
  Missing block groups: 0
  Low redundancy blocks with highest priority to recover: 0
  Pending deletion blocks: 0

-------------------------------------------------
Live datanodes (2):

Name: 172.31.1.11:9866 (HadoopSlave02)
Hostname: localhost
Decommission Status : Normal
Configured Capacity: 528308690944 (492.03 GB)
DFS Used: 28672 (28 KB)
Non DFS Used: 262149668864 (244.15 GB)
DFS Remaining: 239298883584 (222.86 GB)
DFS Used%: 0.00%
DFS Remaining%: 45.30%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Wed Jul 03 07:13:50 UTC 2019
Last Block Report: Wed Jul 03 07:11:56 UTC 2019
Num of Blocks: 0

Name: 172.31.51.195:9866 (HadoopSlave01)
Hostname: HadoopSlave01
Decommission Status : Normal
Configured Capacity: 105620189184 (98.37 GB)
DFS Used: 28672 (28 KB)
Non DFS Used: 8616144896 (8.02 GB)
DFS Remaining: 96901361664 (90.25 GB)
DFS Used%: 0.00%
DFS Remaining%: 91.75%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Wed Jul 03 07:13:49 UTC 2019
Last Block Report: Wed Jul 03 07:11:58 UTC 2019
Num of Blocks: 0
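For a quick scripted health check, the number of live DataNodes can be pulled out of the report's `Live datanodes (N):` line and compared with the number of workers. This is a sketch. The function name is hypothetical, and it relies only on the line format shown in the output above.

```bash
#!/usr/bin/env bash
# Read `hdfs dfsadmin -report` output from stdin and print the live DataNode count.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9]*\)):.*/\1/p'
}
```

Usage: `n=$(hdfs dfsadmin -report 2>/dev/null | live_datanodes); [ "$n" -eq 2 ] || echo "only $n DataNodes are alive"`.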

1. Install MySQL

yum install mysql-server

service mysqld start

2. Create the database

mysql -uroot -p

# Create the database

create database hive;

# Create the user

grant all on hive.* to hadoop@'%' identified by 'hive2019';

flush privileges;
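The same statements can be fed to the mysql client non-interactively. In this sketch the function name is hypothetical, and the defaults are the database, user and password used above. Change the password for any real deployment.

```bash
#!/usr/bin/env bash
# Print the metastore setup SQL for a database, user and password.
hive_metastore_sql() {
  local db="${1:-hive}" user="${2:-hadoop}" pass="${3:-hive2019}"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}
```

Usage: `hive_metastore_sql | mysql -uroot -p`. `GRANT ... IDENTIFIED BY` works on the MySQL 5.6 used here. MySQL 8.0 removed that form, so there the user would have to be created first with `CREATE USER`.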

Hive needs to be installed on only one node, and any node will do.

1. Download

cd /opt
wget https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-3.1.1/apache-hive-3.1.1-bin.tar.gz
tar -zxvf apache-hive-3.1.1-bin.tar.gz
mv apache-hive-3.1.1-bin hive-3.1.1

2. Environment variables

vim /etc/profile

# append the following line
export PATH=$PATH:/opt/hive-3.1.1/bin

source /etc/profile

Hive connects to MySQL through JDBC, so copy the MySQL Connector/J jar into Hive's lib directory:

cd /opt
wget http://mirrors.163.com/mysql/Downloads/Connector-J/mysql-connector-java-5.1.47.tar.gz
tar -zxvf mysql-connector-java-5.1.47.tar.gz
cp mysql-connector-java-5.1.47/mysql-connector-java-5.1.47-bin.jar /opt/hive-3.1.1/lib/

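1. Create the directories

mkdir /opt/hive-3.1.1/warehouse
hadoop fs -mkdir -p /opt/hive-3.1.1/warehouse
hadoop fs -chmod 777 /opt/hive-3.1.1/warehouse
hadoop fs -ls /opt/hive-3.1.1/

Note that the hive-site.xml below does not set hive.metastore.warehouse.dir, so Hive keeps using its default warehouse location, /user/hive/warehouse on HDFS. Set that property to /opt/hive-3.1.1/warehouse if Hive should use the directory created here.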

2. Copy the configuration files

cd /opt/hive-3.1.1/conf/
cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties
cp hive-log4j2.properties.template hive-log4j2.properties
cp hive-default.xml.template hive-default.xml
cp hive-default.xml.template hive-site.xml
cp hive-env.sh.template hive-env.sh
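The template copies follow one pattern: strip the `.template` suffix. A loop covers them all. The sketch below skips files that already exist, so local edits are not overwritten. The function name is hypothetical. The loop does not create hive-site.xml, which is written by hand in step 4.

```bash
#!/usr/bin/env bash
# Copy every *.template in a directory to the same name without the suffix,
# leaving files that already exist untouched.
copy_templates() {
  local dir="$1" tpl target
  for tpl in "$dir"/*.template; do
    [ -e "$tpl" ] || continue   # no matches: the glob stays literal
    target="${tpl%.template}"
    [ -e "$target" ] || cp "$tpl" "$target"
  done
}
```

Usage: `copy_templates /opt/hive-3.1.1/conf`.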

3. Edit hive-env.sh

vim hive-env.sh

HADOOP_HOME=/opt/hadoop-3.1.2
export HIVE_CONF_DIR=/opt/hive-3.1.1/conf
export HIVE_AUX_JARS_PATH=/opt/hive-3.1.1/lib

4. Write hive-site.xml

vim hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- MySQL metastore; inside XML, each & in the URL must be written as &amp; -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://172.31.48.30:13306/hive?allowMultiQueries=true&amp;useSSL=false&amp;verifyServerCertificate=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hadoop</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive2019</value>
  </property>
  <property>
    <name>datanucleus.readOnlyDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.fixedDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.autoCreateSchema</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateTables</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateColumns</name>
    <value>true</value>
  </property>
</configuration>
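In an XML file such as hive-site.xml, a bare `&` starts an entity reference. A JDBC URL with several query parameters must therefore have each `&` written as `&amp;`, or Hadoop's configuration parser rejects the file as malformed. The sketch below escapes a value before it is pasted into the file. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Escape a value for use as XML element text.
# & is replaced first so the &amp; it produces is not escaped again.
xml_escape() {
  printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}
```

Usage: `xml_escape 'jdbc:mysql://172.31.48.30:13306/hive?allowMultiQueries=true&useSSL=false&verifyServerCertificate=false'`.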

schematool -initSchema -dbType mysql

Output:

SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive-3.1.1/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Metastore connection URL: jdbc:mysql://172.31.48.30:13306/hive?allowMultiQueries=true&useSSL=false&verifyServerCertificate=false
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: hadoop
Starting metastore schema initialization to 3.1.0
Initialization script hive-schema-3.1.0.mysql.sql
Initialization script completed
schemaTool completed

2.6 Verify Hive

hive

Output:

SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive-3.1.1/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
which: no hbase in (/usr/local/sbin:/sbin:/bin:/usr/sbin:/usr/bin:/opt/aws/bin:/opt/aws/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64//bin:/opt/aws/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64/bin:/opt/aws/bin:/opt/hadoop-3.1.2/bin:/opt/hadoop-3.1.2/sbin:/opt/aws/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64/bin:/opt/hadoop-3.1.2/bin:/opt/hadoop-3.1.2/sbin:/opt/aws/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-0.43.amzn1.x86_64/bin:/opt/hadoop-3.1.2/bin:/opt/hadoop-3.1.2/sbin:/opt/hive-3.1.1/bin)
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive-3.1.1/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Hive Session ID = 3ef10f1f-826d-4a1b-87a8-c083c3ecac4a

Logging initialized using configuration in file:/opt/hive-3.1.1/conf/hive-log4j2.properties Async: true
Hive Session ID = a6521e70-9e95-4f04-b8ae-e1b8fc3949b4
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive>
hive> show databases;
OK
default
Time taken: 1.522 seconds, Fetched: 1 row(s)
hive> quit;

Logging in to MySQL at this point shows that the table structure has been created in the hive database:

mysql> use hive;
Database changed
mysql>
mysql> show tables;
+-------------------------------+
| Tables_in_hive                |
+-------------------------------+
| AUX_TABLE                     |
| BUCKETING_COLS                |
| CDS                           |
| COLUMNS_V2                    |
| COMPACTION_QUEUE              |
| COMPLETED_COMPACTIONS         |
| COMPLETED_TXN_COMPONENTS      |
| CTLGS                         |
| DATABASE_PARAMS               |
| DBS                           |
| DB_PRIVS                      |
| DELEGATION_TOKENS             |
| FUNCS                         |
| FUNC_RU                       |
| GLOBAL_PRIVS                  |
| HIVE_LOCKS                    |
| IDXS                          |
| INDEX_PARAMS                  |
| I_SCHEMA                      |
| KEY_CONSTRAINTS               |
| MASTER_KEYS                   |
| MATERIALIZATION_REBUILD_LOCKS |
| METASTORE_DB_PROPERTIES       |
| MIN_HISTORY_LEVEL             |
| MV_CREATION_METADATA          |
| MV_TABLES_USED                |
| NEXT_COMPACTION_QUEUE_ID      |
| NEXT_LOCK_ID                  |
| NEXT_TXN_ID                   |
| NEXT_WRITE_ID                 |
| NOTIFICATION_LOG              |
| NOTIFICATION_SEQUENCE         |
| NUCLEUS_TABLES                |
| PARTITIONS                    |
| PARTITION_EVENTS              |
| PARTITION_KEYS                |
| PARTITION_KEY_VALS            |
| PARTITION_PARAMS              |
| PART_COL_PRIVS                |
| PART_COL_STATS                |
| PART_PRIVS                    |
| REPL_TXN_MAP                  |
| ROLES                         |
| ROLE_MAP                      |
| RUNTIME_STATS                 |
| SCHEMA_VERSION                |
| SDS                           |
| SD_PARAMS                     |
| SEQUENCE_TABLE                |
| SERDES                        |
| SERDE_PARAMS                  |
| SKEWED_COL_NAMES              |
| SKEWED_COL_VALUE_LOC_MAP      |
| SKEWED_STRING_LIST            |
| SKEWED_STRING_LIST_VALUES     |
| SKEWED_VALUES                 |
| SORT_COLS                     |
| TABLE_PARAMS                  |
| TAB_COL_STATS                 |
| TBLS                          |
| TBL_COL_PRIVS                 |
| TBL_PRIVS                     |
| TXNS                          |
| TXN_COMPONENTS                |
| TXN_TO_WRITE_ID               |
| TYPES                         |
| TYPE_FIELDS                   |
| VERSION                       |
| WM_MAPPING                    |
| WM_POOL                       |
| WM_POOL_TO_TRIGGER            |
| WM_RESOURCEPLAN               |
| WM_TRIGGER                    |
| WRITE_SET                     |
+-------------------------------+
74 rows in set (0.00 sec)


Original article: https://www.cnblogs.com/weavepub/p/11130869.html