标签:pre tps url 图片 rom origin tree retrieve windows

import requests,lxml

images_url=‘https://www.fabiaoqing.com/search/search/keyword/%E8%9C%A1%E7%AC%94%E5%B0%8F%E6%96%B0/type/bq/page/1.html‘

head={‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36‘}

req_url_images=requests.get(url=images_url,headers=head)

req_url_images.text

from lxml import etree

html_images=etree.HTML(req_url_images.text)

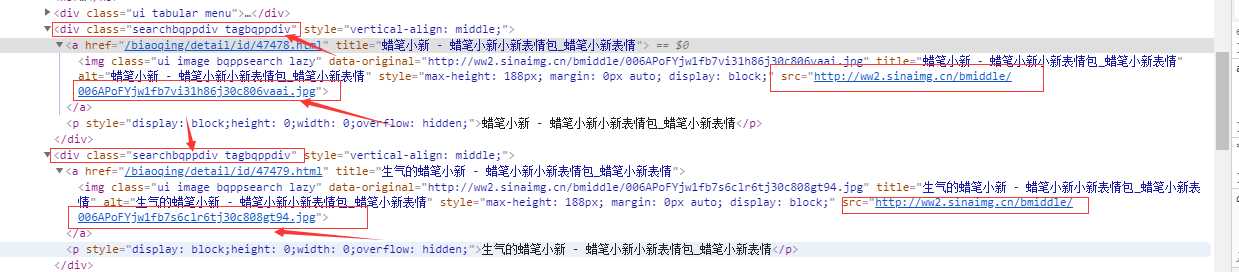

images_get="//div[@class=‘searchbqppdiv tagbqppdiv‘]//img/@data-original"

images_last=html_images.xpath(images_get)

#用ulllib自带的保存文件的方式去保存

from urllib import request

#索引出序列用来做图片名字

Indexes=1

for images_save in images_last:

#这个网站的图片都是以jpg结尾比较简单

request.urlretrieve(images_save,r"image/"+‘%s.jpg‘%Indexes)

Indexes+=1

from urllib import request

from lxml import etree

images_url=‘https://www.fabiaoqing.com/search/search/keyword/%E8%9C%A1%E7%AC%94%E5%B0%8F%E6%96%B0/type/bq/page/1.html‘

head={‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36‘}

new_way=request.Request(url=images_url,headers=head)

req_url_images=request.urlopen(new_way)

html_images=etree.HTML(req_url_images.read().decode(‘utf-8‘))

images_get="//div[@class=‘searchbqppdiv tagbqppdiv‘]//img/@data-original"

images_last=html_images.xpath(images_get)

#用ulllib自带的保存文件的方式去保存

#索引出序列用来做图片名字

Indexes=1

for images_save in images_last:

#这个网站的图片都是以jpg结尾比较简单

request.urlretrieve(images_save,r"image/"+‘%s.jpg‘%Indexes)

Indexes+=1

pages = 1

Indexes = 1

while pages < 11:

import requests, lxml

images_url = ‘https://www.fabiaoqing.com/search/search/keyword/%E8%9C%A1%E7%AC%94%E5%B0%8F%E6%96%B0/type/bq/page/‘ + ‘%s.html‘ % pages

head = {

‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36‘}

req_url_images = requests.get(url=images_url, headers=head)

req_url_images.text

pages += 1

from lxml import etree

html_images = etree.HTML(req_url_images.text)

images_get = "//div[@class=‘searchbqppdiv tagbqppdiv‘]//img/@data-original"

images_last = html_images.xpath(images_get)

# 用ulllib自带的保存文件的方式去保存

from urllib import request

# 索引出序列用来做图片名字

for images_save in images_last:

# 这个网站的图片都是以jpg结尾比较简单

request.urlretrieve(images_save, r"image/" + ‘%s.jpg‘ % Indexes)

print(‘已经爬取了%s张‘ % Indexes)

Indexes += 1

from urllib import request

from lxml import etree

pages=1

Indexes=1

while pages<11:

images_url=‘https://www.fabiaoqing.com/search/search/keyword/%E8%9C%A1%E7%AC%94%E5%B0%8F%E6%96%B0/type/bq/page/‘+‘%s.html‘%pages

head={‘User-Agent‘: ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36‘}

new_way=request.Request(url=images_url,headers=head)

req_url_images=request.urlopen(new_way)

html_images=etree.HTML(req_url_images.read().decode(‘utf-8‘))

images_get="//div[@class=‘searchbqppdiv tagbqppdiv‘]//img/@data-original"

images_last=html_images.xpath(images_get)

#用ulllib自带的保存文件的方式去保存

#索引出序列用来做图片名字

count=1

for images_save in images_last:

# 这个网站的图片都是以jpg结尾比较简单

request.urlretrieve(images_save,"image/"+‘%s.jpg‘%Indexes)

print(‘已经爬取了%s张‘%Indexes)

Indexes+=1

pages+=1

标签:pre tps url 图片 rom origin tree retrieve windows

原文地址:https://www.cnblogs.com/lcyzblog/p/11285962.html