
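In a K12 online education company, some business scenarios call for real-time statistics and analysis: the number of teachers and students currently in class, real-time sales, the classroom crash rate, and so on. These metrics need to surface class-quality problems as they happen and give a rough picture of how the whole business is doing.

We compared a number of solutions; the following are listed for reference.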

| Solution | Real-time ingestion | SQL support |
|---|---|---|
| Spark+CarbonData | Supported | Rich Spark SQL syntax |
| Kylin | Not supported | Supports join |
| Flink+Druid | Supported | No SQL before 0.15; no join |

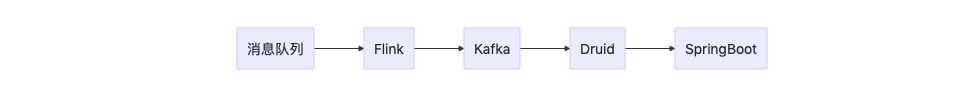

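Real-time processing is done with Flink SQL. For real-time ingestion into Druid we use druid-kafka-indexing-service. The other ingestion option, Tranquility, showed many problems in testing and was dropped. The data flow is shown in the accompanying figure.

The example here computes the classroom disconnect rate in real time. It relies on two tracking events that are reported separately: entering the classroom and disconnecting.

The events are reported as JSON. The required fields are parsed out and sent to Kafka; these are also the fields the Druid data source is designed around: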

sysTime: DateTime format

pt: formatted as yyyy-MM-dd

eventId: event type (enterRoom|disconnect)

lessonId: lesson ID
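The parsing step can be sketched as follows. This is a minimal illustration, not the author's actual job (which runs in Flink): the raw key names `timestamp`, `event` and `lessonId`, the epoch-millisecond input, and the use of UTC for `pt` are all assumptions, since the post does not show the raw payload. The function only builds the message value; handing it to a Kafka producer is left out.

```python
import json
from datetime import datetime, timezone

# Hypothetical key names of the raw tracking payload (not shown in the post).
RAW_TIME_KEY = "timestamp"    # assumed to be epoch milliseconds
RAW_EVENT_KEY = "event"
RAW_LESSON_KEY = "lessonId"

VALID_EVENTS = {"enterRoom", "disconnect"}


def extract_fields(raw: str):
    """Return the four-field record as a dict, or None for events we do not need."""
    payload = json.loads(raw)
    event_id = payload.get(RAW_EVENT_KEY)
    if event_id not in VALID_EVENTS:
        return None
    millis = int(payload[RAW_TIME_KEY])
    # Integer arithmetic avoids float rounding of the millisecond part.
    ts = datetime.fromtimestamp(millis // 1000, tz=timezone.utc)
    ts = ts.replace(microsecond=(millis % 1000) * 1000)
    return {
        "sysTime": ts.strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z",
        "pt": ts.strftime("%Y-%m-%d"),  # UTC date; a local time zone may be wanted instead
        "eventId": event_id,
        "lessonId": str(payload[RAW_LESSON_KEY]),
    }


def to_kafka_value(record: dict) -> bytes:
    """Serialize a record as the UTF-8 JSON bytes that would be sent to Kafka."""
    return json.dumps(record, separators=(",", ":")).encode("utf-8")
```

Events other than `enterRoom` and `disconnect` are dropped here so that only the two events needed for the disconnect rate reach the topic.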

Start a Druid supervisor to consume the data from Kafka, with pre-aggregation (rollup) enabled. The configuration is as follows:

    {
      "type": "kafka",
      "dataSchema": {
        "dataSource": "sac_core_analyze_v1",
        "parser": {
          "parseSpec": {
            "dimensionsSpec": {
              "spatialDimensions": [],
              "dimensions": ["eventId", "pt"]
            },
            "format": "json",
            "timestampSpec": { "column": "sysTime", "format": "auto" }
          },
          "type": "string"
        },
        "metricsSpec": [
          {
            "filter": { "type": "selector", "dimension": "msg_type", "value": "disconnect" },
            "aggregator": {
              "name": "lesson_offline_molecule_id",
              "type": "cardinality",
              "fields": ["lesson_id"]
            },
            "type": "filtered"
          },
          {
            "filter": { "type": "selector", "dimension": "msg_type", "value": "enterRoom" },
            "aggregator": {
              "name": "lesson_offline_denominator_id",
              "type": "cardinality",
              "fields": ["lesson_id"]
            },
            "type": "filtered"
          }
        ],
        "granularitySpec": {
          "type": "uniform",
          "segmentGranularity": "DAY",
          "queryGranularity": { "type": "none" },
          "rollup": true,
          "intervals": null
        },
        "transformSpec": { "filter": null, "transforms": [] }
      },
      "tuningConfig": {
        "type": "kafka",
        "maxRowsInMemory": 1000000,
        "maxBytesInMemory": 0,
        "maxRowsPerSegment": 5000000,
        "maxTotalRows": null,
        "intermediatePersistPeriod": "PT10M",
        "basePersistDirectory": "/tmp/1564535441619-2",
        "maxPendingPersists": 0,
        "indexSpec": {
          "bitmap": { "type": "concise" },
          "dimensionCompression": "lz4",
          "metricCompression": "lz4",
          "longEncoding": "longs"
        },
        "buildV9Directly": true,
        "reportParseExceptions": false,
        "handoffConditionTimeout": 0,
        "resetOffsetAutomatically": false,
        "segmentWriteOutMediumFactory": null,
        "workerThreads": null,
        "chatThreads": null,
        "chatRetries": 8,
        "httpTimeout": "PT10S",
        "shutdownTimeout": "PT80S",
        "offsetFetchPeriod": "PT30S",
        "intermediateHandoffPeriod": "P2147483647D",
        "logParseExceptions": false,
        "maxParseExceptions": 2147483647,
        "maxSavedParseExceptions": 0,
        "skipSequenceNumberAvailabilityCheck": false
      },
      "ioConfig": {
        "topic": "sac_druid_analyze_v2",
        "replicas": 2,
        "taskCount": 1,
        "taskDuration": "PT600S",
        "consumerProperties": {
          "bootstrap.servers": "bd-prod-kafka01:9092,bd-prod-kafka02:9092,bd-prod-kafka03:9092"
        },
        "pollTimeout": 100,
        "startDelay": "PT5S",
        "period": "PT30S",
        "useEarliestOffset": false,
        "completionTimeout": "PT1200S",
        "lateMessageRejectionPeriod": null,
        "earlyMessageRejectionPeriod": null,
        "stream": "sac_druid_analyze_v2",
        "useEarliestSequenceNumber": false
      },
      "context": null,
      "suspended": false
    }

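The most important part of the configuration is metricsSpec, which defines the pre-aggregated metrics and the filter condition applied to each of them. Note that the filters in this spec reference msg_type and lesson_id, whereas the field list above names eventId and lessonId; the names used in the spec have to match the keys actually present in the Kafka messages.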
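A supervisor spec like this is typically submitted to the Druid Overlord with a POST to `/druid/indexer/v1/supervisor`. The sketch below shows one way to do that using only the Python standard library; the Overlord address is a placeholder, and the post does not say how the author actually submitted the spec.

```python
import json
import urllib.request

# Hypothetical Overlord address; replace with the real host and port.
OVERLORD_URL = "http://overlord.example.com:8090"


def build_supervisor_request(spec: dict, overlord_url: str = OVERLORD_URL) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates or updates a supervisor."""
    return urllib.request.Request(
        url=overlord_url.rstrip("/") + "/druid/indexer/v1/supervisor",
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_supervisor(spec: dict, overlord_url: str = OVERLORD_URL) -> dict:
    """Send the spec to the Overlord and return the decoded JSON response."""
    request = build_supervisor_request(spec, overlord_url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

Building the request separately from sending it makes the URL and payload easy to inspect before touching a production cluster.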

The stored data looks like this:

| pt | eventId | lesson_offline_molecule_id | lesson_offline_denominator_id |
|---|---|---|---|
| 2019-08-09 | enterRoom | "AQAAAAAAAA==" | "AQAAAAAAAA==" |
| 2019-08-09 | disconnect | "AQAAAAAAAA==" | "AQAAAAAAAA==" |
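The metric columns presumably hold serialized cardinality sketches rather than raw lesson IDs, which is why they show up as opaque strings. To make the idea of filtered pre-aggregation concrete, here is a simplified model that swaps the approximate sketch for an exact Python `set`. It assumes the filters match on `eventId` and the distinct count is taken over `lessonId`, and it groups only by `(pt, eventId)`, ignoring the timestamp that Druid also includes in the rollup key.

```python
from collections import defaultdict

NUMERATOR = "lesson_offline_molecule_id"       # lessons with a disconnect
DENOMINATOR = "lesson_offline_denominator_id"  # lessons with an enterRoom


def rollup(rows):
    """Group rows by (pt, eventId) and keep the distinct lesson IDs per filtered metric."""
    table = defaultdict(lambda: {NUMERATOR: set(), DENOMINATOR: set()})
    for row in rows:
        bucket = table[(row["pt"], row["eventId"])]
        if row["eventId"] == "disconnect":
            bucket[NUMERATOR].add(row["lessonId"])
        elif row["eventId"] == "enterRoom":
            bucket[DENOMINATOR].add(row["lessonId"])
    return dict(table)


def disconnect_rate(table, pt):
    """Merge the per-row sets for one day and return distinct disconnects / distinct entries."""
    numerator, denominator = set(), set()
    for (row_pt, _event_id), metrics in table.items():
        if row_pt == pt:
            numerator |= metrics[NUMERATOR]
            denominator |= metrics[DENOMINATOR]
    if not denominator:
        return None
    return len(numerator) / len(denominator)
```

Merging sets at query time plays the role that merging sketches plays in Druid: the pre-aggregated rows stay small, and the distinct count is still available per day.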

The result can be computed with SQL like this:

SELECT pt, CAST(APPROX_COUNT_DISTINCT(lesson_offline_molecule_id) AS DOUBLE) / CAST(APPROX_COUNT_DISTINCT(lesson_offline_denominator_id) AS DOUBLE) FROM sac_core_analyze_v1 GROUP BY pt

The result can be queried through Druid's query API, which is very convenient.
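As one example of querying through the API, Druid exposes a SQL endpoint at `/druid/v2/sql` that accepts a JSON body with a `query` field. The sketch below builds such a request with the standard library; the Broker address is a placeholder, and the `disconnect_rate` column alias is added here for readability and is not part of the original query.

```python
import json
import urllib.request

# Hypothetical Broker (or Router) address; replace with the real one.
BROKER_URL = "http://broker.example.com:8082"

DISCONNECT_RATE_SQL = (
    "SELECT pt, "
    "CAST(APPROX_COUNT_DISTINCT(lesson_offline_molecule_id) AS DOUBLE) / "
    "CAST(APPROX_COUNT_DISTINCT(lesson_offline_denominator_id) AS DOUBLE) "
    "AS disconnect_rate "  # alias added for this sketch
    "FROM sac_core_analyze_v1 GROUP BY pt"
)


def build_sql_request(sql: str, broker_url: str = BROKER_URL) -> urllib.request.Request:
    """Build (but do not send) a Druid SQL request."""
    return urllib.request.Request(
        url=broker_url.rstrip("/") + "/druid/v2/sql",
        data=json.dumps({"query": sql}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_sql(sql: str, broker_url: str = BROKER_URL):
    """Send the query and return the decoded JSON result."""
    with urllib.request.urlopen(build_sql_request(sql, broker_url), timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `run_sql(DISCONNECT_RATE_SQL)` against a reachable Broker would return the per-day rates as decoded JSON.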


Original article: https://www.cnblogs.com/ChouYarn/p/11328900.html