Tags: function duration throw action integer cas doc ash void

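Discretized ("split into discrete pieces"): the "D" in DStream (discretized stream), Spark Streaming's basic abstraction. Internally a DStream is a sequence of RDDs, one per batch interval. See http://spark.apache.org/docs/1.6.0/streaming-programming-guide.html#overview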
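The next example is a rough illustration of what "discretized" means. It cuts a stream of timestamped lines into fixed 5-second batches, and each batch would correspond to one RDD in a DStream. It is plain Java with no Spark dependency. The `Event` class and the timestamps are made up for illustration. Unlike Spark, which still produces a (possibly empty) RDD for every interval, this sketch simply leaves out intervals that received no data.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class DiscretizeSketch {
    // Hypothetical record: arrival time in milliseconds plus the received text line.
    static final class Event {
        final long timeMs;
        final String line;

        Event(long timeMs, String line) {
            this.timeMs = timeMs;
            this.line = line;
        }
    }

    // Put each event into the batch whose interval [start, start + batchMs) contains it.
    // The result (batch start time -> lines) stands in for the sequence of RDDs of a DStream.
    // Intervals with no events are simply absent here (Spark would still create an empty RDD).
    static Map<Long, List<String>> discretize(List<Event> events, long batchMs) {
        Map<Long, List<String>> batches = new TreeMap<>();
        for (Event e : events) {
            long batchStart = (e.timeMs / batchMs) * batchMs;
            batches.computeIfAbsent(batchStart, k -> new ArrayList<>()).add(e.line);
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Event> events = new ArrayList<>();
        events.add(new Event(1_000, "hello sxt"));
        events.add(new Event(4_000, "hello bj"));
        events.add(new Event(6_500, "hello"));
        events.add(new Event(12_000, "zhongguo"));
        // 5-second batches, matching Durations.seconds(5) in the streaming program
        System.out.println(discretize(events, 5_000));
        // prints {0=[hello sxt, hello bj], 5000=[hello], 10000=[zhongguo]}
    }
}
```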

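On node5, install netcat and start a server listening on port 9999 (-l: listen mode; -k: keep accepting new connections instead of exiting after the first one ends):

[root@node5 ~]# yum install nc

[root@node5 ~]# nc -lk 9999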
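`socketTextStream` connects to this listener as a TCP client and turns the incoming bytes into lines of text. The following plain-Java sketch shows only that client side. It runs against a one-shot in-process server that stands in for `nc -l`. The class and method names are hypothetical, and this is not Spark's actual receiver code.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class SocketLinesSketch {
    // Client side: connect to host:port and read UTF-8 text line by line
    // until the server closes the connection.
    static List<String> readLines(String host, int port) throws IOException {
        List<String> lines = new ArrayList<>();
        try (Socket socket = new Socket(host, port);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    // Server side: a one-shot stand-in for `nc -l` (no -k). It sends the given lines
    // to the first client that connects and then closes the connection.
    static Thread serveOnce(ServerSocket server, List<String> toSend) {
        Thread t = new Thread(() -> {
            try (Socket client = server.accept();
                 PrintWriter out = new PrintWriter(
                         new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true)) {
                for (String s : toSend) {
                    out.println(s);
                }
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
        t.start();
        return t;
    }

    public static void main(String[] args) throws Exception {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Port 0 asks the OS for any free port.
        try (ServerSocket server = new ServerSocket(0, 50, loopback)) {
            Thread sender = serveOnce(server, Arrays.asList("hello sxt", "hello bj"));
            List<String> received = readLines(loopback.getHostAddress(), server.getLocalPort());
            sender.join();
            System.out.println(received); // prints [hello sxt, hello bj]
        }
    }
}
```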

package com.bjsxt.spark;

import java.util.Arrays;

import org.apache.spark.SparkConf;

import org.apache.spark.SparkContext;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.api.java.function.VoidFunction;

import org.apache.spark.broadcast.Broadcast;

import org.apache.spark.streaming.Duration;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaReceiverInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import scala.Tuple2;

public class SparkStreamingTest {

public static void main(String[] args) {

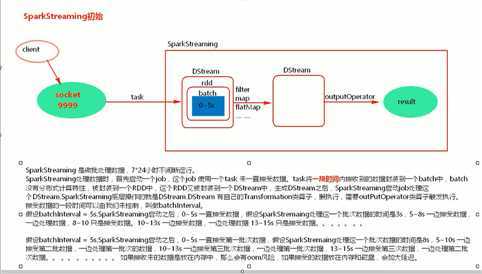

// local[1] 时只有一个task,被占用为接收数据,打印输出没有任务线程执行

SparkConf conf = new SparkConf().setMaster("local[2]").setAppName("SparkStreamingTest");

final JavaSparkContext sc = new JavaSparkContext(conf);

sc.setLogLevel("WARN");

JavaStreamingContext jsc = new JavaStreamingContext(sc,Durations.seconds(5));

JavaReceiverInputDStream<String> socketTextStream = jsc.socketTextStream("node5",9999);

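        // Split each line into words. In the Spark 1.x Java API, FlatMapFunction.call returns
        // an Iterable; from Spark 2.0 on it returns an Iterator instead.
        JavaDStream<String> words = socketTextStream.flatMap(new FlatMapFunction<String, String>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Iterable<String> call(String line) throws Exception {
                return Arrays.asList(line.split(" "));
            }
        });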

        // Map each word to a (word, 1) pair.
        JavaPairDStream<String, Integer> pairWords = words.mapToPair(new PairFunction<String, String, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Tuple2<String, Integer> call(String word) throws Exception {
                return new Tuple2<String, Integer>(word, 1);
            }
        });

        // Sum the counts per word within each batch. This function runs on the executors.
        JavaPairDStream<String, Integer> reduceByKey = pairWords.reduceByKey(new Function2<Integer, Integer, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Integer call(Integer v1, Integer v2) throws Exception {
                System.out.println("rdd reduceByKey************************");
                return v1 + v2;
            }
        });

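        // reduceByKey.print(1000);

        /*
         * foreachRDD gives access to each RDD in the DStream. Transformation operators can be applied
         * to that RDD, but an action must be called on it to trigger execution; without an action,
         * the received data is never actually processed.
         *
         * Inside foreachRDD's call method, code outside the operators applied to the RDD runs on the
         * Driver, once per batch. This makes foreachRDD a place to change a broadcast variable
         * dynamically, for example by re-reading a configuration file every batch and broadcasting
         * the new value.
         */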
        reduceByKey.foreachRDD(new VoidFunction<JavaPairRDD<String, Integer>>() {
            private static final long serialVersionUID = 1L;

            @Override
            public void call(JavaPairRDD<String, Integer> rdd) throws Exception {
                // Runs on the Driver. This is the correct way to get the SparkContext here (from the RDD),
                // and the correct place to create and read a broadcast variable.
                SparkContext context = rdd.context();
                JavaSparkContext sc = new JavaSparkContext(context);
                Broadcast<String> broadcast = sc.broadcast("hello");
                String value = broadcast.value();
                System.out.println("Driver.........");

                // The function inside mapToPair runs on the Executors, once per record.
                JavaPairRDD<String, Integer> mapToPair = rdd.mapToPair(new PairFunction<Tuple2<String, Integer>, String, Integer>() {
                    private static final long serialVersionUID = 1L;

                    @Override
                    public Tuple2<String, Integer> call(Tuple2<String, Integer> tuple) throws Exception {
                        System.out.println("Executor.........");
                        return new Tuple2<String, Integer>(tuple._1, tuple._2);
                    }
                });

                // foreach is an action: it triggers the job that actually processes this batch.
                mapToPair.foreach(new VoidFunction<Tuple2<String, Integer>>() {
                    private static final long serialVersionUID = 1L;

                    @Override
                    public void call(Tuple2<String, Integer> pair) throws Exception {
                        System.out.println(pair);
                    }
                });
            }
        });

        // Start receiving and processing, then block until the streaming job is stopped or fails.
        jsc.start();
        jsc.awaitTermination();
        jsc.stop();
    }
}
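The `local[1]` comment in the program can be reproduced with an ordinary thread pool. This is an analogy, not Spark's scheduler. A never-ending "receiver" task holds one thread. With a pool of one thread, the "batch" task never starts. With two threads, it runs immediately. The Spark Streaming guide states the same rule for receiver-based inputs such as sockets: when running locally, use `local[n]` where n is greater than the number of receivers. The class and method names here are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class ReceiverSlotSketch {
    // Returns true if a "batch" task manages to run while a long-lived "receiver" task
    // holds one of the pool's threads. numThreads plays the role of n in local[n].
    static boolean batchRuns(int numThreads) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(numThreads);
        CountDownLatch stopReceiver = new CountDownLatch(1);
        try {
            // The receiver never finishes on its own: it blocks until told to stop,
            // like a receiver reading from a socket for the lifetime of the job.
            pool.submit(() -> {
                stopReceiver.await();
                return null;
            });
            Future<String> batch = pool.submit(() -> "batch output");
            try {
                batch.get(500, TimeUnit.MILLISECONDS);
                return true;
            } catch (TimeoutException | ExecutionException e) {
                return false; // the batch never got a thread within the timeout
            }
        } finally {
            stopReceiver.countDown();
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("1 thread:  batch runs? " + batchRuns(1)); // false
        System.out.println("2 threads: batch runs? " + batchRuns(2)); // true
    }
}
```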

[root@node5 ~]# nc -lk 9999    ## send socket data from Linux: each line typed here goes to the streaming job
hello sxt
hello bj
hello
hello
zhongguo
zhngguo
zhongguo
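Suppose all seven lines above fall into the same 5-second batch. In practice, typing them by hand usually spreads them over several batches, and each batch is counted separately. In that case the job would print one (word, count) tuple per distinct word. The plain-Java sketch below applies the same flatMap, mapToPair and reduceByKey steps to an in-memory list to show the expected counts. It is an illustration, not the Spark job itself. Note that `zhngguo`, spelled differently from `zhongguo`, is counted as a separate word.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class BatchWordCountSketch {
    // Same steps as the streaming job, applied to one batch of lines held in memory.
    static Map<String, Integer> countWords(List<String> batchLines) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted only to make the output stable
        for (String line : batchLines) {
            for (String word : line.split(" ")) {      // flatMap: line -> words
                counts.merge(word, 1, Integer::sum);   // mapToPair (word, 1) + reduceByKey (sum)
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> batch = Arrays.asList(
                "hello sxt", "hello bj", "hello", "hello", "zhongguo", "zhngguo", "zhongguo");
        System.out.println(countWords(batch));
        // prints {bj=1, hello=4, sxt=1, zhngguo=1, zhongguo=2}
    }
}
```

Spark itself would print Scala tuples such as `(hello,4)`, one per line and in no particular order, instead of a sorted map.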


Original article: https://www.cnblogs.com/xhzd/p/11595288.html