
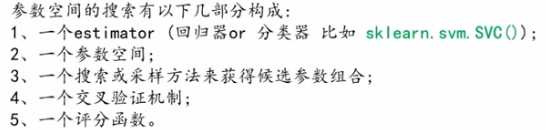

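A learner (estimator) model generally has two kinds of parameters: those that can be learned or estimated from the data, and those that cannot be estimated from the data and must be specified from experience. The latter are called hyperparameters.

Examples include C, kernel, and gamma in a support vector machine, and alpha in naive Bayes. When designing a learner, we search the hyperparameter space for the most suitable hyperparameters. The names and current values of an estimator's parameters can be obtained with: estimator.get_params()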
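As a minimal sketch of the get_params() call mentioned above (the choice of SVC and of C=10 is only an illustration, not something the original specifies), the returned dictionary can be inspected like this:

```python
from sklearn.svm import SVC

# Build an SVM classifier with two hyperparameters set explicitly.
estimator = SVC(C=10, kernel="rbf")

# get_params() returns a dict mapping parameter names to their current values.
params = estimator.get_params()
for name in ("C", "kernel", "gamma"):
    print(name, "=", params[name])
```

The keys of this dictionary are exactly the names that can be used in a parameter grid or a parameter distribution.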

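sklearn provides two general approaches to parameter optimization: grid search and random sampling.

The relevant tools live in sklearn.model_selection: GridSearchCV, RandomizedSearchCV, ParameterGrid, ParameterSampler, and fit_grid_point (the last one is only available in older scikit-learn releases).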

①GridSearchCV:

This class exhaustively evaluates candidate parameter combinations on a parameter grid. The grid is specified by the param_grid argument, for example:

param_grid = [
    {'C': [1, 10, 100, 1000], 'kernel': ['linear']},
    {'C': [1, 10, 100, 1000], 'gamma': [0.001, 0.0001], 'kernel': ['rbf']},
]

The parameters above specify two grids to search (each grid is a dictionary): the first contains 4 parameter combinations, and the second contains 4*2=8.
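The counts above can be checked with ParameterGrid, which enumerates the combinations of such a grid. This is a small sketch; the variable names n_linear and n_rbf are introduced here only for illustration.

```python
from sklearn.model_selection import ParameterGrid

param_grid = [
    {"C": [1, 10, 100, 1000], "kernel": ["linear"]},
    {"C": [1, 10, 100, 1000], "gamma": [0.001, 0.0001], "kernel": ["rbf"]},
]

# ParameterGrid expands every dictionary into its parameter combinations.
grid = ParameterGrid(param_grid)
n_linear = sum(1 for p in grid if p["kernel"] == "linear")
n_rbf = sum(1 for p in grid if p["kernel"] == "rbf")

print(n_linear, n_rbf, len(grid))  # 4 8 12
```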

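A GridSearchCV instance implements the usual estimator API: when it is fitted on a dataset, every possible parameter combination is evaluated, and once fitting finishes the estimator with the best parameter combination is selected.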

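from sklearn import svm, datasets
from sklearn.model_selection import GridSearchCV

iris = datasets.load_iris()

# 2 kernels x 3 values of C = 6 candidate combinations
parameters = {'kernel': ('rbf', 'linear'), 'C': [1, 5, 10]}
svr = svm.SVC()
clf = GridSearchCV(svr, parameters)
clf.fit(iris.data, iris.target)

# The estimator refitted with the best parameter combination
print(clf.best_estimator_)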

Result (the printed repr varies with the scikit-learn version):

SVC(C=1, cache_size=200, class_weight=None, coef0=0.0, decision_function_shape=None, degree=3, gamma='auto', kernel='linear', max_iter=-1, probability=False, random_state=None, shrinking=True, tol=0.001, verbose=False)
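Besides best_estimator_, a fitted search object exposes the winning parameters, their cross-validated score, and the full table of results. The sketch below reruns the same iris search; the explicit cv=5 and the variable names are assumptions made for this illustration.

```python
from sklearn import datasets, svm
from sklearn.model_selection import GridSearchCV

iris = datasets.load_iris()
parameters = {"kernel": ("rbf", "linear"), "C": [1, 5, 10]}

# cv=5 is spelled out here so the example does not depend on the default.
search = GridSearchCV(svm.SVC(), parameters, cv=5)
search.fit(iris.data, iris.target)

print(search.best_params_)            # best parameter combination found
print(round(search.best_score_, 3))   # its mean cross-validated score
n_candidates = len(search.cv_results_["params"])
print(n_candidates)                   # number of combinations evaluated

# The fitted search object can be used directly as a predictor.
predictions = search.predict(iris.data[:5])
```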

②RandomizedSearchCV

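The RandomizedSearchCV class implements a random search over the parameter space, where each parameter value is drawn from a probability distribution that describes how likely each possible value is. Compared with exhaustive grid search, random sampling has two main advantages: the computational budget can be chosen independently of the number of parameters and their possible values, and adding parameters that do not affect performance does not reduce efficiency.

The sampling ranges and distributions are specified with a dictionary, much like in grid search. The computational budget (how many parameter combinations to sample, i.e. how many iterations to run) is set with the n_iter parameter. For each parameter you can give either a probability distribution over its possible values or a discrete list of values (a list is sampled uniformly).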

{'C': scipy.stats.expon(scale=100), 'gamma': scipy.stats.expon(scale=.1), 'kernel': ['rbf'], 'class_weight': ['balanced', None]}

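In the example above, both C and gamma follow an exponential distribution. The example uses the scipy.stats module, which contains many useful distributions for sampling parameters, such as expon, gamma, uniform, and randint. In principle, any object can be passed in as long as it provides an rvs (random variate sample) method that returns a sampled value. Successive calls to rvs should produce independent, identically distributed samples.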
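To see what such a dictionary produces, the sketch below draws a few settings with ParameterSampler, the same sampling helper listed earlier. The PowerOfTen class and the tol entry are hypothetical additions for illustration only; they show a hand-written object whose rvs method accepts the random_state argument that scikit-learn passes when sampling.

```python
import scipy.stats
from sklearn.model_selection import ParameterSampler
from sklearn.utils import check_random_state


class PowerOfTen:
    """Hypothetical distribution: returns 10**k for an integer k in [low, high)."""

    def __init__(self, low, high):
        self.low, self.high = low, high

    def rvs(self, random_state=None):
        # Turn None, an int seed, or a RandomState into a RandomState.
        rng = check_random_state(random_state)
        return 10.0 ** rng.randint(self.low, self.high)


param_distributions = {
    "C": scipy.stats.expon(scale=100),      # continuous distribution
    "gamma": scipy.stats.expon(scale=0.1),  # continuous distribution
    "kernel": ["rbf"],                      # list: sampled uniformly
    "class_weight": ["balanced", None],     # list: sampled uniformly
    "tol": PowerOfTen(-5, -1),              # custom object with rvs()
}

# n_iter is the budget: the number of parameter settings to draw.
samples = list(ParameterSampler(param_distributions, n_iter=5, random_state=0))
for s in samples:
    print(s)
```

Each printed dictionary is one candidate setting of the kind RandomizedSearchCV would evaluate.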

import numpy as np
from time import time
from scipy.stats import randint as sp_randint
from sklearn.model_selection import RandomizedSearchCV
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier

def report(results, n_top=3):
    # Print the n_top best-ranked candidates from cv_results_
    for i in range(1, n_top + 1):
        candidates = np.flatnonzero(results['rank_test_score'] == i)
        for candidate in candidates:
            print("Model with rank:{0}".format(i))
            print("Mean validation score:{0:.3f}(std:{1:.3f})".format(
                results['mean_test_score'][candidate],
                results['std_test_score'][candidate]))
            print("Parameters:{0}".format(results['params'][candidate]))
            print("")

digits = load_digits()
X, y = digits.data, digits.target
clf = RandomForestClassifier(n_estimators=20)

# Hyperparameters to optimize and the distributions they are drawn from
param_dist = {"max_depth": [3, None],
              "max_features": sp_randint(1, 11),
              "min_samples_split": sp_randint(2, 11),
              "min_samples_leaf": sp_randint(1, 11),
              "bootstrap": [True, False],
              "criterion": ['gini', 'entropy']
              }

# Run the randomized search over the hyperparameter space
n_iter_search = 20
random_search = RandomizedSearchCV(clf, param_distributions=param_dist, n_iter=n_iter_search)
start = time()
random_search.fit(X, y)
print("RandomizedSearchCV took %.3f seconds for %d candidates"
      " parameter settings." % ((time() - start), n_iter_search))
report(random_search.cv_results_)

Result:

RandomizedSearchCV took 3.652 seconds for 20 candidates parameter settings.
Model with rank:1
Mean validation score:0.930(std:0.019)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'entropy', 'max_features': 8, 'bootstrap': False, 'min_samples_split': 10}

Model with rank:2
Mean validation score:0.928(std:0.009)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'gini', 'max_features': 4, 'bootstrap': False, 'min_samples_split': 10}

Model with rank:3
Mean validation score:0.924(std:0.009)
Parameters:{'max_depth': None, 'min_samples_leaf': 1, 'criterion': 'gini', 'max_features': 9, 'bootstrap': True, 'min_samples_split': 5}

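③ Comparing randomized search and grid search for hyperparameter optimization

This experiment uses a random forest classifier as the model to optimize. Every parameter that influences learning is searched, except the number of trees. Randomized search and grid search optimize the classifier over the same hyperparameter space. The resulting hyperparameter settings are quite similar, but randomized search takes markedly less time than grid search. The settings found by randomized search perform slightly worse, but this is largely due to noise. In practice, one would not search over this many parameters at once, and would instead pick only the few that matter most.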

import numpy as np
from time import time
from scipy.stats import randint as sp_randint
from sklearn.model_selection import RandomizedSearchCV
from sklearn.model_selection import GridSearchCV
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier

def report(results, n_top=3):
    # Print the n_top best-ranked candidates from cv_results_
    for i in range(1, n_top + 1):
        candidates = np.flatnonzero(results['rank_test_score'] == i)
        for candidate in candidates:
            print("Model with rank:{0}".format(i))
            print("Mean validation score:{0:.3f}(std:{1:.3f})".format(
                results['mean_test_score'][candidate],
                results['std_test_score'][candidate]))
            print("Parameters:{0}".format(results['params'][candidate]))
            print("")

digits = load_digits()
X, y = digits.data, digits.target
clf = RandomForestClassifier(n_estimators=20)

print("==========RandomizedSearchCV results===============")

# Hyperparameters to optimize and the distributions they are drawn from
param_dist = {"max_depth": [3, None],
              "max_features": sp_randint(1, 11),
              "min_samples_split": sp_randint(2, 11),
              "min_samples_leaf": sp_randint(1, 11),
              "bootstrap": [True, False],
              "criterion": ['gini', 'entropy']
              }

# Run the randomized search over the hyperparameter space
n_iter_search = 20
random_search = RandomizedSearchCV(clf, param_distributions=param_dist, n_iter=n_iter_search)
start = time()
random_search.fit(X, y)
print("RandomizedSearchCV took %.3f seconds for %d candidates"
      " parameter settings." % ((time() - start), n_iter_search))
report(random_search.cv_results_)

print("==========GridSearchCV results===============")

# Search over all parameters, visiting every node of the grid
param_grid = {"max_depth": [3, None],
              "max_features": [1, 3, 10],
              "min_samples_split": [2, 3, 10],
              "min_samples_leaf": [1, 3, 10],
              "bootstrap": [True, False],
              "criterion": ['gini', 'entropy']
              }

# Run the grid search over the hyperparameter space
grid_search = GridSearchCV(clf, param_grid=param_grid)
start = time()
grid_search.fit(X, y)
print("GridSearchCV took %.2f seconds for %d candidates parameter settings."
      % (time() - start, len(grid_search.cv_results_['params'])))
# Report the grid search results (not random_search.cv_results_ again)
report(grid_search.cv_results_)

Result:

==========RandomizedSearchCV results===============
RandomizedSearchCV took 3.874 seconds for 20 candidates parameter settings.
Model with rank:1
Mean validation score:0.928(std:0.010)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'entropy', 'max_features': 10, 'bootstrap': False, 'min_samples_split': 2}

Model with rank:2
Mean validation score:0.920(std:0.007)
Parameters:{'max_depth': None, 'min_samples_leaf': 4, 'criterion': 'gini', 'max_features': 6, 'bootstrap': False, 'min_samples_split': 2}

Model with rank:2
Mean validation score:0.920(std:0.009)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'entropy', 'max_features': 7, 'bootstrap': True, 'min_samples_split': 10}

==========GridSearchCV results===============
GridSearchCV took 37.64 seconds for 216 candidates parameter settings.
Model with rank:1
Mean validation score:0.928(std:0.010)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'entropy', 'max_features': 10, 'bootstrap': False, 'min_samples_split': 2}

Model with rank:2
Mean validation score:0.920(std:0.007)
Parameters:{'max_depth': None, 'min_samples_leaf': 4, 'criterion': 'gini', 'max_features': 6, 'bootstrap': False, 'min_samples_split': 2}

Model with rank:2
Mean validation score:0.920(std:0.009)
Parameters:{'max_depth': None, 'min_samples_leaf': 2, 'criterion': 'entropy', 'max_features': 7, 'bootstrap': True, 'min_samples_split': 10}

In this recorded run, the ranking printed under the GridSearchCV header repeats the randomized-search ranking (values such as max_features 6 and 7 or min_samples_leaf 4 are not in param_grid), because report was called on random_search.cv_results_ a second time. With report(grid_search.cv_results_), as in the code above, the grid-search ranking is printed instead. The timing lines are unaffected: 3.874 seconds for 20 candidates versus 37.64 seconds for 216.

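Tips for searching the hyperparameter space

By default, parameter search uses the estimator's score function to evaluate how the model performs under a given parameter setting:

For classifiers this is sklearn.metrics.accuracy_score

For regressors this is sklearn.metrics.r2_score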
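The two defaults listed above can be checked directly. In this sketch the choice of SVC and LinearRegression, and of predicting the last iris feature from the other three, are assumptions made only to have one classifier and one regressor at hand.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LinearRegression
from sklearn.metrics import accuracy_score, r2_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Classifier: score() is the accuracy of the predictions.
clf = SVC().fit(X, y)
acc_via_score = clf.score(X, y)
acc_via_metric = accuracy_score(y, clf.predict(X))

# Regressor: score() is the R^2 coefficient of determination.
# Here the last feature is predicted from the first three, for illustration.
X_reg, y_reg = X[:, :3], X[:, 3]
reg = LinearRegression().fit(X_reg, y_reg)
r2_via_score = reg.score(X_reg, y_reg)
r2_via_metric = r2_score(y_reg, reg.predict(X_reg))

print(acc_via_score == acc_via_metric)
print(abs(r2_via_score - r2_via_metric) < 1e-12)
```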

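In some applications, however, another scoring function may be more appropriate. For example, in imbalanced classification problems accuracy (accuracy_score) is often uninformative. In that case, the scoring parameter of GridSearchCV or RandomizedSearchCV can be used to make the search use a different, possibly custom, scoring function internally.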
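A minimal sketch of the scoring parameter follows, on a synthetic imbalanced dataset. The dataset, the grid over C, cv=3, and the F-beta scorer with beta=2 are all assumptions chosen for illustration; scoring accepts either a predefined scorer name such as "f1" or a callable built with make_scorer.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import fbeta_score, make_scorer
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic binary problem with roughly 90% / 10% class balance.
X, y = make_classification(n_samples=300, n_features=10,
                           weights=[0.9, 0.1], random_state=0)
param_grid = {"C": [0.1, 1, 10]}

# Option 1: a predefined scorer referenced by name.
f1_search = GridSearchCV(SVC(kernel="rbf"), param_grid, scoring="f1", cv=3)
f1_search.fit(X, y)

# Option 2: a custom scorer wrapped with make_scorer (F-beta with beta=2
# weights recall more heavily than precision).
f2_scorer = make_scorer(fbeta_score, beta=2)
f2_search = GridSearchCV(SVC(kernel="rbf"), param_grid, scoring=f2_scorer, cv=3)
f2_search.fit(X, y)

print(f1_search.best_params_, f1_search.best_score_)
print(f2_search.best_params_, f2_search.best_score_)
```

The same scoring argument works unchanged with RandomizedSearchCV.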


Original article: https://www.cnblogs.com/cmybky/p/11772664.html