
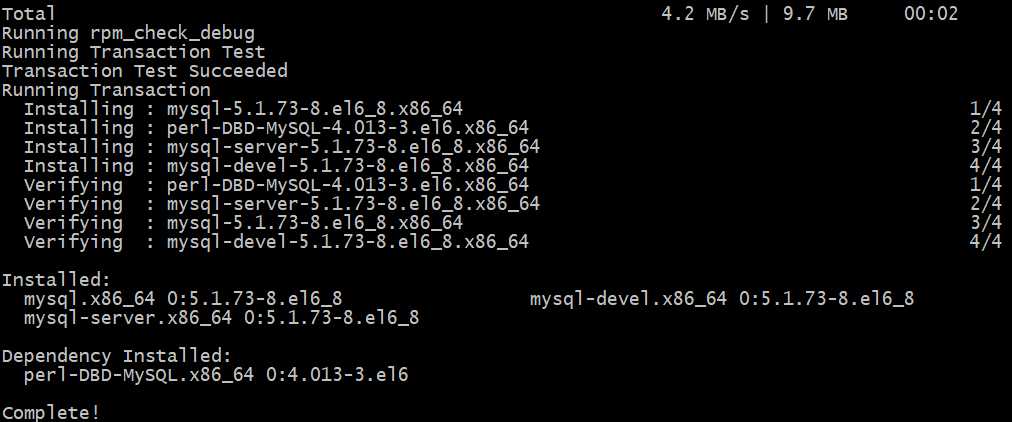

1. Install the MySQL service online

# Download and install MySQL

yum install mysql mysql-server mysql-devel

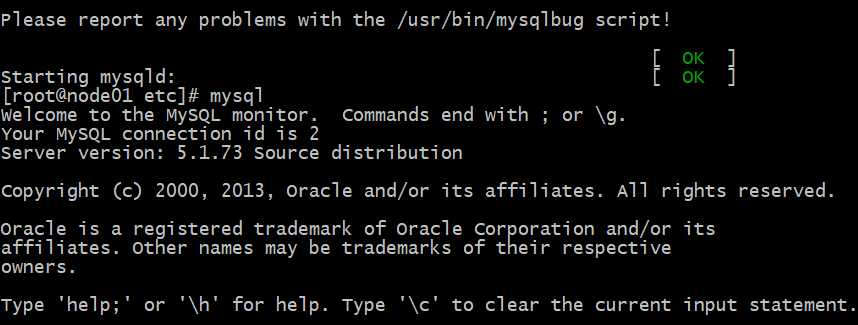

# Start the MySQL service

cd /etc/

init.d/mysqld start

# Connect to MySQL and log in

mysql

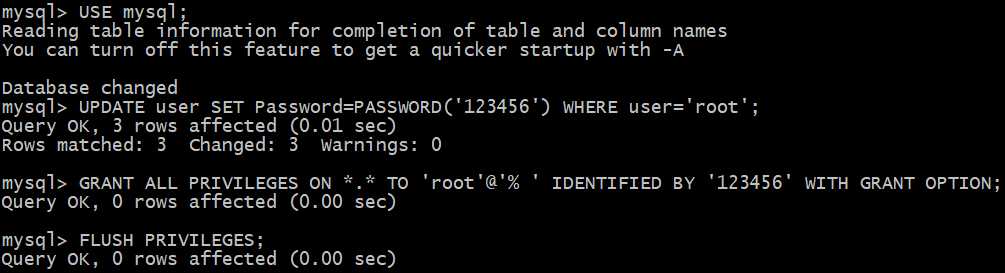

# Set the password for the MySQL root login

USE mysql;

UPDATE user SET Password=PASSWORD('password') WHERE user='root';

# Allow remote login

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'password' WITH GRANT OPTION;

# Reload the privilege tables so the changes take effect

FLUSH PRIVILEGES;
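
The SQL statements above can also be collected into one file and reviewed before they are fed to the mysql client. The following is a minimal sketch, not the author's method: the function name `write_mysql_init_sql` and the output path are hypothetical, and it assumes an older MySQL 5.x server where the `Password` column and the `PASSWORD()` function used in the article still exist. A password containing a single quote would break the generated SQL.

```shell
#!/bin/sh
# Hypothetical helper: write the SQL statements from the steps above to a file.
#   $1 = output file
#   $2 = new password for root (the article uses the placeholder "password")
write_mysql_init_sql() {
    out_file=$1
    root_password=$2
    # Unquoted heredoc delimiter: ${root_password} is expanded,
    # the single quotes and *.* are written literally.
    cat > "$out_file" <<EOF
USE mysql;
UPDATE user SET Password=PASSWORD('${root_password}') WHERE user='root';
GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY '${root_password}' WITH GRANT OPTION;
FLUSH PRIVILEGES;
EOF
}

# Usage (not run automatically):
#   write_mysql_init_sql /tmp/mysql_init.sql password
#   mysql < /tmp/mysql_init.sql
```

Generating the file with plain ASCII quotes avoids the typographic quote characters that copy-and-paste from web pages tends to introduce, which the mysql client rejects.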

2. Configure Hive

# 1. Upload the installation package and extract it

# Then: cd /export/servers/hive-1.2.1/conf

# 2. Edit hive-env.sh

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/export/servers/hadoop-2.6.0-cdh5.14.0

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/export/servers/hive-1.2.1/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/export/servers/hive-1.2.1/lib

# 3. Create the hive-site.xml file

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node01:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>node01</value>

</property>

</configuration>
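
If the same file has to be created on several machines, it can be generated from a few variables instead of being typed by hand. The sketch below is only an illustration: the function name `write_hive_site` and its argument order are hypothetical, and it assumes, as in the article, that the MySQL metastore and HiveServer2 run on the same host. Values containing XML special characters such as `&` or `<` would need escaping, which this sketch does not do.

```shell
#!/bin/sh
# Hypothetical helper: generate hive-site.xml with the six properties shown above.
#   $1 = Hive conf directory   $2 = host running MySQL and HiveServer2
#   $3 = metastore DB user     $4 = metastore DB password
write_hive_site() {
    conf_dir=$1
    hive_host=$2
    db_user=$3
    db_password=$4
    cat > "$conf_dir/hive-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://${hive_host}:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>${db_user}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${db_password}</value>
  </property>
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>${hive_host}</value>
  </property>
</configuration>
EOF
}

# Usage (not run automatically):
#   write_hive_site /export/servers/hive-1.2.1/conf node01 root password
```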

# 4. Upload the MySQL JDBC driver jar to the /export/servers/hive-1.2.1/lib directory

# 5. Configure the system environment variables for Hive

vim /etc/profile

export HIVE_HOME=/export/servers/hive-1.2.1

export PATH=$HIVE_HOME/bin:$PATH

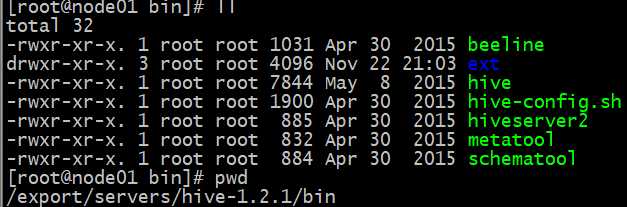
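
When /etc/profile is sourced more than once, a plain `export PATH=...` line adds the same directory again each time. One way to avoid that is sketched below; the helper name `path_prepend` is hypothetical and not part of Hive or the article.

```shell
#!/bin/sh
# Hypothetical helper: prepend a directory to PATH only if it is not already listed.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already present, leave PATH unchanged
        *) PATH="$1:$PATH" ;;     # otherwise put the directory first
    esac
}

HIVE_HOME=/export/servers/hive-1.2.1
export HIVE_HOME
path_prepend "$HIVE_HOME/bin"
export PATH
```

Wrapping PATH in colons before matching lets the same pattern catch the directory whether it is the first, last, or a middle entry.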

3. Hive remote service

# 1. Distribute the Hive installation on node01 to node02 and node03 with the following commands:

cd /export/servers

scp -r hive-1.2.1 node02:$PWD

scp -r hive-1.2.1 node03:$PWD
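
With more than a couple of nodes, the two scp commands above can be written as a loop. This is a minimal sketch under stated assumptions: the function name `distribute_hive` and the `DRY_RUN` variable are hypothetical, and passwordless SSH between the nodes is assumed. With `DRY_RUN=1` the commands are only printed, which makes it easy to check the target paths first.

```shell
#!/bin/sh
# Hypothetical helper: copy a directory to the same parent path on each node.
#   $1 = source directory, remaining arguments = target host names
# Set DRY_RUN=1 to print the scp commands instead of running them.
distribute_hive() {
    src_dir=$1
    shift
    target_parent=$(dirname "$src_dir")
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r $src_dir $node:$target_parent"
        else
            scp -r "$src_dir" "$node:$target_parent" || return 1
        fi
    done
}

# Usage (not run automatically):
#   DRY_RUN=1 distribute_hive /export/servers/hive-1.2.1 node02 node03
```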

# 2. Start the Hadoop cluster on node01

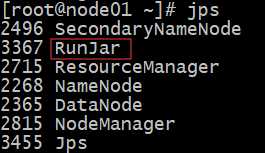

# 3. Start the hiveserver2 service on node01, then clone the current session and run jps to check the running processes

cd /export/servers/hive-1.2.1/bin

hiveserver2

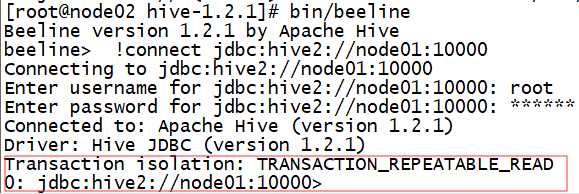
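
The jps check can also be scripted. A process started through the Hive launcher scripts usually appears in jps output under the generic name RunJar rather than HiveServer2, so the sketch below only tests for a RunJar entry; that is an assumption about this setup, and other Hadoop jobs started with `hadoop jar` carry the same name. The helper name `has_runjar` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads jps output on stdin and succeeds
# if a line of the form "<pid> RunJar" is present.
has_runjar() {
    grep -q '^[0-9][0-9]* RunJar$'
}

# Usage (not run automatically):
#   jps | has_runjar && echo "a RunJar process (possibly HiveServer2) is running"
```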

# 4. On node02, use beeline to connect remotely to the Hive server

cd /export/servers/hive-1.2.1

bin/beeline

# 5. Enter the remote connection URL with the host name and port of the target Hive service (default 10000)

!connect jdbc:hive2://node01:10000

# 6. Enter the username and password for connecting to the Hive server
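
The interactive steps 4 to 6 can also be done in one command, since beeline accepts the JDBC URL with `-u`, the user name with `-n`, and a statement with `-e`. The sketch below only builds the URL; the function name `hive_jdbc_url` is hypothetical, and the user name in the usage line is a placeholder because the article does not say which account is used.

```shell
#!/bin/sh
# Hypothetical helper: build the HiveServer2 JDBC URL.
#   $1 = host name, $2 = port (defaults to 10000, as in hive-site.xml above)
hive_jdbc_url() {
    host=$1
    port=${2:-10000}
    echo "jdbc:hive2://${host}:${port}"
}

# Usage (not run automatically; <user> is a placeholder):
#   cd /export/servers/hive-1.2.1
#   bin/beeline -u "$(hive_jdbc_url node01)" -n <user> -e 'show databases;'
```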


Original source: https://www.cnblogs.com/aurora1123/p/11927009.html