
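Summary: The main purpose of a pooling layer is to reduce dimensionality. It slides a window over the input and replaces each covered region with a single statistic, such as its maximum or its average.

Average pooling: tf.nn.avg_pool(input, ksize, strides, padding)

Max pooling: tf.nn.max_pool(input, ksize, strides, padding)

input: usually the feature map output by a convolutional layer, shape=[batch, height, width, channels]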

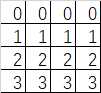

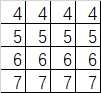

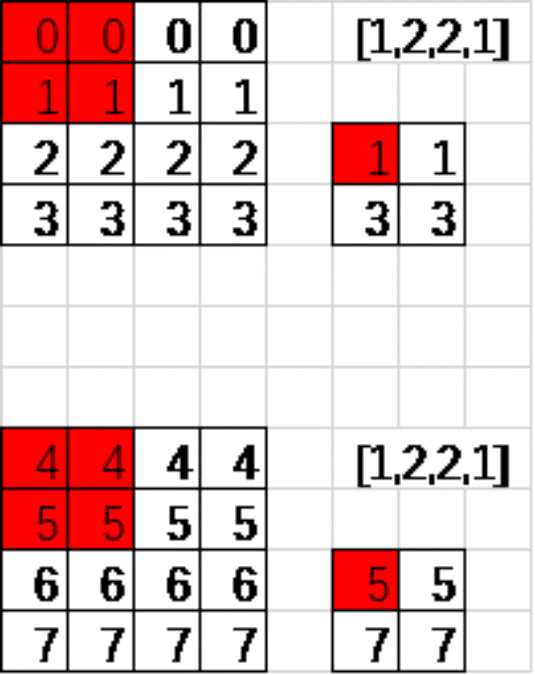

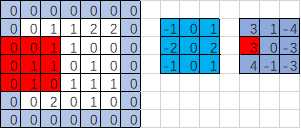

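Assume this matrix is the feature map output by a convolutional layer (2 output channels). Its shape=[1,4,4,2].

ksize: size of the pooling window, given per dimension as [batch, height, width, channels], e.g. [1,2,2,1]

strides: step of the window along each dimension, given as [batch, stride, stride, channel], e.g. [1,2,2,1]

padding: "VALID" adds no padding; "SAME" pads the borders so that the window covers every input position. Convolution pads with zeros; in pooling, the padded cells do not contribute to the max or the mean.

Returns: a tensor with shape=[batch, height, width, channels]

The figure above uses max pooling: the largest number inside each red box is kept.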
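To make the windowing concrete, here is a minimal sketch of max pooling in plain Python, without TensorFlow. The helper name `max_pool_valid` and the nested-list layout `[height][width][channels]` are assumptions made for illustration. It handles a single image and only the no-padding ("VALID") case.

```python
def max_pool_valid(fmap, k, s):
    """Max-pool a [height][width][channels] nested list with a k x k window,
    stride s, and no padding (the 'VALID' case)."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    out_h = (h - k) // s + 1  # number of window positions along the height
    out_w = (w - k) // s + 1  # number of window positions along the width
    return [
        [
            [
                # largest value of channel ch inside the window at (i, j)
                max(fmap[i * s + di][j * s + dj][ch]
                    for di in range(k) for dj in range(k))
                for ch in range(c)
            ]
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Same values as the 4x4, 2-channel feature map used in this article:
# row r holds [r, r + 4] at every column.
feature_map = [[[float(r), float(r + 4)] for _ in range(4)] for r in range(4)]

pooled = max_pool_valid(feature_map, k=2, s=2)
print(pooled)  # [[[1.0, 5.0], [1.0, 5.0]], [[3.0, 7.0], [3.0, 7.0]]]
```

Each channel is pooled independently, which is why the channel count of the output equals that of the input.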

```python
import tensorflow as tf  # TensorFlow 1.x API (uses tf.Session)

feature_map = tf.constant([
    [[0.0, 4.0], [0.0, 4.0], [0.0, 4.0], [0.0, 4.0]],
    [[1.0, 5.0], [1.0, 5.0], [1.0, 5.0], [1.0, 5.0]],
    [[2.0, 6.0], [2.0, 6.0], [2.0, 6.0], [2.0, 6.0]],
    [[3.0, 7.0], [3.0, 7.0], [3.0, 7.0], [3.0, 7.0]],
])
feature_map = tf.reshape(feature_map, [1, 4, 4, 2])  # two-channel feature map input

# Define the pooling layers
pooling = tf.nn.max_pool(feature_map, [1, 2, 2, 1], [1, 2, 2, 1], padding='VALID')   # 2x2 window, stride 2 in height and width, no padding
pooling1 = tf.nn.max_pool(feature_map, [1, 2, 2, 1], [1, 1, 1, 1], padding='VALID')  # 2x2 window, stride 1 in height and width, no padding
pooling2 = tf.nn.avg_pool(feature_map, [1, 4, 4, 1], [1, 1, 1, 1], padding='SAME')   # 4x4 window, stride 1 in height and width, padded
pooling3 = tf.nn.avg_pool(feature_map, [1, 4, 4, 1], [1, 4, 4, 1], padding='SAME')   # 4x4 window, stride 4 in height and width, padded

# Transpose and reshape (explained in detail in another post)
tran_reshape = tf.reshape(tf.transpose(feature_map), [-1, 16])
pooling4 = tf.reduce_mean(tran_reshape, 1)  # mean of each row, i.e. of each channel

with tf.Session() as sess:
    print('featuremap:\n', sess.run(feature_map))
    print('*' * 30)
    print('pooling:\n', sess.run(pooling))
    print('*' * 30)
    print('pooling1:\n', sess.run(pooling1))
    print('*' * 30)
    print('pooling2:\n', sess.run(pooling2))
    print('*' * 30)
    print('pooling3:\n', sess.run(pooling3))
    print('*' * 30)
    print('pooling4:\n', sess.run(pooling4))

'''
Output:
featuremap:
 [[[[ 0.  4.]
   [ 0.  4.]
   [ 0.  4.]
   [ 0.  4.]]

  [[ 1.  5.]
   [ 1.  5.]
   [ 1.  5.]
   [ 1.  5.]]

  [[ 2.  6.]
   [ 2.  6.]
   [ 2.  6.]
   [ 2.  6.]]

  [[ 3.  7.]
   [ 3.  7.]
   [ 3.  7.]
   [ 3.  7.]]]]
******************************
pooling:
 [[[[ 1.  5.]
   [ 1.  5.]]

  [[ 3.  7.]
   [ 3.  7.]]]]
******************************
pooling1:
 [[[[ 1.  5.]
   [ 1.  5.]
   [ 1.  5.]]

  [[ 2.  6.]
   [ 2.  6.]
   [ 2.  6.]]

  [[ 3.  7.]
   [ 3.  7.]
   [ 3.  7.]]]]
******************************
pooling2:
 [[[[ 1.   5. ]
   [ 1.   5. ]
   [ 1.   5. ]
   [ 1.   5. ]]

  [[ 1.5  5.5]
   [ 1.5  5.5]
   [ 1.5  5.5]
   [ 1.5  5.5]]

  [[ 2.   6. ]
   [ 2.   6. ]
   [ 2.   6. ]
   [ 2.   6. ]]

  [[ 2.5  6.5]
   [ 2.5  6.5]
   [ 2.5  6.5]
   [ 2.5  6.5]]]]
******************************
pooling3:
 [[[[ 1.5  5.5]]]]
******************************
pooling4:
 [ 1.5  5.5]
'''
```
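In the listing above, pooling3 and pooling4 print the same numbers. A 4x4 average window over a 4x4 map needs no padding, so it reduces to the mean of all 16 positions of each channel, which is also what the transpose, reshape and reduce_mean route computes. Below is a dependency-free sketch of that per-channel mean; plain Python lists stand in for tensors, and the variable names are assumptions for illustration.

```python
# Row r of the 4x4 feature map holds [r, r + 4] at every column (2 channels).
feature_map = [[[float(r), float(r + 4)] for _ in range(4)] for r in range(4)]

num_channels = 2
channel_means = []
for ch in range(num_channels):
    # Collect the 16 spatial values of this channel, then average them.
    values = [pixel[ch] for row in feature_map for pixel in row]
    channel_means.append(sum(values) / len(values))

print(channel_means)  # [1.5, 5.5]
```

This pattern is commonly called global average pooling: one number per channel, regardless of the spatial size.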

Now let's explain what the code does:

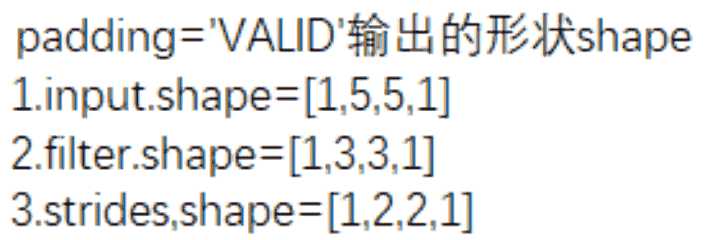

Padding rules

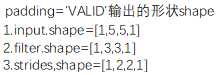

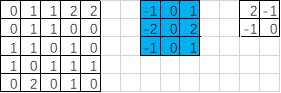

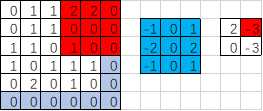

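Output width (padding='VALID'): output_width = (in_width - filter_width + 1) / strides_width = (5 - 3 + 1) / 2 = 1.5 [rounded up to 2]

Output height (padding='VALID'): output_height = (in_height - filter_height + 1) / strides_height = (5 - 3 + 1) / 2 = 1.5 [rounded up to 2]

Output shape: [1,2,2,1]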
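The VALID formula is easy to check by hand, but a tiny helper makes the rounding step explicit. This is a sketch in plain Python; the function name `valid_output_size` is hypothetical and not part of TensorFlow.

```python
import math


def valid_output_size(in_size, filter_size, stride):
    """Output length along one dimension when padding='VALID':
    ceil((in_size - filter_size + 1) / stride)."""
    return math.ceil((in_size - filter_size + 1) / stride)


# 5x5 input, 3x3 filter, stride 2 (the convolution example in this article)
print(valid_output_size(5, 3, 2))  # 2

# 4x4 feature map, 2x2 pooling window, stride 2 (the max-pooling example)
print(valid_output_size(4, 2, 2))  # 2
```

The same function applies to height and width separately, so a non-square input or window just means calling it twice.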

```python
import tensorflow as tf  # TensorFlow 1.x API (uses tf.Session)

image = [0, 1.0, 1, 2, 2,
         0, 1, 1, 0, 0,
         1, 1, 0, 1, 0,
         1, 0, 1, 1, 1,
         0, 2, 0, 1, 0]
input = tf.Variable(tf.constant(image, shape=[1, 5, 5, 1]))  # 1-channel input
fil1 = [-1.0, 0, 1,
        -2, 0, 2,
        -1, 0, 1]
filter = tf.Variable(tf.constant(fil1, shape=[3, 3, 1, 1]))  # one kernel gives one output feature map
op = tf.nn.conv2d(input, filter, strides=[1, 2, 2, 1], padding='VALID')  # stride 2; VALID adds no zero padding

init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    # print('input:\n', sess.run(input))
    # print('filter:\n', sess.run(filter))
    print('op:\n', sess.run(op))

# Output
'''
[[[[ 2.]
   [-1.]]

  [[-1.]
   [ 0.]]]]
'''
```

What happens with strides=[1,3,3,1]?

Output width: output_width = (in_width - filter_width + 1) / strides_width = (5 - 3 + 1) / 3 = 1

Output height: output_height = (in_height - filter_height + 1) / strides_height = (5 - 3 + 1) / 3 = 1

The output shape is [1,1,1,1], so the result contains a single value.

```python
import tensorflow as tf  # TensorFlow 1.x API (uses tf.Session)

image = [0, 1.0, 1, 2, 2,
         0, 1, 1, 0, 0,
         1, 1, 0, 1, 0,
         1, 0, 1, 1, 1,
         0, 2, 0, 1, 0]
input = tf.Variable(tf.constant(image, shape=[1, 5, 5, 1]))  # 1-channel input
fil1 = [-1.0, 0, 1,
        -2, 0, 2,
        -1, 0, 1]
filter = tf.Variable(tf.constant(fil1, shape=[3, 3, 1, 1]))  # one kernel gives one output feature map
op = tf.nn.conv2d(input, filter, strides=[1, 3, 3, 1], padding='VALID')  # stride 3; VALID adds no zero padding

init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    # print('input:\n', sess.run(input))
    # print('filter:\n', sess.run(filter))
    print('op:\n', sess.run(op))

# Output
'''
op:
 [[[[ 2.]]]]
'''
```

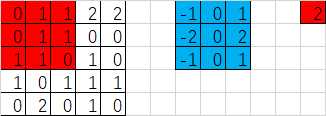

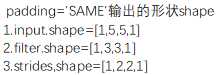

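With padding='SAME', the output width and height are computed as follows (using the animated GIF as an example):

Output width: output_width = in_width / strides_width = 5 / 2 = 2.5 [rounded up to 3]

Output height: output_height = in_height / strides_height = 5 / 2 = 2.5 [rounded up to 3]

So the output shape is [1,3,3,1].

How many zeros are padded, then? [First determine the output shape, then compute the amount of padding.]

pad_width = max((out_width - 1) * strides_width + filter_width - in_width, 0) = max((3 - 1) * 2 + 3 - 5, 0) = 2

pad_height = max((out_height - 1) * strides_height + filter_height - in_height, 0) = max((3 - 1) * 2 + 3 - 5, 0) = 2

pad_top = pad_height / 2 = 1

pad_bottom = pad_height - pad_top = 1

pad_left = pad_width / 2 = 1

pad_right = pad_width - pad_left = 1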
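The SAME rule above translates directly into a few lines of Python. The sketch below is only a transcription of those formulas for one dimension; the name `same_padding` is hypothetical, and "before" and "after" stand for top and bottom (or left and right).

```python
import math


def same_padding(in_size, filter_size, stride):
    """Return (out_size, pad_before, pad_after) along one dimension
    for padding='SAME', following the formulas in the text."""
    out_size = math.ceil(in_size / stride)
    pad_total = max((out_size - 1) * stride + filter_size - in_size, 0)
    pad_before = pad_total // 2          # rounded down: top / left
    pad_after = pad_total - pad_before   # the remainder goes to bottom / right
    return out_size, pad_before, pad_after


# 5x5 input, 3x3 filter, stride 2
print(same_padding(5, 3, 2))  # (3, 1, 1)
```

When the total padding is odd, the extra row or column ends up on the bottom or right side, because the top or left share is rounded down.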

```python
import tensorflow as tf  # TensorFlow 1.x API (uses tf.Session)

image = [0, 1.0, 1, 2, 2,
         0, 1, 1, 0, 0,
         1, 1, 0, 1, 0,
         1, 0, 1, 1, 1,
         0, 2, 0, 1, 0]
input = tf.Variable(tf.constant(image, shape=[1, 5, 5, 1]))  # 1-channel input
fil1 = [-1.0, 0, 1,
        -2, 0, 2,
        -1, 0, 1]
filter = tf.Variable(tf.constant(fil1, shape=[3, 3, 1, 1]))  # one kernel gives one output feature map
op = tf.nn.conv2d(input, filter, strides=[1, 2, 2, 1], padding='SAME')  # stride 2; SAME pads with zeros

init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    # print('input:\n', sess.run(input))
    # print('filter:\n', sess.run(filter))
    print('op:\n', sess.run(op))

# Output
'''
op:
 [[[[ 3.]
   [ 1.]
   [-4.]]

  [[ 3.]
   [ 0.]
   [-3.]]

  [[ 4.]
   [-1.]
   [-3.]]]]
'''
```

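What if the stride is 3? How does the zero-padding rule work out then?

Output width: output_width = in_width / strides_width = 5 / 3 ≈ 1.67 [rounded up to 2]

Output height: output_height = in_height / strides_height = 5 / 3 ≈ 1.67 [rounded up to 2]

So the output shape is [1,2,2,1].

Again, determine the output shape first, then compute the amount of padding:

pad_width = max((out_width - 1) * strides_width + filter_width - in_width, 0) = max((2 - 1) * 3 + 3 - 5, 0) = 1

pad_height = max((out_height - 1) * strides_height + filter_height - in_height, 0) = max((2 - 1) * 3 + 3 - 5, 0) = 1

pad_top = pad_height / 2 = 0 [rounded down]

pad_bottom = pad_height - pad_top = 1

pad_left = pad_width / 2 = 0 [rounded down]

pad_right = pad_width - pad_left = 1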

```python
import tensorflow as tf  # TensorFlow 1.x API (uses tf.Session)

image = [0, 1.0, 1, 2, 2,
         0, 1, 1, 0, 0,
         1, 1, 0, 1, 0,
         1, 0, 1, 1, 1,
         0, 2, 0, 1, 0]
input = tf.Variable(tf.constant(image, shape=[1, 5, 5, 1]))  # 1-channel input
fil1 = [-1.0, 0, 1,
        -2, 0, 2,
        -1, 0, 1]
filter = tf.Variable(tf.constant(fil1, shape=[3, 3, 1, 1]))  # one kernel gives one output feature map
op = tf.nn.conv2d(input, filter, strides=[1, 3, 3, 1], padding='SAME')  # stride 3; SAME pads with zeros

init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    # print('input:\n', sess.run(input))
    # print('filter:\n', sess.run(filter))
    print('op:\n', sess.run(op))

# Output
'''
op:
 [[[[ 2.]
   [-3.]]

  [[ 0.]
   [-3.]]]]
'''
```
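To see that the padding arithmetic really accounts for these numbers, the convolution can be redone by hand. The following is a rough single-channel sketch in plain Python, not TensorFlow's implementation; `conv2d_same` is a hypothetical helper that pads with zeros according to the SAME rule and then slides the kernel without flipping it.

```python
import math


def conv2d_same(image, kernel, stride):
    """Single-channel 2-D convolution (no kernel flip) with SAME zero padding."""
    in_h, in_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = math.ceil(in_h / stride), math.ceil(in_w / stride)
    pad_h = max((out_h - 1) * stride + k_h - in_h, 0)
    pad_w = max((out_w - 1) * stride + k_w - in_w, 0)
    top, left = pad_h // 2, pad_w // 2  # any odd remainder goes bottom / right

    # Copy the image into a zero-filled canvas at offset (top, left).
    padded = [[0.0] * (in_w + pad_w) for _ in range(in_h + pad_h)]
    for r in range(in_h):
        for c in range(in_w):
            padded[r + top][c + left] = image[r][c]

    # Slide the kernel over the padded canvas.
    return [
        [
            sum(padded[i * stride + di][j * stride + dj] * kernel[di][dj]
                for di in range(k_h) for dj in range(k_w))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [[0.0, 1.0, 1.0, 2.0, 2.0],
         [0.0, 1.0, 1.0, 0.0, 0.0],
         [1.0, 1.0, 0.0, 1.0, 0.0],
         [1.0, 0.0, 1.0, 1.0, 1.0],
         [0.0, 2.0, 0.0, 1.0, 0.0]]
kernel = [[-1.0, 0.0, 1.0],
          [-2.0, 0.0, 2.0],
          [-1.0, 0.0, 1.0]]

print(conv2d_same(image, kernel, stride=3))  # [[2.0, -3.0], [0.0, -3.0]]
print(conv2d_same(image, kernel, stride=2))
```

With stride 3 only one row and one column of zeros are added, at the bottom and on the right, which matches pad_top = pad_left = 0 in the calculation above.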

The padding rules shown here are borrowed from convolution. Pooling layers use the same rules for the output shape and for where the padding goes.
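One detail is worth checking against the pooling2 output earlier in this article: its first row averages to 1, which is the mean of input rows 0 to 2, so the padded cells are evidently left out of the average rather than counted as zeros. Here is a minimal single-channel sketch under that reading; `avg_pool_same` is a hypothetical helper, not TensorFlow code.

```python
import math


def avg_pool_same(fmap, k, s):
    """Average-pool a [height][width] nested list with a k x k window and
    stride s, SAME padding; cells that fall in the padding are ignored."""
    h, w = len(fmap), len(fmap[0])
    out_h, out_w = math.ceil(h / s), math.ceil(w / s)
    top = max((out_h - 1) * s + k - h, 0) // 2
    left = max((out_w - 1) * s + k - w, 0) // 2

    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Window origin in input coordinates; negative means padding.
            r0, c0 = i * s - top, j * s - left
            cells = [fmap[r][c]
                     for r in range(max(r0, 0), min(r0 + k, h))
                     for c in range(max(c0, 0), min(c0 + k, w))]
            row.append(sum(cells) / len(cells))
        result.append(row)
    return result


# Channel 0 of the article's feature map: row r holds the value r.
channel0 = [[float(r)] * 4 for r in range(4)]

for row in avg_pool_same(channel0, k=4, s=1):
    print(row)
# [1.0, 1.0, 1.0, 1.0]
# [1.5, 1.5, 1.5, 1.5]
# [2.0, 2.0, 2.0, 2.0]
# [2.5, 2.5, 2.5, 2.5]
```

These values line up with the first channel of pooling2, and `avg_pool_same(channel0, k=4, s=4)` gives the single value 1.5 seen in pooling3.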

CNN pooling layers: tf.nn.max_pool | tf.nn.avg_pool | tf.reduce_mean


Original article: https://www.cnblogs.com/liuhuacai/p/12003697.html