import wordcloud

import jieba

from imageio import imread

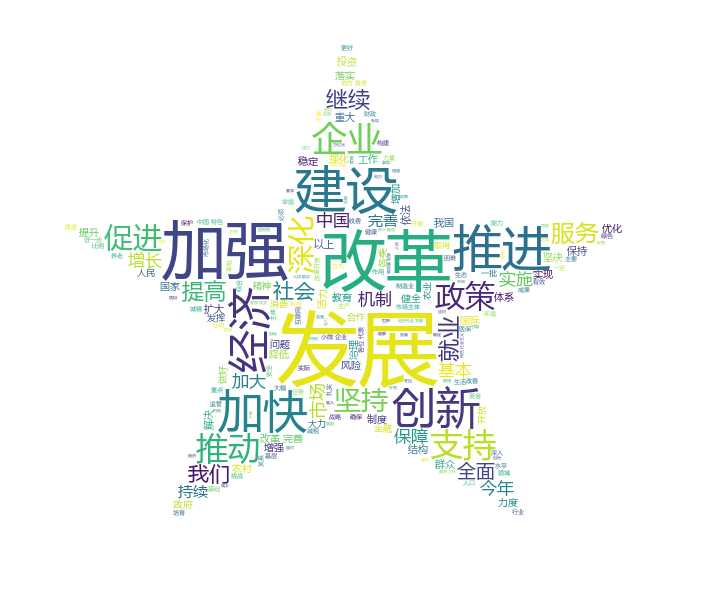

mask = imread("五角星.png")

f = open("2019政府工作报告.txt","r",encoding="utf-8")

t = f.read()

f.close()

ls = jieba.lcut(t)

txt = " ".join(ls)

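# Build the word cloud: Microsoft YaHei font, width 1000, height 700, white background.
# Note: when a mask is passed, wordcloud takes the canvas size from the mask image and ignores width and height.
w = wordcloud.WordCloud(font_path="msyh.ttc", width=1000, height=700, background_color="white", mask=mask)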

w.generate(txt)

w.to_file("grwordcloud.png")
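`jieba.lcut` returns every token, punctuation and single-character particles included, and the script joins all of them into `txt`. A common refinement, which is an assumption here and not something the original script does, is to drop tokens shorter than two characters before joining. `join_tokens` is a hypothetical helper, and the token list is hand-written so the sketch runs without jieba installed:

```python
def join_tokens(tokens, min_len=2):
    """Join tokens with spaces, skipping whitespace and tokens shorter than min_len."""
    return " ".join(t for t in tokens if len(t.strip()) >= min_len)

# Hand-written tokens standing in for the output of jieba.lcut(t).
tokens = ["经济", "的", "发展", ",", "和", "改革", "\n"]
print(join_tokens(tokens))
```

In the script this would replace the plain join: `txt = join_tokens(jieba.lcut(t))`.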

import wordcloud

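def getText():
    """Read Hamlet.txt, convert it to lowercase, and replace special characters with spaces."""
    with open("Hamlet.txt", "r", encoding="UTF-8") as f:
        txt = f.read()
    txt = txt.lower()
    # The straight apostrophe is included so that contractions split into the
    # fragments ("ll", "s", "d", "t") listed in the exclude set.
    for ch in '!"#$%&\'()*+,-./:;<=>?@[\\]^_‘’{|}~':
        txt = txt.replace(ch, " ")
    return txt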
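The replace loop in `getText` scans the whole text once per punctuation character. The same cleanup can be done in one pass with a translation table. This is a minimal sketch, not the author's method: `clean_text` is a hypothetical helper name, and `string.punctuation` is swapped in for the hand-written character list, so the exact set of replaced characters differs slightly.

```python
import string

def clean_text(raw):
    """Lowercase raw and replace ASCII punctuation and curly quotes with spaces."""
    # Assumption: string.punctuation plus curly quotes stands in for the
    # hand-written character list used in getText().
    chars = string.punctuation + "‘’“”"
    table = str.maketrans(chars, " " * len(chars))
    return raw.lower().translate(table)

print(clean_text("He'll go, won't he?").split())
```

Because apostrophes become spaces, contractions split into short fragments such as `ll` and `t`, which is why fragments like these appear in the exclude set.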

# Common words to exclude (adjust as needed)

excludes = {
    "ll", "him", "your", "but", "the", "we", "o", "by", "do", "are",
    "and", "no", "s", "d", "this", "my", "or", "if", "our", "shall",
    "from", "come", "thou", "let", "there", "t", "how", "now", "thy", "may",
    "most", "more", "to", "he", "a", "of", "was", "it", "you", "she",
    "in", "that", "said", "her", "i", "his", "they", "had", "as", "for",
    "with", "so", "not", "then", "when", "on", "at", "all", "will", "be",
    "have", "is", "into", "out", "came", "me", "went", "what", "like", "know",
    "would", "them", "well",
}

hamTxet = getText()

words = hamTxet.split()

counts = {}

clouds = []

# Count the words, skipping the excluded ones. counts.get(word, 0) returns the
# current count if word is already in counts, and 0 if it is not.
for word in words:
    if word in excludes:
        continue
    counts[word] = counts.get(word, 0) + 1

# Convert the dictionary to a list of (word, count) pairs
items = list(counts.items())
# Sort in descending order by the second element of each pair (the count), not by the word itself
items.sort(key=lambda x: x[1], reverse=True)

# Print the 15 most frequent words and collect them in clouds
for i in range(min(15, len(items))):
    word, count = items[i]
    clouds.append(word)
    print("{0:<10}{1:>10}".format(word, count))
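The count, sort, and print steps can also be expressed with `collections.Counter` from the standard library. The following is a sketch of that alternative, not the code the post uses; `top_words` is a hypothetical helper name and the sample sentence is made up for illustration.

```python
from collections import Counter

def top_words(words, excludes, n=15):
    """Return the n most frequent words not in excludes, as (word, count) pairs."""
    counts = Counter(w for w in words if w not in excludes)
    # most_common returns fewer than n pairs when there are fewer distinct words.
    return counts.most_common(n)

sample = "to be or not to be that is the question".split()
for word, count in top_words(sample, {"to", "or", "the"}, n=3):
    print("{0:<10}{1:>10}".format(word, count))
```

`most_common` simply returns a shorter list when the text has fewer than `n` distinct words, so no index check is needed.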

# Build the word cloud: 1000 pixels wide, 700 pixels high, Microsoft YaHei font, white background, at most 15 words
w = wordcloud.WordCloud(width=1000, font_path="msyh.ttc", height=700, background_color="white", max_words=15)

w.generate(hamTxet)

w.to_file("hamwcloud.png")
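`w.generate(hamTxet)` hands the whole text back to wordcloud, which tokenizes it again and applies its own default stopword list, so the words in the image need not match the 15 words printed by the loop. If the goal is to draw exactly the counted words, one option is `WordCloud.generate_from_frequencies`, which takes a word-to-count mapping. A sketch under these assumptions: `top_frequencies` and `render_cloud` are hypothetical helper names, and the demo counts are made up for illustration.

```python
def top_frequencies(items, n=15):
    """Turn a sorted list of (word, count) pairs into a dict of the top n."""
    return dict(items[:n])

def render_cloud(freqs, path, font_path="msyh.ttc"):
    """Draw a cloud whose word sizes come directly from freqs."""
    import wordcloud  # imported lazily so top_frequencies works without it
    w = wordcloud.WordCloud(width=1000, height=700, font_path=font_path,
                            background_color="white")
    w.generate_from_frequencies(freqs)
    w.to_file(path)

# Made-up counts for illustration; in the script, pass the sorted items list.
demo_items = [("alpha", 3), ("beta", 2), ("gamma", 1)]
print(top_frequencies(demo_items, n=2))
```

In the script, `render_cloud(top_frequencies(items), "hamwcloud.png")` would then size each word by the counts computed with the exclude set.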

Original article: https://www.cnblogs.com/newbase/p/12077589.html