Tags: apr utils cti ado encoding buffered path tput run

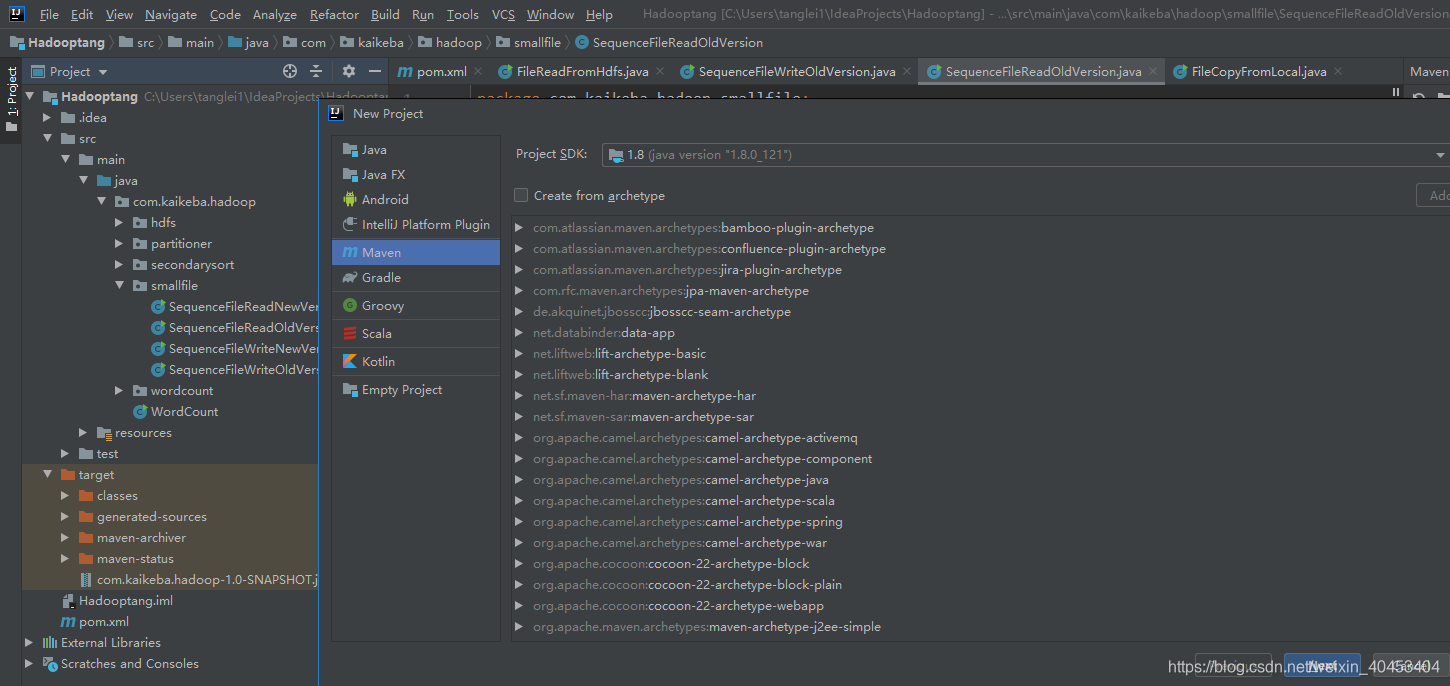

1. Create the project: click Project, select Maven, then click Next.

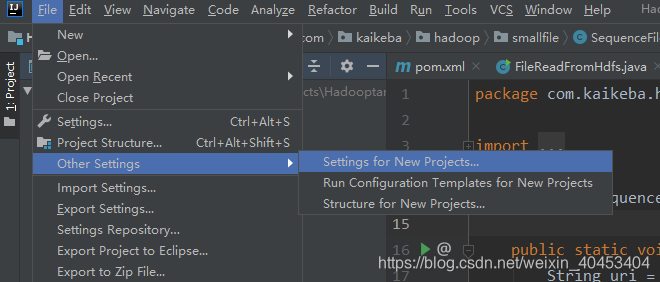

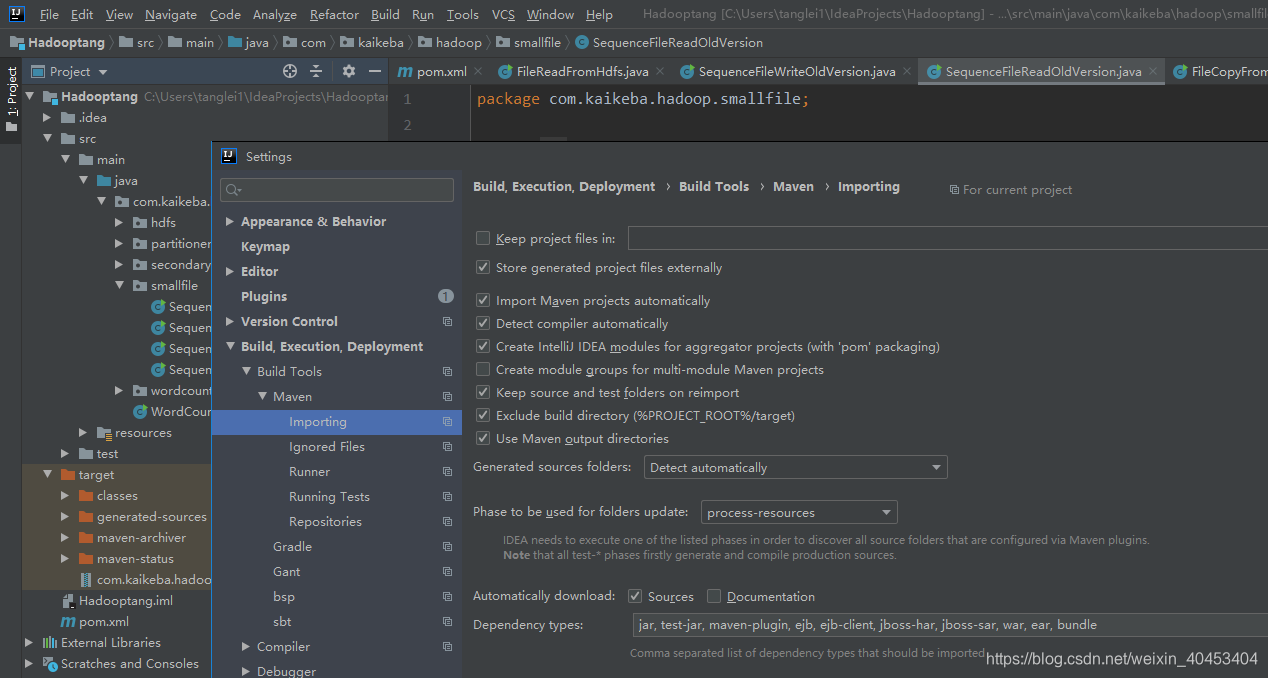

(1) Let Maven import dependency JARs automatically

Check Import Maven projects automatically, then click Apply.

(2) Configure pom.xml

The pom.xml file looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.kaikeba.hadoop</groupId>
    <artifactId>com.kaikeba.hadoop</artifactId>
    <version>1.0-SNAPSHOT</version>
    <packaging>jar</packaging>

    <properties>
        <hadoop.version>2.7.3</hadoop.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>commons-cli</groupId>
            <artifactId>commons-cli</artifactId>
            <version>1.2</version>
        </dependency>
        <dependency>
            <groupId>commons-logging</groupId>
            <artifactId>commons-logging</artifactId>
            <version>1.1.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <!-- 3.1.2 -->
        <!-- <dependency>-->
        <!--     <groupId>org.apache.hadoop</groupId>-->
        <!--     <artifactId>hadoop-hdfs-client</artifactId>-->
        <!--     <version>2.8.0</version>-->
        <!-- </dependency>-->
        <!-- hadoop-hdfs is declared only once; Maven rejects duplicate dependency declarations -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-app</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-hs</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <!-- <dependency>-->
        <!--     <groupId>org.slf4j</groupId>-->
        <!--     <artifactId>slf4j-api</artifactId>-->
        <!--     <version>1.7.25</version>-->
        <!-- </dependency>-->
        <!-- <dependency>-->
        <!--     <groupId>log4j</groupId>-->
        <!--     <artifactId>log4j</artifactId>-->
        <!--     <version>1.2.17</version>-->
        <!-- </dependency>-->
    </dependencies>
</project>
```

**Copy a local file to HDFS**
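Both programs in this article hand the actual transfer to Hadoop's IOUtils.copyBytes(in, out, 4096, true): read up to 4096 bytes at a time, write them to the output, and, because the last argument is true, close both streams at the end. The following is a minimal plain java.io sketch of that loop for illustration only; it is not Hadoop's source, and the class name CopyBytesSketch is hypothetical. It runs without a cluster.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.Closeable;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Illustrative sketch of what IOUtils.copyBytes(in, out, buffSize, close) does.
// Not Hadoop's source; the class name CopyBytesSketch is hypothetical.
final class CopyBytesSketch {

    /** Copies all bytes from in to out through a buffSize-byte buffer and returns the count. */
    static long copyBytes(InputStream in, OutputStream out, int buffSize, boolean close)
            throws IOException {
        byte[] buf = new byte[buffSize];
        long total = 0;
        boolean closedNormally = false;
        try {
            int n;
            // read() returns -1 once the input is exhausted
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
            }
            if (close) {
                // Close normally so errors (e.g. a failed final flush) still surface
                out.close();
                in.close();
            }
            closedNormally = true;
        } finally {
            if (close && !closedNormally) {
                // On failure, close both streams without masking the original exception
                closeQuietly(out);
                closeQuietly(in);
            }
        }
        return total;
    }

    private static void closeQuietly(Closeable c) {
        try {
            c.close();
        } catch (IOException ignored) {
            // best effort only
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[10_000]; // larger than one 4096-byte buffer
        for (int i = 0; i < data.length; i++) {
            data[i] = (byte) i;
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copyBytes(new ByteArrayInputStream(data), out, 4096, true);
        System.out.println("copied " + copied + " bytes"); // prints "copied 10000 bytes"
    }
}
```

The buffer size only affects how many read/write calls are made, not the result; passing false for the last argument leaves closing the streams to the caller.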

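```java
package com.kaikeba.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

/**
 * Writes a file from the local file system to HDFS through the Java API.
 */
public class FileCopyFromLocal {

    public static void main(String[] args) {
        // Local source file (change to match your machine)
        String source = "E:\\aa.mp4";
        // Target file in HDFS under /data (change to match your environment)
        String destination = "hdfs://122.51.241.109:9000/data/hdfs01.mp4";
        InputStream in = null;
        try {
            in = new BufferedInputStream(new FileInputStream(source));
            // Configuration used for HDFS reads and writes
            Configuration conf = new Configuration();
            // FileSystem object for the cluster named in the URI
            FileSystem fs = FileSystem.get(URI.create(destination), conf);
            // Create the target file (overwritten if it exists) and get an output stream to it
            OutputStream out = fs.create(new Path(destination));
            // Copy with a 4096-byte buffer; true closes both streams afterwards
            IOUtils.copyBytes(in, out, 4096, true);
        } catch (IOException e) {
            // Also covers FileNotFoundException when the local source is missing
            e.printStackTrace();
        }
    }
}
```

**Copy a file from HDFS to the local file system**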
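The download program opens the same hdfs:// URI the upload wrote to. FileSystem.get(URI, Configuration) picks the file system implementation from the URI's scheme (hdfs) and connects to the NameNode named in its authority (host and RPC port), while new Path(...) refers to the file inside HDFS; if the URI carried neither a scheme nor an authority, the default file system from fs.defaultFS would be used instead. The sketch below uses only java.net.URI to show how that string splits up, i.e. which parts to edit for your own cluster; the helper name HdfsUriParts is hypothetical.

```java
import java.net.URI;

// Hypothetical helper: splits an hdfs:// URI into the parts FileSystem.get and Path rely on.
final class HdfsUriParts {

    /** Returns {scheme, host, port, path} for the given URI string. */
    static String[] split(String hdfsUri) {
        URI uri = URI.create(hdfsUri);
        return new String[] {
            uri.getScheme(),               // "hdfs": selects the HDFS client implementation
            uri.getHost(),                 // NameNode host
            String.valueOf(uri.getPort()), // NameNode RPC port (-1 if the URI has none)
            uri.getPath()                  // file path inside HDFS
        };
    }

    public static void main(String[] args) {
        String[] parts = split("hdfs://122.51.241.109:9000/data/hdfs01.mp4");
        System.out.println("scheme = " + parts[0]); // hdfs
        System.out.println("host   = " + parts[1]); // 122.51.241.109
        System.out.println("port   = " + parts[2]); // 9000
        System.out.println("path   = " + parts[3]); // /data/hdfs01.mp4
    }
}
```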

package com.kaikeba.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import java.io.BufferedOutputStream;

import java.io.FileOutputStream;

import java.io.IOException;

import java.net.URI;

/**

* 从HDFS读取文件

* 打包运行jar包 [bruce@node-01 Desktop]$ hadoop jar com.kaikeba.hadoop-1.0-SNAPSHOT.jar com.kaikeba.hadoop.hdfs.FileReadFromHdfs

*/

public class FileReadFromHdfs {

public static void main(String[] args) {

try {

//

String srcFile = "hdfs://122.51.241.109:9000/data/hdfs01.mp4";

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(srcFile),conf);

FSDataInputStream hdfsInStream = fs.open(new Path(srcFile));

BufferedOutputStream outputStream = new BufferedOutputStream(new FileOutputStream("/opt/hdfs01.mp4"));

IOUtils.copyBytes(hdfsInStream, outputStream, 4096, true);

} catch (IOException e) {

e.printStackTrace();

}

}

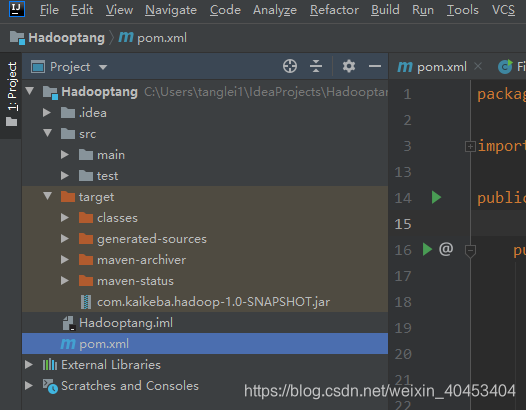

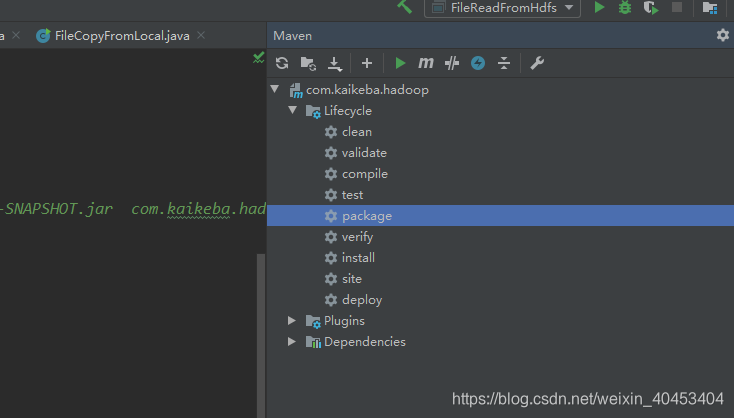

}双击package

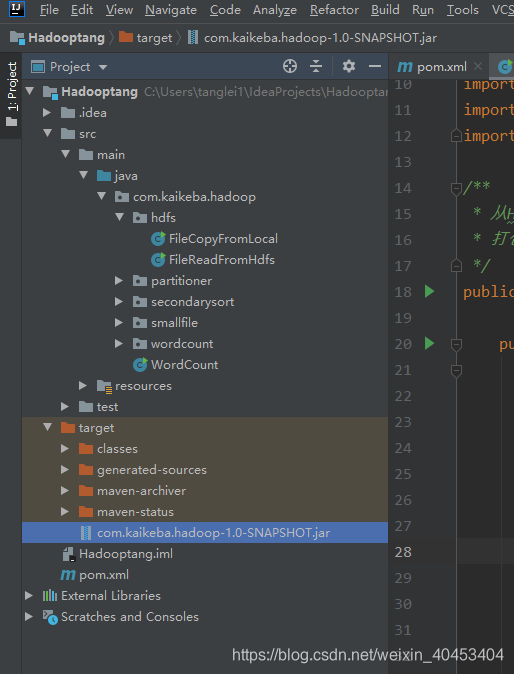

This produces com.kaikeba.hadoop-1.0-SNAPSHOT.jar in the target directory; copy it to the server and run it there.

Run it with: hadoop jar com.kaikeba.hadoop-1.0-SNAPSHOT.jar com.kaikeba.hadoop.hdfs.FileReadFromHdfs

Note: com.kaikeba.hadoop.hdfs.FileReadFromHdfs is the fully qualified class name; change it to match your own project.
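hadoop jar runs the given main class and passes any arguments after the class name on to its main method. The programs above hard-code both paths, so pointing them at a different cluster or file means editing and repackaging. One option, not used in the original, is to read the paths from args; below is a minimal sketch built around a hypothetical helper ReadArgs that falls back to the article's hard-coded paths when arguments are missing.

```java
// Hypothetical helper (not part of the original project): resolves the HDFS source
// and local target for FileReadFromHdfs from command-line arguments.
final class ReadArgs {

    static final String DEFAULT_SRC = "hdfs://122.51.241.109:9000/data/hdfs01.mp4";
    static final String DEFAULT_DST = "/opt/hdfs01.mp4";

    /** Returns {srcFile, localFile}; each missing argument falls back to its default. */
    static String[] resolve(String[] args) {
        String src = args.length > 0 ? args[0] : DEFAULT_SRC;
        String dst = args.length > 1 ? args[1] : DEFAULT_DST;
        return new String[] {src, dst};
    }

    public static void main(String[] args) {
        String[] paths = resolve(args);
        System.out.println("read  " + paths[0]);
        System.out.println("write " + paths[1]);
    }
}
```

If FileReadFromHdfs.main were changed to take its paths from ReadArgs.resolve(args), the same jar could be run as hadoop jar com.kaikeba.hadoop-1.0-SNAPSHOT.jar com.kaikeba.hadoop.hdfs.FileReadFromHdfs hdfs://122.51.241.109:9000/data/hdfs01.mp4 /opt/hdfs01.mp4, with different values substituted as needed.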

Hadoop Learning Path (4): Building a Hadoop Project with IntelliJ + Maven


Original article: https://blog.51cto.com/10312890/2461930