
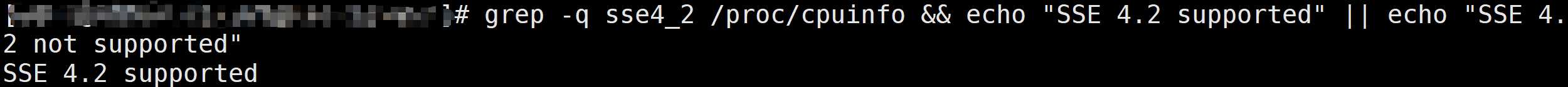

Environment: CentOS 7.6

Check whether the CPU supports SSE 4.2 (the prebuilt ClickHouse packages require it):

grep -q sse4_2 /proc/cpuinfo && echo "SSE 4.2 supported" || echo "SSE 4.2 not supported"
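The same check can be wrapped in a function so that a pre-install script stops early on an unsupported CPU. This is a minimal sketch: the function name has_sse42 and its optional file argument (there only so the check can be tried against a sample file) are illustrative and not part of ClickHouse.

```bash
#!/usr/bin/env bash
# has_sse42 (hypothetical helper): succeed if the cpuinfo file lists the
# sse4_2 flag. The argument defaults to /proc/cpuinfo; passing a sample
# file makes the check testable on any machine.
has_sse42() {
  local cpuinfo=${1:-/proc/cpuinfo}
  # -w matches sse4_2 as a whole word; a missing file simply counts as "no"
  grep -qw sse4_2 "$cpuinfo" 2>/dev/null
}

if has_sse42; then
  echo "SSE 4.2 supported"
else
  echo "SSE 4.2 not supported, stop the installation here" >&2
fi
```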

Open file limits

Append the following to the end of /etc/security/limits.conf:

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

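# "*" means the limit applies to all users;

# "soft" is the soft limit: the value actually enforced, which a user may raise up to the hard limit;

# "hard" is the hard limit: the ceiling, which only root can raise;

# "nofile" is the maximum number of open files;

# "nproc" is the maximum number of processes

User process limits:

vim /etc/security/limits.d/20-nproc.conf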

* soft nproc 131072

root soft nproc unlimited

These settings take effect after the server is rebooted.

To apply temporarily (current shell session only): ulimit -n 65535
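Appending by hand makes it easy to add duplicate entries when the setup is repeated. A minimal sketch of an idempotent version, assuming bash: the helper names add_limit and configure_limits are hypothetical, and the file path is a parameter so the function can be tried on a scratch file before it is pointed at /etc/security/limits.conf (which needs root).

```bash
#!/usr/bin/env bash
# add_limit (hypothetical): append one limits.conf entry unless the exact
# same line is already present, so re-running the setup adds nothing.
add_limit() {
  local file=$1 entry=$2
  grep -qxF -- "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}

# configure_limits (hypothetical): write the four entries used in this
# article. Defaults to the real file; pass another path to experiment.
configure_limits() {
  local file=${1:-/etc/security/limits.conf}
  add_limit "$file" '* soft nofile 65536'
  add_limit "$file" '* hard nofile 65536'
  add_limit "$file" '* soft nproc 131072'
  add_limit "$file" '* hard nproc 131072'
}
```

After logging in again, ulimit -n (soft limit) and ulimit -Hn (hard limit) should both report 65536.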

Disable SELinux:

vim /etc/selinux/config

SELINUX=disabled

Install the dependencies:

yum install -y libtool

yum install -y '*unixODBC*'

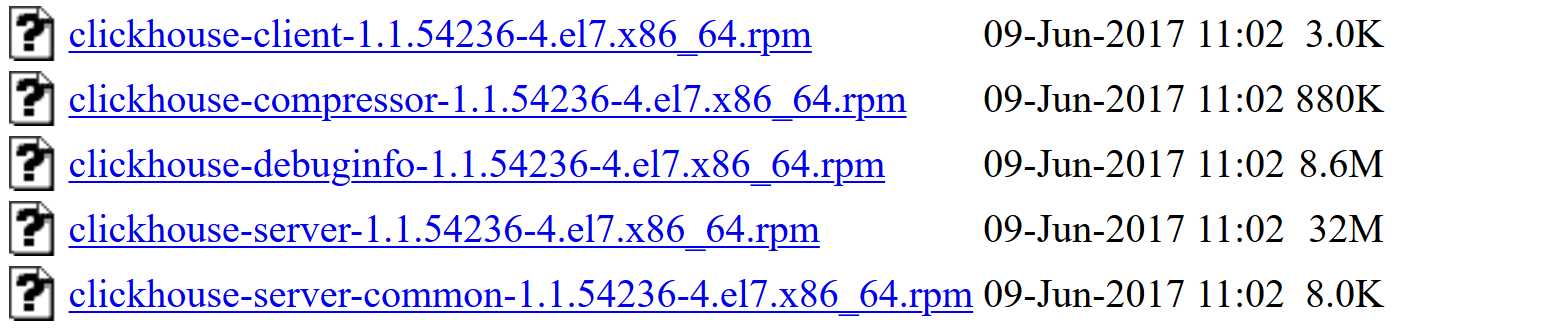

yum install libicu.x86_64

Official site: https://clickhouse.yandex/

Download: http://repo.red-soft.biz/repos/clickhouse/stable/el7/

rpm -ivh clickhouse-server-common-1.1.54236-4.el7.x86_64.rpm

rpm -ivh clickhouse-server-1.1.54236-4.el7.x86_64.rpm

rpm -ivh clickhouse-compressor-1.1.54236-4.el7.x86_64.rpm

rpm -ivh clickhouse-debuginfo-1.1.54236-4.el7.x86_64.rpm

rpm -ivh clickhouse-client-1.1.54236-4.el7.x86_64.rpm

Start in the foreground:

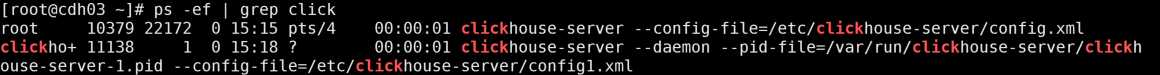

clickhouse-server --config-file=/etc/clickhouse-server/config.xml

Start in the background:

nohup clickhouse-server --config-file=/etc/clickhouse-server/config.xml >/dev/null 2>&1 &

# /dev/null is the null device; think of it as a "black hole": everything written to it is discarded

# 0 is stdin (standard input)

# 1 is stdout (standard output)

# 2 is stderr (standard error)

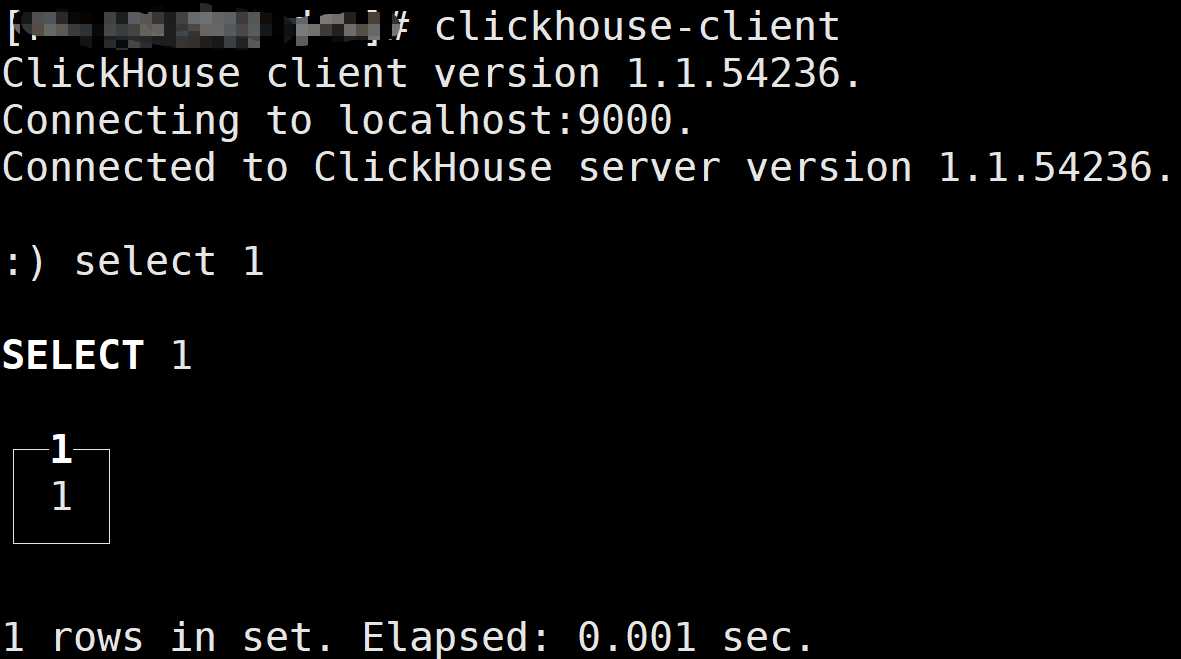

# 2>&1 redirects standard error to wherever standard output points; here that means stderr also goes to /dev/null

Connect with the client:

clickhouse-client

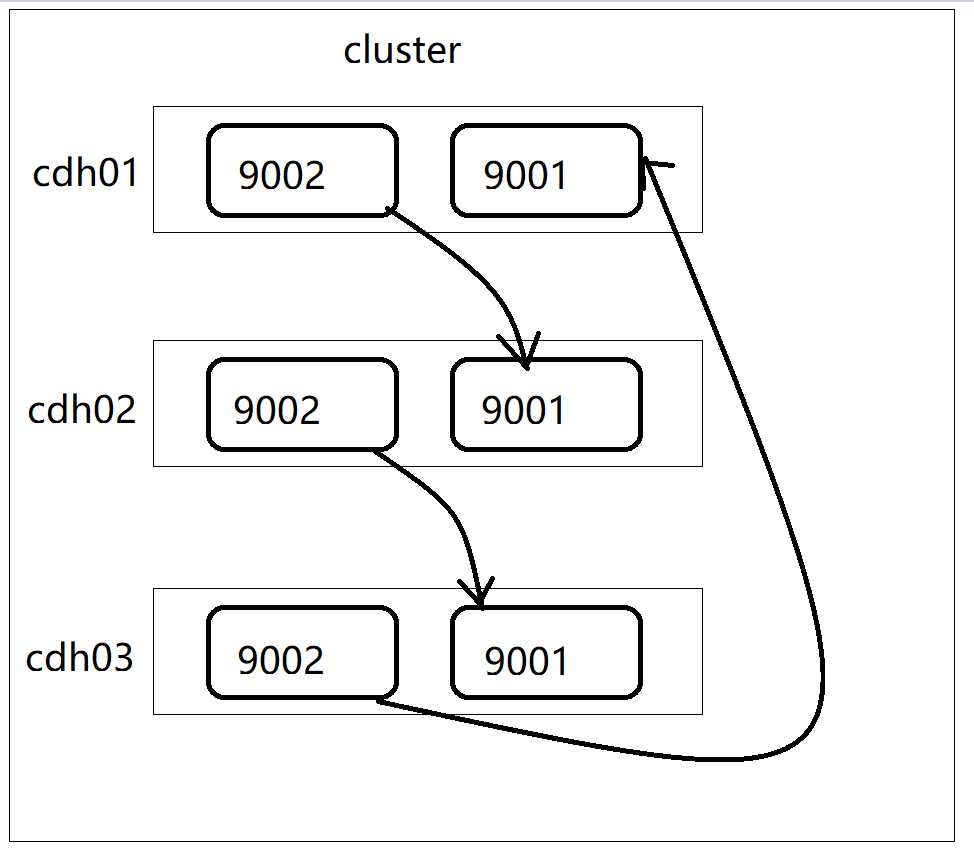
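In the background start command, the order of the two redirections matters, because 2>&1 copies wherever stdout points at that moment. A small bash sketch comparing the order used above with the reversed one; the function names are illustrative.

```bash
#!/usr/bin/env bash
# Write one line to stdout and one to stderr.
emit_both() {
  echo "to stdout"
  echo "to stderr" >&2
}

# Order used in the nohup command: stdout goes to /dev/null first, then
# stderr is pointed at the same place, so nothing is printed.
quiet_both() {
  emit_both >/dev/null 2>&1
}

# Reversed order: stderr is duplicated onto the *original* stdout before
# stdout is sent to /dev/null, so the stderr line still appears.
quiet_stdout_only() {
  emit_both 2>&1 >/dev/null
}
```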

ClickHouse cluster:

3 nodes

2 ClickHouse instances per node

3 shards, 2 replicas

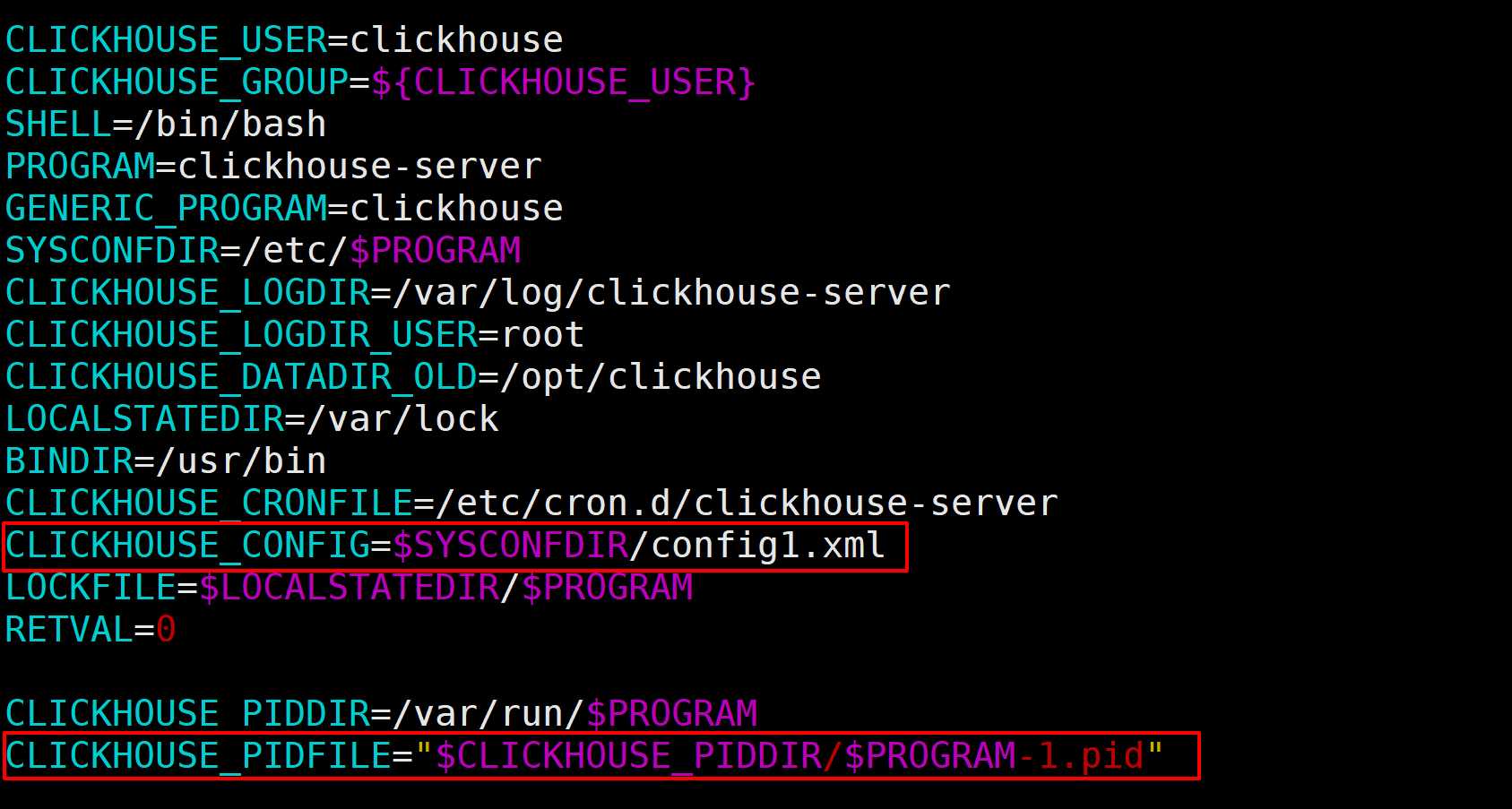
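The placement used in this article is a ring: shard n keeps replica 1 on node n (instance 1, port 9002) and replica 2 on the next node (instance 2, port 9001). A hypothetical bash helper that prints this placement for a list of hostnames, handy for checking the remote_servers section of metrika.xml; it assumes the two ports used in this article.

```bash
#!/usr/bin/env bash
# print_layout (hypothetical): print shard -> replica placement for the
# given nodes, wrapping around so the last shard's replica 2 lands on
# the first node.
print_layout() {
  local nodes=("$@")
  local n=${#nodes[@]}
  local i
  for ((i = 0; i < n; i++)); do
    local primary=${nodes[i]}
    local secondary=${nodes[(i + 1) % n]}
    printf 'shard %02d: %s:9002 (replica 1), %s:9001 (replica 2)\n' \
      "$((i + 1))" "$primary" "$secondary"
  done
}

print_layout cdh01 cdh02 cdh03
```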

cp /etc/rc.d/init.d/clickhouse-server /etc/rc.d/init.d/clickhouse-server-1

vim /etc/rc.d/init.d/clickhouse-server-1

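In the copy, set the config file to config1.xml and the pid file to clickhouse-server-1.pid.

Instance 1 (config.xml): change tcp_port to 9002, and set interserver_http_host to a different value on each node.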

vim /etc/clickhouse-server/config.xml

<?xml version="1.0"?>

<yandex>

<logger>

<level>trace</level>

<log>/var/log/clickhouse-server/clickhouse-server.log</log>

<errorlog>/var/log/clickhouse-server/clickhouse-server.err.log</errorlog>

<size>1000M</size>

<count>10</count>

</logger>

<!-- HTTP port -->

<http_port>8123</http_port>

<openSSL>

<server>

<certificateFile>/etc/clickhouse-server/server.crt</certificateFile>

<privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>

<dhParamsFile>/etc/clickhouse-server/dhparam.pem</dhParamsFile>

<verificationMode>none</verificationMode>

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

</server>

<client>

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

<invalidCertificateHandler>

<name>RejectCertificateHandler</name>

</invalidCertificateHandler>

</client>

</openSSL>

<!-- TCP port (native protocol) -->

<tcp_port>9002</tcp_port>

<!-- Port for communication between replicas. Used for data exchange. -->

<interserver_http_port>9009</interserver_http_port>

<!-- Hostname that other replicas use to send requests to this server -->

<interserver_http_host>cdh01</interserver_http_host>

<!-- Comment out the default listen hosts -->

<!--<listen_host>::</listen_host>-->

<!--<listen_host>::1</listen_host>-->

<!--<listen_host>127.0.0.1</listen_host>-->

<!-- Listen address -->

<listen_host>0.0.0.0</listen_host>

<max_connections>4096</max_connections>

<keep_alive_timeout>3</keep_alive_timeout>

<!-- Maximum number of concurrent queries -->

<max_concurrent_queries>100</max_concurrent_queries>

<!-- Sizes below are in bytes -->

<uncompressed_cache_size>8589934592</uncompressed_cache_size>

<mark_cache_size>5368709120</mark_cache_size>

<!-- Data path -->

<path>/data1/clickhouse/</path>

<!-- Temporary data path -->

<tmp_path>/data1/clickhouse/tmp/</tmp_path>

<users_config>users.xml</users_config>

<default_profile>default</default_profile>

<default_database>default</default_database>

<!-- Set the time zone to UTC+8 -->

<timezone>Asia/Shanghai</timezone>

<remote_servers incl="clickhouse_remote_servers" />

<!-- Path of the substitutions file used by incl; the default is /etc/metrika.xml -->

<include_from>/etc/clickhouse-server/metrika.xml</include_from>

<zookeeper incl="zookeeper-servers" optional="true" />

<macros incl="macros" optional="true" />

<builtin_dictionaries_reload_interval>3600</builtin_dictionaries_reload_interval>

<query_log>

<database>system</database>

<table>query_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</query_log>

<dictionaries_config>*_dictionary.xml</dictionaries_config>

<compression incl="clickhouse_compression">

</compression>

<resharding>

<task_queue_path>/clickhouse/task_queue</task_queue_path>

</resharding>

<!-- Size limit for dropping large tables (default 50 GB); 0 allows dropping tables of any size -->

<max_table_size_to_drop>0</max_table_size_to_drop>

<graphite_rollup_example>

<pattern>

<regexp>click_cost</regexp>

<function>any</function>

<retention>

<age>0</age>

<precision>3600</precision>

</retention>

<retention>

<age>86400</age>

<precision>60</precision>

</retention>

</pattern>

<default>

<function>max</function>

<retention>

<age>0</age>

<precision>60</precision>

</retention>

<retention>

<age>3600</age>

<precision>300</precision>

</retention>

<retention>

<age>86400</age>

<precision>3600</precision>

</retention>

</default>

</graphite_rollup_example>

</yandex>

Instance 2 (config1.xml): change tcp_port to 9001, http_port to 8124 and the replication port (interserver_http_port) to 9011; interserver_http_host again differs on each node.
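Since instance 2 differs from instance 1 only in ports, paths and file names, config1.xml can be derived from config.xml with sed. A sketch, assuming config.xml contains exactly the values shown above (8123, 9002, 9009, /data1/clickhouse/); make_instance2_config is a hypothetical name, and interserver_http_host is left alone because both instances on a node share the same host.

```bash
#!/usr/bin/env bash
# make_instance2_config (hypothetical): write a copy of SRC to DST with the
# instance-2 ports, data paths, log names and metrika file swapped in.
make_instance2_config() {
  local src=$1 dst=$2
  sed -e 's#<http_port>8123</http_port>#<http_port>8124</http_port>#' \
      -e 's#<tcp_port>9002</tcp_port>#<tcp_port>9001</tcp_port>#' \
      -e 's#<interserver_http_port>9009</interserver_http_port>#<interserver_http_port>9011</interserver_http_port>#' \
      -e 's#/data1/clickhouse/#/data1/clickhouse_1/#g' \
      -e 's#clickhouse-server\.log#clickhouse-server_1.log#' \
      -e 's#clickhouse-server\.err\.log#clickhouse-server_1.err.log#' \
      -e 's#metrika\.xml#metrika_1.xml#' \
      "$src" > "$dst"
}
```

For example: make_instance2_config /etc/clickhouse-server/config.xml /etc/clickhouse-server/config1.xml, then diff the two files to confirm only the intended lines changed.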

Main changes:

<logger>

<level>trace</level>

<log>/var/log/clickhouse-server/clickhouse-server_1.log</log>

<errorlog>/var/log/clickhouse-server/clickhouse-server_1.err.log</errorlog>

<size>1000M</size>

<count>10</count>

</logger>

<http_port>8124</http_port>

<!-- Data path -->

<path>/data1/clickhouse_1/</path>

<!-- Temporary data path -->

<tmp_path>/data1/clickhouse_1/tmp/</tmp_path>

<tcp_port>9001</tcp_port>

<!-- Port for communication between replicas. Used for data exchange. -->

<interserver_http_port>9011</interserver_http_port>

<!-- Substitutions file for this instance (the default would be /etc/metrika.xml) -->

<include_from>/etc/clickhouse-server/metrika_1.xml</include_from>

vim /etc/clickhouse-server/users.xml

<?xml version="1.0"?>

<yandex>

<profiles>

<default>

<max_memory_usage>10000000000</max_memory_usage>

<use_uncompressed_cache>0</use_uncompressed_cache>

<load_balancing>random</load_balancing>

</default>

<readonly>

<max_memory_usage>10000000000</max_memory_usage>

<use_uncompressed_cache>0</use_uncompressed_cache>

<load_balancing>random</load_balancing>

<readonly>1</readonly>

</readonly>

</profiles>

<quotas>

<!-- Name of quota. -->

<default>

<interval>

<duration>3600</duration>

<queries>0</queries>

<errors>0</errors>

<result_rows>0</result_rows>

<read_rows>0</read_rows>

<execution_time>0</execution_time>

</interval>

</default>

</quotas>

<users>

<!-- Read-write user -->

<default>

<password_sha256_hex>37a8eec1ce19687d132fe29051dca629d164e2c4958ba141d5f4133a33f0688f</password_sha256_hex>

<networks incl="networks" replace="replace">

<ip>::/0</ip>

</networks>

<profile>default</profile>

<quota>default</quota>

</default>

<!-- Read-only user -->

<ck>

<password_sha256_hex>d93beca6efd0421b314c081066064ac0e371b306f715cc0935b2879e249ba9df</password_sha256_hex>

<networks incl="networks" replace="replace">

<ip>::/0</ip>

</networks>

<profile>readonly</profile>

<quota>default</quota>

</ck>

</users>

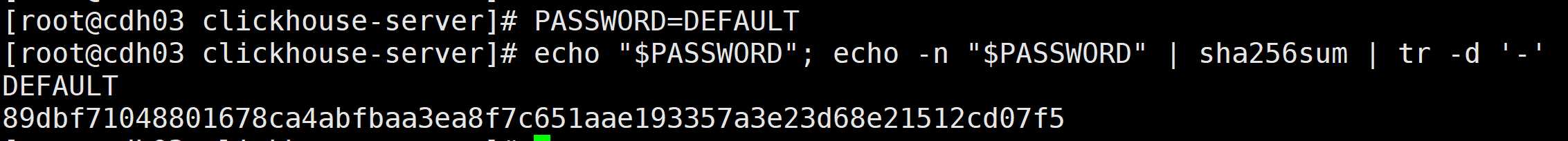

</yandex>

How is the password generated?

The password is stored as a hash rather than in plain text: the SHA-256 digest produced by sha256sum.

PASSWORD=default

echo "$PASSWORD"; echo -n "$PASSWORD" | sha256sum | tr -d '-'
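The same computation as a reusable function. A minimal sketch: password_sha256_hex is a hypothetical name. It uses awk to keep only the hex digest, whereas tr -d '-' in the command above leaves two trailing spaces after the hash, which are easy to paste into users.xml by mistake.

```bash
#!/usr/bin/env bash
# password_sha256_hex (hypothetical): print the value for
# <password_sha256_hex> in users.xml. printf '%s' avoids the trailing
# newline that a plain echo would add to the hashed input.
password_sha256_hex() {
  printf '%s' "$1" | sha256sum | awk '{print $1}'
}

password_sha256_hex default
```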

Instance 1 on cdh01, cdh02, cdh03:

vim /etc/clickhouse-server/metrika.xml

Instance 2 on cdh01, cdh02, cdh03:

vim /etc/clickhouse-server/metrika_1.xml

<yandex>

<clickhouse_remote_servers>

<!-- 3 shards, 2 replicas -->

<perftest_3shards_2replicas>

<!-- Shard 1 -->

<shard>

<!-- Shard weight: the relative share of inserted data routed to this shard -->

<weight>1</weight>

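<!-- true: write to only one replica and let the replicated table copy the data to the others; the default, false, writes to every replica -->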

<internal_replication>true</internal_replication>

<replica>

<host>cdh01</host>

<port>9002</port>

<user>default</user>

<password>default</password>

</replica>

<replica>

<host>cdh02</host>

<port>9001</port>

<user>default</user>

<password>default</password>

</replica>

</shard>

<!-- Shard 2 -->

<shard>

<!-- Shard weight: the relative share of inserted data routed to this shard -->

<weight>1</weight>

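<!-- true: write to only one replica and let the replicated table copy the data to the others; the default, false, writes to every replica -->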

<internal_replication>true</internal_replication>

<replica>

<host>cdh02</host>

<port>9002</port>

<user>default</user>

<password>default</password>

</replica>

<replica>

<host>cdh03</host>

<port>9001</port>

<user>default</user>

<password>default</password>

</replica>

</shard>

<!-- Shard 3 -->

<shard>

<!-- Shard weight: the relative share of inserted data routed to this shard -->

<weight>1</weight>

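<!-- true: write to only one replica and let the replicated table copy the data to the others; the default, false, writes to every replica -->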

<internal_replication>true</internal_replication>

<replica>

<host>cdh03</host>

<port>9002</port>

<user>default</user>

<password>default</password>

</replica>

<replica>

<host>cdh01</host>

<port>9001</port>

<user>default</user>

<password>default</password>

</replica>

</shard>

</perftest_3shards_2replicas>

</clickhouse_remote_servers>

<zookeeper-servers>

<node index="1">

<host>cdh01</host>

<port>2181</port>

</node>

<node index="2">

<host>cdh02</host>

<port>2181</port>

</node>

<node index="3">

<host>cdh03</host>

<port>2181</port>

</node>

</zookeeper-servers>

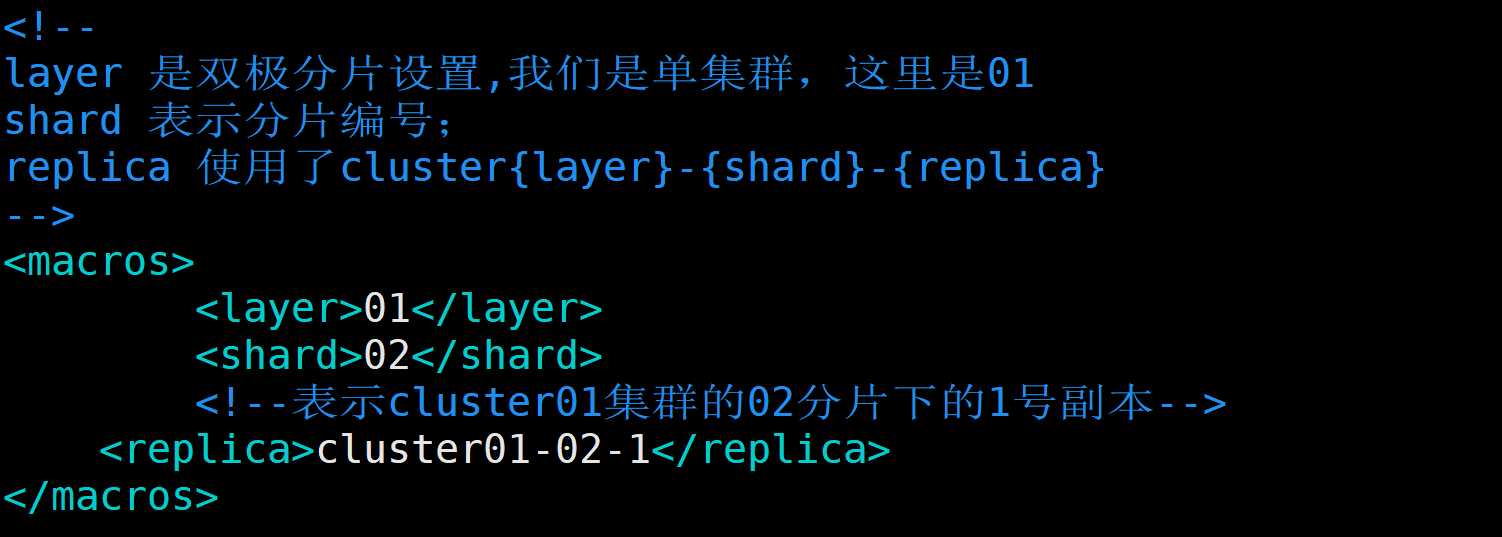

<!--

layer is for two-level sharding; this is a single cluster, so it is 01;

shard is the shard number;

replica has the form cluster{layer}-{shard}-{replica}

-->

<macros>

<layer>01</layer>

<shard>03</shard>

<!-- Replica 1 of shard 03 in cluster01 (cdh03, instance 1) -->

<replica>cluster01-03-1</replica>

</macros>

<networks>

<ip>::/0</ip>

</networks>

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method>

</case>

</clickhouse_compression>

</yandex>

Note: apart from macros, the metrika.xml configuration is identical on all 6 instances.
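Because macros is the only per-instance difference, it can be generated from the node number and the instance number. A sketch following this article's ring layout (instance 1 on node n holds replica 1 of shard n; instance 2 holds replica 2 of the previous node's shard); macros_for is hypothetical and assumes exactly 3 nodes and layer 01.

```bash
#!/usr/bin/env bash
# macros_for NODE INSTANCE (hypothetical): print the <macros> block for
# node 1..3 and instance 1 or 2.
macros_for() {
  local node=$1 instance=$2 nodes=3 shard
  if [ "$instance" -eq 1 ]; then
    shard=$node
  else
    # Instance 2 holds replica 2 of the previous node's shard (ring wrap).
    shard=$(( (node + nodes - 2) % nodes + 1 ))
  fi
  printf '<macros>\n  <layer>01</layer>\n  <shard>%02d</shard>\n  <replica>cluster01-%02d-%d</replica>\n</macros>\n' \
    "$shard" "$shard" "$instance"
}

macros_for 3 1
```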

Start the 6 instances; either of two methods works:

nohup clickhouse-server --config-file=/etc/clickhouse-server/config.xml >/dev/null 2>&1 &

service clickhouse-server-1 start

Connect to each of the 6 instances:

clickhouse-client -h cdh01 -m -u default --password default --port 9002

clickhouse-client -h cdh01 -m -u default --password default --port 9001

clickhouse-client -h cdh02 -m -u default --password default --port 9002

clickhouse-client -h cdh02 -m -u default --password default --port 9001

clickhouse-client -h cdh03 -m -u default --password default --port 9002

clickhouse-client -h cdh03 -m -u default --password default --port 9001

The cluster uses ReplicatedMergeTree (local tables) together with Distributed (the cluster-wide table) as its engines:

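ReplicatedMergeTree(zoo_path, replica_name, partition_date_column, primary_key, 8192)

(legacy parameter syntax: the date column determines the monthly partitions, and 8192 is the index granularity)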

Distributed(cluster, database, local_table[, sharding_key])

create database ontime;
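create database (like the CREATE TABLE statements below) has to be executed on each instance separately. A sketch of a loop over the six host/port pairs used in this article; run_on_all is hypothetical, and the CLICKHOUSE_CLIENT variable exists only so the loop can be dry-run with echo. --query is clickhouse-client's option for running a single statement non-interactively.

```bash
#!/usr/bin/env bash
# run_on_all (hypothetical): execute one statement on all six instances.
# CLICKHOUSE_CLIENT defaults to clickhouse-client; set it to "echo" for a
# dry run that only prints the arguments.
run_on_all() {
  local query=$1 host port
  for host in cdh01 cdh02 cdh03; do
    for port in 9002 9001; do
      echo "== $host:$port"
      ${CLICKHOUSE_CLIENT:-clickhouse-client} -h "$host" --port "$port" \
        -u default --password default --query "$query"
    done
  done
}
```

For example: run_on_all 'create database if not exists ontime'.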

use ontime;

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-01/ontime','cluster01-01-1',FlightDate,(Year, FlightDate),8192); -- cdh01 instance 1, shard 01, replica 1

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-03/ontime','cluster01-03-2',FlightDate,(Year, FlightDate),8192); -- cdh01 instance 2, shard 03, replica 2

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-02/ontime','cluster01-02-1',FlightDate,(Year, FlightDate),8192); -- cdh02 instance 1, shard 02, replica 1

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-01/ontime','cluster01-01-2',FlightDate,(Year, FlightDate),8192); -- cdh02 instance 2, shard 01, replica 2

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-03/ontime','cluster01-03-1',FlightDate,(Year, FlightDate),8192); -- cdh03 instance 1, shard 03, replica 1

CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-02/ontime','cluster01-02-2',FlightDate,(Year, FlightDate),8192); -- cdh03 instance 2, shard 02, replica 2
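The six statements differ only in the shard number inside the ZooKeeper path and in the replica name. A sketch that derives the statement from the node and instance numbers, following the same ring layout; create_local_table_sql is hypothetical and assumes 3 nodes.

```bash
#!/usr/bin/env bash
# create_local_table_sql NODE INSTANCE (hypothetical): print the CREATE
# TABLE statement for the local replicated table on that instance.
create_local_table_sql() {
  local node=$1 instance=$2 nodes=3 shard
  if [ "$instance" -eq 1 ]; then
    shard=$node
  else
    # Instance 2 holds replica 2 of the previous node's shard.
    shard=$(( (node + nodes - 2) % nodes + 1 ))
  fi
  printf "CREATE TABLE ontime (FlightDate Date,Year UInt16) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01-%02d/ontime','cluster01-%02d-%d',FlightDate,(Year, FlightDate),8192);\n" \
    "$shard" "$shard" "$instance"
}
```

The output can be piped into the client, e.g. create_local_table_sql 2 2 | clickhouse-client -h cdh02 --port 9001 -u default --password default. ClickHouse can also substitute the configured macros in these parameters ('/clickhouse/tables/{layer}-{shard}/ontime', '{replica}'), which makes a single statement identical on every instance.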

CREATE TABLE ontime_all (FlightDate Date,Year UInt16) ENGINE= Distributed(perftest_3shards_2replicas, ontime, ontime, rand()); -- run on every instance

-- Run this statement on cdh01 instance 1 (shard 01, replica 1)

insert into ontime (FlightDate,Year)values('2001-10-12',2001);

-- Run this statement on all 6 instances

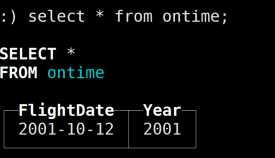

select * from ontime;

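Only the local tables on cdh01 instance 1 (shard 01, replica 1) and cdh02 instance 2 (shard 01, replica 2) return the row. The data was replicated to the second replica, so the cluster keeps working if one of the two replicas goes down.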

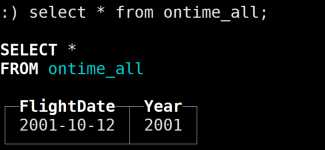

-- Run this statement on all 6 instances

select * from ontime_all;

The row is returned on every instance: the distributed table can be read from any node.


Original article: https://www.cnblogs.com/wuning/p/12061756.html