
The code is as follows:

    # encoding: utf-8
    import io  # in-memory byte streams
    import datetime
    import os  # operating-system interface, used here for environment variables

    # Must be set before TensorFlow is imported to take effect.
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # only show warnings and errors

    import matplotlib.pyplot as plt
    import tensorflow as tf
    from tensorflow import keras
    # common layers, the Sequential container, optimizers, loss functions, metrics
    from tensorflow.keras import layers, Sequential, optimizers, losses, metrics


    def preprocess(x, y):
        # scale pixels to [0, 1] and cast labels to int32
        x = tf.cast(x, dtype=tf.float32) / 255.
        y = tf.cast(y, dtype=tf.int32)
        return x, y


    def plot_to_image(figure):
        buf = io.BytesIO()
        # save the figure to an in-memory buffer
        figure.savefig(buf, format='png')
        plt.close(figure)
        buf.seek(0)
        # convert the PNG bytes to a TF image tensor with a batch dimension
        image = tf.image.decode_png(buf.getvalue(), channels=4)
        image = tf.expand_dims(image, 0)
        return image


    def image_grid(images):
        # return a 5x5 grid of MNIST images as a matplotlib figure
        figure = plt.figure(figsize=(10, 10))
        for i in range(25):
            plt.subplot(5, 5, i + 1, title='name')
            plt.xticks([])
            plt.yticks([])
            plt.grid(False)
            plt.imshow(images[i], cmap=plt.cm.binary)
        return figure


    batchsz = 128
    path = r'G:\2019\python\mnist.npz'
    (x, y), (x_val, y_val) = tf.keras.datasets.mnist.load_data(path)
    print('datasets:', x.shape, y.shape, x.min(), x.max())

    db = tf.data.Dataset.from_tensor_slices((x, y))
    db = db.map(preprocess).shuffle(60000).batch(batchsz).repeat(10)

    ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
    ds_val = ds_val.map(preprocess).batch(batchsz, drop_remainder=True)

    network = Sequential([
        layers.Dense(256, activation='relu'),
        layers.Dense(128, activation='relu'),
        layers.Dense(64, activation='relu'),
        layers.Dense(32, activation='relu'),
        layers.Dense(10)
    ])
    network.build(input_shape=(None, 28*28))
    network.summary()

    # `lr` is a deprecated alias; `learning_rate` is the supported argument name
    optimizer = optimizers.Adam(learning_rate=0.01)

    current_time = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
    log_dir = 'logs/' + current_time
    # create the summary writer; monitoring data is written under log_dir
    summary_writer = tf.summary.create_file_writer(log_dir)

    sample_img = next(iter(db))[0]
    sample_img = sample_img[0]  # first image of the first batch
    sample_img = tf.reshape(sample_img, [1, 28, 28, 1])
    with summary_writer.as_default():  # make this writer the default
        tf.summary.image("Training sample:", sample_img, step=0)

    # iterate over the batched data: 469 batches per epoch x 10 repeats, steps 0 -> 4689
    for step, (x, y) in enumerate(db):
        with tf.GradientTape() as tape:
            x = tf.reshape(x, (-1, 28*28))
            out = network(x)
            y = tf.one_hot(y, depth=10)
            loss = tf.reduce_mean(
                tf.losses.categorical_crossentropy(y, out, from_logits=True))
        grads = tape.gradient(loss, network.trainable_variables)
        optimizer.apply_gradients(zip(grads, network.trainable_variables))

        if step % 100 == 0:
            print(step, 'loss:', float(loss))
            # write the loss to the 'train-loss' scalar
            with summary_writer.as_default():
                tf.summary.scalar('train-loss', float(loss), step=step)

        if step % 500 == 0:
            total, total_correct = 0., 0
            for m, n in ds_val:
                m = tf.reshape(m, (-1, 28*28))
                out = network(m)
                pred = tf.argmax(out, axis=1)
                pred = tf.cast(pred, dtype=tf.int32)
                correct = tf.equal(pred, n)
                total_correct += tf.reduce_sum(tf.cast(correct, dtype=tf.int32)).numpy()
                total += m.shape[0]
            print(step, 'Evaluate Acc:', total_correct / total)

            # first 25 images of the last validation batch
            val_images = m[:25]
            val_images = tf.reshape(val_images, [-1, 28, 28, 1])
            with summary_writer.as_default():
                # write the test accuracy
                tf.summary.scalar('test-acc', float(total_correct / total), step=step)
                # show the 25 test images one by one
                tf.summary.image("val-onebyone-images:", val_images, max_outputs=25, step=step)
                # show the same 25 images as a single 5x5 grid
                val_images = tf.reshape(val_images, [-1, 28, 28])
                figure = image_grid(val_images)
                tf.summary.image('val-images:', plot_to_image(figure), step=step)
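To make the schedule of the training loop concrete, here is a small sketch that counts how many steps the pipeline yields and how often each summary is written. It assumes the standard MNIST split (60000 training and 10000 test images); `count_steps` is a hypothetical helper written for this illustration, not part of TensorFlow.

```python
import math


def count_steps(num_samples, batch_size, epochs, drop_remainder=False):
    """Number of batches produced by .batch(batch_size).repeat(epochs)."""
    if drop_remainder:
        per_epoch = num_samples // batch_size             # partial batch discarded
    else:
        per_epoch = math.ceil(num_samples / batch_size)   # partial batch kept
    return per_epoch * epochs


# Training set: batch(128) then repeat(10), so the partial batch is kept each epoch.
train_steps = count_steps(60000, 128, 10)
# Validation set: batch(128, drop_remainder=True), iterated once per evaluation.
val_batches = count_steps(10000, 128, 1, drop_remainder=True)

loss_log_steps = [s for s in range(train_steps) if s % 100 == 0]
eval_steps = [s for s in range(train_steps) if s % 500 == 0]

print(train_steps)                            # 4690
print(val_batches * 128)                      # 9984
print(len(loss_log_steps), len(eval_steps))   # 47 10
```

Under these assumptions the loop runs for 4690 steps, the loss curve gets 47 points, and the accuracy curve gets 10 points. Because of `drop_remainder=True`, each evaluation scores 9984 of the 10000 test images.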

In a cmd window, run: tensorboard --logdir "C:\Users\Z He\PycharmProjects\he-learn\logs"
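As a rough sketch of how the log directory and the command fit together, the snippet below builds a timestamped run directory in the same format as the training script and assembles the matching `tensorboard --logdir` command. The helper names `make_run_dir` and `tensorboard_command` and the fixed date are hypothetical, chosen only for illustration.

```python
import datetime
from pathlib import Path


def make_run_dir(root="logs", now=None):
    """Return root/<YYYYmmdd-HHMMSS>, the layout used by the training script."""
    now = now or datetime.datetime.now()
    return Path(root) / now.strftime("%Y%m%d-%H%M%S")


def tensorboard_command(logdir):
    """Build the command as an argument list, so no manual quoting is needed."""
    return ["tensorboard", "--logdir", str(logdir)]


# A fixed timestamp, used here only to make the example reproducible.
run_dir = make_run_dir(now=datetime.datetime(2020, 1, 3, 15, 30, 0))
print(run_dir.name)   # 20200103-153000

# Point TensorBoard at the parent "logs" folder, not at a single run.
cmd = tensorboard_command(run_dir.parent)
print(cmd)            # ['tensorboard', '--logdir', 'logs']
```

Pointing `--logdir` at the parent `logs` folder lets TensorBoard list every timestamped subdirectory as a separate run. Passing the command as a list (for example to `subprocess.run`) avoids quoting problems when the path contains a space, as in `C:\Users\Z He\...`.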

Copy the link and open it in Edge, as shown below:

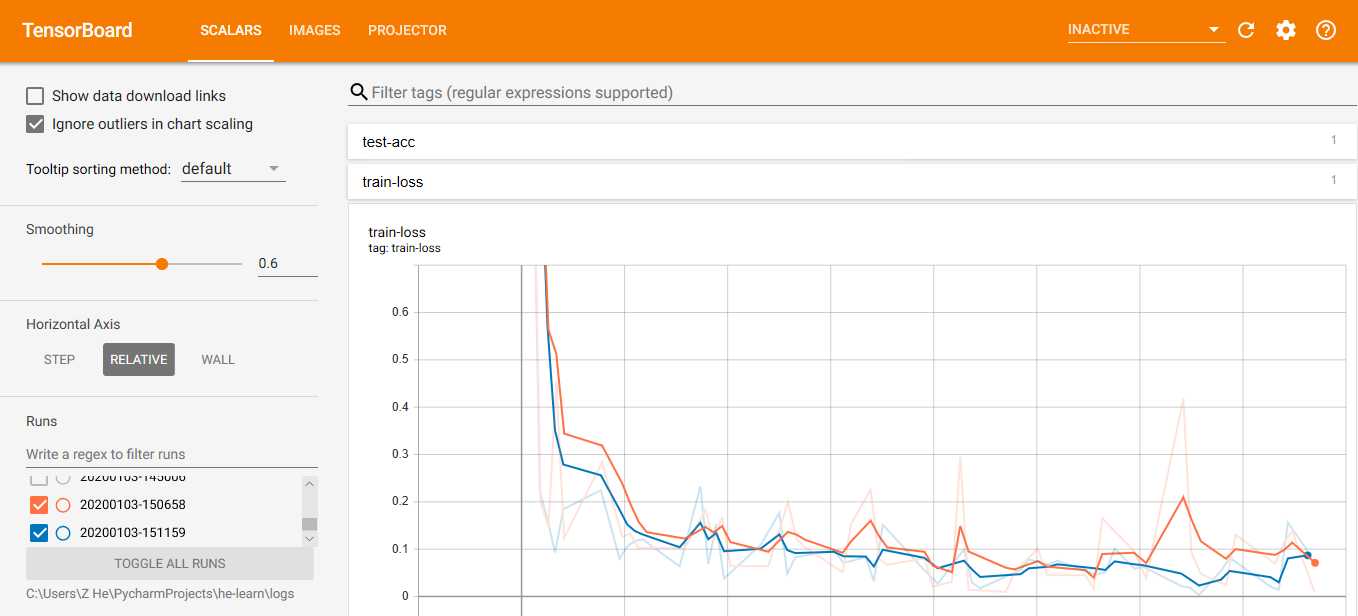

Loss:

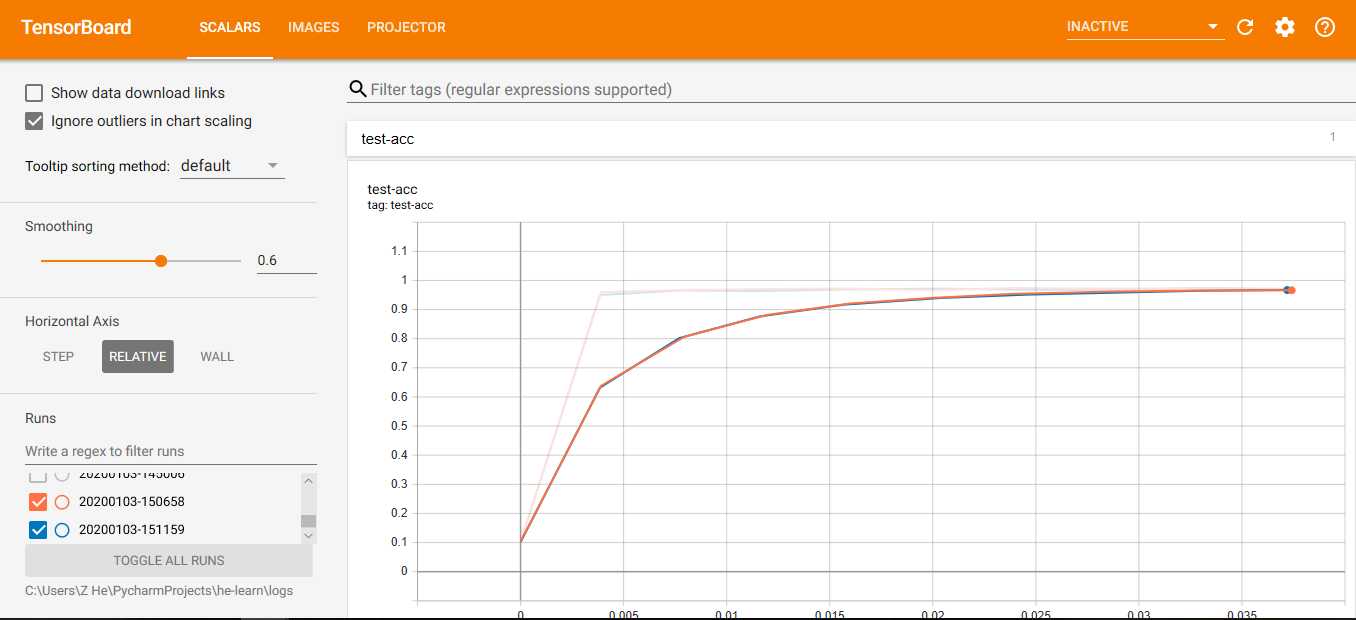
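For reference, the quantity plotted as `train-loss` is the batch mean of the softmax cross-entropy computed from raw logits (`from_logits=True`). The plain-Python sketch below illustrates that formula for integer labels; it is not TensorFlow's implementation, and the function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of one sample, given raw logits and an integer class label."""
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log(softmax(logits)[label]) = logsumexp(logits) - logits[label]
    return log_sum_exp - logits[label]


def mean_loss(batch_logits, labels):
    """Average the per-sample losses over a batch."""
    losses = [softmax_cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


# Ten equal logits give a uniform prediction over the ten digit classes.
uniform = [0.0] * 10
print(round(softmax_cross_entropy(uniform, 3), 4))   # 2.3026
```

A network whose outputs are close to uniform over the 10 classes would therefore be expected to start near ln 10 ≈ 2.30, which is a useful sanity check for the first point on the loss curve.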

Accuracy:

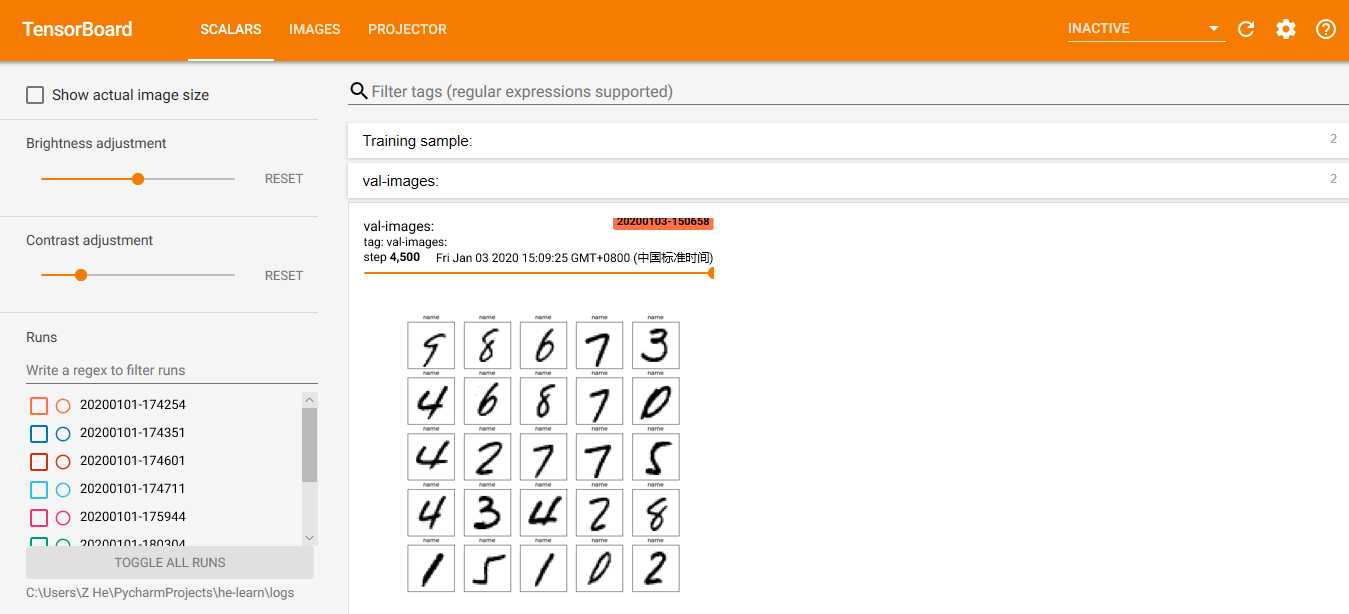
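The `test-acc` value is the fraction of validation samples whose highest-scoring logit matches the label. A minimal plain-Python sketch of that computation follows; the logits and labels are made up for illustration.

```python
def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(batch_logits, labels):
    """Fraction of samples whose predicted class equals the label."""
    correct = sum(1 for z, y in zip(batch_logits, labels) if argmax(z) == y)
    return correct / len(labels)


# Made-up logits for four samples over three classes.
logits = [[0.1, 2.0, -1.0],
          [1.5, 0.2, 0.3],
          [0.0, 0.1, 0.9],
          [0.4, 0.3, 0.2]]
labels = [1, 0, 2, 1]
print(accuracy(logits, labels))   # 0.75
```

The training script does the same thing per batch with `tf.argmax` and `tf.equal`, accumulating the correct count and the sample count across all validation batches before dividing.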

Images:

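Visualization really does help in understanding how training is progressing, so I will use it whenever possible from now on.

Next update: the crescent-shaped (two moons) dataset example from the section on fitting and overfitting.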

TensorFlow 2.0 Learning (9): Getting to Know TensorBoard Visualization


Original article: https://www.cnblogs.com/heze/p/12145166.html