
For the API differences, see the official guide: https://spark.apache.org/docs/2.1.1/sql-programming-guide.html#upgrading-from-spark-sql-16-to-20

1.SparkSession is now the new entry point of Spark that replaces the old SQLContext and HiveContext
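As a minimal sketch of item 1 (the app name and the local master are placeholders, not taken from the original project), a session can be built once and the old-style handles obtained from it where legacy helpers still ask for them:

```scala
import org.apache.spark.sql.SparkSession

// Spark 2.x entry point. "migration-sketch" and local[*] are illustrative placeholders.
val spark = SparkSession.builder()
  .appName("migration-sketch")
  .master("local[*]")
  // .enableHiveSupport() // takes over the role of HiveContext; needs Hive classes on the classpath
  .getOrCreate()

// Helpers that still take a SQLContext or SparkContext can be fed from the session.
val sqlContext = spark.sqlContext
val sc = spark.sparkContext

spark.range(3).show()
```

Passing `spark.sqlContext` to existing functions such as `dataComputerInfo(sqlContext, ...)` lets them be migrated one at a time.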

2.Dataset API and DataFrame API are unified. In Scala, DataFrame becomes a type alias for Dataset[Row]
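A small sketch of what the alias means in practice, assuming a throwaway two-column DataFrame (the column names are invented for illustration): a DataFrame can be assigned to a Dataset[Row] with no conversion, and `as[...]` gives a typed view of the same data.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

val spark = SparkSession.builder().appName("alias-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df: DataFrame = Seq(("a", 1), ("b", 2)).toDF("name", "n")

// Same type under two names, so this is a plain assignment.
val rows: Dataset[Row] = df

// A typed view; for tuple types the columns are matched by position.
val typed: Dataset[(String, Int)] = df.as[(String, Int)]
```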

3.Dataset and DataFrame API registerTempTable has been deprecated and replaced by createOrReplaceTempView

val computerTable = dataComputerInfo(sqlContext, sparkModel, countDay)

computerTable.registerTempTable("table_computer_info")

becomes

val computerTable = dataComputerInfo(sqlContext, sparkModel, countDay)

computerTable.createOrReplaceTempView("table_computer_info")

4.Dataset and DataFrame API unionAll has been deprecated and replaced by union
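A minimal sketch of item 4 with made-up data: `union` keeps duplicate rows, as `unionAll` did, and matches columns by position, so `distinct()` is still needed for SQL UNION semantics.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("union-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical inputs sharing one column.
val a = Seq(1, 2).toDF("id")
val b = Seq(2, 3).toDF("id")

// Spark 1.6: a.unionAll(b)
val all = a.union(b)                   // duplicates are kept
val deduped = a.union(b).distinct()    // duplicates removed explicitly
```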

5.Dataset and DataFrame API explode has been deprecated; alternatively, use functions.explode() with select or flatMap

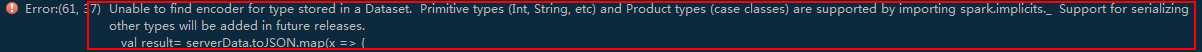
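The two replacements named in item 5 might look like the sketch below. The `id` and `words` columns are assumptions made for illustration and are not taken from the screenshot.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, explode, split}

val spark = SparkSession.builder().appName("explode-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical input: one space-separated string per row.
val lines = Seq(("a", "x y"), ("b", "z")).toDF("id", "words")

// Variant 1: functions.explode() inside select, one output row per array element.
val viaSelect = lines.select(col("id"), explode(split(col("words"), " ")).as("word"))

// Variant 2: a typed flatMap that emits (id, word) pairs.
val viaFlatMap = lines.as[(String, String)]
  .flatMap(row => row._2.split(" ").map(w => (row._1, w)).toSeq)
```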

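6.As item 2 notes, DataFrame is now an alias for Dataset[Row], so calling map on it returns a Dataset (and requires an Encoder) instead of an RDD as in 1.6. The downstream hiveutil and hbaseutils helpers still expect an RDD.

Change the code along the following lines, depending on your situation: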

val result=serverData.rdd.map(x => {

-------------------------------------------------------------------------------------------------------------------------------

val resultRdd = result.toJSON.rdd.map(x => {

// No pre-defined encoders for Dataset[Map[K,V]], define explicitly

implicit val mapEncoder = org.apache.spark.sql.Encoders.kryo[Map[String, Any]]

// Primitive types and case classes can be also defined as

// implicit val stringIntMapEncoder: Encoder[Map[String, Any]] = ExpressionEncoder()
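Put together, the options above could be sketched as follows. `serverData` and its columns are stand-ins invented for this example; the original closure bodies are not shown in the post, so `getValuesMap` is only one plausible body.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{Encoder, Encoders, SparkSession}

val spark = SparkSession.builder().appName("rdd-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical stand-in for the DataFrame used in the post.
val serverData = Seq(("host1", 3), ("host2", 5)).toDF("host", "cnt")

// Option 1: drop to the RDD first. map then needs no Encoder and returns an RDD, as in 1.6.
val viaRdd: RDD[Map[String, Any]] =
  serverData.rdd.map(row => row.getValuesMap[Any](Seq("host", "cnt")))

// Option 2: serialize each row to a JSON string, then take the RDD.
val jsonRdd: RDD[String] = serverData.toJSON.rdd

// Option 3: stay in the Dataset API; Map[String, Any] needs an explicit (kryo) encoder.
implicit val mapEncoder: Encoder[Map[String, Any]] = Encoders.kryo[Map[String, Any]]
val viaDataset = serverData.map(row => row.getValuesMap[Any](Seq("host", "cnt")))
```

Options 1 and 2 hand an RDD straight to helpers that expect one; option 3 only makes sense if the result stays a Dataset for further processing.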

7. Read the job's startup parameters

val htable = sparkModel.getUserParamsVal("htable", "t_table")


Original article: https://www.cnblogs.com/shaozhiqi/p/12157873.html